# Biomni-R0: Advancing Biomedical AI with Multi-Turn Reinforcement Learning for Expert-Level Reasoning

## How is AI transforming biomedical research today?

AI is rapidly becoming a cornerstone of biomedical research, enabling agents to tackle complex tasks across genomics, clinical diagnostics, and molecular biology. These tools go beyond simple fact-retrieval, aiming to reason through biological problems, interpret patient data, and extract insights from vast biomedical databases.

### Summary

This section explores the expanding role of AI in biomedical research, highlighting the shift from basic data processing to advanced reasoning and tool interaction, and why domain-specific capabilities are critical for supporting modern research workflows.

The field of biomedical artificial intelligence is evolving at an unprecedented pace, driven by the growing demand for agents that can handle the multifaceted nature of biomedical tasks. Unlike general-purpose AI models, which excel at broad tasks but lack specialization, biomedical AI agents must interface with domain-specific tools, understand intricate biological hierarchies, and simulate the workflows of human researchers.

For example, in genomics, these agents are expected to analyze gene expression data, identify potential biomarkers, and even predict how genetic variations might influence disease progression. In clinical diagnostics, they need to interpret medical images, integrate patient history, and assist in differential diagnosis—tasks that require not just data access but the ability to connect disparate pieces of information in a clinically meaningful way.

Consider a scenario where a research team is studying a rare genetic disorder. A biomedical AI agent might be tasked with sifting through thousands of research papers, genomic datasets, and patient records to identify potential causal genes. This goes beyond keyword matching; it requires understanding the biological pathways involved, recognizing patterns in how similar disorders present, and prioritizing genes that fit the clinical picture—all while adapting to new information as the research progresses.

Author’s reflection: What strikes me most about this evolution is the shift from AI as a “helper” for routine tasks to AI as a “collaborator” in complex reasoning. Biomedical research is inherently interdisciplinary, and the most valuable AI tools are those that can mirror the way human experts connect knowledge across genetics, biochemistry, and clinical practice.

## What is the core challenge in developing effective biomedical AI?

The primary challenge is equipping AI agents with expert-level reasoning capabilities, particularly in complex areas like multi-step problem-solving, rare disease diagnosis, and gene prioritization.

### Summary

This section examines why achieving expert-level performance in biomedical tasks is so difficult, focusing on the limitations of current models in handling nuanced, context-dependent reasoning that human experts perform intuitively.

While AI has made strides in biomedical applications, achieving performance on par with domain experts remains elusive. Most large language models (LLMs) can handle surface-level tasks like retrieving facts from research papers or recognizing patterns in structured data, but they falter when faced with the depth of reasoning required for complex biomedical problems.

Take rare disease diagnosis, for example. A human geneticist might consider a patient’s symptoms, family history, genetic sequencing data, and even subtle phenotypic details to narrow down potential diagnoses—often connecting seemingly unrelated clues. Current AI models, however, often struggle with this multi-step reasoning process. They may miss critical connections between symptoms and genetic markers, or fail to account for rare exceptions to typical disease presentations.

Similarly, in gene prioritization—identifying which genes are most likely involved in a specific biological process or disease—expertise involves understanding not just individual gene functions but how genes interact within pathways, how mutations affect protein structure, and how these changes scale up to impact cellular function. General AI models often lack the depth of domain knowledge to weigh these factors appropriately, leading to less accurate prioritization.

The gap is clear: biomedical AI needs to move beyond pattern recognition and retrieval to truly “think” like an expert—adapting to new information, questioning assumptions, and building logical chains of reasoning that hold up to scientific scrutiny.

## Why do traditional AI approaches fail to meet biomedical research needs?

Traditional approaches, such as supervised learning on curated datasets or retrieval-augmented generation, lack adaptability and robustness, struggling with dynamic environments, tool execution, and complex reasoning chains.

### Summary

This section outlines the limitations of conventional AI methods in biomedical research, explaining why static prompts, pre-defined behaviors, and over-reliance on pre-trained data hinder their ability to handle the field’s complexities.

Many existing biomedical AI solutions rely on supervised learning, where models are trained on labeled datasets of biomedical information. While this works for specific, well-defined tasks—like classifying images of cancerous cells—it falls short when tasks require flexibility. Supervised learning locks models into patterns present in their training data, making them rigid when faced with novel scenarios, such as a rare genetic variant not seen in the training set.

Retrieval-augmented generation (RAG) is another common approach, where models pull information from external databases or literature to ground their responses. While this improves factual accuracy, it introduces new challenges. RAG systems often depend on static prompts to guide information retrieval, which can’t adapt when the research question evolves. For example, if a researcher shifts from investigating a gene’s role in diabetes to its involvement in cardiovascular disease, a RAG model with a fixed prompt might fail to retrieve relevant cardiovascular literature, leading to incomplete or misleading insights.

Perhaps most critically, traditional models struggle with executing external tools—a key capability for biomedical research. Tasks like querying genomic databases, running statistical analyses on patient data, or simulating molecular interactions require seamless tool use. Many current AI agents fumble here, either misinterpreting tool outputs, failing to adjust parameters when initial results are uninformative, or abandoning reasoning chains entirely when tools return unexpected data.

Author’s reflection: The fragility of these traditional approaches in dynamic environments is particularly problematic in biomedicine, where research questions are rarely static. A tool that works for analyzing gene expression in liver cells might need significant adjustments for kidney cells, and an AI that can’t adapt to these shifts becomes more of a liability than an asset.

## What is Biomni-R0, and how does it address these limitations?

Biomni-R0 is a new family of agentic large language models (LLMs) developed by researchers from Stanford University and UC Berkeley, designed using reinforcement learning (RL) to achieve expert-level reasoning in biomedical tasks.

### Summary

This section introduces Biomni-R0, explaining its origins, core design philosophy, and how it leverages reinforcement learning to overcome the limitations of traditional biomedical AI models.

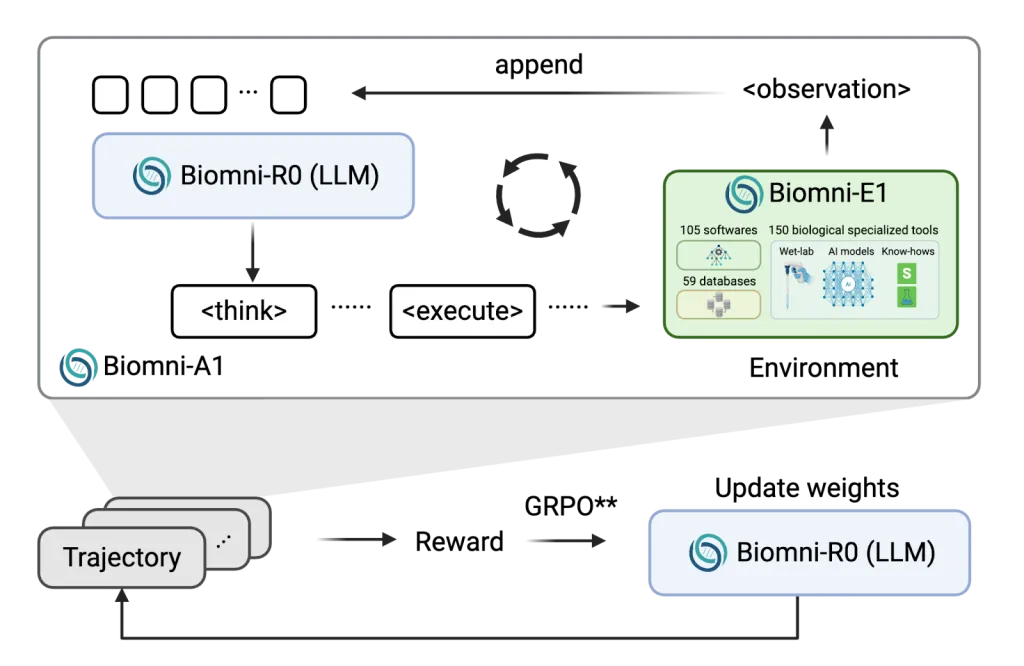

Biomni-R0 represents a paradigm shift in biomedical AI, moving away from static training methods toward a more dynamic, adaptive approach. The model family includes two variants: Biomni-R0-8B (8 billion parameters) and Biomni-R0-32B (32 billion parameters), both built on a foundation of reinforcement learning tailored specifically for biomedical reasoning.

The development of Biomni-R0 was a collaboration between Stanford’s Biomni agent and environment platform—a system designed to simulate biomedical research workflows—and UC Berkeley’s SkyRL reinforcement learning infrastructure, which provides the computational framework for training adaptive agents. This combination allowed researchers to create an AI that doesn’t just mimic biomedical reasoning but actively learns to improve it through interaction with a simulated research environment.

Unlike traditional models that are trained on fixed datasets, Biomni-R0 learns through trial and error, similar to how human experts refine their skills over time. For example, when tasked with diagnosing a rare disease, the model might initially propose a set of potential genes, then use feedback on those suggestions to adjust its reasoning—incorporating new data from tools or literature to narrow down the possibilities. This iterative learning process mirrors the way researchers refine hypotheses in real labs.

Biomni-R0’s focus on “agentic” behavior is key. Rather than passively generating responses, it acts as an active agent: planning research steps, executing tools, evaluating results, and revising its approach based on what it learns. This agency is critical for handling the open-ended, multi-step tasks that define biomedical research.
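
The plan, execute, evaluate, revise cycle described above can be illustrated with a minimal sketch. Everything here is hypothetical and for illustration only: `AgentState`, `toy_policy`, `run_agent`, and the `lookup_gene` tool with its tiny lookup table are stand-ins, not part of Biomni-R0 or its environment. A real agent would replace `toy_policy` with calls to the language model.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional


@dataclass
class Step:
    tool: str
    argument: str
    observation: str


@dataclass
class AgentState:
    question: str
    history: List[Step] = field(default_factory=list)
    answer: Optional[str] = None


def lookup_gene(phenotype: str) -> str:
    """Hypothetical toy tool: maps a phenotype term to a candidate gene."""
    table = {"long QT": "KCNQ1"}  # illustrative entry only
    return table.get(phenotype, "")


TOOLS: Dict[str, Callable[[str], str]] = {"lookup_gene": lookup_gene}


def toy_policy(state: AgentState) -> Dict[str, str]:
    """Stand-in for the model: picks the next action from the history."""
    # Evaluate: if the last tool call was informative, answer with it.
    if state.history and state.history[-1].observation:
        return {"action": "answer", "value": state.history[-1].observation}
    # Revise: try the next phenotype term that has not been queried yet.
    tried = {step.argument for step in state.history}
    for term in (t.strip() for t in state.question.split(";")):
        if term not in tried:
            return {"action": "tool", "tool": "lookup_gene", "value": term}
    return {"action": "answer", "value": "unknown"}


def run_agent(question: str, policy=toy_policy, max_turns: int = 5) -> AgentState:
    """Multi-turn loop: ask the policy, run the chosen tool, record the result."""
    state = AgentState(question)
    for _ in range(max_turns):
        decision = policy(state)
        if decision["action"] == "answer":
            state.answer = decision["value"]
            break
        observation = TOOLS[decision["tool"]](decision["value"])
        state.history.append(Step(decision["tool"], decision["value"], observation))
    return state
```

Calling `run_agent("fatigue; long QT")` first queries an uninformative term, then revises and queries the second term before answering, which is the behavior the loop is meant to show.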

## How was Biomni-R0 trained to achieve expert-level performance?

Biomni-R0 used a two-phase training process—supervised fine-tuning (SFT) followed by reinforcement learning (RL)—with a focus on structured reasoning, external tool use, and efficient resource management.

### Summary

This section details Biomni-R0’s training strategy, including the two-phase pipeline, reward mechanisms, and technical innovations that enabled efficient scaling and improved reasoning capabilities.

The training of Biomni-R0 was designed to build both foundational biomedical knowledge and adaptive reasoning skills through a structured, two-phase process:

- Supervised Fine-Tuning (SFT): The first phase bootstrapped the model’s ability to follow structured reasoning formats using high-quality trajectories sampled from Claude 4 Sonnet, a powerful general LLM, via rejection sampling. Rejection sampling involves generating multiple responses to a task and selecting the highest-quality one, ensuring that Biomni-R0 learned from exemplars of clear, logical reasoning. This phase focused on teaching the model to structure its thinking, for example by breaking down a diagnosis task into steps like “review symptoms,” “query genomic database,” “cross-reference with literature,” and “rank potential causes.”
- Reinforcement Learning (RL): In the second phase, the model was fine-tuned using reinforcement learning, with two key reward signals:
  - Correctness: Rewards for accurate outcomes, such as selecting the right gene in a prioritization task or making the correct diagnosis.
  - Response Formatting: Rewards for using structured tags to organize reasoning, ensuring clarity and consistency in how the model presents its thought process.
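
As a rough illustration of the two reward signals and of best-of-N rejection sampling, here is a minimal sketch. The tag names `<think>` and `<answer>`, the `format_weight` value, and all function names are assumptions made for this example; the article does not specify the actual tags, weights, or scoring code used for Biomni-R0.

```python
import re
from typing import List, Optional

# Assumed tag names for illustration; the real tags are not given in the text.
THINK = re.compile(r"<think>.*?</think>", re.DOTALL)
ANSWER = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def format_reward(response: str) -> float:
    """1.0 if the response has both a reasoning block and an answer block."""
    return 1.0 if THINK.search(response) and ANSWER.search(response) else 0.0


def correctness_reward(response: str, gold: str) -> float:
    """1.0 if the tagged answer matches the reference, ignoring case."""
    match = ANSWER.search(response)
    if match and match.group(1).strip().lower() == gold.strip().lower():
        return 1.0
    return 0.0


def total_reward(response: str, gold: str, format_weight: float = 0.1) -> float:
    """Correctness plus a smaller formatting bonus (the weight is an assumption)."""
    return correctness_reward(response, gold) + format_weight * format_reward(response)


def best_of_n(candidates: List[str], gold: str) -> Optional[str]:
    """Rejection sampling: keep the top-scoring candidate, or None if it is wrong."""
    if not candidates:
        return None
    best = max(candidates, key=lambda c: total_reward(c, gold))
    return best if correctness_reward(best, gold) == 1.0 else None
```

In this sketch the same scoring function serves both phases: it filters teacher trajectories for SFT and could act as the scalar reward during RL.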

To handle the computational demands of training a large biomedical agent—particularly when using external tools with varying execution times—the team developed asynchronous rollout scheduling. This technique minimized bottlenecks caused by tool delays, ensuring that GPU resources were used efficiently even when some tools took longer to return results than others.
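
The idea behind asynchronous rollouts can be sketched with Python's `asyncio`: several trajectories run concurrently, so a slow tool call in one does not block the others. This is only an illustration of the scheduling principle, not the SkyRL implementation; `fake_tool`, `rollout`, `run_batch`, and `timed_batch` are hypothetical names, and tool latency is simulated with `asyncio.sleep`.

```python
import asyncio
import time
from typing import List, Tuple


async def fake_tool(latency: float) -> str:
    """Simulated external tool whose execution time varies per call."""
    await asyncio.sleep(latency)
    return f"result after {latency:.2f}s"


async def rollout(task_id: int, latencies: List[float]) -> Tuple[int, List[str]]:
    """One trajectory: tool calls run in sequence within the trajectory."""
    observations = []
    for latency in latencies:
        observations.append(await fake_tool(latency))
    return task_id, observations


async def run_batch(batch: List[List[float]]):
    """Run all trajectories concurrently; results keep the input order."""
    return await asyncio.gather(*(rollout(i, lats) for i, lats in enumerate(batch)))


def timed_batch(batch: List[List[float]]):
    """Return the rollout results and the wall-clock time for the whole batch."""
    start = time.perf_counter()
    results = asyncio.run(run_batch(batch))
    return results, time.perf_counter() - start
```

With a batch such as `[[0.05, 0.05], [0.1], [0.05]]`, the total wall-clock time is close to the slowest single trajectory rather than the sum of all latencies.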

Another critical innovation was expanding the model’s context length to 64k tokens. This allowed Biomni-R0 to manage long, multi-step reasoning conversations—essential for tasks like tracking a research project over weeks, where the model needs to reference previous analyses, tool outputs, and hypotheses to build coherent conclusions.

Image source: Marktechpost

Author’s reflection: The decision to combine SFT with RL is particularly insightful. SFT provides a strong foundation of “good habits” in reasoning, while RL allows the model to adapt and improve in ways that rigid training data can’t predict—much like how medical students first learn from textbooks (SFT) then refine their skills through residency (RL).

## How does Biomni-R0 perform compared to existing state-of-the-art models?

Biomni-R0 outperforms leading general-purpose models like Claude 4 Sonnet and GPT-5 on most biomedical tasks, with significant improvements in rare disease diagnosis, gene prioritization, and multi-step reasoning.

### Summary

This section presents key performance results, highlighting how Biomni-R0’s specialized training translates to superior outcomes in critical biomedical tasks, with specific metrics comparing it to other leading models.

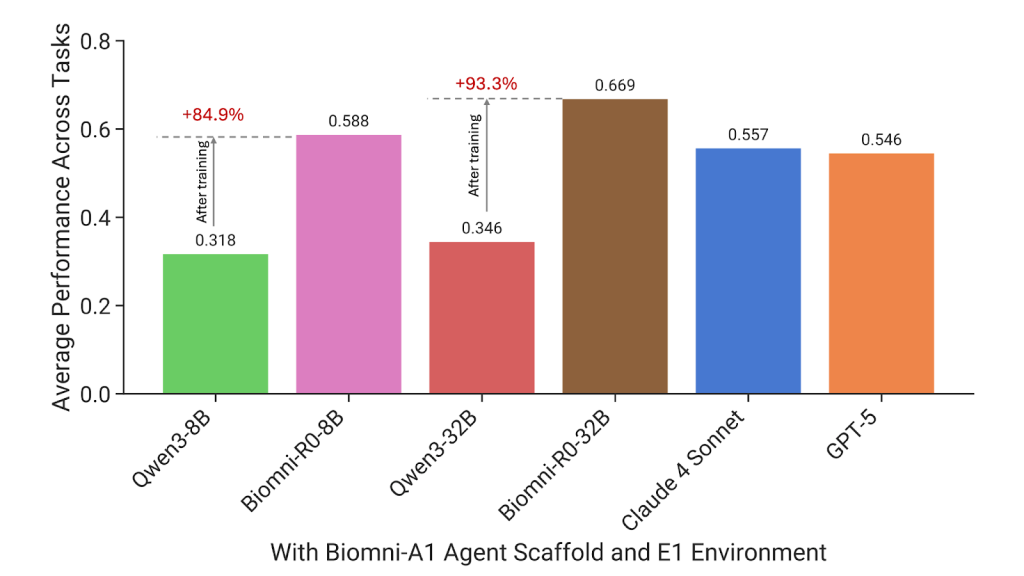

The performance gains of Biomni-R0 over traditional models are striking. Biomni-R0-32B achieved an overall score of 0.669, nearly double the base model’s score of 0.346 (about 1.9×). Even the smaller Biomni-R0-8B, with a score of 0.588, outperformed the much larger general-purpose models Claude 4 Sonnet and GPT-5.

On a task-by-task basis, Biomni-R0-32B led in 7 out of 10 biomedical tasks, with GPT-5 leading in 2 and Claude 4 Sonnet in just 1. Some of the most notable improvements occurred in areas that demand deep domain expertise:

- Rare Disease Diagnosis: Biomni-R0-32B scored 0.67, compared to Qwen-32B’s score of 0.03, a more than 20× improvement. This is critical because rare diseases often lack large datasets, making them challenging for models reliant on pattern recognition. Biomni-R0’s ability to reason through limited data, connect symptoms to genetic markers, and weigh rare possibilities mirrors the expertise of human geneticists.
- GWAS Variant Prioritization: In genome-wide association study (GWAS) variant prioritization, which identifies the genetic variants most likely linked to a disease, Biomni-R0-32B’s score jumped from 0.16 (base model) to 0.74. This improvement directly impacts researchers’ ability to focus on the most promising genetic targets, accelerating drug discovery and disease understanding.
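
The improvement factors implied by the scores quoted in this section can be checked with a few lines of arithmetic. The helper name `improvement` and the dictionary layout are just for this example; the scores themselves are the ones stated in the text.

```python
def improvement(before: float, after: float) -> float:
    """Ratio of the trained model's score to the baseline score."""
    return after / before


# (baseline score, Biomni-R0-32B score), as quoted in the article.
SCORES = {
    "overall": (0.346, 0.669),
    "rare_disease_diagnosis": (0.03, 0.67),
    "gwas_variant_prioritization": (0.16, 0.74),
}

# Improvement factor per task, rounded to one decimal place.
RATIOS = {task: round(improvement(b, a), 1) for task, (b, a) in SCORES.items()}
```

Here `RATIOS` works out to roughly 1.9× overall, 22.3× for rare disease diagnosis, and 4.6× for GWAS variant prioritization.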

These results demonstrate that specialized training in biomedical reasoning—rather than sheer model size—drives performance in domain-specific tasks. General-purpose models, while powerful, lack the tailored reinforcement signals and domain-specific environment training that make Biomni-R0 effective.

Image source: Marktechpost

## How is Biomni-R0 designed for scalability and precision in real-world research?

Biomni-R0’s design decouples environment execution from model inference, enabling efficient scaling, handling of varying tool latencies, and support for longer, more coherent reasoning sequences.

### Summary

This section explains the technical innovations that make Biomni-R0 scalable and precise, focusing on resource management, handling of external tools, and the link between structured reasoning length and performance.

Training large biomedical AI agents requires managing resource-heavy processes, including executing external tools (e.g., genomic databases, statistical software), querying databases, and evaluating code. To address this, the Biomni-R0 team decoupled environment execution (e.g., running a tool or query) from model inference (e.g., generating reasoning steps). This separation allows the system to scale more flexibly: model inference can continue on one set of GPUs while tools execute on another, reducing idle time and maximizing resource use.

This design is particularly valuable when working with tools that have varying execution latencies. For example, a simple query to a gene database might return results in seconds, while a molecular dynamics simulation could take hours. By decoupling these processes, Biomni-R0 avoids bottlenecks—ensuring that the model isn’t left waiting for slow tools or, conversely, that fast tools aren’t underutilized while the model processes other tasks.
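
One way to picture this decoupling is a separate worker pool for tool execution, with the inference side consuming results as they complete. The sketch below uses Python's `concurrent.futures` purely as an illustration; `run_tool`, `generate_step`, and `decoupled_step` are hypothetical names, tool latency is simulated with `time.sleep`, and the real system distributes this work across separate hardware rather than threads.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Dict, List, Tuple


def run_tool(name: str, latency: float) -> str:
    """Environment side: simulated tool call with a given execution time."""
    time.sleep(latency)
    return f"{name}:done"


def generate_step(trajectory_id: int, observation: str) -> str:
    """Inference side: stand-in for the model reacting to a tool result."""
    return f"traj{trajectory_id} saw {observation}"


def decoupled_step(
    tool_calls: Dict[int, Tuple[str, float]], max_env_workers: int = 4
) -> Tuple[Dict[int, str], List[int]]:
    """Run tools in an environment pool; continue each trajectory as results arrive."""
    outputs: Dict[int, str] = {}
    completion_order: List[int] = []
    with ThreadPoolExecutor(max_workers=max_env_workers) as env_pool:
        futures = {
            env_pool.submit(run_tool, name, latency): tid
            for tid, (name, latency) in tool_calls.items()
        }
        # Fast tools are handled first instead of waiting for slow ones.
        for future in as_completed(futures):
            tid = futures[future]
            completion_order.append(tid)
            outputs[tid] = generate_step(tid, future.result())
    return outputs, completion_order
```

Given one slow call and one fast call, the fast trajectory is continued first, which is the bottleneck-avoiding behavior the paragraph above describes.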

Another key design choice was prioritizing longer, structured reasoning sequences. RL training encouraged Biomni-R0 to produce lengthier responses with clear, step-by-step reasoning—and this correlated strongly with better performance. In biomedical research, where conclusions depend on linking multiple pieces of evidence (e.g., gene function, patient data, pathway analysis), a longer, structured reasoning trace isn’t just more transparent—it’s more accurate. It mirrors the way experts document their thought processes in lab notebooks, ensuring that each step is justified and traceable.

For example, when prioritizing genes for a new therapeutic target, Biomni-R0 might outline: 1) which databases it queried, 2) how it filtered results based on expression in relevant tissues, 3) which pathways the genes are involved in, and 4) how it weighed conflicting evidence from different studies. This structured approach not only improves accuracy but also makes the model’s conclusions more interpretable—critical for gaining trust in scientific settings.
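
The four-part outline in this example maps naturally onto a small record type. The sketch below is only one possible representation: the class name `GeneTrace`, its fields, and the rendered layout are assumptions for illustration, not the format Biomni-R0 actually emits.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GeneTrace:
    """Hypothetical container for the four reasoning steps listed above."""
    databases_queried: List[str]
    tissue_filter: str
    pathways: List[str]
    evidence_notes: List[str]

    def is_complete(self) -> bool:
        """True only when every step of the trace has been filled in."""
        return all(
            [self.databases_queried, self.tissue_filter, self.pathways, self.evidence_notes]
        )

    def render(self) -> str:
        """Format the trace as numbered, human-readable steps."""
        return "\n".join(
            [
                "1) Databases queried: " + ", ".join(self.databases_queried),
                "2) Tissue filter: " + self.tissue_filter,
                "3) Pathways: " + ", ".join(self.pathways),
                "4) Conflicting evidence: " + "; ".join(self.evidence_notes),
            ]
        )
```

A check like `is_complete()` gives reviewers a quick way to spot traces that skipped a step before reading the conclusion.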

## What are the key takeaways from Biomni-R0’s development?

Biomni-R0 demonstrates that reinforcement learning, combined with domain-specific training, can enable AI agents to achieve expert-level reasoning in biomedical research, outperforming larger general models and addressing critical limitations of traditional approaches.

### Summary

This section distills the core insights from Biomni-R0’s development, highlighting its implications for the future of biomedical AI and the key factors that drove its success.

The research behind Biomni-R0 offers several critical lessons for the development of biomedical AI:

- Deep reasoning, not just retrieval, is essential: Biomedical agents must do more than fetch facts. They need to connect ideas across genomics, diagnostics, and molecular biology to solve complex problems.
- Expert-level performance requires focus on complex tasks: The central challenge is mastering areas like rare disease diagnosis and gene prioritization, where multi-step reasoning and domain judgment are key.
- Traditional methods are too rigid: Supervised learning on fixed datasets and retrieval-based models lack the adaptability needed for dynamic research environments, where questions and tools evolve.
- Reinforcement learning enables specialization: Biomni-R0’s use of RL with rewards for correctness and structured output formatting allowed it to adapt to biomedical workflows in ways traditional models can’t.
- A two-phase training pipeline works: Combining SFT (to build foundational skills) with RL (to refine reasoning) proved highly effective for optimizing both performance and reasoning quality.
- Smaller models can outperform larger ones with specialization: Biomni-R0-8B showed strong results despite its smaller size, while Biomni-R0-32B set new benchmarks by outperforming larger general models on most tasks.
- Longer reasoning traces indicate expertise: RL training led to longer, more coherent reasoning sequences, mirroring the way human experts think through complex problems.

These takeaways point to a future where biomedical AI agents aren’t just tools for data processing but active collaborators in research, capable of automating complex workflows with the precision and adaptability of human experts.

## Conclusion

Biomni-R0 represents a significant leap forward in biomedical AI, proving that reinforcement learning tailored to domain-specific reasoning can bridge the gap between general-purpose models and expert-level performance. By focusing on adaptability, structured reasoning, and efficient resource use, it addresses critical limitations of traditional approaches and sets a new standard for what biomedical AI can achieve. As research in this field advances, models like Biomni-R0 have the potential to accelerate discoveries in genomics, diagnostics, and drug development—ultimately improving patient outcomes and expanding our understanding of human biology.

## Action Checklist / Implementation Steps

- Understand the limitations of current tools: Evaluate existing biomedical AI solutions to identify gaps in multi-step reasoning, tool execution, and adaptability.
- Prioritize domain-specific training: When developing or adopting biomedical AI, focus on models trained with reinforcement learning in biomedical environments rather than general-purpose LLMs.
- Leverage structured reasoning: Implement tools that encourage clear, step-by-step reasoning (e.g., using structured tags) to improve accuracy and interpretability.
- Optimize resource management: For large-scale biomedical AI, decouple environment execution from model inference to handle varying tool latencies and reduce idle resources.
- Evaluate on complex tasks: Test models on challenging tasks like rare disease diagnosis and gene prioritization to assess true expert-level capabilities.
- Balance model size and specialization: Consider smaller, domain-specialized models (like Biomni-R0-8B) for applications where efficiency is critical, as they can outperform larger general models.

## One-page Overview

- What is Biomni-R0? A family of agentic LLMs (8B and 32B parameters) designed for biomedical research, using reinforcement learning to achieve expert-level reasoning.
- Key Innovation: Two-phase training (SFT + RL) with rewards for correctness and structured reasoning, plus a 64k token context length for long multi-step tasks.
- Performance: Biomni-R0-32B scored 0.669 (vs. base model’s 0.346), outperforming Claude 4 Sonnet and GPT-5 on 7/10 tasks, with 20× better rare disease diagnosis and 4.6× better GWAS prioritization.
- Design Strengths: Decoupled environment execution for scalability, asynchronous rollout scheduling for efficiency, and emphasis on longer, structured reasoning traces.
- Implications: Shows that domain-specific RL training enables AI to match expert reasoning in biomedicine, accelerating research in genomics, diagnostics, and beyond.

## FAQ

- What makes Biomni-R0 different from other biomedical AI models?

  Biomni-R0 uses reinforcement learning tailored to biomedical reasoning, rather than relying solely on supervised learning or retrieval. This allows it to adapt to dynamic tasks and improve through interaction with tools and environments.

- How does Biomni-R0 handle rare diseases, which have limited data?

  Its RL training focuses on multi-step reasoning, enabling it to connect sparse data points (e.g., symptoms, genetic markers) in ways that pattern-based models can’t, leading to a more than 20× improvement in rare disease diagnosis.

- Is Biomni-R0 larger than other leading models?

  No. The 8B parameter version outperforms larger general models like Claude 4 Sonnet and GPT-5, showing that specialization matters more than size in biomedical tasks.

- What is the two-phase training process?

  First, supervised fine-tuning (SFT) on high-quality reasoning examples from Claude 4 Sonnet. Second, reinforcement learning with rewards for correctness and structured formatting.

- Why is a 64k token context length important?

  It allows Biomni-R0 to manage long, multi-step research conversations, which is critical for tracking hypotheses, tool outputs, and conclusions over extended projects.

- Can Biomni-R0 execute external tools?

  Yes. Its design includes efficient handling of external tools (e.g., genomic databases), with asynchronous scheduling to manage varying execution times.

- How does Biomni-R0 ensure its reasoning is interpretable?

  RL rewards structured formatting with tags, encouraging clear, step-by-step reasoning that mirrors human expert documentation.

- What tasks does Biomni-R0 excel at?

  It leads in 7/10 biomedical tasks, including rare disease diagnosis, GWAS variant prioritization, and multi-step biological reasoning.