「ROMA: The Key to AI’s Long-Horizon Tasks – And We Built It Ourselves」

❝

Complex task decomposition, transparent execution, reliable results – this open-source framework is redefining AI agent development

❞

As a developer who’s spent years immersed in cutting-edge AI technologies, I’ve witnessed the rise and fall of countless “next breakthrough frameworks.” But when Sentient AI released ROMA, I had to admit – this time feels different.

Anyone who has built AI agents knows the love-hate relationship: individual tasks are handled beautifully, but once a problem requires multi-step reasoning, the system starts circling like a ship without navigation. With ROMA’s arrival, we finally have a key to unlock long-horizon tasks.

Why Your AI Agent Keeps Failing at Complex Tasks

Imagine this: you ask an AI agent to analyze climate differences between two cities and generate a report. Each individual step – searching data, extracting information, comparative analysis, report writing – modern LLMs handle reasonably well. But when you chain these steps together, the results often disappoint.

The problem isn’t model capability – it’s 「system architecture」.

Even if each step is 99% reliable, chaining ten steps drops the overall success rate to about 90% (0.99^10 ≈ 0.904), and it keeps falling as the chain grows. Worse, traditional agent frameworks operate like black boxes: when the final result is wrong, you can hardly pinpoint where things went wrong.
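The compounding effect is easy to check yourself. The snippet below is a minimal sketch that assumes every step fails independently with the same probability; `chain_success` is an illustrative helper name, not part of ROMA.

```python
def chain_success(step_reliability: float, steps: int) -> float:
    """Probability that all `steps` succeed, assuming independent failures."""
    return step_reliability ** steps


for steps in (1, 5, 10, 20, 50):
    rate = chain_success(0.99, steps)
    print(f"{steps:>2} steps at 99% each: {rate:.1%} end-to-end")
```

Ten steps land at roughly 90%, and by fifty steps the chain succeeds only about 60% of the time, which is why long-horizon tasks punish even very reliable single steps.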

This is ROMA’s core mission: 「solving error accumulation and debugging difficulties in long-horizon tasks」.

ROMA’s Architectural Revolution: The Wisdom of Recursive Task Trees

ROMA stands for Recursive Open Meta-Agent, and the name describes the design: a 「recursive, open meta-agent framework」 in which every task, at every level, is handled by the same procedure.

The Four-Stage Control Loop: Atomize → Plan → Execute → Aggregate

Each task node in ROMA follows the same simple yet powerful decision logic:

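# is_atomic, plan, execute and aggregate stand for ROMA's Atomizer, Planner, Executor and Aggregator
def solve(task):
    if is_atomic(task):                      # Stage 1 (Atomize): can this be done in one step?
        return execute(task)                 # Stage 3 (Execute): run the atomic task directly
    else:
        subtasks = plan(task)                # Stage 2 (Plan): decompose into subtasks
        results = []
        for subtask in subtasks:
            results.append(solve(subtask))   # Recursive call: each subtask runs the same loop
        return aggregate(results)            # Stage 4 (Aggregate): combine subtask results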

Behind this seemingly simple loop lies deep wisdom for solving complex problems. Let me illustrate with a concrete example:

Suppose you ask ROMA: “Which movies with production budgets over $350 million failed to become box office champions in their release years?”

The 「Atomizer」 first determines this task is too complex for single-step completion.

The 「Planner」 then decomposes it into:

- Find movies with budgets exceeding $350 million
- Get box office champion information for relevant years
- Compare and analyze to generate the final list

「Executors」 handle each subtask – potentially calling search APIs, querying databases, or using specialized tools.

The 「Aggregator」 finally integrates various results into a coherent answer.

The true brilliance lies in how each subtask can undergo the same decomposition process, forming a genuine 「task tree」. This recursive design enables ROMA to handle tasks of arbitrary complexity while maintaining code simplicity.
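To see the recursion produce an actual tree, here is a toy, runnable version of the loop. Everything in it is a stand-in: the `Task` class, the hand-written decomposition (including the two nested subtasks), and the string-based `execute` and `aggregate` are assumptions for illustration, not ROMA's real components, and `aggregate` takes the parent task as an extra argument so the output is readable.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    goal: str
    # Hypothetical: a hand-written decomposition stands in for an LLM planner.
    subtasks: list = field(default_factory=list)


def is_atomic(task):            # Atomizer stand-in: leaves are atomic
    return not task.subtasks


def plan(task):                 # Planner stand-in: return the canned subtasks
    return task.subtasks


def execute(task):              # Executor stand-in: no real tool call is made
    return f"<{task.goal}>"


def aggregate(task, results):   # Aggregator stand-in: join child results
    return f"{task.goal}: " + " | ".join(results)


def solve(task, depth=0, trace=None):
    """Atomize, plan, execute, aggregate; also records each visited node."""
    if trace is not None:
        trace.append((depth, task.goal))
    if is_atomic(task):
        return execute(task)
    results = [solve(sub, depth + 1, trace) for sub in plan(task)]
    return aggregate(task, results)


root = Task("movies over $350M that were not box office champions", [
    Task("find movies with budgets over $350M"),
    Task("get box office champions", [
        Task("collect release years"),
        Task("look up the champion for each year"),
    ]),
    Task("compare and build the final list"),
])

trace = []
answer = solve(root, trace=trace)
for depth, goal in trace:
    print("  " * depth + goal)   # indentation shows the task tree
```

The printed trace is the task tree itself: the second subtask was not atomic, so it went through the same loop one level down.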

Information Flow Design: Glass-Transparent Context Passing

ROMA’s biggest difference from traditional agent frameworks is its 「complete information transparency」. During task decomposition, context flows top-down; during result aggregation, data flows bottom-up. Crucially, dependency relationships are strictly respected – tasks requiring previous results wait patiently, while independent tasks execute in parallel.
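The "wait for dependencies, run the rest in parallel" rule can be sketched with plain `asyncio`. This is not ROMA's scheduler; `run_with_dependencies`, `fake_executor`, and the task names are hypothetical, and the sketch assumes the dependency graph has no cycles.

```python
import asyncio


async def run_with_dependencies(deps, run_one):
    """Run every task in `deps` (name -> list of prerequisite names).

    All tasks are scheduled up front; each one first awaits its
    prerequisites, so independent tasks overlap and dependent ones wait.
    Assumes the graph is acyclic (a cycle would never finish).
    """
    tasks = {}

    async def run(name):
        # Outputs of prerequisites become this task's input context.
        inputs = {dep: await tasks[dep] for dep in deps[name]}
        return await run_one(name, inputs)

    for name in deps:
        tasks[name] = asyncio.create_task(run(name))
    results = await asyncio.gather(*tasks.values())
    return dict(zip(tasks, results))


finished = []  # completion order, for inspection


async def fake_executor(name, inputs):
    await asyncio.sleep(0.01)  # stand-in for an LLM or tool call
    finished.append(name)
    return f"{name}({', '.join(sorted(inputs))})"


deps = {
    "find_big_budget_movies": [],
    "find_box_office_champions": [],
    "compare": ["find_big_budget_movies", "find_box_office_champions"],
}
outputs = asyncio.run(run_with_dependencies(deps, fake_executor))
print(finished)
print(outputs["compare"])
```

The two search tasks sleep concurrently, while `compare` only starts once both of their results are available as its inputs.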

The direct benefit is 「debuggability」. Developers can always know precisely:

- What task is currently executing
- What the task inputs are
- What outputs were generated
- Where exactly problems occurred
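One way to picture this is a per-node record holding exactly those four pieces of information. The `NodeTrace` structure and `find_failures` helper below are a hypothetical sketch of the idea, not ROMA's actual trace format, and the example values are made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class NodeTrace:
    task: str                      # what was executing
    inputs: dict                   # what went in
    output: Any = None             # what came out
    error: Optional[str] = None    # what went wrong, if anything
    children: list = field(default_factory=list)


def find_failures(node, path=()):
    """Return the root-to-node path of every node that recorded an error."""
    here = path + (node.task,)
    found = [here] if node.error else []
    for child in node.children:
        found.extend(find_failures(child, here))
    return found


# Illustrative trace for the climate-report example (values are invented).
root = NodeTrace("climate report", {"cities": ["A", "B"]}, children=[
    NodeTrace("search data", {"query": "climate A vs B"}, output="3 sources"),
    NodeTrace("extract information", {"sources": 3}, error="no temperature table"),
])

for failure in find_failures(root):
    print(" > ".join(failure))
```

With a structure like this, a failed run points at a specific node and its inputs instead of just a bad final answer.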

Real-World Performance: Let Benchmarks Speak

However elegant the architectural design, it must prove itself through actual performance. The Sentient team built ROMA Search using their framework and tested it across multiple authoritative benchmarks.

SEAL-0 Benchmark: The Ultimate Multi-Source Reasoning Challenge

SEAL-0 is specifically designed to test systems handling conflicting, noisy information. In this “nightmare difficulty” test, ROMA Search delivered remarkable results:

ROMA Search reached 「45.6% accuracy」, significantly outperforming Kimi Researcher (36%) and Gemini 2.5 Pro (19.8%), which makes it the 「state-of-the-art system」 on this benchmark among the reported results.

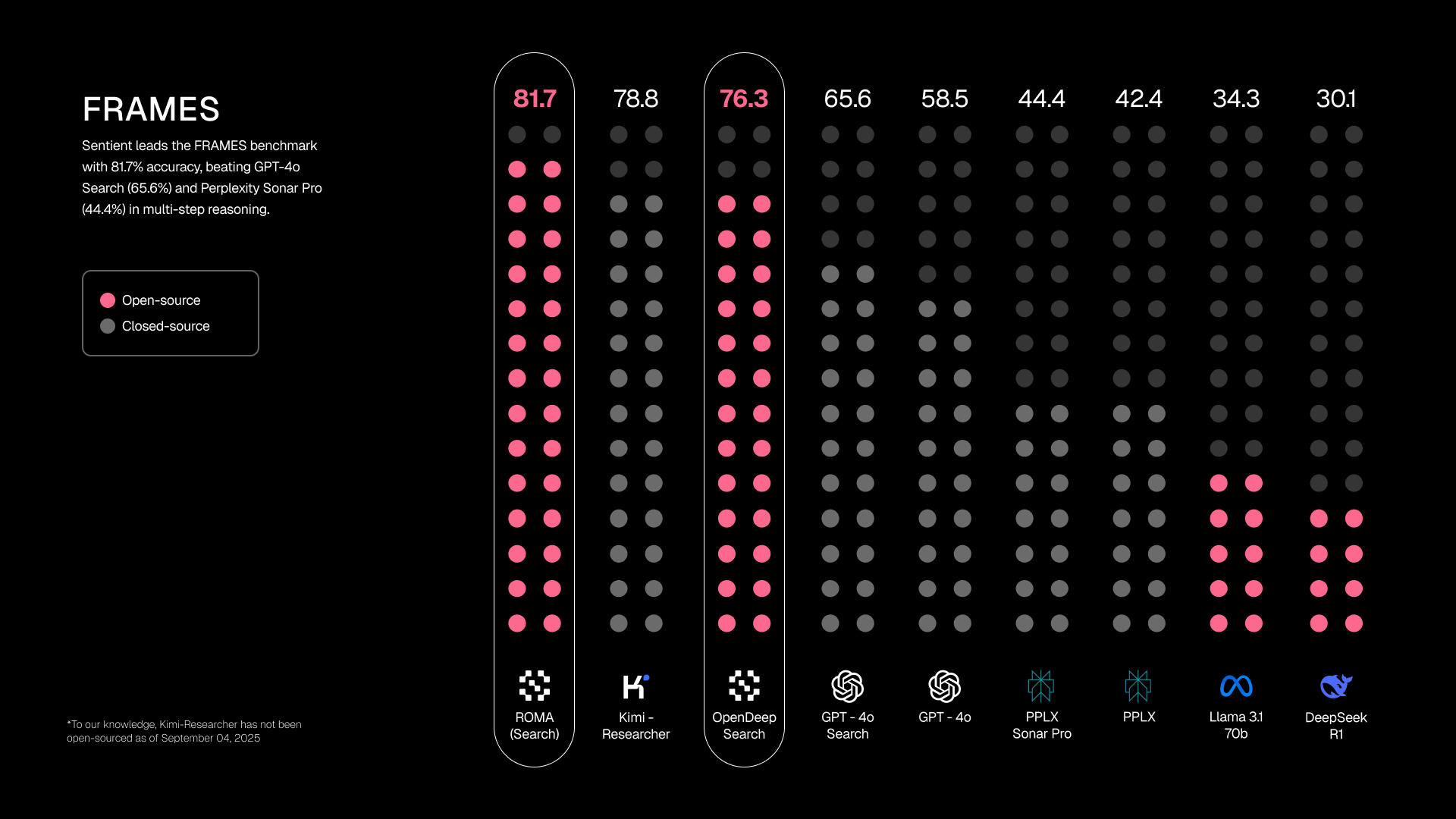

FRAMES and SimpleQA: Comprehensive Leadership

On the FRAMES benchmark testing multi-step reasoning, ROMA Search similarly achieved 「state-of-the-art performance」. For factual retrieval tasks in SimpleQA, it reached 「near state-of-the-art levels」.

These results suggest that ROMA’s architectural advantages translate into tangible performance improvements, particularly for tasks requiring complex reasoning and multi-source verification.

Five-Minute Setup: Building Your First ROMA Agent from Scratch

Enough theory – let’s get hands-on. ROMA’s onboarding experience is impressively smooth – within five minutes, you can have a fully functional agent system.

One-Command Environment Setup

git clone https://github.com/sentient-agi/ROMA.git
cd ROMA
./setup.sh

The setup script offers two installation options:

- 「Docker deployment」 (recommended): Complete isolation environment, avoiding dependency conflicts
- 「Native installation」: Better suited for development and customization

Either way, you’ll have a complete system with frontend interface (localhost:3000) and backend service (localhost:5000) within minutes.
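If you want to confirm that both services actually came up, a quick port check is enough. This is a generic standard-library sketch, not a ROMA utility; `port_open` is an illustrative helper, and it only verifies that something is listening on the two ports mentioned above.

```python
import socket


def port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


for name, port in (("frontend", 3000), ("backend", 5000)):
    status = "up" if port_open("localhost", port) else "not reachable"
    print(f"{name} on localhost:{port}: {status}")
```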

Pre-Built Agents: Powerful Capabilities Out-of-the-Box

ROMA ships with several pre-built agents, so you can try the framework immediately:

The 「General Task Solver」 based on ChatGPT Search Preview handles everything from technical questions to creative projects. This is my personal favorite starting point, letting you immediately experience ROMA’s smooth multi-step reasoning.

The 「Deep Research Agent」 optimizes complex research tasks, automatically breaking research questions into search, analysis, and synthesis phases, processing multiple information sources in parallel, ultimately generating well-structured research reports.

The 「Crypto Analytics Agent」 demonstrates ROMA’s application in specialized domains, integrating real-time market data, on-chain analysis, and DeFi metrics for deep cryptocurrency insights.

Your First Custom Agent

The simplicity of creating custom agents might surprise you:

import asyncio
from sentientresearchagent import SentientAgent

async def main():
    agent = SentientAgent.create()
    return await agent.run("Create a podcast outline about AI safety for me")

result = asyncio.run(main())  # top-level await is not valid in a plain script

Behind these few lines of code, ROMA executes the complete task decomposition, planning, execution, and aggregation workflow. You can observe the entire execution process in real time through the frontend interface, watching how complex tasks get solved step by step.

Advanced Features: Built for Production Environments

ROMA isn’t just a research framework – it considers production environment needs from the ground up.

E2B Sandbox Integration: Secure Execution of Untrusted Code

For tasks requiring code execution, ROMA provides seamless E2B sandbox integration:

./setup.sh --e2b        # Configure E2B template
./setup.sh --test-e2b   # Test integration

This integration brings crucial advantages:

- 🔒 「Security isolation」: Untrusted code runs in sandboxes
- ☁️ 「Data synchronization」: Automatic data sync with S3 environments
- 🚀 「High-performance access」: S3 filesystem mounting via goofys

Enterprise-Grade Data Management

ROMA’s data persistence layer design is equally impressive:

- 「S3 mounting」: Support for enterprise-grade S3 storage
- 「Path injection protection」: Comprehensive security validation
- 「Credential security」: AWS credentials verified before operations
- 「Dynamic Docker orchestration」: Secure volume mounting strategies
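For readers unfamiliar with path injection, the sketch below shows the general kind of check such protection involves: resolve a user-supplied path and refuse anything that lands outside an allowed base directory. It is a generic illustration, not ROMA's validation code; `resolve_inside` and the `/tmp/roma-data` directory are hypothetical.

```python
from pathlib import Path


def resolve_inside(base_dir: str, user_path: str) -> Path:
    """Resolve `user_path` under `base_dir`, rejecting anything that escapes it."""
    base = Path(base_dir).resolve()
    # An absolute user_path replaces base entirely, so it is caught below too.
    candidate = (base / user_path).resolve()
    if candidate != base and base not in candidate.parents:
        raise ValueError(f"path escapes {base}: {user_path!r}")
    return candidate


print(resolve_inside("/tmp/roma-data", "runs/1/out.json"))

for attempt in ("../etc/passwd", "/etc/passwd", "runs/../../secret"):
    try:
        resolve_inside("/tmp/roma-data", attempt)
    except ValueError as exc:
        print("rejected:", exc)
```

Resolving before comparing is the important part: a plain string-prefix check would let `runs/../../secret` through.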

These features enable ROMA to meet enterprise-level security and reliability requirements.

Open Source Ecosystem: Evolving with the Community

Few things in technology are more exciting than a project that solves real problems while building a healthy ecosystem. ROMA is fully open source under the Apache 2.0 license and encourages community participation and contribution.

The project’s modular design means you can:

- 「Easily swap components」: Different LLM providers, tools, or execution environments
- 「Extend new capabilities」: Add custom features based on clear interfaces
- 「Share improvements」: Community-driven framework evolution
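What "swapping components behind a clear interface" means in practice can be sketched with a `typing.Protocol`. The `Executor` protocol and both implementations below are invented for illustration and do not mirror ROMA's actual class names or signatures.

```python
from typing import Protocol


class Executor(Protocol):
    """Hypothetical interface: anything with a matching `run` method fits."""

    def run(self, goal: str) -> str: ...


class EchoExecutor:
    def run(self, goal: str) -> str:
        return f"echo: {goal}"


class ShoutingExecutor:
    def run(self, goal: str) -> str:
        return goal.upper()


def run_leaf(goal: str, executor: Executor) -> str:
    # The caller depends only on the interface, so executors are interchangeable.
    return executor.run(goal)


for executor in (EchoExecutor(), ShoutingExecutor()):
    print(run_leaf("summarize findings", executor))
```

The calling code never changes when the executor does, which is the property that makes a different LLM provider or tool a drop-in replacement.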

This openness ensures ROMA won’t stagnate like some closed systems, but can evolve rapidly alongside the entire AI community.

Frequently Asked Questions

「Q: How does ROMA differ from frameworks like AutoGPT or LangChain?」

A: ROMA’s core distinction lies in its recursive task tree architecture and completely transparent execution flow. While other frameworks support task decomposition, ROMA’s unified four-stage loop and structured I/O provide unparalleled debuggability and control.

「Q: What are ROMA’s computational resource requirements?」

A: Independent subtasks run in parallel, so ROMA makes good use of the time it spends waiting on model and tool calls. For simple tasks, resource consumption is comparable to a single model call; for complex tasks, parallelization can reduce overall response time, although decomposition itself means more calls in total.

「Q: Does it support local models?」

A: Yes. Through its LiteLLM integration, ROMA can connect to any model that exposes a compatible API, including locally deployed Ollama and vLLM instances.

「Q: What application scenarios suit ROMA best?」

A: Particularly ideal for scenarios requiring multi-step reasoning, information integration, and complex decision-making, such as: deep research, financial analysis, content creation, data analysis, and technical assessment.

「Q: How complex is production deployment?」

A: ROMA provides Dockerized deployment and clear configuration guidance, significantly reducing production deployment difficulty. Security features like sandbox execution and credential management are carefully designed for enterprise requirements.

Future Outlook: The New Era of Recursive Agents

ROMA’s emergence marks a turning point in AI agent development. It demonstrates that through proper architectural design, we can indeed build AI systems that reliably handle complex tasks.

But more importantly, ROMA provides the entire community with a foundation for co-evolution. Just as Linux established foundations for operating system development, ROMA could become a similar cornerstone for intelligent agents.

I’m particularly excited to see various innovative applications the community builds on ROMA – from scientific research assistants to creative collaboration partners, from business analysis tools to educational tutoring systems. The possibilities are limited only by our imagination.

Now, the key is in your hands. It’s time to open that door to reliable AI agents and explore the infinite possibilities of long-horizon tasks.

Ready to start your ROMA journey? Visit the GitHub repository for source code, join the Discord community to connect with other developers, or read the technical blog for deep architectural insights. The next breakthrough AI application might just start with your inspiration.