A PM’s Guide to AI Agent Architecture: Why Capability Doesn’t Equal Adoption

Introduction to AI Agent Challenges

What makes some AI agents succeed in user adoption while others fail, even with high accuracy? The key lies in architectural decisions that build trust and shape user experiences, rather than just focusing on making agents smarter.

In this guide, we’ll explore the layers of AI agent architecture using a customer support agent example. We’ll see how product decisions at each layer influence whether users perceive the agent as magical or frustrating. By understanding these choices, product managers can design agents that encourage ongoing use instead of abandonment after initial trials.

Image source: Substack

Building a Customer Support Agent: The Core Scenario

How should an AI agent handle a complex user request like “I can’t access my account and my subscription seems wrong”? The response depends on architectural choices that either resolve issues seamlessly or lead to user frustration and escalation.

Consider a scenario where a user faces both a locked account and a billing dispute. In one approach, the agent checks systems immediately, identifies a missed password reset email and a plan downgrade due to billing, then explains and offers a one-click fix. This creates a magical experience by anticipating and resolving interconnected problems.

In contrast, another approach has the agent ask clarifying questions like “When did you last log in?” or “What error do you see?” before escalating to a human. While safe, this can feel inefficient, leading users to abandon the agent after the first complex issue.

Both experiences run on the same underlying systems; what differs is the product decisions behind them. For instance, in a real product launch, metrics showed 89% accuracy and positive feedback, yet users dropped off when facing multifaceted problems, which shows that capability alone doesn’t drive adoption.

Personal Reflection: From conversations with PMs who’ve shipped agents, I’ve learned that overemphasizing accuracy often overlooks the human element of trust. It’s a lesson in balancing tech prowess with user psychology—agents that feel like helpful colleagues retain users better than flawless but opaque robots.

The Four Layers of AI Agent Architecture

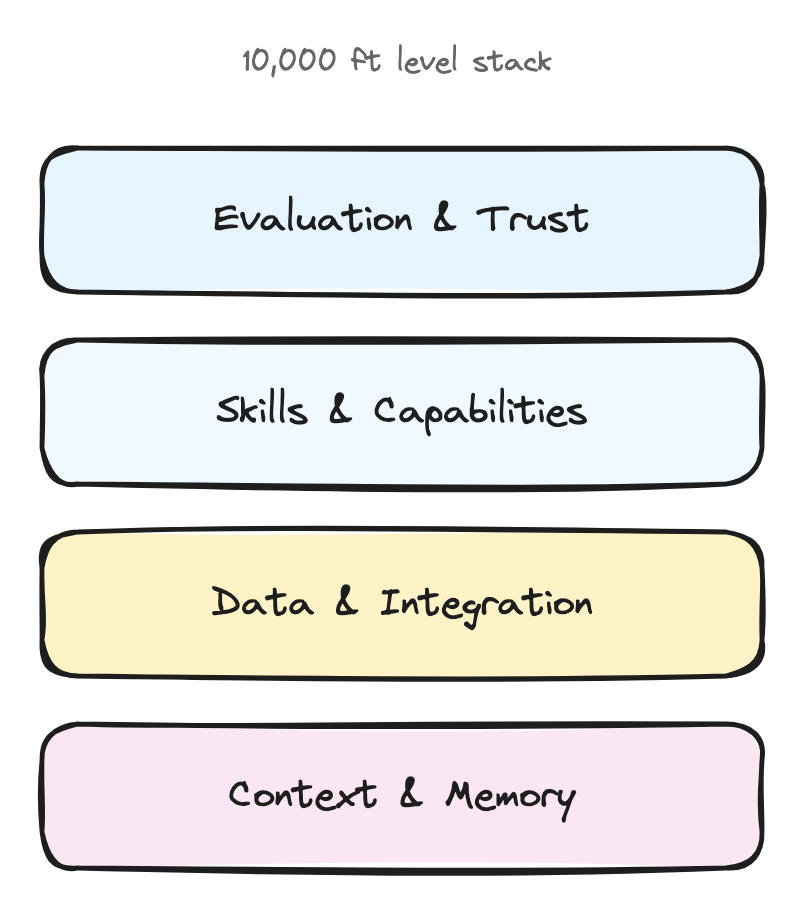

What are the key layers in AI agent architecture, and how do decisions in each layer impact user trust and adoption? These layers—context & memory, data & integration, skills & capabilities, and evaluation & trust—form a stack where each choice shapes the overall experience.

Image source: Substack

Layer 1: Context & Memory

How much information should an AI agent remember, and for how long, to create a sense of understanding? The decision on memory types determines if the agent feels robotic or like a knowledgeable partner.

In the customer support agent example, session memory allows referencing the current conversation, such as “You mentioned billing issues earlier.” Customer memory draws from past interactions, noting “Last month you had a similar issue.” Behavioral memory observes patterns like “I notice you typically use our mobile app,” while contextual memory includes current account states and recent activities.

Implementing more memory layers enables anticipation of needs. For a user with recurring login troubles, the agent could proactively suggest fixes based on history, reducing frustration. However, this increases complexity and costs, so start with essential memory and expand.

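Here’s a table comparing memory types:

| Memory type | What it draws on | Example from the support agent |
| --- | --- | --- |
| Session | The current conversation | “You mentioned billing issues earlier.” |
| Customer | Past interactions | “Last month you had a similar issue.” |
| Behavioral | Observed usage patterns | “I notice you typically use our mobile app.” |
| Contextual | Current account state and recent activities | Responses reflect the account as it is right now |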

In practice, for a user saying “My plan changed unexpectedly,” an agent with strong customer memory might link it to a prior downgrade request, explaining and resolving without repetition.
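As a rough illustration of how the four memory types might sit side by side, here is a minimal sketch. The `AgentMemory` class, its field names, and the sample notes are all hypothetical; a production agent would more likely back each layer with its own store and retention policy.

```python
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical container for the four memory types discussed in this layer."""
    session: list[str] = field(default_factory=list)          # turns in the current conversation
    customer: list[str] = field(default_factory=list)         # notes from past interactions
    behavioral: dict[str, str] = field(default_factory=dict)  # observed usage patterns
    contextual: dict[str, str] = field(default_factory=dict)  # current account state

    def recall(self, topic: str) -> list[str]:
        """Return every remembered note that mentions the topic, labeled by layer."""
        topic = topic.lower()
        hits = [f"session: {t}" for t in self.session if topic in t.lower()]
        hits += [f"customer: {t}" for t in self.customer if topic in t.lower()]
        for label, store in (("behavioral", self.behavioral), ("contextual", self.contextual)):
            hits += [f"{label}: {k}={v}" for k, v in store.items()
                     if topic in k.lower() or topic in v.lower()]
        return hits


# Invented sample data mirroring the "My plan changed unexpectedly" scenario.
memory = AgentMemory(
    session=["My plan changed unexpectedly"],
    customer=["Requested a plan downgrade last month", "Had a login issue in March"],
    behavioral={"preferred_channel": "mobile app"},
    contextual={"plan": "basic"},
)
notes = memory.recall("plan")
for note in notes:
    print(note)
```

With only session memory, the agent would see the complaint but not the earlier downgrade request; the customer layer is what lets it connect the two.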

Personal Reflection: Reflecting on agent builds, I’ve seen that skimping on memory leads to repetitive interactions, eroding trust. The unique insight is that memory isn’t just data—it’s the foundation for empathy in AI, making users feel seen and valued.

Layer 2: Data & Integration

Which systems should an AI agent connect to, and at what depth, to become indispensable? Deeper integrations make the agent a platform, but they also introduce failure risks like API limits or downtime.

For the support agent, starting with core integrations like Stripe for billing is key. Adding Salesforce CRM, Zendesk ticketing, user databases, and audit logs enhances utility. Each connection allows handling more scenarios, such as cross-checking billing with login logs for a locked account.

Successful agents begin with 2-3 integrations based on common user requests, expanding iteratively. In a billing dispute scenario, integrating Stripe and Zendesk lets the agent pull payment history and create a ticket seamlessly, turning a potential abandonment into a resolved case.

Avoid integrating everything at once to prevent overwhelm. For example, if a user reports a subscription error, the agent queries the billing system directly, confirms the issue, and offers options without manual escalation.
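The failure risk that comes with each integration can be made concrete with a small sketch. Everything here is an assumption for illustration: `IntegrationError`, `fetch_billing`, `fetch_tickets`, and `gather_context` are hypothetical names, and the connectors are stand-ins rather than real Stripe or Zendesk calls. The idea is simply that one unavailable system should not take down the whole response.

```python
from typing import Callable


class IntegrationError(Exception):
    """Raised by a connector when its upstream system is unavailable."""


def fetch_billing() -> dict:
    # Stand-in for a billing lookup; a real connector would call the billing API.
    return {"plan": "basic", "last_payment": "failed"}


def fetch_tickets() -> dict:
    # Simulates downtime in the ticketing system.
    raise IntegrationError("ticketing system unavailable")


def gather_context(connectors: dict[str, Callable[[], dict]]) -> tuple[dict, list[str]]:
    """Query each integration; return the data collected and the names that failed."""
    data: dict[str, dict] = {}
    unavailable: list[str] = []
    for name, fetch in connectors.items():
        try:
            data[name] = fetch()
        except IntegrationError:
            unavailable.append(name)
    return data, unavailable


data, unavailable = gather_context({"billing": fetch_billing, "tickets": fetch_tickets})
print(data)
print(unavailable)
```

An agent built this way can still explain the billing problem and tell the user that ticket history is temporarily unavailable, rather than failing the whole request.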

Image source: Substack

Personal Reflection: One lesson from PM discussions is that over-integration early on creates brittle systems. The insight: Treat integrations as user-driven evolutions—start small to build a robust core that users depend on, fostering loyalty.

Layer 3: Skills & Capabilities

What specific skills should an AI agent possess, and how deeply should they be implemented, to differentiate from competitors? This layer focuses on creating user dependency through targeted capabilities.

In the support agent, basic skills might include reading account info, while advanced ones allow modifying billing, resetting passwords, or changing plans. Each skill adds value but raises risks, like erroneous changes.

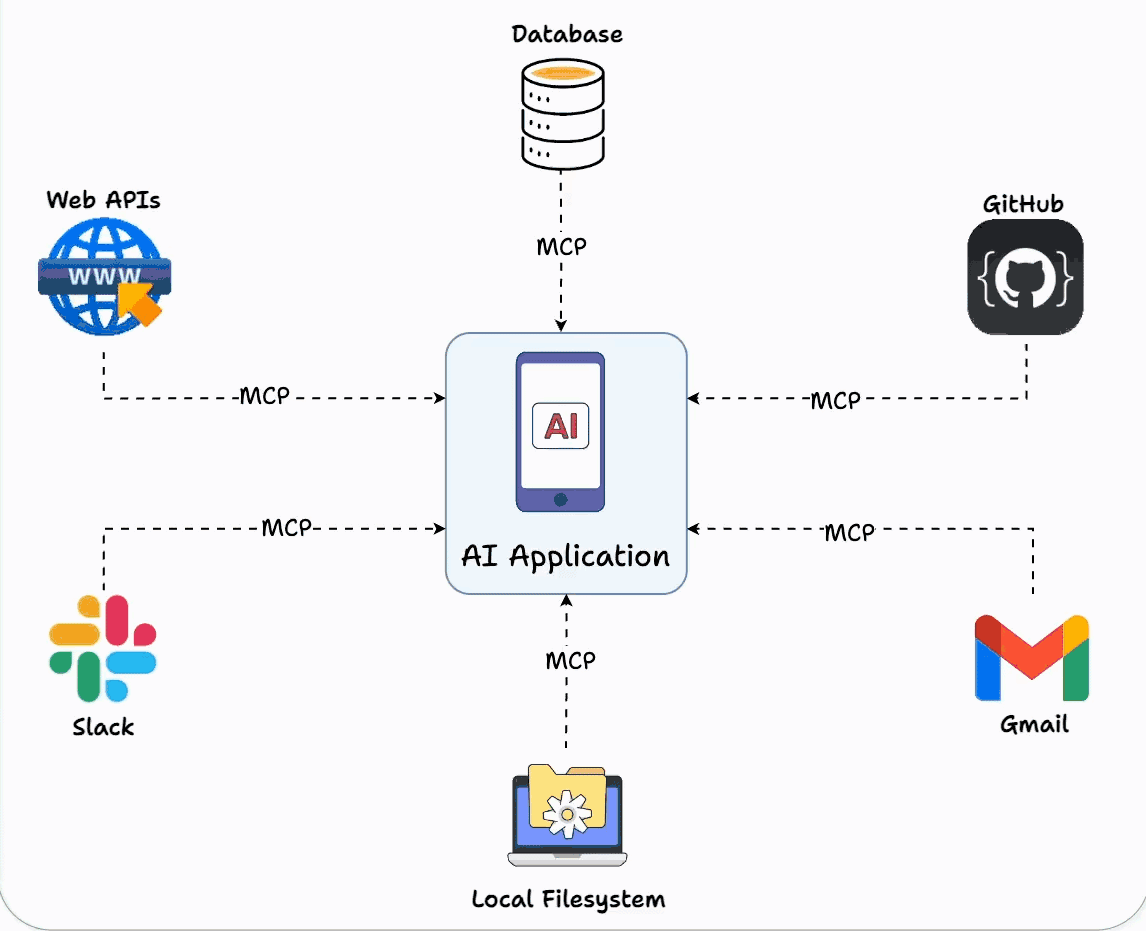

Tools like Model Context Protocol (MCP) simplify building and sharing skills. For instance, an MCP-enabled skill for password resets could be reused across agents, streamlining development.

In a practical scenario, when a user says “Fix my login,” the agent uses a read-only skill to diagnose, then a modification skill to reset, confirming first to build trust. This creates dependency, as users prefer the agent’s efficiency over manual processes.

List of skill considerations:

- Read-only skills: Low risk, for info gathering (e.g., check subscription status).
- Modification skills: Higher value, for actions (e.g., update plan).
- Depth levels: Basic (query data) vs. advanced (multi-step resolutions).
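One way to encode the read-only versus modification distinction is to tag each skill and gate the risky ones behind a confirmation. This is a sketch under assumptions: the `Skill` dataclass, the `execute` helper, and the two sample skills are hypothetical, and the account is a plain dictionary rather than a real user record.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Skill:
    name: str
    run: Callable[[dict], str]
    modifies_state: bool  # True for actions such as resets or plan changes


def execute(skill: Skill, account: dict, confirmed: bool = False) -> str:
    """Run read-only skills directly; require confirmation before modifications."""
    if skill.modifies_state and not confirmed:
        return f"Confirm before running '{skill.name}'?"
    return skill.run(account)


def check_status(account: dict) -> str:
    return f"Account is {account['status']}"


def reset_password(account: dict) -> str:
    account["status"] = "reset_email_sent"
    return "Password reset email sent"


diagnose = Skill("check_status", check_status, modifies_state=False)
reset = Skill("reset_password", reset_password, modifies_state=True)

account = {"status": "locked"}
print(execute(diagnose, account))               # read-only: runs immediately
print(execute(reset, account))                  # modification: asks first
print(execute(reset, account, confirmed=True))  # runs once the user agrees
```

This mirrors the “Fix my login” flow: diagnose with a low-risk skill, then confirm before the skill that changes anything.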

Personal Reflection: I’ve observed that chasing too many skills dilutes focus. The counterintuitive insight: Depth in a few key skills trumps breadth, as it solves real pain points deeply, making the agent irreplaceable.

Layer 4: Evaluation & Trust

How can an AI agent communicate limitations and build user confidence beyond mere accuracy? This layer emphasizes trustworthiness through transparency, not perfection.

For the support agent, strategies include confidence scores (“I’m 85% confident”), reasoning explanations (“I checked three systems”), and confirmations before actions. Graceful boundaries, like escalating complex issues, prevent frustration.

In a scenario with a combined login and billing problem, the agent might say: “I’m confident about the login but need to verify billing—let me connect you to a specialist.” This honesty fosters trust more than confident errors.

Trust strategies:

- Confidence indicators: Calibrate to match actual success rates.
- Reasoning transparency: Show checks and findings.
- Graceful boundaries: Smooth human handoffs with context.
- Confirmation patterns: Ask permission for high-impact actions.

Users trust agents that admit uncertainty, as it aligns expectations and reduces disappointment.
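Calibrating confidence indicators means comparing what the agent said with what actually happened. The sketch below shows one simple way to check that, assuming the team logs each stated confidence alongside whether the action succeeded. The `calibration_report` function and the sample history are invented for illustration.

```python
from collections import defaultdict


def calibration_report(outcomes: list[tuple[float, bool]]) -> dict[float, float]:
    """Group (stated_confidence, succeeded) pairs by stated confidence, rounded
    to one decimal, and return the observed success rate for each group."""
    buckets: dict[float, list[bool]] = defaultdict(list)
    for stated, succeeded in outcomes:
        buckets[round(stated, 1)].append(succeeded)
    return {level: sum(results) / len(results)
            for level, results in sorted(buckets.items())}


# Invented sample: the agent claimed 80% confidence five times and was right three times.
history = [
    (0.8, True), (0.8, True), (0.8, False), (0.8, True), (0.8, False),
    (0.5, True), (0.5, False),
]
report = calibration_report(history)
for stated, observed in report.items():
    print(f"stated {stated:.0%}, observed {observed:.0%}")
```

In this made-up sample the agent is overconfident at the 80% level, which is the kind of gap that would justify lowering the stated figure or asking for confirmation more often.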

Personal Reflection: The biggest lesson is that trust isn’t accuracy—it’s predictability. Agents that overpromise erode confidence; those that underpromise and overdeliver build lasting adoption.

Orchestration Approaches for AI Agents

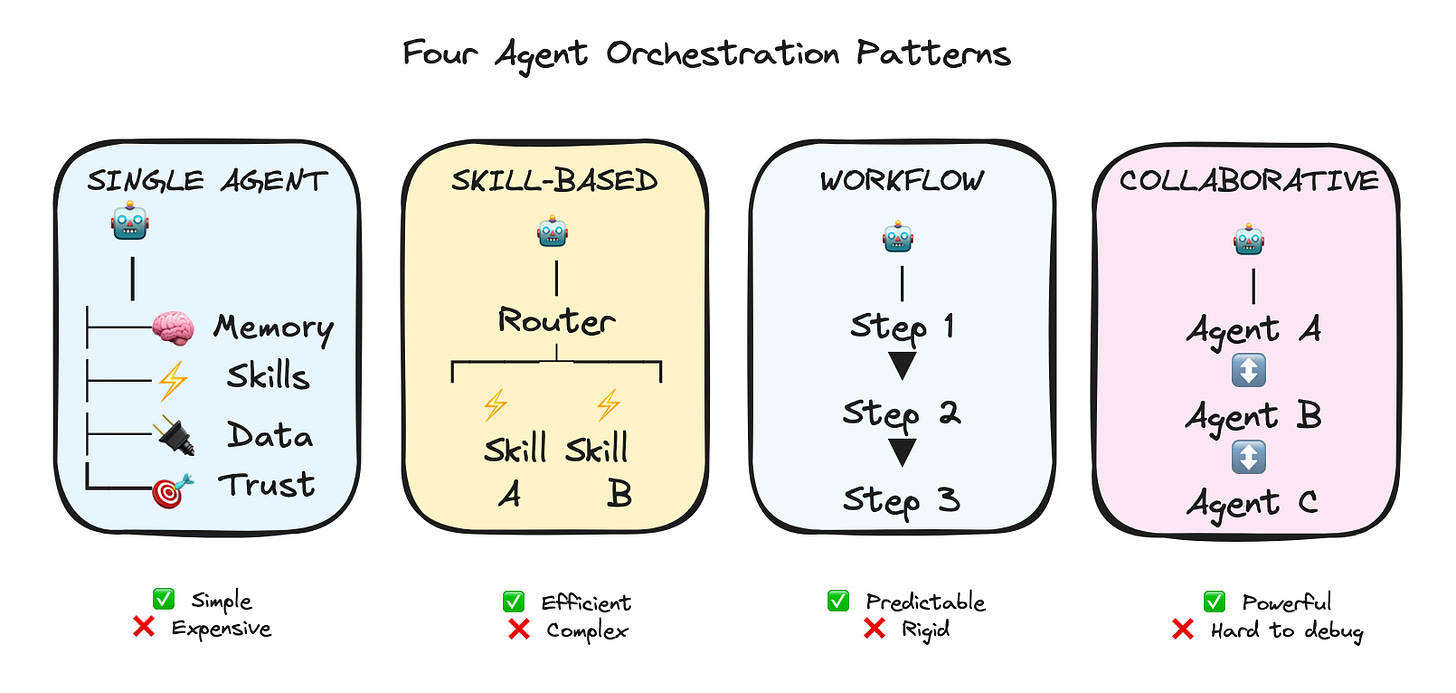

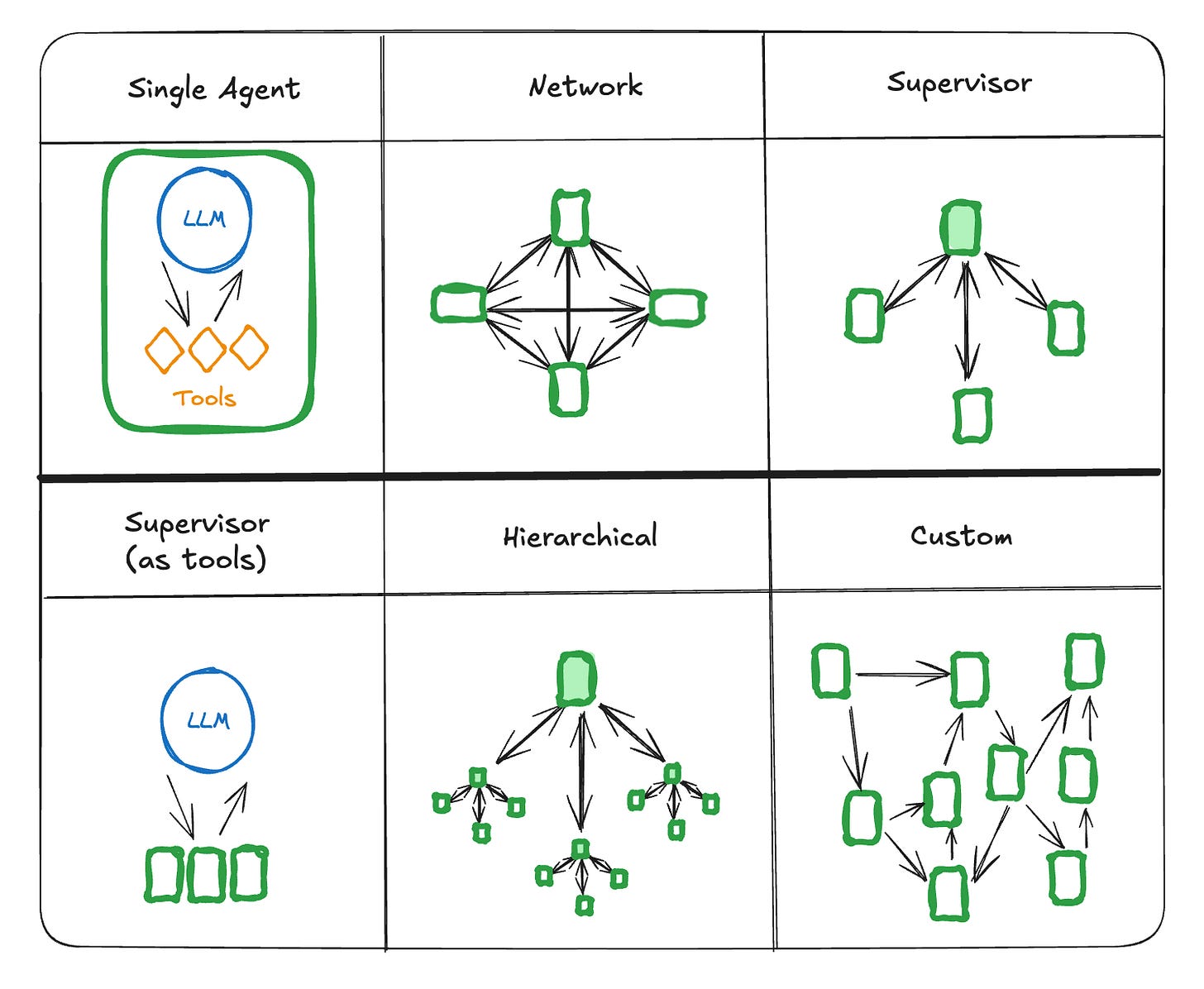

How do you implement AI agent architecture to handle user requests efficiently? Orchestration choices like single-agent or skill-based determine development ease and iteration speed.

Image source: Substack

Single-Agent Architecture

What is the simplest way to build an effective AI agent? Start with a single-agent setup where all processing occurs in one context, ideal for straightforward implementations.

In the support agent, a user query like “I can’t log in” is handled end-to-end: checking status, identifying issues, and offering solutions. This is simple, debuggable, and cost-predictable.

Pros: Easy to manage; you know exactly what the agent can do. Cons: Expensive for complex requests, because the full context is loaded every time.

Many teams succeed here without advancing, as it covers most cases.

Skill-Based Architecture

When do a router and specialized skills improve AI agent efficiency? Use this for optimization, where a router directs queries to specific skills.

Example flow for “I can’t log in”:

- Router sends to LoginSkill.
- LoginSkill checks and detects billing issue.
- Hands off to BillingSkill for renewal.

MCP standardizes skill capabilities, easing routing. Pros: Model optimization per skill. Cons: Complex coordination.
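The router-and-handoff flow can be sketched in a few lines. This is a simplified illustration, not an MCP implementation: the keyword-based `route` function, the `SKILLS` registry, and the `handoff` convention are all assumptions, and a real router would likely use a classifier or a model call.

```python
def login_skill(account: dict) -> dict:
    """Diagnose a login problem; hand off to billing if that is the root cause."""
    if account.get("subscription") == "expired":
        return {"handoff": "billing", "note": "login blocked by expired subscription"}
    return {"resolved": "password reset offered"}


def billing_skill(account: dict) -> dict:
    return {"resolved": "renewal offered"}


SKILLS = {"login": login_skill, "billing": billing_skill}


def route(query: str) -> str:
    """Naive keyword router, used here only to keep the sketch self-contained."""
    return "login" if "log in" in query.lower() else "billing"


def handle(query: str, account: dict, max_hops: int = 3) -> list[str]:
    """Run the routed skill and follow handoffs, returning a trace of the steps."""
    trace: list[str] = []
    skill_name = route(query)
    for _ in range(max_hops):  # cap hops so two skills cannot hand off forever
        result = SKILLS[skill_name](account)
        if "handoff" in result:
            trace.append(f"{skill_name} -> {result['handoff']}: {result['note']}")
            skill_name = result["handoff"]
        else:
            trace.append(f"{skill_name}: {result['resolved']}")
            break
    return trace


trace = handle("I can't log in", {"subscription": "expired"})
for step in trace:
    print(step)
```

The trace also hints at the coordination cost: every handoff is another place where context can be lost or a loop can form, hence the hop limit.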

Workflow-Based Architecture

How can predefined workflows ensure predictability in AI agents? Tools like LangGraph or CrewAI define steps for common scenarios.

For account access: Check status, then failed attempts, billing, and route accordingly. Pros: Auditable for compliance. Cons: Rigid for edge cases.
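To show what a predefined workflow looks like without tying it to a specific framework, here is a plain-Python sketch of the account access checks. It does not use LangGraph or CrewAI; the function name, the field names, the route labels, and the threshold of five failed attempts are all hypothetical.

```python
def account_access_workflow(account: dict) -> tuple[str, list[str]]:
    """Run fixed-order checks (status, failed attempts, billing).

    Returns the chosen route and an audit log of every check performed.
    """
    audit: list[str] = []

    audit.append(f"status={account['status']}")
    if account["status"] == "suspended":
        return "escalate_to_human", audit

    audit.append(f"failed_attempts={account['failed_attempts']}")
    if account["failed_attempts"] >= 5:  # assumed lockout threshold
        return "offer_password_reset", audit

    audit.append(f"billing={account['billing']}")
    if account["billing"] == "past_due":
        return "offer_renewal", audit

    return "no_issue_found", audit


decision, audit_log = account_access_workflow(
    {"status": "active", "failed_attempts": 1, "billing": "past_due"}
)
print(decision)
print(audit_log)
```

The audit log is what makes this style attractive for compliance, and the final `no_issue_found` branch is where the rigidity shows: anything outside the three checks falls through.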

Collaborative Architecture

What does the future of multi-agent collaboration look like? Specialized agents coordinate via protocols for complex problems.

In support, AuthenticationAgent, BillingAgent, and CommunicationAgent work together. Vision: Cross-company collaboration, such as a booking agent coordinating with an airline’s agent. Challenges: Security, reliability.

Start simple with single-agent; add complexity as needed.

Image source: Substack

Personal Reflection: Debugging multi-agent setups can be nightmare-inducing, but the insight is that simplicity scales better initially. Overcomplicating early often stalls launches.

The Counterintuitive Truth About Building Trust

Why do users trust AI agents that admit weaknesses more than perfect ones? Honesty about uncertainty prevents trust erosion from confident mistakes.

In support, an agent that says “I’ve reset your password” when the reset actually failed breaks trust. Saying “I’m 80% confident this will work. Should I proceed?” sets expectations instead.

Focus on:

- Confidence calibration: Match stated to actual rates.
- Reasoning transparency: Detail checks.
- Graceful escalation: Smooth human transfers.

Transparency trumps accuracy for adoption.
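The choice between acting, asking, and escalating can be written down as a small policy. The sketch below is one possible shape, not a recommended setting: the `next_step` function and both thresholds (0.9 and 0.6) are assumptions that a team would tune against its own calibration data.

```python
def next_step(confidence: float, high_impact: bool,
              act_threshold: float = 0.9, ask_threshold: float = 0.6) -> str:
    """Decide whether the agent acts, asks permission, or escalates to a human."""
    if confidence < ask_threshold:
        return "escalate"        # too uncertain: hand off with context
    if high_impact or confidence < act_threshold:
        return "ask_permission"  # e.g. "I'm 80% confident. Should I proceed?"
    return "act"


print(next_step(0.80, high_impact=True))   # ask_permission
print(next_step(0.95, high_impact=False))  # act
print(next_step(0.40, high_impact=False))  # escalate
```

Note that a high-impact action always asks first in this sketch, even at high confidence, which matches the confirmation pattern described earlier.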

Personal Reflection: Obsessing over perfection misses the point—users want reliability, not infallibility. This shifts PM focus from metrics to experiences.

What’s Next in AI Agent Design

What autonomy and governance decisions await in advanced AI agent building? Upcoming discussions will cover independence levels, permission vs. forgiveness, and practical governance challenges.

Conclusion

Architecting AI agents for adoption means prioritizing trust through layered decisions and transparent orchestration. By focusing on user experiences in scenarios like customer support, PMs can create magical, dependable agents.

Image source: Unsplash

Practical Summary / Action Checklist

- Assess Memory Needs: Start with session and contextual; add customer/behavioral based on user feedback.
- Prioritize Integrations: Begin with 2-3 key systems like billing and CRM.
- Define Skills: Focus on core read/modify capabilities; use MCP for scalability.
- Build Trust Mechanisms: Implement confidence scores, transparency, and escalations.
- Choose Orchestration: Default to single-agent; evolve to skill-based as complexity grows.
- Iterate on Feedback: Monitor abandonment points and refine layers accordingly.
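For the last checklist item, a simple way to find abandonment points is to count where unresolved sessions stopped. This sketch assumes each session is logged as a list of step names plus a `resolved` flag; the `abandonment_points` function, the log format, and the sample sessions are invented for illustration.

```python
from collections import Counter


def abandonment_points(sessions: list[dict]) -> list[tuple[str, int]]:
    """Count the last step reached by sessions that ended unresolved, most common first."""
    drops = Counter(
        s["steps"][-1] for s in sessions if s["steps"] and not s["resolved"]
    )
    return drops.most_common()


# Invented sample logs.
sessions = [
    {"steps": ["greeting", "clarifying_question"], "resolved": False},
    {"steps": ["greeting", "clarifying_question"], "resolved": False},
    {"steps": ["greeting", "diagnosis", "fix_offered"], "resolved": True},
    {"steps": ["greeting", "diagnosis", "escalation"], "resolved": False},
]
for step, count in abandonment_points(sessions):
    print(step, count)
```

In this made-up data most drop-offs happen at the clarifying question, which would point back at the memory and integration layers rather than at model accuracy.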

One-Page Speedview

Core Problem: Why capability ≠ adoption? Architectural choices build trust.

Layers:

- Memory: Types for understanding.
- Integration: Depth for utility.
- Skills: Differentiation via capabilities.
- Trust: Transparency over accuracy.

Orchestration:

- Single: Simple start.
- Skill: Efficient routing.
- Workflow: Predictable paths.
- Collaborative: Future coordination.

Trust Insight: Admit limits for better adoption.

Next: Autonomy and governance.

FAQ

What decisions define AI agent memory layers?

Memory choices like session, customer, behavioral, and contextual determine how well the agent anticipates user needs.

How do integrations affect AI agent reliability?

Starting with key systems like billing and CRM adds value but introduces risks like downtime.

What role do skills play in AI agent differentiation?

Skills like reading or modifying data create dependency, enhanced by tools like MCP.

Why is trust more important than accuracy in AI agents?

Users prefer agents that communicate uncertainties honestly to avoid disappointment.

When should you use single-agent architecture?

It’s ideal for starting simple, handling most cases without added complexity.

How does skill-based architecture improve efficiency?

By routing to specialized skills and using appropriate models for each.

What are the drawbacks of workflow-based architecture?

It can feel rigid for uncommon edge cases.

Why start simple in AI agent orchestration?

To avoid unnecessary complexity and focus on real limitations.