PageIndex: When RAG Bids Farewell to Vector Databases—How Reasoning-Driven Retrieval is Reshaping Long-Document Analysis

Image source: PageIndex Official Repository

The core question this article answers: Why do traditional vector-based RAG systems consistently fail when handling professional long documents, and how does PageIndex achieve truly human-like precision through its “vectorless, chunkless” reasoning-driven architecture?

If you’ve ever asked a financial analysis RAG system about the specific reasons for intangible asset impairment in a company’s Q3 report, only to receive generic statements about fixed asset depreciation, you’ve experienced the structural flaw that plagues traditional retrieval systems. Semantic similarity is not the same as true relevance, and this fundamental mismatch becomes catastrophic when dealing with complex professional documents.

What Is PageIndex?

Summary: This section introduces PageIndex as a reasoning-based RAG framework that eliminates vector databases and chunking, enabling LLMs to navigate documents like human experts.

The core question this section answers: What exactly is PageIndex, and why is it called the first RAG framework to enable “human-like retrieval”?

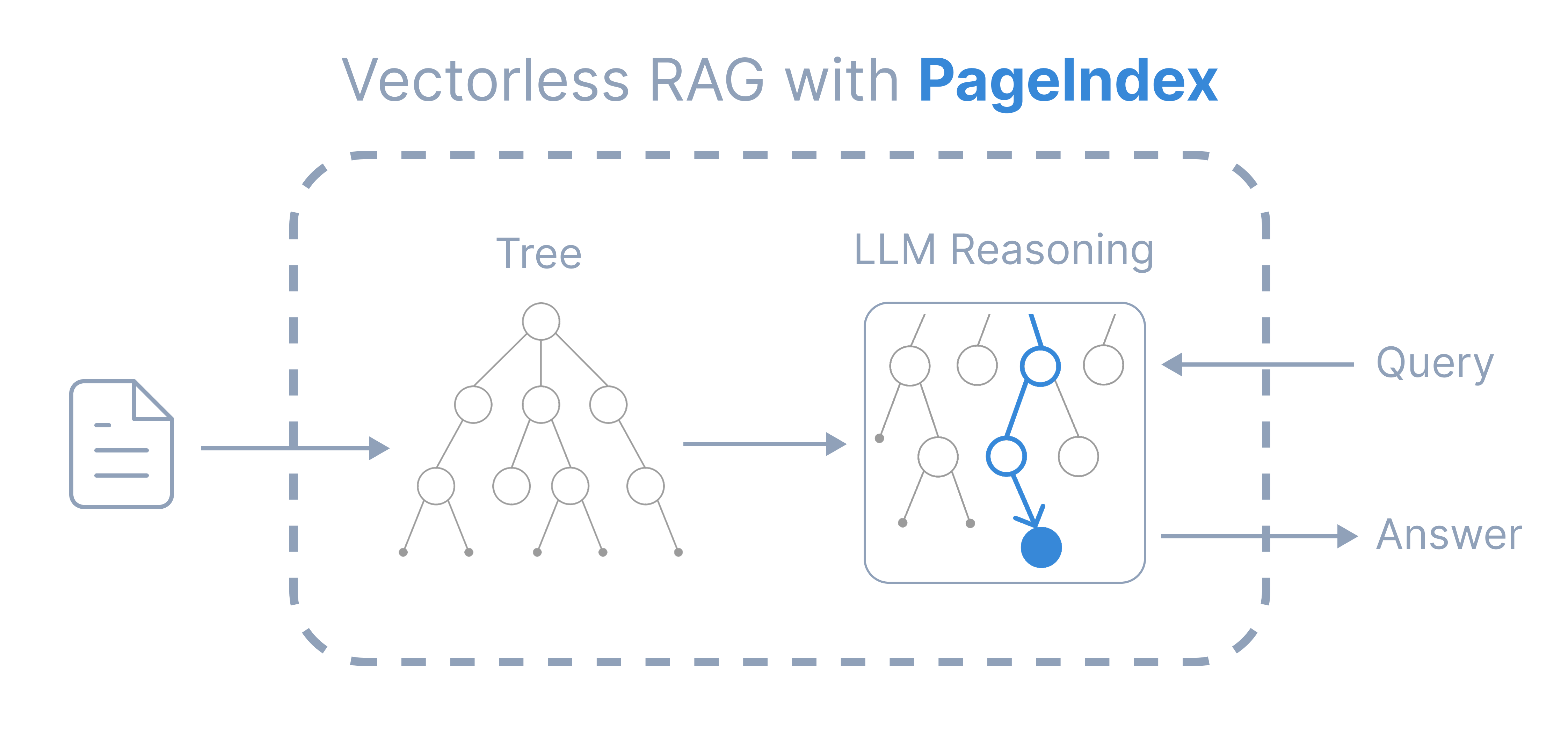

PageIndex is an open-source, reasoning-driven retrieval-augmented generation system built on a radical premise: completely abandon vector databases, reject mechanical text chunking, and let large language models “think” about how to retrieve information. It transforms lengthy PDF documents into hierarchical tree structures, mimicking how human experts navigate complex documents—first scanning the table of contents to locate sections, then diving into specific paragraphs to extract precise knowledge.

This framework’s distinction lies not in incremental optimization of existing RAG pipelines, but in reconstructing the fundamental logic of retrieval. While traditional RAG relies on vector similarity for “fuzzy matching,” PageIndex generates a “table-of-contents” tree index and performs “precise reasoning” through tree search algorithms. This design demonstrates remarkable accuracy and explainability when processing professional documents that exceed LLM context limits.

PageIndex has evolved into a complete product ecosystem: an open-source code repository for local deployment, a ready-to-use cloud-based Chat Platform, plus MCP (Model Context Protocol) integration and API access. This flexible deployment model means that whether you’re an independent developer or an enterprise user, you can find a suitable integration path.

Author’s reflection: Having evaluated dozens of RAG solutions, most get trapped in endless cycles of adjusting vector dimensions and chunking strategies, attempting to compensate for fundamental flaws with finer parameter tuning. PageIndex’s philosophy made me realize we’ve likely been over-optimizing in the wrong direction. Human experts never slice documents into fragments and calculate similarity—they use structured tables of contents and logical reasoning to rapidly locate answers. This “back-to-basics” design philosophy is precisely what AI systems most lack today.

Technical Deep Dive: How the Architecture Works

Summary: This section explains PageIndex’s two-stage process—tree structure generation and reasoning-based tree search—and reveals why it surpasses vector similarity.

The core question this section answers: How does PageIndex’s “vectorless, chunkless” architecture actually work, and what’s the technical essence of its reasoning mechanism?

PageIndex operates through two distinct phases, each representing a complete departure from conventional RAG design patterns.

Stage One: Generating the Hierarchical Tree Index

In the first phase, the system ingests a long document (PDF or Markdown) and transforms it into a semantic tree structure. This isn’t simple table-of-contents extraction—it’s a “table-of-contents” index optimized for LLMs, generated based on content semantics. Each node contains five essential attributes:

- title: A semantic title summarizing the node’s content scope
- node_id: A unique identifier tracking the retrieval path
- start_index/end_index: The page range covered by the node
- summary: A condensed abstract of the node’s content
- nodes: An array of child nodes forming the hierarchical relationship

A typical generated tree structure looks like this:

```json
{
  "title": "Financial Stability",
  "node_id": "0006",
  "start_index": 21,
  "end_index": 22,
  "summary": "The Federal Reserve ...",
  "nodes": [
    {
      "title": "Monitoring Financial Vulnerabilities",
      "node_id": "0007",
      "start_index": 22,
      "end_index": 28,
      "summary": "The Federal Reserve's monitoring ..."
    },
    {
      "title": "Domestic and International Cooperation",
      "node_id": "0008",
      "start_index": 28,
      "end_index": 31,
      "summary": "In 2023, the Federal Reserve collaborated ..."
    }
  ]
}
```
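To downstream code, the tree is just nested JSON. The following minimal sketch assumes only the five fields listed above. It loads the example, walks it in document order, and looks a node up by node_id. `iter_nodes` and `find_node` are hypothetical helper names, not PageIndex APIs.

```python
import json
from typing import Iterator, List, Optional, Tuple, TypedDict


class Node(TypedDict, total=False):
    """One entry of the tree index; field names follow the JSON example above."""
    title: str
    node_id: str
    start_index: int     # first page covered by the node
    end_index: int       # last page covered by the node
    summary: str
    nodes: List["Node"]  # child nodes; absent on leaves


def iter_nodes(node: Node, depth: int = 0) -> Iterator[Tuple[int, Node]]:
    """Yield (depth, node) pairs in document order (pre-order, depth-first)."""
    yield depth, node
    for child in node.get("nodes", []):
        yield from iter_nodes(child, depth + 1)


def find_node(root: Node, node_id: str) -> Optional[Node]:
    """Look a node up by node_id, e.g. to resolve a retrieval result to its pages."""
    for _, node in iter_nodes(root):
        if node.get("node_id") == node_id:
            return node
    return None


tree: Node = json.loads("""
{"title": "Financial Stability", "node_id": "0006", "start_index": 21, "end_index": 22,
 "summary": "The Federal Reserve ...",
 "nodes": [
  {"title": "Monitoring Financial Vulnerabilities", "node_id": "0007",
   "start_index": 22, "end_index": 28, "summary": "The Federal Reserve's monitoring ..."},
  {"title": "Domestic and International Cooperation", "node_id": "0008",
   "start_index": 28, "end_index": 31, "summary": "In 2023, the Federal Reserve collaborated ..."}
 ]}
""")

# Print an indented outline: node_id, title, and page range.
for depth, node in iter_nodes(tree):
    print("  " * depth + f'{node["node_id"]} {node["title"]} (pp. {node["start_index"]}-{node["end_index"]})')
```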

This structure preserves the document’s original logical hierarchy. When analyzing a 200-page SEC filing, the system doesn’t mechanically slice it into twenty 10-page chunks. Instead, it generates semantically clear chapter nodes like “Management Discussion,” “Financial Statements,” and “Risk Factors,” with each chapter subdivided into specific topics.
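The contrast can be made concrete with a minimal sketch. It cuts a 200-page filing into twenty fixed 10-page chunks and checks which chunks straddle a section boundary. The section layout is hypothetical and chosen only for illustration. In a tree index, each of those sections would instead become its own node.

```python
from typing import List, Tuple

# Hypothetical section layout of a 200-page filing: (title, first page, last page).
SECTIONS: List[Tuple[str, int, int]] = [
    ("Risk Factors", 1, 37),
    ("Management Discussion", 38, 94),
    ("Financial Statements", 95, 200),
]


def fixed_chunks(num_pages: int, size: int) -> List[Tuple[int, int]]:
    """Cut pages 1..num_pages into consecutive (first, last) chunks of `size` pages."""
    return [(start, min(start + size - 1, num_pages))
            for start in range(1, num_pages + 1, size)]


def straddling(chunks: List[Tuple[int, int]],
               sections: List[Tuple[str, int, int]]) -> List[Tuple[int, int]]:
    """Return the chunks whose pages overlap more than one section."""
    mixed = []
    for first, last in chunks:
        touched = [title for title, start, end in sections if start <= last and first <= end]
        if len(touched) > 1:
            mixed.append((first, last))
    return mixed


chunks = fixed_chunks(200, 10)
print(len(chunks), "chunks;", straddling(chunks, SECTIONS), "mix two sections")
```

Each mixed chunk would pack text from two unrelated sections into a single embedding, which is exactly the boundary problem a structure-preserving index avoids.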

Application scenario: Imagine analyzing a legal contract. Traditional RAG might conflate “Breach of Contract” clauses with “Dispute Resolution” provisions because they share semantic keywords. PageIndex constructs a tree with nodes like “Contract General Terms,” “Rights and Obligations,” “Breach of Contract,” and “Dispute Resolution.” When you ask about “liquidated damages calculation methods,” the system precisely navigates to the “Breach of Contract” node instead of getting lost among similar-looking paragraphs.

Stage Two: Reasoning-Based Tree Search

The second phase embodies the core innovation. Instead of computing vector similarity, the system employs an LLM to perform “reasoning search” on the generated tree. This process resembles AlphaGo’s decision-making on a Go board: the model evaluates the relevance between the current node and the query, decides whether to dive into child nodes or backtrack to parent nodes, and progressively narrows the scope through multi-step reasoning to pinpoint the most relevant page range.

Specifically, the system maintains a search path where each step is determined by the LLM based on:

- Semantic alignment between the node summary and the query
- The guidance value of child node titles
- Context from previously visited paths
- Remaining search budget (to prevent infinite loops)
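The loop described above can be sketched in a few lines under clearly stated assumptions. `choose` is a hypothetical callable that stands in for the LLM’s judgment. `keyword_chooser` is a crude word-overlap substitute, included only so the example runs offline. Neither is PageIndex’s actual code or prompt. The sketch demonstrates the control flow: descending, backtracking, stopping, and capping the number of steps with a budget, while recording the path for later audit.

```python
from typing import Any, Callable, Dict, List, Tuple, Union

Node = Dict[str, Any]
Decision = Union[int, str]  # index of a child to enter, "back", or "stop"
Chooser = Callable[[str, Node, List[str]], Decision]


def tree_search(root: Node, query: str, choose: Chooser,
                budget: int = 10) -> Tuple[Node, List[str]]:
    """Walk the tree as directed by `choose`; return the chosen node and the visited path."""
    stack: List[Node] = [root]            # ancestors of the node under review, root first
    visited: List[str] = [root["title"]]  # readable trace of every move, kept for auditing
    for _ in range(budget):               # the budget caps the number of decisions
        decision = choose(query, stack[-1], visited)
        if decision == "stop":            # the current node is where the answer lives
            break
        if decision == "back":
            if len(stack) == 1:           # already at the root: nothing to return to
                break
            stack.pop()
            visited.append("back to " + stack[-1]["title"])
            continue
        child = stack[-1]["nodes"][decision]  # descend into the chosen child
        stack.append(child)
        visited.append(child["title"])
    return stack[-1], visited


def keyword_chooser(query: str, node: Node, visited: List[str]) -> Decision:
    """Offline stand-in for the LLM: enter the child sharing the most words with the query."""
    words = set(query.lower().split())
    children = node.get("nodes", [])
    scores = [len(words & set((c["title"] + " " + c.get("summary", "")).lower().split()))
              for c in children]
    if not scores or max(scores) == 0:
        return "stop"
    return scores.index(max(scores))


contract = {
    "title": "Contract", "summary": "full agreement",
    "nodes": [
        {"title": "Rights and Obligations", "summary": "duties of each party"},
        {"title": "Breach of Contract", "summary": "remedies and liquidated damages calculation",
         "nodes": [{"title": "Liquidated Damages", "summary": "calculation method for damages"}]},
        {"title": "Dispute Resolution", "summary": "arbitration and venue"},
    ],
}

found, path = tree_search(contract, "liquidated damages calculation methods", keyword_chooser)
print(found["title"], path)
```

The returned `path` is the kind of step-by-step trace that makes the retrieval auditable. A real chooser would base each decision on an LLM reading the node summaries.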

This design delivers two breakthrough advantages:

- Explainability: The retrieval path is fully transparent. You can clearly understand why the system chose Chapter 7 over Chapter 3, with each decision explicitly justified by reasoning. No more opaque, approximate vector search (“vibe retrieval”).
- Precision: Since search is based on structured reasoning rather than fuzzy matching, the system can distinguish between “looks similar” and “truly relevant.” In financial analysis, this means accurately differentiating between “revenue recognition policy” and “revenue growth rate,” concepts that are semantically close but fundamentally different.

Author’s reflection: Traditional RAG’s “black box” nature has always been a pain point. When a system returns incorrect answers, diagnosing whether the problem lies with the embedding model, chunking strategy, or vector retrieval limitations is nearly impossible. PageIndex’s transparent paths remind me of decision trees in early expert systems—every branch is auditable. Achieving this balance between peak performance and interpretability is especially valuable in today’s AI development landscape.

Core Features & Advantages

Summary: This section compares PageIndex against traditional vector RAG across multiple dimensions, backed by performance data.

The core question this section answers: What concrete improvements does PageIndex deliver compared to traditional vector-based RAG?

| Feature Dimension | Traditional Vector RAG | PageIndex | Real-World Impact |
|---|---|---|---|
| Search Foundation | Vector similarity search | LLM reasoning-driven | From “fuzzy matching” to “precise reasoning” |
| Document Processing | Fixed-length chunking | Preserves original structure | Retains chapter logic and context |
| Knowledge Representation | Flat vector space | Hierarchical tree index | Simulates human document navigation |
| Explainability | Black-box retrieval | Full reasoning path | Traceable decision basis |
| Infrastructure | Requires a vector database | No vector database; tree index stored as JSON | Reduces system complexity and cost |
| Professional Performance | Prone to failure on edge cases | 98.7% accuracy on FinanceBench (Mafin 2.5) | Meets professional document analysis needs |

The Real Value of Eliminating Vector Databases

Removing vector databases isn’t just architectural simplification—it’s a paradigm shift. Vector databases require a continuous index maintenance pipeline: whenever documents update, you must recalculate embeddings, update indexes, and handle version compatibility. In fast-changing business environments, this overhead is often underestimated.

Because PageIndex needs no vector store, the only index it keeps is a JSON tree generated from the source document itself, with no embedding pipeline to maintain. In legal consultation scenarios, when new regulations are released, the system can answer questions about the new files as soon as their trees are generated, without re-embedding text or rebuilding a vector index. This immediacy often matters more than retrieval speed in professional domains.

The Natural Advantage of No Chunking

Traditional RAG’s chunking strategy is an eternal dilemma. Chunks that are too large lose precision; chunks that are too small lose context. PageIndex sidesteps this trade-off through semantic tree nodes: parent nodes provide breadth (chapter overviews), while child nodes provide depth (specific clauses). When you ask a comprehensive question like “Describe the company’s main risks and mitigation measures,” the system can collect information from all child nodes under the “Risk Factors” parent to generate a complete answer.
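Answering a broad question from a parent node amounts to gathering everything beneath it. The following minimal sketch assumes the node schema shown earlier and uses a hypothetical “Risk Factors” branch. `collect_leaves` and `page_span` are illustrative helpers, not PageIndex functions.

```python
from typing import Any, Dict, List, Tuple

Node = Dict[str, Any]


def collect_leaves(node: Node) -> List[Node]:
    """Every leaf under `node`, in document order (the node itself if it has no children)."""
    children = node.get("nodes", [])
    if not children:
        return [node]
    leaves: List[Node] = []
    for child in children:
        leaves.extend(collect_leaves(child))
    return leaves


def page_span(nodes: List[Node]) -> Tuple[int, int]:
    """Smallest (first, last) page range covering all the given nodes."""
    return min(n["start_index"] for n in nodes), max(n["end_index"] for n in nodes)


# Hypothetical "Risk Factors" branch of a filing's tree.
risk_factors = {
    "title": "Risk Factors", "start_index": 20, "end_index": 31,
    "nodes": [
        {"title": "Market Risks", "start_index": 20, "end_index": 24},
        {"title": "Operational Risks", "start_index": 25, "end_index": 31,
         "nodes": [
             {"title": "Supply Chain", "start_index": 25, "end_index": 28},
             {"title": "Mitigation Measures", "start_index": 29, "end_index": 31},
         ]},
    ],
}

leaves = collect_leaves(risk_factors)
print([leaf["title"] for leaf in leaves], page_span(leaves))
```

In a full pipeline, the text of those pages would then be passed to the LLM together as context for the comprehensive answer.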

Application scenario: In medical research, a clinical trial report contains sections like “Patient Baseline Characteristics,” “Intervention Protocol,” “Adverse Events,” and “Statistical Analysis.” Traditional RAG might split “drug dosage” and “administration frequency” into different chunks, leading to incomplete answers. PageIndex maintains the integrity of the “Intervention Protocol” chapter, ensuring all related parameters are retrieved and understood within the same node.

Real-World Deployment Guide

Summary: A step-by-step implementation guide covering installation, configuration, and advanced parameter tuning for production use.

The core question this section answers: How can developers integrate PageIndex into their projects? What are the concrete steps and best practices?

Environment Setup & Installation

Deploying PageIndex requires minimal prerequisites: a Python environment and an LLM API key. No vector database or complex middleware is required.

First, clone the repository and install dependencies:

```bash
# Clone the project
git clone https://github.com/VectifyAI/PageIndex.git
cd PageIndex

# Install dependencies (minimal Python packages required)
pip3 install --upgrade -r requirements.txt
```

The dependency list is remarkably lean, primarily including OpenAI’s Python SDK and some PDF processing libraries. This means you can complete environment setup within 15 minutes without wrestling with CUDA drivers or database configurations.

Next, configure your API key by creating a .env file in the project root:

```
CHATGPT_API_KEY=sk-your-openai-api-key-here
```

Author’s reflection: The minimalist dependency design impressed me deeply. In past RAG implementations, environment configuration often consumed over 30% of project time, especially with team members using different operating systems. PageIndex’s “lightweight” philosophy lets developers focus on business logic rather than infrastructure—a boon for startup teams and resource-constrained projects.

Core Implementation

Running PageIndex requires just one command, but understanding its parameters is crucial for optimal results:

```bash
python3 run_pageindex.py --pdf_path /path/to/your/document.pdf
```

This command triggers the complete pipeline: PDF parsing → tree generation → index persistence. After processing, you receive a JSON file containing the full tree index ready for querying.

Let’s understand this process through a realistic scenario. Suppose you’re analyzing Tesla’s SEC 10-Q filing for Q3 2024 (~150 pages). The system generates a top-level structure like:

```json
{
  "title": "FORM 10-Q Quarterly Report",
  "node_id": "0001",
  "start_index": 1,
  "end_index": 150,
  "summary": "Tesla, Inc. quarterly report for Q3 2024 covering financial results, risk factors, and management analysis",
  "nodes": [
    {
      "title": "PART I - FINANCIAL INFORMATION",
      "node_id": "0002",
      "start_index": 2,
      "end_index": 45,
      "summary": "Condensed consolidated financial statements and notes, management's discussion and analysis, and controls and procedures"
    },
    {
      "title": "PART II - OTHER INFORMATION",
      "node_id": "0003",
      "start_index": 46,
      "end_index": 150,
      "summary": "Legal proceedings, risk factors, and other disclosures"
    }
  ]
}
```

This structure immediately reveals the document’s macro-architecture, enabling subsequent queries to directly target “Financial Information” or “Other Information” sections.

Advanced Parameter Tuning Strategies

For power users seeking optimal performance, PageIndex offers fine-grained parameter controls:

```bash
python3 run_pageindex.py \
  --pdf_path /path/to/complex_report.pdf \
  --model gpt-4o-2024-11-20 \
  --toc-check-pages 30 \
  --max-pages-per-node 5 \
  --max-tokens-per-node 15000 \
  --if-add-node-id yes \
  --if-add-node-summary yes
```

Practical implications of parameter tuning:

- --toc-check-pages 30: Sets how many pages from the start of the document are scanned for an existing table of contents (the default is 20). Raising it helps when the table of contents sits behind lengthy front matter, as in some scanned reports.
- --max-pages-per-node 5: Reduces maximum node granularity from the default 10 pages to 5 pages, which suits scenarios that need finer retrieval precision, such as legal clause analysis. The trade-off is increased token consumption and generation time.
- --max-tokens-per-node 15000: Caps how many tokens a single node may hold (the default is 20,000). Lowering it keeps dense sections in smaller, more focused nodes; raising it, for example for technical manuals, keeps more detail together in one node.
- --if-add-node-id yes and --if-add-node-summary yes: Include the node_id and summary fields shown in the tree examples above.
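The trade-off behind --max-pages-per-node shows up in the following sketch. It splits an oversized page range into children of at most N pages and counts the resulting nodes, a rough proxy for extra LLM calls. The even split by page count is a simplifying assumption and does not reproduce how PageIndex chooses node boundaries. `split_range` and `count_nodes` are hypothetical helpers.

```python
from typing import Any, Dict


def split_range(title: str, start: int, end: int, max_pages: int) -> Dict[str, Any]:
    """Build a node for pages start..end, splitting it evenly while it exceeds max_pages."""
    node: Dict[str, Any] = {"title": title, "start_index": start, "end_index": end}
    if end - start + 1 <= max_pages:
        return node  # small enough: keep as a leaf
    # Simplified even split into consecutive parts of at most max_pages pages.
    node["nodes"] = [
        split_range(f"{title} (part {i + 1})", s, min(s + max_pages - 1, end), max_pages)
        for i, s in enumerate(range(start, end + 1, max_pages))
    ]
    return node


def count_nodes(node: Dict[str, Any]) -> int:
    """Total number of nodes in the tree (each one may need its own summary)."""
    return 1 + sum(count_nodes(child) for child in node.get("nodes", []))


for limit in (10, 5):
    print(limit, count_nodes(split_range("Breach of Contract", 1, 40, limit)))
```

Halving the limit on a 40-page section roughly doubles the number of nodes. The extra nodes buy finer retrieval entries at the cost of more generation work.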

Application scenario: In audit workpaper analysis, auditors frequently jump between “Revenue Recognition,” “Cost Accounting,” and “Internal Controls” details scattered across different chapters. Setting max-pages-per-node to 3-5 pages ensures each audit point has an independent retrieval entry, preventing critical information from getting lost in lengthy text blocks.

Markdown Support & Special Considerations

Summary: PageIndex’s Markdown processing capabilities and important caveats about hierarchy preservation.

The core question this section answers: How does PageIndex handle Markdown files, and what pitfalls should developers avoid?

PageIndex supports direct Markdown file processing, offering developers great flexibility:

```bash
python3 run_pageindex.py --md_path /path/to/your/document.md
```

However, a critical limitation exists: Markdown processing relies on “#” symbols for hierarchy. If the file was converted from PDF or HTML and the conversion tool failed to preserve the original structure (a common problem with most existing tools), the generated tree structure will be flat and distorted.
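A minimal sketch of heading-based nesting shows why the “#” levels matter. `markdown_tree` is a hypothetical helper, not PageIndex’s parser. It nests headings by their “#” count, so a converter that emits every heading at the same level yields a flat tree. It also ignores one complication: lines starting with “# ” inside fenced code blocks would be misread as headings.

```python
import re
from typing import Any, Dict

HEADING = re.compile(r"^(#{1,6})\s+(.*\S)\s*$")  # ATX heading: 1-6 '#' then text


def markdown_tree(text: str) -> Dict[str, Any]:
    """Nest ATX headings by their '#' count under a synthetic root (body text is ignored)."""
    root: Dict[str, Any] = {"title": "(root)", "level": 0, "nodes": []}
    stack = [root]  # currently open sections, outermost first
    for line in text.splitlines():
        match = HEADING.match(line)
        if not match:
            continue
        node = {"title": match.group(2), "level": len(match.group(1)), "nodes": []}
        while stack[-1]["level"] >= node["level"]:  # close siblings and deeper sections
            stack.pop()
        stack[-1]["nodes"].append(node)
        stack.append(node)
    return root


def depth(node: Dict[str, Any]) -> int:
    """Number of levels in the tree, counting the synthetic root."""
    return 1 + max((depth(child) for child in node["nodes"]), default=0)


native = "# Guide\n## Install\n### Linux\n## Usage\n"
flattened = "# Guide\n# Install\n# Linux\n# Usage\n"  # every heading collapsed to one level
print(depth(markdown_tree(native)), depth(markdown_tree(flattened)))
```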

Best practice: For technical documentation, run PageIndex directly on the source Markdown rather than converted files. If you must process PDFs, use the PageIndex OCR service mentioned in the documentation (though commented out in the open-source repository), which is specifically designed to preserve global document structure.

Author’s reflection: This feature design reveals PageIndex team’s deep understanding of real-world pain points. Many developers attempt “quick conversions” from PDF to Markdown, only to lose structural information. Explicitly warning users about this trap and offering a specialized OCR solution demonstrates product thinking that prioritizes user value over feature list padding—no point in claiming functionality while hiding its limitations.

Application Scenarios & Real Cases

Summary: Concrete examples from the financial domain demonstrating PageIndex’s real-world impact and performance advantages.

The core question this section answers: In which business scenarios does PageIndex deliver maximum value, and what do actual deployments look like?

Financial Document Analysis: The Mafin 2.5 Benchmark

PageIndex’s most compelling success story is the Mafin 2.5 financial QA system. It achieved 98.7% accuracy on the FinanceBench benchmark—a professional dataset of complex financial questions where traditional vector RAG typically scores only 70-80%.

Specifically, Mafin 2.5 processes SEC filings, earnings call transcripts, and investor presentations. For example, when asked “How much of Amazon’s Q4 2023 capex was allocated to AWS infrastructure?” the system must:

- Locate the “Consolidated Statements of Cash Flows” in a 400-page 10-K filing
- Find the “Purchases of property and equipment” breakdown
- Cross-validate AWS segment capital expenditure disclosures
- Calculate the ratio and provide a cited answer

Traditional RAG might find paragraphs about “capital expenditure” but cannot precisely link to AWS-specific data. PageIndex’s tree structure enables the system to first lock onto the “Financial Statements” parent node, then dive into the “Statement of Cash Flows” child node, and finally pinpoint the exact page for “segment-level capital expenditure.”

Real-world impact: For financial analysts at institutions, this shrinks work from hours of “keyword search + manual browsing” to a natural language query answered with a precise location in seconds. Crucially, system responses include complete reasoning paths, allowing auditors to trace every figure to its source page, which meets compliance requirements.

Additional High-Value Scenarios

Legal Contract Review: In M&A due diligence, lawyers must quickly locate “Representations and Warranties,” “Indemnification,” and “Termination Conditions.” PageIndex’s tree structure naturally aligns with legal document organization, enabling page-level retrieval precision that dramatically reduces the risk of missing critical clauses.

Academic Literature Review: Facing hundreds of relevant papers, researchers can first use PageIndex to build a “Problem-Methods-Results-Conclusion” tree for each paper, then perform “tree-to-tree” reasoning-based literature synthesis rather than simple keyword matching.

Technical Support Manuals: For complex equipment maintenance manuals, when technicians ask “Troubleshooting start-up failure for Engine Model X in low-temperature environments,” the system navigates directly to “Troubleshooting” → “Start-up Issues” → “Environmental Factors,” returning complete answers with procedure steps, diagram page numbers, and safety warnings.

Author’s Reflection: The Limits of Vector Search & Future of Reasoning-Based Retrieval

Summary: A perspective on why PageIndex signals a fundamental shift in RAG thinking and what developers should learn from it.

The core question this section answers: From a technical practice standpoint, what fundamental shifts in RAG does PageIndex reveal? How should developers adjust their thinking?

After extensive use of PageIndex, I’m increasingly convinced we’re at an inflection point in RAG paradigms. For five years, the industry has poured energy into optimizing vector search: better embedding models, more efficient approximate nearest neighbor algorithms, smarter re-ranking strategies. These are all local optimizations built on the flawed premise that similarity equals relevance.

PageIndex’s value isn’t just providing a new tool—it’s proposing a new mental model: Retrieval is fundamentally a reasoning problem, not a matching problem. When we query a system, we expect a “thoughtful answer,” not a “text snippet that looks like an answer.”

This cognitive shift means three practical recommendations for developers:

- Stop over-optimizing chunking strategies: If you’re spending more than 20% of your time adjusting chunk size and overlap, you likely need to reconsider your architecture choice.
- Respect document structure: In RAG systems, a document’s original structure (table of contents, chapters, paragraphs) is a more reliable knowledge carrier than vector similarity.
- Explainability is core competitiveness: In professional domains, users care more about “why this answer was given” than “how fluent the answer sounds.”

Unique insight: PageIndex’s deeper revelation is “LLM as index.” Traditional architectures separate embedding models from generation models, creating a semantic gap. PageIndex lets the same LLM both “read” the document and “understand” the query. This end-to-end unification might be the future design direction for all AI systems—not for unification’s sake, but because the integrity of the reasoning chain determines the ceiling of system intelligence.

Practical Implementation Checklist

Summary: A concise, actionable checklist for quickly putting PageIndex into production workflows.

The core question this section answers: How can you rapidly apply PageIndex to real work? What are the critical steps and watchouts?

Deployment Checklist

- Environment Requirements: Python 3.8+, OpenAI API key (or compatible LLM)
- Installation: Run pip3 install -r requirements.txt
- Quick Test: Use the sample PDFs in the repository to verify installation
- Production Readiness:
  - Assess document complexity and tune max-pages-per-node
  - For scanned PDFs, consider OCR preprocessing
  - Implement result caching to avoid redundant tree generation

Best Usage Practices

- Structured documents (with clear TOCs): Default parameters work well; the system auto-detects chapter boundaries
- Unstructured documents (scanned reports): Increase --toc-check-pages to 30-50 and manually verify tree structure quality
- Oversized files (500+ pages): Split into logical volumes (by year, department) and generate trees separately, then build a top-level index
- Cost optimization: Tree generation is the main token consumer but is reusable. For frequent queries, persist the tree structure in JSON format
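Tree generation is the expensive step, so a simple cache keyed by the document’s content hash avoids regenerating trees for unchanged files. It also keeps one JSON file per document version. `cached_tree` and `fake_build` are hypothetical. A real `build` function would run PageIndex on the file and load the JSON it produces.

```python
import hashlib
import json
import tempfile
from pathlib import Path
from typing import Any, Callable, Dict, List

Tree = Dict[str, Any]


def cached_tree(doc: Path, cache_dir: Path, build: Callable[[Path], Tree]) -> Tree:
    """Return the tree for `doc`, calling `build` only when the document's bytes change."""
    digest = hashlib.sha256(doc.read_bytes()).hexdigest()[:16]
    cache_file = cache_dir / f"{doc.stem}.{digest}.json"  # one JSON file per document version
    if cache_file.exists():
        return json.loads(cache_file.read_text(encoding="utf-8"))
    tree = build(doc)  # the expensive, token-consuming step
    cache_dir.mkdir(parents=True, exist_ok=True)
    cache_file.write_text(json.dumps(tree, indent=2), encoding="utf-8")
    return tree


# Usage with a hypothetical stand-in builder; a real one would run PageIndex on the file.
build_calls: List[str] = []


def fake_build(doc: Path) -> Tree:
    build_calls.append(doc.name)
    return {"title": doc.stem, "nodes": []}


with tempfile.TemporaryDirectory() as tmp:
    report = Path(tmp) / "report.pdf"
    report.write_bytes(b"version 1")
    first = cached_tree(report, Path(tmp) / "trees", fake_build)
    second = cached_tree(report, Path(tmp) / "trees", fake_build)  # served from the cache
    report.write_bytes(b"version 2")
    third = cached_tree(report, Path(tmp) / "trees", fake_build)   # content changed: rebuilt

print(len(build_calls))
```

Keying on content rather than file name means an edited document gets a fresh tree, while older versions stay on disk for comparison.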

Troubleshooting

- Flat tree structure: Check whether the PDF is scanned; try OCR preprocessing
- Irrelevant retrieval results: Reduce the max-pages-per-node value for finer granularity
- Slow processing: Specify a faster, lighter model via --model (the default is gpt-4o-2024-11-20), possibly at some cost to tree quality

One-Page Overview

PageIndex at a Glance:

- Purpose: Reasoning-driven RAG framework for long documents, no vector database required
- Innovation: Tree structure index + LLM tree search, simulating human expert reading
- Performance: 98.7% accuracy on finance benchmarks, fully explainable retrieval paths
- Deployment: Self-hosted open-source, cloud platform, MCP, API
- Use Cases: Financial reports, legal documents, technical manuals, academic papers
- Limitations: Scanned PDF quality depends on OCR, Markdown requires native hierarchy
- Cost: Main expense is one-time tree generation; subsequent queries are low-cost

Quick start commands:

```bash
pip3 install -r requirements.txt
echo "CHATGPT_API_KEY=your_key" > .env
python3 run_pageindex.py --pdf_path your_doc.pdf
```

Frequently Asked Questions

1. What’s the biggest performance difference between PageIndex and traditional RAG?

The core difference is retrieval accuracy. Traditional RAG relies on vector similarity and achieves 70-80% accuracy on professional long documents. PageIndex uses reasoning-driven retrieval and reaches 98.7% on financial benchmarks. The gap comes from matching surface similarity versus reasoning about what the question actually asks.

2. Without vector databases, how does it scale to large document collections?

PageIndex doesn’t compare all documents at runtime. Instead, it pre-generates a tree structure for each document. During queries, the system performs reasoning search on each tree sequentially (or in parallel). While per-query cost is slightly higher, you eliminate vector index build and maintenance overhead, resulting in a simpler overall system.
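The parallel variant can be sketched minimally, assuming a hypothetical `search_one(tree, query)` callable that returns a relevance score for one document. In practice, that callable would wrap an LLM-guided tree search like the one described in Stage Two. Threads fit here because per-tree searches spend most of their time waiting on API calls. `overlap_score` is an offline stand-in, not PageIndex behavior.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Callable, Dict, List, Tuple

Tree = Dict[str, Any]


def search_all(trees: Dict[str, Tree], query: str,
               search_one: Callable[[Tree, str], float],
               workers: int = 4) -> List[Tuple[str, float]]:
    """Score every document tree concurrently and rank documents from best to worst."""
    names = list(trees)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        scores = list(pool.map(lambda name: search_one(trees[name], query), names))
    return sorted(zip(names, scores), key=lambda hit: hit[1], reverse=True)


def overlap_score(tree: Tree, query: str) -> float:
    """Offline stand-in for a per-tree LLM search: share of query words in the root summary."""
    words = set(query.lower().split())
    return len(words & set(tree["summary"].lower().split())) / len(words)


trees = {
    "10-K 2023": {"summary": "annual report with capital expenditure by segment"},
    "Proxy 2023": {"summary": "executive compensation and board elections"},
}
print(search_all(trees, "capital expenditure by segment", overlap_score))
```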

3. How do I ensure the generated tree structure is high quality? What optimization methods exist?

Quality depends on document structure clarity and LLM comprehension. Optimization methods include: adjusting --toc-check-pages to help identify chapter boundaries, setting --max-pages-per-node to control granularity, and using higher-quality PDFs (non-scanned). For critical applications, manual sampling of tree structure quality is recommended.

4. Does PageIndex support models besides OpenAI? How to configure?

The codebase defaults to OpenAI GPT models, but you can integrate any LLM supporting structured output by modifying the model call logic in run_pageindex.py. The key requirement is the model must understand hierarchical structures and generate formatted JSON.

5. What’s the recommended approach for processing extremely long documents (e.g., 1000-page technical manuals)?

Split the document into logical modules (by volume, section, or function) and generate separate trees. Then build a top-level “directory tree” pointing to each subtree. This maintains retrieval precision while controlling per-run token consumption.
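Building the top-level “directory tree” can be as simple as placing each volume’s tree under one root. The following sketch assumes the node schema shown earlier. The `source` field is a hypothetical addition, not part of PageIndex’s output. It records which file each node came from, because page numbers restart in every volume. The volume names and page counts are illustrative.

```python
from typing import Any, Dict

Tree = Dict[str, Any]


def tag_source(node: Tree, source: str) -> Tree:
    """Copy a subtree, recording on every node which file it came from."""
    copy = {key: value for key, value in node.items() if key != "nodes"}
    copy["source"] = source  # page numbers restart in each file, so keep the file name
    if "nodes" in node:
        copy["nodes"] = [tag_source(child, source) for child in node["nodes"]]
    return copy


def build_directory(title: str, volumes: Dict[str, Tree]) -> Tree:
    """Place each volume's tree under a single top-level root, in the given order."""
    return {"title": title, "nodes": [tag_source(tree, src) for src, tree in volumes.items()]}


volumes = {
    "vol1_operation.pdf": {"title": "Operation", "start_index": 1, "end_index": 520},
    "vol2_troubleshooting.pdf": {
        "title": "Troubleshooting", "start_index": 1, "end_index": 480,
        "nodes": [{"title": "Start-up Issues", "start_index": 12, "end_index": 40}],
    },
}
manual = build_directory("Engine Model X Manual", volumes)
print([(n["title"], n["source"]) for n in manual["nodes"]])
```

The input trees are copied rather than modified, so each per-volume JSON file can still be used on its own.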

6. How does PageIndex meet audit requirements in highly regulated sectors like finance and law?

Every retrieval result includes complete node access paths and page citations, creating an audit trail. You can precisely trace “answer came from page X, section Y,” which meets compliance needs better than traditional RAG’s “similarity scores.”
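Turning a page hit into a citation of the form “page X, section Y” only needs the tree itself. `path_to_page` and `cite` are hypothetical helpers written against the node schema shown earlier, using the Federal Reserve example. Children are checked before the parent because a parent’s own page range can be narrower than its children’s, as it is in that example. A page shared by two ranges is attributed to the first match.

```python
from typing import Any, Dict, List, Optional

Node = Dict[str, Any]


def path_to_page(node: Node, page: int) -> Optional[List[Node]]:
    """Chain of nodes from `node` down to the most specific node whose range contains `page`."""
    for child in node.get("nodes", []):  # prefer the deepest match
        sub_path = path_to_page(child, page)
        if sub_path:
            return [node] + sub_path
    if node["start_index"] <= page <= node["end_index"]:
        return [node]
    return None


def cite(root: Node, page: int) -> str:
    """Human-readable citation: section path, node_id and the node's page range."""
    path = path_to_page(root, page)
    if path is None:
        return f"page {page}: not covered by this tree"
    leaf = path[-1]
    trail = " > ".join(node["title"] for node in path)
    return f"page {page}: {trail} (node {leaf['node_id']}, pp. {leaf['start_index']}-{leaf['end_index']})"


fed = {
    "title": "Financial Stability", "node_id": "0006", "start_index": 21, "end_index": 22,
    "nodes": [
        {"title": "Monitoring Financial Vulnerabilities", "node_id": "0007",
         "start_index": 22, "end_index": 28},
        {"title": "Domestic and International Cooperation", "node_id": "0008",
         "start_index": 28, "end_index": 31},
    ],
}
print(cite(fed, 25))
```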

7. If the source document updates after tree generation, what’s the update mechanism?

There is currently no incremental update mechanism: document changes require full tree regeneration. We recommend storing tree structures under version control alongside the document versions they were generated from. For frequently updated documents, consider scheduled automated regeneration.

8. What’s the primary cost structure when using PageIndex? How to estimate?

The main cost is LLM token consumption during tree generation, which is proportional to document length and node granularity. A 100-page document using GPT-4o costs approximately $0.10 per query. Compared to the human cost of maintaining vector databases, total cost of ownership is often lower.

Image source: PageIndex Official Documentation

Final thought: PageIndex reminds us that AI system evolution sometimes isn’t about stacking more complex components, but about returning to first principles. While the industry drills deeper into vector dimensions, PageIndex chooses a seemingly “contrarian” path that’s actually closer to human cognition. For teams building document intelligence systems, my advice is to temporarily set aside all assumptions about embeddings and vector search and ask one question: “How would a human expert find this answer, step by step?” If your technical solution can faithfully simulate that process, you’re on the right track.