KAT-Dev-32B & KAT-Coder: Reshaping Code Intelligence Through Scalable Agentic RL

It’s late at night, you’re staring at a complex bug that refuses to be solved, your coffee has gone cold for the third time, and the deadline is tomorrow morning. Every developer has lived this scenario. That may be about to change.

In the world of software development, we’ve been searching for that intelligent assistant that truly understands our intent. Not simple code completion, not mechanical pattern matching, but a partner that can genuinely participate in thinking, understand context, and even proactively identify problems.

Today, that vision takes a significant leap forward.

A New Milestone in Code Intelligence: From Assistant to Partner

Imagine an AI partner that understands your vague requirement descriptions, automatically calls appropriate tools, and delivers complete functionality within just a few conversation turns. This is no longer science fiction—Kuaishou AI’s open-source KAT-Dev-32B and its flagship version KAT-Coder are turning this vision into reality.

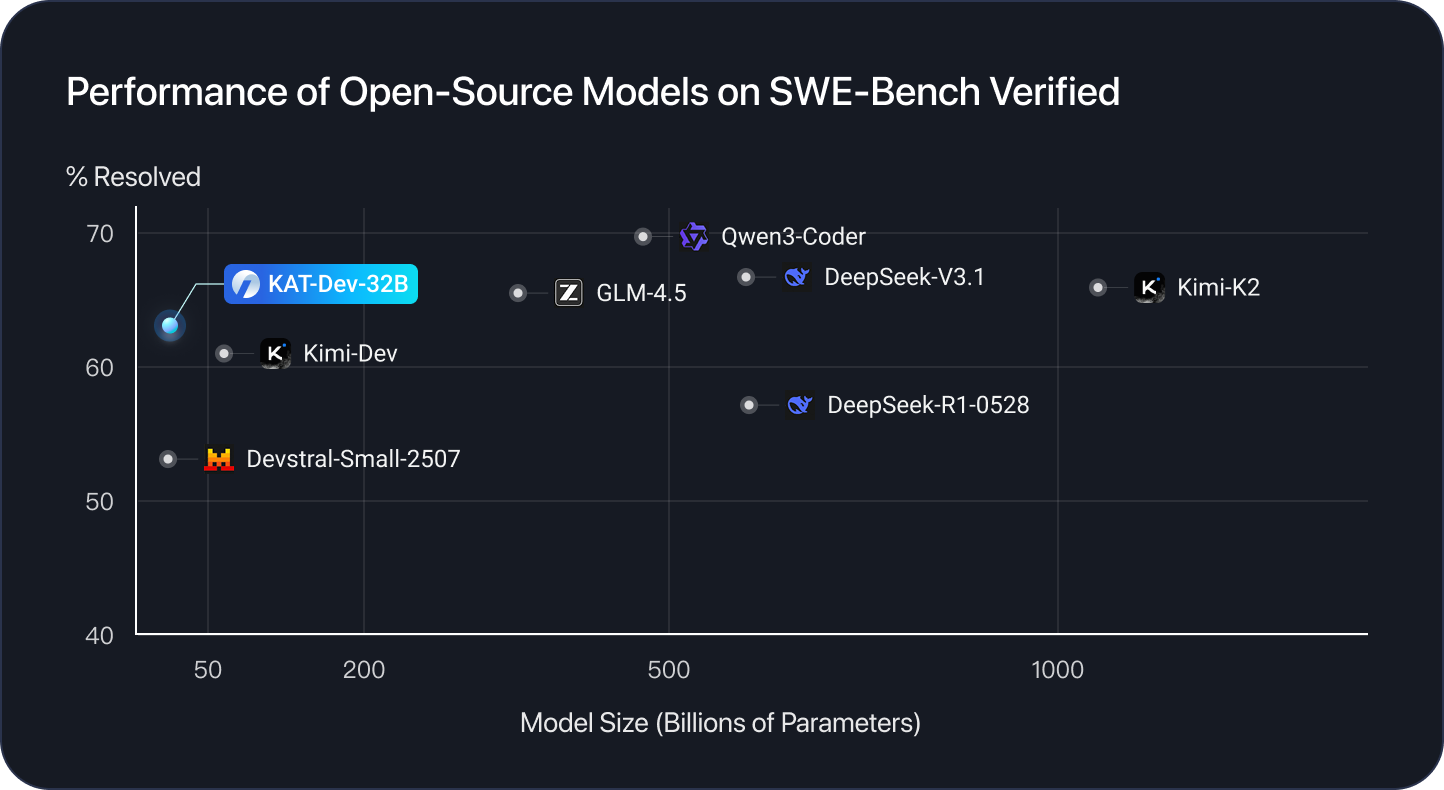

As the newest member of the open-source community, KAT-Dev-32B achieves a 62.4% resolution rate on the SWE-Bench Verified benchmark, ranking fifth among all open-source models. Its bigger brother, KAT-Coder, reaches an impressive 73.4%, marking the official entry of code intelligence models into practical application.

But the story behind the numbers is more compelling: these models didn’t achieve breakthroughs through simple scaling, but through a novel training paradigm—scalable Agentic Reinforcement Learning.

Three-Stage Training: From Foundational Skills to Autonomous Intelligence

Mid-Training: Building Agent Fundamentals

Traditional code model training often starts directly with code data, but the KAT team discovered a key insight: foundational capabilities determine the ceiling.

During the mid-training phase, they infused the model with six core capabilities:

- Tool-use capability: Real interaction data for thousands of tools executed in sandbox environments
- Multi-turn dialogue understanding: Constructed dialogues spanning hundreds of turns among humans, assistants, and tools
- Domain knowledge injection: Integrated high-quality programming expertise
- Real development workflows: Incorporated extensive Pull Request data from Git repositories
- Instruction following: Collected 30+ categories of common user instructions
- General reasoning ability: Enhanced reasoning and problem-solving across domains

“It’s like training an intern,” the team analogizes in their technical report. “First familiarize them with all tools and workflows, rather than throwing them directly into a project.”

Supervised Fine-Tuning: From Theory to Practice

With foundational capabilities established, the next step was practical training. The team meticulously designed eight task types and eight programming scenarios to ensure the model could handle real-world complexity.

Eight task types covering the full development lifecycle:

- Feature implementation and enhancement
- Bug fixing and refactoring
- Performance optimization and test case generation
- Code understanding and configuration deployment

Eight programming scenarios ensuring professional coverage:

- Application development and UI/UX engineering
- Data science and machine learning
- Database systems and infrastructure
- Specialized domains and security engineering

This broad coverage keeps the model from excelling in a few areas while underperforming in others.

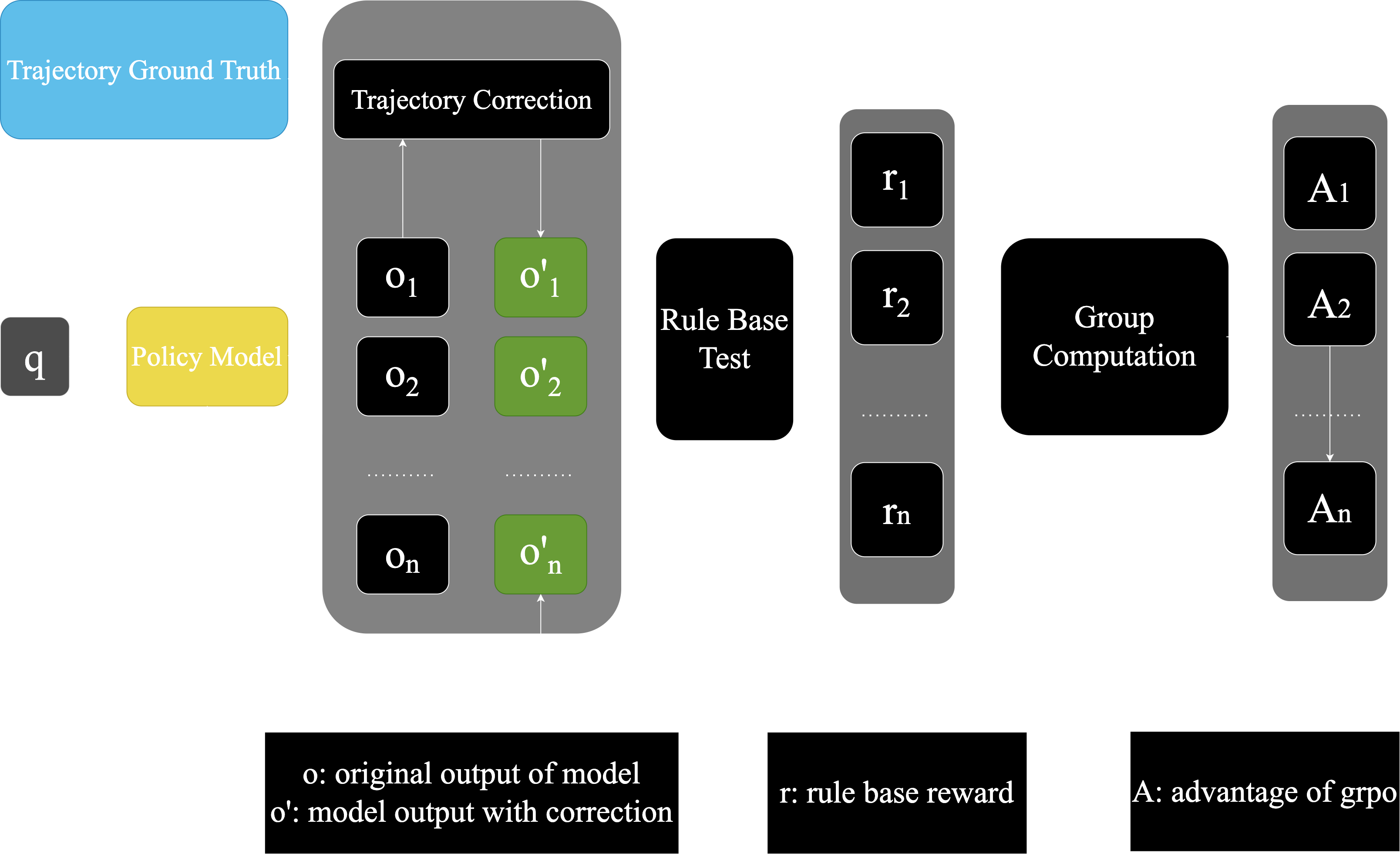

Reinforcement Fine-Tuning: The Gentle Guidance of a Driving Instructor

This represents one of the most innovative aspects of the KAT training pipeline. Before traditional reinforcement learning, they introduced an RFT (Reinforcement Fine-Tuning) stage.

Imagine learning to drive: after getting your license, you don’t immediately hit the highway alone—you have an experienced instructor in the passenger seat. RFT is that instructor.

By incorporating “teacher trajectories” annotated by human engineers, the model receives correct behavior demonstrations before beginning autonomous exploration. This approach not only improves performance but, more importantly, significantly stabilizes subsequent RL training.

Agentic RL Scaling: The Technical Revolution in Agent Training

If the first three stages lay the foundation, Agentic RL Scaling is what truly makes the model “get it.”

Three Challenges and Elegant Solutions

Scaling agentic reinforcement learning faces three core challenges, and the KAT team found innovative solutions for each:

1. Learning efficiency over nonlinear trajectory histories

- Solution: Prefix caching mechanism to avoid redundant computations

2. Leveraging intrinsic model signals

- Solution: Entropy-based trajectory pruning to retain the most informative nodes

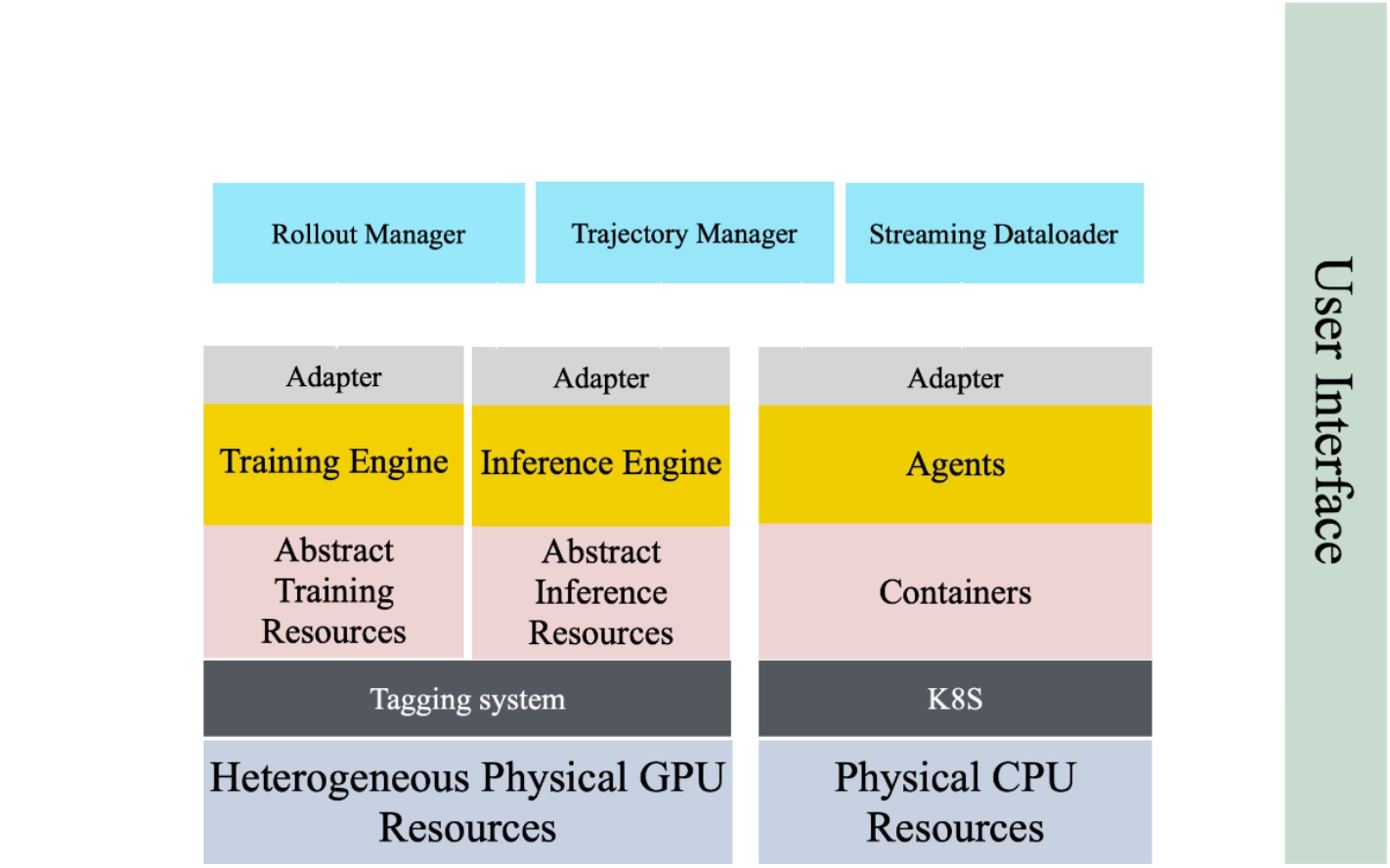

3. Building high-throughput infrastructure

- Solution: SeamlessFlow architecture for complete decoupling of agents and training
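The first solution, prefix caching, can be illustrated with a toy example. This is a minimal sketch and not the team's implementation: the `PrefixCache` class is hypothetical, and a rolling hash stands in for the per-token forward pass whose results a real system would keep in a KV cache. It only shows why trajectories that share a prefix need fewer per-token computations.

```python
from typing import Dict, List, Tuple


class PrefixCache:
    """Toy stand-in for a KV cache: maps a token prefix to a precomputed state."""

    def __init__(self) -> None:
        # The empty prefix is always cached, so a lookup can always succeed.
        self._states: Dict[Tuple[int, ...], int] = {(): 0}
        self.tokens_computed = 0  # number of per-token steps actually executed

    def _step(self, state: int, token: int) -> int:
        # Hypothetical per-token computation (a rolling hash, not a model call).
        self.tokens_computed += 1
        return (state * 31 + token) % 1_000_003

    def encode(self, tokens: List[int]) -> int:
        # Find the longest prefix of `tokens` that is already cached.
        start = len(tokens)
        while tuple(tokens[:start]) not in self._states:
            start -= 1
        state = self._states[tuple(tokens[:start])]
        # Compute only the uncached suffix, caching every new prefix on the way.
        for i in range(start, len(tokens)):
            state = self._step(state, tokens[i])
            self._states[tuple(tokens[: i + 1])] = state
        return state


# Three trajectories that branch from shared prefixes.
trajectories = [[1, 2, 3, 4], [1, 2, 3, 5, 6], [1, 2, 7]]
cache = PrefixCache()
states = [cache.encode(t) for t in trajectories]
naive_tokens = sum(len(t) for t in trajectories)
print(f"computed {cache.tokens_computed} of {naive_tokens} token steps")
```

In this example only 7 of the 12 token steps are executed, because the second and third trajectories reuse the cached states for `[1, 2, 3]` and `[1, 2]`.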

Entropy-Based Tree Pruning: The Core Algorithm for Intelligent Training

Even with the above techniques, training all tokens across the full tree structure remains prohibitively expensive. The team developed an entropy-based pruning mechanism:

“We prune the trajectory tree like a gardener pruning fruit trees,” explains the technical lead. “We only keep the branches that bear the most fruit.”

Specifically, they compress trajectories into a prefix tree where each node represents a shared prefix and each edge corresponds to token segments. Under fixed computational budgets, the system estimates node informativeness based on entropy signals and reach probability, expanding nodes in order of importance until the budget is exhausted.
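The budgeted expansion described above can be sketched as a greedy, priority-queue traversal. This is only an illustration under stated assumptions: the `Node` fields, the `importance` score (entropy multiplied by reach probability), and the rule of skipping a node that does not fit the remaining budget are all hypothetical choices, since the article does not give the exact formula.

```python
import heapq
import itertools
from dataclasses import dataclass, field
from typing import List


@dataclass
class Node:
    name: str
    num_tokens: int      # length of the token segment on the edge into this node
    entropy: float       # assumed entropy signal for that segment
    reach_prob: float    # assumed probability of reaching this node from the root
    children: List["Node"] = field(default_factory=list)


def importance(node: Node) -> float:
    # Assumption: informativeness is entropy weighted by reach probability.
    return node.entropy * node.reach_prob


def prune(root_children: List[Node], budget: int) -> List[Node]:
    """Keep nodes in order of importance until the token budget is exhausted."""
    tie = itertools.count()  # tie-breaker so the heap never compares Node objects
    heap = [(-importance(n), next(tie), n) for n in root_children]
    heapq.heapify(heap)
    kept: List[Node] = []
    spent = 0
    while heap and spent < budget:
        _, _, node = heapq.heappop(heap)
        if spent + node.num_tokens > budget:
            continue  # does not fit: drop this node together with its subtree
        kept.append(node)
        spent += node.num_tokens
        # A child becomes a candidate only once its parent prefix is kept.
        for child in node.children:
            heapq.heappush(heap, (-importance(child), next(tie), child))
    return kept


# Hypothetical tree: shared prefix A, followed by branches B and C.
b = Node("B", num_tokens=4, entropy=1.5, reach_prob=0.6)
c = Node("C", num_tokens=5, entropy=0.4, reach_prob=0.4)
a = Node("A", num_tokens=3, entropy=0.2, reach_prob=1.0, children=[b, c])

kept = prune([a], budget=8)
print([n.name for n in kept])
```

With a budget of 8 tokens, the sketch keeps `A` and the high-entropy branch `B`, and prunes `C` because it no longer fits.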

Real-World Data: From Open Source to Enterprise Codebases

Unlike models trained solely on GitHub repositories, the KAT team went further. Beyond open-source data, they collected and utilized enterprise-grade codebases from real industrial systems.

“Public repositories often contain simpler projects,” the team notes. “These large-scale, complex codebases—spanning multiple programming languages and representing genuine business logic—expose models to significantly more challenging development scenarios.”

This exposure to real-world complexity is key to the model’s outstanding performance in industrial environments.

Getting Started with KAT: From Open Source to Production

Option 1: Embrace Open Source—KAT-Dev-32B

For most developers and researchers, KAT-Dev-32B offers the best entry point. The model is fully open-sourced on Hugging Face:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "Kwaipilot/KAT-Dev"

# Load tokenizer and model
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(
    model_name,
    torch_dtype="auto",
    device_map="auto"
)

# Prepare model input
prompt = "Implement a Python function to calculate the nth Fibonacci number"
messages = [{"role": "user", "content": prompt}]
text = tokenizer.apply_chat_template(
    messages,
    tokenize=False,
    add_generation_prompt=True,
)
model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

# Generate code
generated_ids = model.generate(
    **model_inputs,
    max_new_tokens=512
)
output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()
content = tokenizer.decode(output_ids, skip_special_tokens=True)

print("Generated code:", content)
```

For scenarios requiring higher performance, you can deploy with vLLM:

```bash
MODEL_PATH="Kwaipilot/KAT-Dev"

vllm serve $MODEL_PATH \
    --enable-prefix-caching \
    --tensor-parallel-size 8 \
    --tool-parser-plugin $MODEL_PATH/qwen3coder_tool_parser.py \
    --chat-template $MODEL_PATH/chat_template.jinja \
    --enable-auto-tool-choice --tool-call-parser qwen3_coder
```
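Once the server is running, it can be queried through vLLM's OpenAI-compatible HTTP API. The following is a minimal sketch using only the Python standard library. It assumes the server listens on vLLM's default address `http://localhost:8000` and serves the model under the name `Kwaipilot/KAT-Dev`; the helper names `build_chat_request` and `chat` are illustrative and not part of any library.

```python
import json
import urllib.request


def build_chat_request(
    prompt: str,
    base_url: str = "http://localhost:8000/v1",  # assumed default vLLM address
    model: str = "Kwaipilot/KAT-Dev",            # assumed served model name
    max_tokens: int = 512,
) -> urllib.request.Request:
    """Build a POST request for the OpenAI-compatible chat completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, **kwargs) -> str:
    """Send the request and return the assistant's reply (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


# Building the request does not contact the server; call chat(...) to send it.
request = build_chat_request(
    "Implement a Python function to calculate the nth Fibonacci number"
)
print(request.full_url)
```

Calling `chat(...)` with the same prompt sends the request and returns the generated text, provided the server from the command above is reachable.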

Option 2: Ultimate Performance—KAT-Coder API

For enterprise users and developers needing top-tier performance, KAT-Coder is available via API through the StreamLake platform:

```bash
# Install Claude Code client
npm install -g @anthropic-ai/claude-code

# Configure API endpoint (replace with your Endpoint ID and API Key)
export ANTHROPIC_BASE_URL=https://wanqing.streamlakeapi.com/api/gateway/v1/endpoints/ep-xxx-xxx/claude-code-proxy
export ANTHROPIC_AUTH_TOKEN=YOUR_API_KEY
```

The team also recommends claude-code-router—a third-party routing utility that enables flexible switching between different backend APIs.

Emergent Behaviors: When Models Start “Thinking”

During Agentic RL Scaling, the team observed some pleasantly surprising emergent behaviors:

32% Reduction in Multi-turn Interactions

After RL training, the model required nearly one-third fewer average interaction turns to complete tasks compared to the post-SFT model. “It’s like the model learned to get straight to the heart of problems,” researchers commented. “It no longer needs as much back-and-forth confirmation.”

Parallel Tool Calling

Even more impressive, the model began demonstrating the ability to call multiple tools in parallel, breaking through traditional sequential calling patterns.

The team offers a theoretical explanation for this phenomenon: in the trajectory tree, parallel tool calling creates additional branches. These branches are processed independently during training, and the long-term entropy pruning mechanism retains nodes with higher information content. Nodes with multiple tool calls typically exhibit higher entropy, so they tend to survive pruning.
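To make parallel tool calling concrete, here is a minimal sketch of an agent loop dispatching several tool calls from a single assistant turn at once. It assumes the OpenAI-style `tool_calls` message format; the tools `read_file` and `run_tests` and the helper `execute_tool_calls` are hypothetical stand-ins, not part of KAT's released tooling.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


# Hypothetical tools; a real agent would touch the file system or a sandbox.
def read_file(path: str) -> str:
    return f"<contents of {path}>"


def run_tests(target: str) -> str:
    return f"ran tests in {target}"


TOOLS: Dict[str, Callable[..., str]] = {"read_file": read_file, "run_tests": run_tests}

# One assistant turn that requests two tools at once (OpenAI-style format).
assistant_message = {
    "role": "assistant",
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "read_file", "arguments": json.dumps({"path": "app.py"})}},
        {"id": "call_2", "type": "function",
         "function": {"name": "run_tests", "arguments": json.dumps({"target": "tests/"})}},
    ],
}


def execute_tool_calls(message: dict) -> List[dict]:
    """Run every tool call in the message concurrently, preserving call order."""

    def run(call: dict) -> dict:
        fn = TOOLS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])
        return {"role": "tool", "tool_call_id": call["id"], "content": fn(**args)}

    with ThreadPoolExecutor() as pool:
        # pool.map returns results in the same order as the input calls.
        return list(pool.map(run, message["tool_calls"]))


tool_messages = execute_tool_calls(assistant_message)
for msg in tool_messages:
    print(msg["tool_call_id"], "->", msg["content"])
```

With sequential calling, the same work would take two assistant turns. Here both results return to the model after a single turn, which is one way the number of interaction turns can drop.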

Real-World Cases: From Concept to Implementation

Starry Sky Animation Generation

From simple descriptions, KAT-Coder can generate complete starry sky animation code, including particle systems, motion trajectories, and interaction logic.

Classic Game Recreation

The team tested the model’s ability to recreate classic games—from game logic to physics engines, from UI design to performance optimization, the model demonstrated comprehensive understanding.

Future Roadmap: The Next Frontier of Code Intelligence

The KAT team’s vision extends far beyond current achievements. Their roadmap includes four key directions:

Deep Tool Integration

Deep integration with mainstream IDEs, version control systems, and development workflows to create seamless coding experiences.

Multi-Language Expansion

Coverage of emerging programming languages and frameworks to ensure comprehensive language support.

Collaborative Coding Systems

Exploration of multi-agent systems where KAT models can collaborate on complex software projects.

Multimodal Code Intelligence

Integration of visual understanding capabilities to process architecture diagrams, UI designs, debugging screenshots, and documentation images.

Frequently Asked Questions

Q: What’s the main difference between KAT-Dev-32B and KAT-Coder?

A: KAT-Dev-32B is the open-source model, with a 62.4% resolution rate on SWE-Bench Verified; KAT-Coder is the closed-source flagship, with a 73.4% resolution rate, available via API.

Q: What configuration is needed to run KAT-Dev-32B locally?

A: A GPU with at least 80GB of VRAM (such as an A100 or H100) is recommended, along with vLLM for inference acceleration. Resource-constrained users can consider quantized versions or cloud services.

Q: Which programming languages does the model support?

A: The model currently supports mainstream languages including Python, JavaScript, Java, C++, and Go, with more being added over time.

Q: How do I get API access to KAT-Coder?

A: Apply for API keys through the StreamLake Wanqing platform, following the official documentation process.

Q: Does the training data include enterprise-sensitive code?

A: All enterprise codebases used in training undergo strict anonymization and desensitization processes, ensuring no sensitive information is included.

Conclusion: The Dawn of a New Developer Era

The release of the KAT model series is more than the arrival of another AI model: it marks the transition of code intelligence from simple assistance tools to genuine intelligent partners. Through scalable agentic RL training, we’re seeing AI that can understand complex tasks, proactively use tools, and even optimize its own workflows.

As one early tester shared: “It doesn’t feel like conversing with a tool, but like collaborating with a senior developer who understands your intent.”

In this new era, developers are evolving from code writers to problem definers and solution architects. Partners like KAT will help us focus on creative work, delegating repetitive coding tasks to AI companions that understand our intentions.

Start your intelligent coding journey: Hugging Face | StreamLake