GLM-5 vs. Kimi K2.5: A Deep Dive into China’s Open-Source AI Rivalry and Hardware Independence

「The Core Question This Article Answers:」 With two frontier open-source models emerging from China within weeks of each other, how do GLM-5 and Kimi K2.5 differ in architecture, agent capabilities, and strategic value, and which one should developers choose?

In the span of just 14 days, the AI landscape was presented with two major open-weight frontier models. Both hail from China. Both are MIT-licensed. Yet, beneath the surface similarities, they represent fundamentally different bets on the future of artificial intelligence.

I spent a full day analyzing the technical specifications, benchmarks, architecture, and pricing of both models. What I found wasn’t just a comparison of specs, but a fork in the road for AI development: one path prioritizes deep reasoning and hardware sovereignty, while the other champions multimodal versatility and agent swarms.

Here is everything you need to know to make an informed decision.

The Basics: Setting the Stage

「The Core Question:」 What are the fundamental specifications defining these two heavyweights?

Let’s start with the scorecard. 「GLM-5」 is the product of Zhipu AI, a Tsinghua University spinoff that recently raised $558 million in its Hong Kong IPO. It is a colossal model, boasting 745 billion parameters, with 44 billion active per token, and a 200K context window.

「Kimi K2.5」 comes from Moonshot AI in Beijing. It pushes the scale even further with 1 trillion parameters, though it activates 32 billion per token, supported by a larger 256K context window. The critical differentiator here is that Kimi K2.5 is natively multimodal—it is designed to “see” images and video, not just process text.

Both utilize Mixture of Experts (MoE) architectures and are open-weight, costing a fraction of their Western counterparts like OpenAI or Anthropic. But this is where the similarities end.

Architecture Bet: Scalpel vs. Swiss Army Knife

「The Core Question:」 How does the underlying architectural design influence practical task performance?

Diving into the MoE configurations reveals a stark contrast in design philosophy.

GLM-5 activates more compute per token (44B vs. 32B). It employs fewer experts, but each one “works harder.” This architectural choice results in robust performance on structured, sequential tasks. Think of coding challenges, step-by-step mathematical reasoning, or long agentic sessions where consistency is non-negotiable.

「Practical Scenario:」 Imagine debugging a complex codebase over a long session. GLM-5 acts like a sharpened scalpel, maintaining logical coherence and precision without drifting off course.

Kimi K2.5, conversely, spreads its intelligence across 384 experts (compared to GLM-5’s 256). To compensate for the lower active parameter count, it integrates a dedicated 400M parameter vision encoder named MoonViT. Crucially, it was trained on 15 trillion mixed visual and text tokens from day one. Vision isn’t an afterthought; it is native to the model.

「Practical Scenario:」 If your workflow involves analyzing UI designs to generate code, or extracting insights from video data, Kimi K2.5 is the “Swiss Army knife” that can handle the visual input natively, unlike the text-centric GLM-5.

Benchmarks: Reading Between the Lines

「The Core Question:」 How reliable are the current performance metrics, and what do they tell us?

We must approach these numbers with a giant asterisk. GLM-5 launched very recently, meaning independent third-party benchmarks are still incoming. Most current data is derived from Zhipu’s press materials or extrapolated from its predecessor, GLM-4.7. Kimi K2.5, having been out for two weeks, has solid community-verified numbers.

Here is what the data shows so far:

-

「SWE-bench Verified (Coding):」 Kimi scores an impressive 76.8%. GLM-5 is estimated around 76%, based on the trajectory of GLM-4.7 (73.8%). Essentially, it’s a tie. -

「HLE with Tools (Reasoning):」 Kimi leads with 50.2%. GLM-4.7 scored 42.8%, and while GLM-5 is expected to improve, the numbers aren’t finalized. -

「BrowseComp (Web Agents):」 Kimi hits 74.9%, flexing its “Agent Swarm” capabilities. GLM-5 data is pending. -

「AIME 2025 (Math):」 Another near-tie. Kimi sits at 96.1%, while GLM-4.7 was at 95.7%.

Reuters reports that GLM-5 “approaches Claude Opus 4.5 on coding benchmarks.” While promising, I recommend waiting for independent community verification expected in the coming weeks before drawing final conclusions.

The Agentic Split: Depth vs. Width

「The Core Question:」 Which model handles complex agent workflows better?

This is the most significant divergence between the two.

「Kimi K2.5: The Orchestrator」

Its signature feature is “Agent Swarm.” It can coordinate up to 100 AI sub-agents working in parallel, handling up to 1,500 tool calls. Trained with Parallel-Agent Reinforcement Learning, it cuts execution time by 4.5x in specific benchmarks.

-

Use Case: You need to conduct a complex market research project. Kimi spins up a researcher, a fact-checker, and an analyst simultaneously. It is ideal for wide searches and batch content creation. However, early tests show that coordination can sometimes be inconsistent, such as agents using slightly different definitions when compiling data.

「GLM-5: The Deep Thinker」

GLM-5 inherits “Preserved Thinking” from GLM-4.7. This feature retains reasoning blocks across multi-turn conversations rather than re-deriving logic from scratch.

-

Use Case: If you have ever had an AI agent “lose the plot” after turn 5 of a deep coding session, GLM-5 is the solution. It is built to maintain sanity over 50+ turns.

「Verdict:」 Building swarms of agents? Go with Kimi. Building one agent that doesn’t lose its mind during deep work? GLM-5 is the answer.

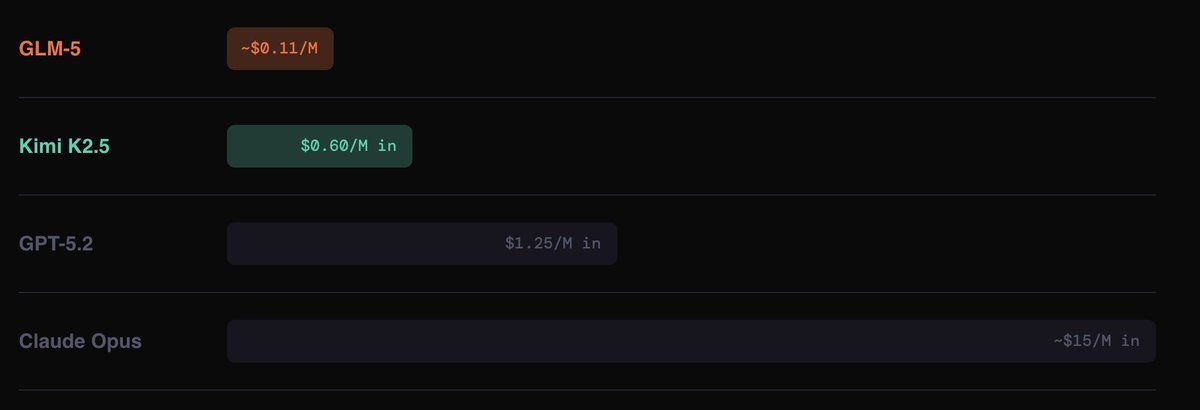

Pricing: The Hidden Variables

「The Core Question:」 Beyond the sticker price, what are the true cost implications?

This is where the comparison gets fascinating.

-

「GLM-5:」 ~$0.11 per million tokens. -

「Kimi K2.5:」 2.50-3.00/M output. -

「GPT-5.2:」 $1.25/M input. -

「Claude Opus:」 ~$15/M input.

GLM-5 is roughly 5x cheaper than Kimi on input and over 100x cheaper than Claude Opus. However, there are two critical trade-offs:

-

「Verbosity:」 Kimi is verbose. Artificial Analysis benchmarks showed it consumed 89 million tokens on an evaluation—2.5x more than comparable models. This effectively raises the “per task” cost. -

「Speed vs. Iterations:」 GLM-5 is slower (17-19 tok/sec) compared to Kimi (39 tok/sec). However, in iterative agent loops, Kimi’s speed might justify its higher per-token cost due to faster convergence.

「Bottom Line:」 For high-volume, single-pass generation (like reports), GLM-5 wins on cost. For iterative loops where speed saves developer time, the math is closer than the sticker price suggests.

The Geopolitical Chip Story: Hardware Independence

「The Core Question:」 Why does GLM-5’s training infrastructure matter for the future of AI?

This section transcends typical benchmark discussions.

GLM-5 was trained entirely on 「Huawei Ascend chips」 using the MindSpore framework. We are talking about a cluster of 100,000 chips with 「zero NVIDIA hardware」 involved.

Context: Zhipu AI was added to the US Entity List in January 2025, cutting them off from NVIDIA H100/H200 chips and US cloud inference. The export controls were designed to slow them down. Instead, they built a domestic 100,000-chip cluster and trained a frontier model that Reuters states approaches Claude Opus in coding.

「Reflection:」

Regardless of one’s political stance, the data point is undeniable: 「Hardware independence at frontier scale is now a demonstrated reality, not just a theory.」

Kimi K2.5, by contrast, likely trained on standard NVIDIA hardware. Moonshot’s priority appears to be model architecture and agent capability rather than hardware sovereignty. These are two valid but distinct strategic priorities.

Who Should Use What?

「The Core Question:」 Based on specific project needs, which model is the correct choice?

「Choose GLM-5 if:」

-

You are optimizing strictly for cost. -

You need structured coding and reasoning with long-context consistency. -

You are building for markets where US supply chain risk is a factor (hardware independence). -

You require extremely long output generation (131K max output).

「Choose Kimi K2.5 if:」

-

You need multi-agent parallel execution. -

Your application relies on visual inputs (screenshot-to-code, video analysis). -

Faster inference speed is a priority. -

You prefer a model with two weeks of independent community testing behind it.

Both are open-weight, run locally, and integrate well with coding environments.

Author’s Reflection: A Fork in the Road

Analyzing these models, I am struck by the maturity of the AI ecosystem. We are moving past the era of “one model to rule them all” into a specialized tooling era.

GLM-5 impresses me not just with its specs, but with its engineering resilience. Building a frontier model on a domestic chip stack while under sanctions is a feat of engineering that redefines the concept of “compute sovereignty.”

Kimi K2.5, on the other hand, feels like a glimpse into the future of work—where AI isn’t just a smart intern, but a project manager orchestrating a team of specialists.

My takeaway? The choice isn’t about which model is “smarter.” It’s about choosing your workflow. Do you need a single, deep-thinking partner (GLM-5) or a fast-moving, multitasking swarm (Kimi)?

Practical Summary & Checklist

| Dimension | GLM-5 | Kimi K2.5 |

|---|---|---|

| 「Core Advantage」 | Ultra-low cost, stable long-form reasoning, hardware sovereignty | Multimodal, Agent Swarms, fast inference |

| 「Best Scenario」 | Long-text generation, deep coding, budget-sensitive projects | Complex task decomposition, visual tasks, rapid iteration |

| 「Hardware Stack」 | 100% Huawei Ascend | Presumed NVIDIA |

| 「Pricing」 | ~$0.11/M tokens | $0.60/M (input) |

「Actionable Checklist:」

-

「Cost Test:」 Run a typical task on both. Calculate the total cost to reach a satisfactory result, not just the per-token price. -

「Consistency Test:」 If building a conversational agent, test GLM-5’s “Preserved Thinking” after 20+ turns. -

「Vision Test:」 If your app handles images, start with Kimi K2.5 to test the MoonViT encoder’s capabilities. -

「Compliance Check:」 Review hardware sourcing requirements; GLM-5 offers a unique non-NVIDIA supply chain path.

One-Page Summary

-

「Two Paths:」 GLM-5 focuses on depth and cost-efficiency (The Scalpel). Kimi K2.5 focuses on multimodal breadth and parallel execution (The Swiss Army Knife). -

「Hardware Breakthrough:」 GLM-5 proves that frontier models can be trained entirely without NVIDIA chips. -

「Agent Strategy:」 Kimi specializes in “Agent Swarms” (parallel execution), while GLM-5 specializes in “Preserved Thinking” (long-context consistency). -

「Cost Reality:」 GLM-5 is unbeatable on sticker price; Kimi may win on iteration speed, though verbosity can increase hidden costs. -

「Final Verdict:」 Choose GLM-5 for cost control and deep reasoning; Choose Kimi for visual tasks and complex orchestration.

FAQ

「1. Is GLM-5 cheaper than Kimi K2.5?」

Yes, significantly. GLM-5 is roughly 0.60/M input. However, Kimi’s faster inference speed might reduce total iteration costs in some development loops.

「2. Can both models process images?」

No. Kimi K2.5 is natively multimodal and can process images and video. GLM-5 is primarily designed for text and code.

「3. Does GLM-5 use NVIDIA chips?」

No. GLM-5 was trained entirely on Huawei Ascend chips, demonstrating hardware independence from US technology.

「4. Which model is better for coding?」

Both score similarly on benchmarks. GLM-5 is better for long, consistent coding sessions due to its “Preserved Thinking.” Kimi is better for quick iterations or tasks requiring visual context (like frontend code from screenshots).

「5. What is “Agent Swarm”?」

It is a feature of Kimi K2.5 that allows the model to coordinate up to 100 sub-agents to work on a task in parallel, significantly speeding up complex workflows.

「6. Are these models open source?」

Yes, both are released under the MIT license with open weights, allowing for local deployment and commercial use.

「7. What is “Preserved Thinking” in GLM-5?」

It is a feature that allows the model to remember reasoning blocks from previous turns in a conversation, preventing the AI from losing context or logic during long interactions.

「8. Are the GLM-5 benchmarks reliable?」

Currently, most GLM-5 benchmarks are from the developer or extrapolated from previous versions. Independent community benchmarks are expected within 1-2 weeks of launch.