Yan Framework: Redefining the Future of Real-Time Interactive Video Generation

1. What is the Yan Framework?

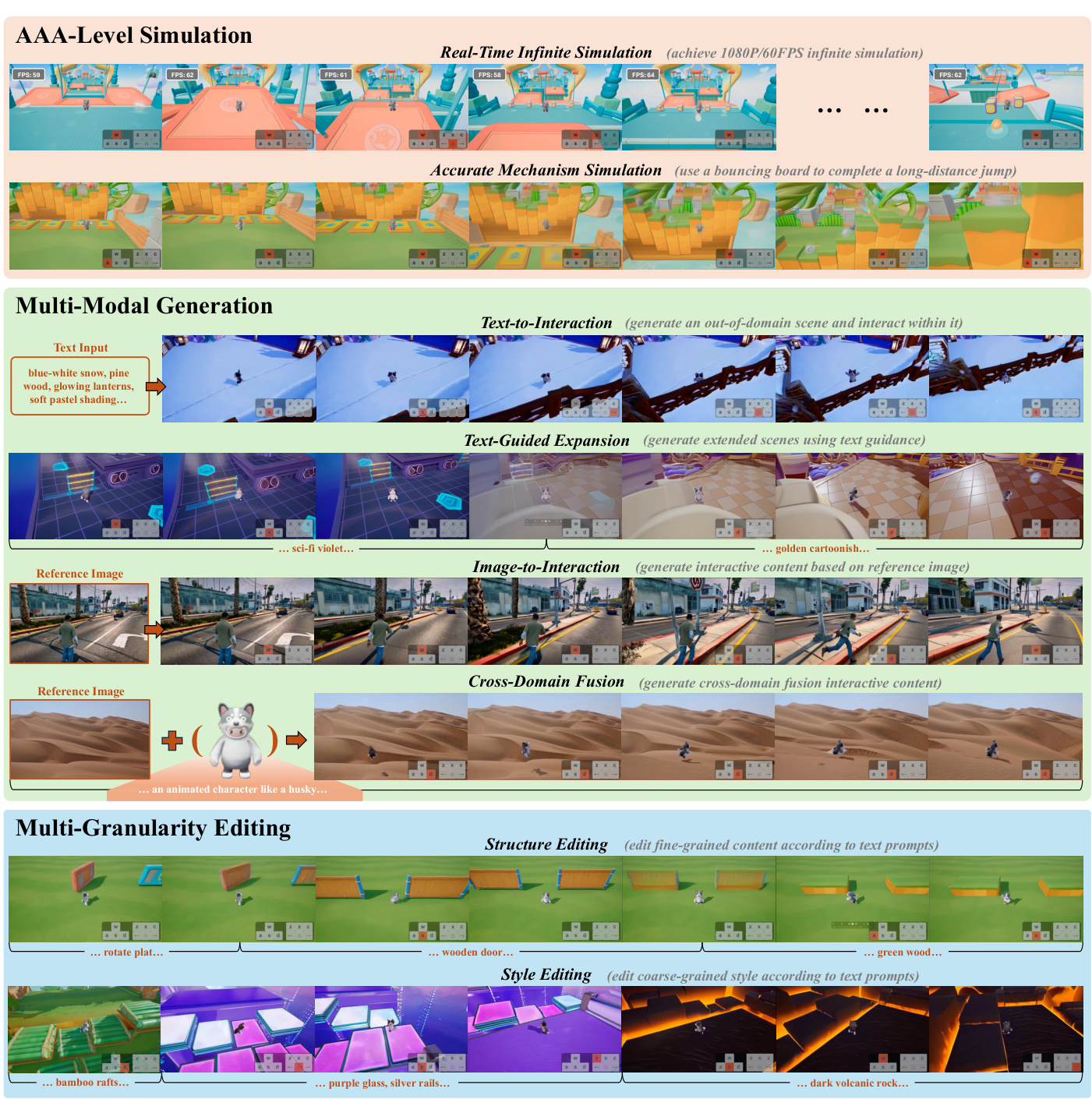

Yan is an interactive video generation framework developed by Tencent’s research team. It breaks through traditional video generation limitations by combining AAA-grade game visuals, real-time physics simulation, and multimodal content creation into one unified system. Through three core modules (high-fidelity simulation, multimodal generation, and multigrained editing), Yan achieves the first complete pipeline for “input command → real-time generation → dynamic editing” in interactive video creation.

Key Innovation: Real-time interaction at 1080P/60FPS with cross-domain style fusion and precise physics simulation.

2. Why Do We Need This Technology?

Traditional video generation faces three major challenges:

3. Yan’s Three Core Modules

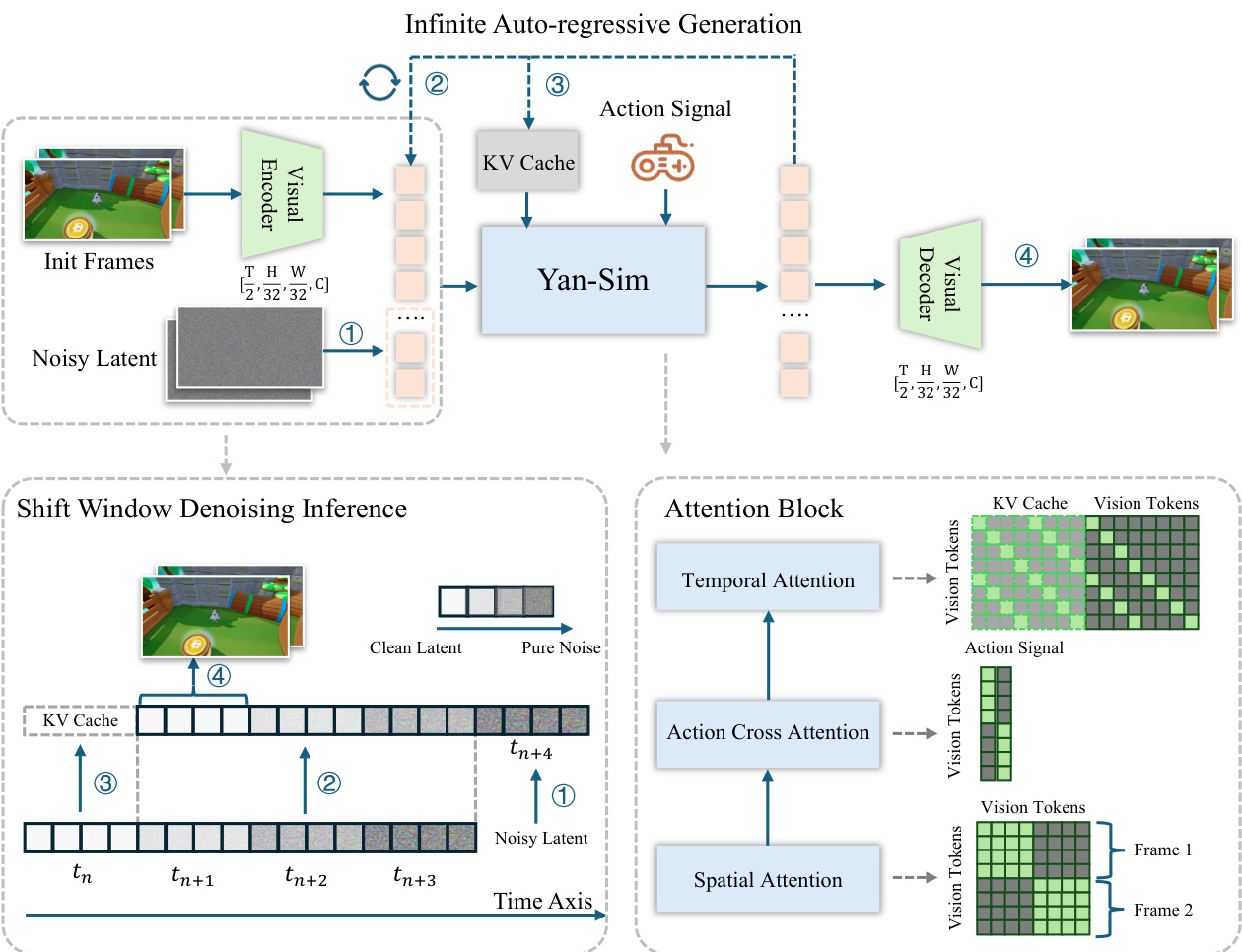

3.1 AAA-Level Simulation Module (Yan-Sim)

Technical Highlights

- Ultra-Compressed VAE Encoder: Achieves 32x spatial compression (vs. the traditional 8x) with expanded channel dimensions (16→256 channels) for a high-fidelity latent space representation.
- Shift Window Denoising: Parallel frame processing through sliding windows, combined with KV caching, reduces redundant computation and achieves 0.07s per-frame latency.
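The effect of caching within a sliding window can be illustrated with a toy example. This is a minimal, framework-free sketch and not Yan's implementation: `WindowedKVCache`, `encode_frame`, and the window size of 4 are hypothetical names and values chosen for illustration. It only shows why keeping the keys and values of recent frames avoids re-encoding history at every step.

```python
from collections import deque


class WindowedKVCache:
    """Toy key/value cache that keeps only the most recent `window` frames."""

    def __init__(self, window: int):
        self.entries = deque(maxlen=window)  # oldest entry is evicted automatically
        self.encode_calls = 0                # counts how often a frame is encoded

    def encode_frame(self, frame_id: int) -> tuple:
        # Stand-in for computing a frame's attention keys and values.
        self.encode_calls += 1
        return (f"k{frame_id}", f"v{frame_id}")

    def step(self, frame_id: int) -> list:
        # Encode only the new frame; earlier frames are served from the cache.
        self.entries.append(self.encode_frame(frame_id))
        return list(self.entries)


cache = WindowedKVCache(window=4)
for frame_id in range(10):
    context = cache.step(frame_id)

# Without a cache, every step would re-encode all frames currently in the window.
naive_calls = sum(min(t + 1, 4) for t in range(10))
print(cache.encode_calls, naive_calls, [k for k, _ in context])
```

In this toy setting the cached version encodes each frame once, while the uncached count grows with the window size at every step.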

Performance Comparison

3.2 Multimodal Generation Module (Yan-Gen)

Key Innovations

- Hierarchical Video Captioning
  - Global Context: Defines static world elements (e.g., “medieval castle with Gothic architecture”)
  - Local Context: Captures dynamic events (e.g., “character jumps over wooden fence with momentum”)
- Action Control Cross-Attention: Action commands injected through dedicated encoders enable frame-level responsiveness (e.g., left key press → character turns left).
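The split between global and local context can be pictured as a small data structure. The sketch below is an illustration only: the `HierarchicalCaption` class, the `[GLOBAL]`/`[LOCAL]` tags, and the second event string are assumptions made for the example, not Yan's actual caption format.

```python
from dataclasses import dataclass, field


@dataclass
class HierarchicalCaption:
    """Global context stays fixed; local context is replaced as events unfold."""
    global_context: str
    local_context: str = ""
    history: list = field(default_factory=list)

    def update_local(self, event: str) -> None:
        # Archive the previous local event, then swap in the new one.
        if self.local_context:
            self.history.append(self.local_context)
        self.local_context = event

    def prompt(self) -> str:
        # Conditioning text: static world description plus the current event.
        return f"[GLOBAL] {self.global_context} [LOCAL] {self.local_context}"


caption = HierarchicalCaption("medieval castle with Gothic architecture")
caption.update_local("character jumps over wooden fence with momentum")
first_prompt = caption.prompt()
caption.update_local("character lands and keeps running")  # hypothetical follow-up event
second_prompt = caption.prompt()
print(first_prompt)
print(second_prompt)
```

Both prompts share the same global description, so the world definition does not drift while the local event changes.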

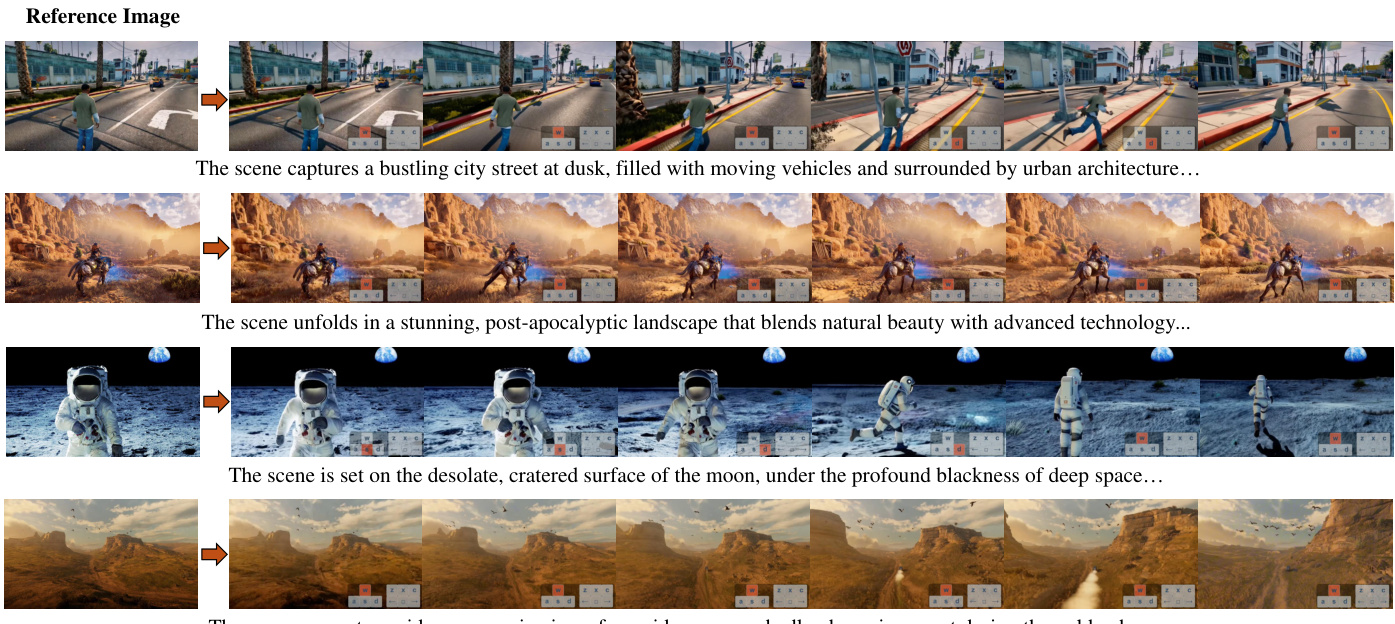

Generation Results

Example: Input “sprint across snowy terrain and leap over railing” generates:

- ✅ Physics-based momentum
- ✅ Snow texture interaction
- ✅ Railing collision detection

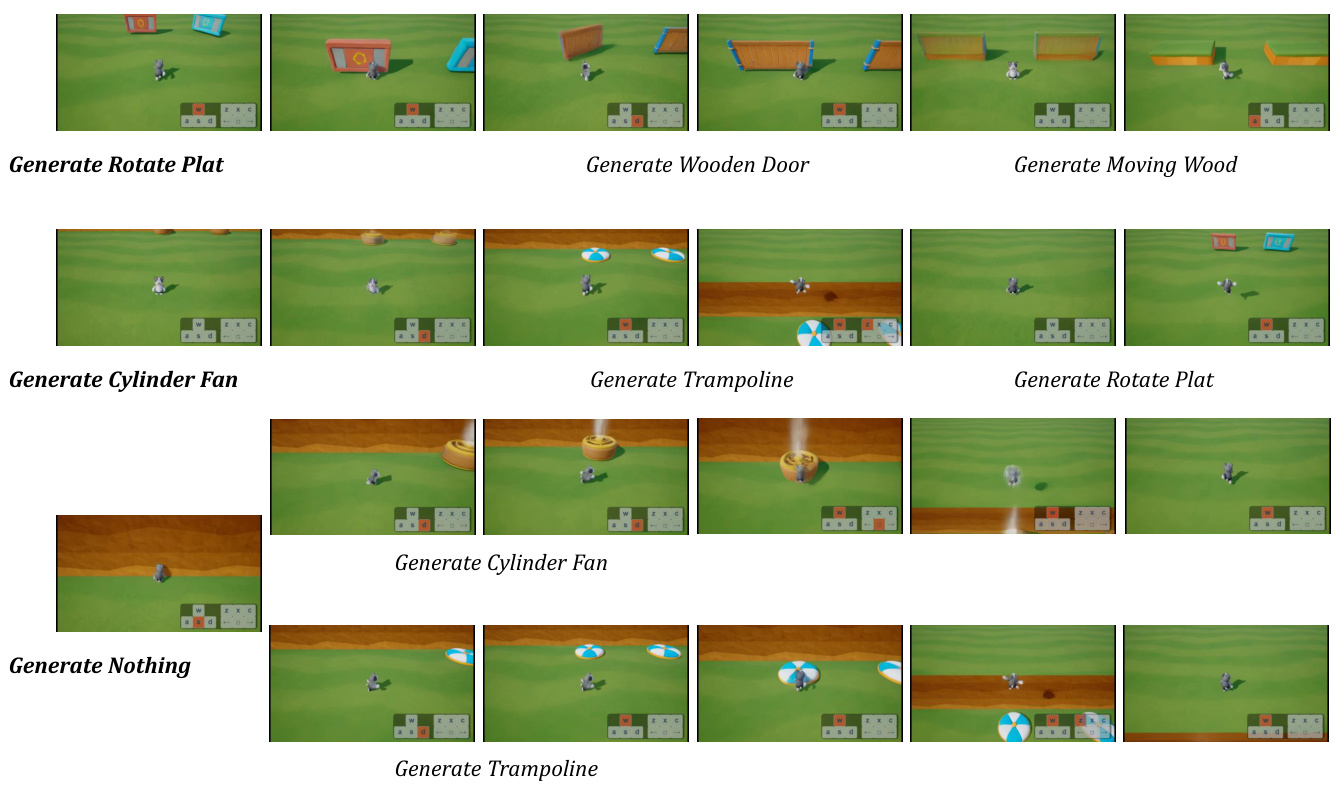

3.3 Multigrained Editing Module (Yan-Edit)

Core Concept

Separates mechanical simulation from visual rendering:

- Mechanism Layer: Learns structure-dependent physics from depth maps (e.g., doors require keys)
- Rendering Layer: Uses ControlNet to apply arbitrary styles to depth maps (e.g., afternoon tea → cyberpunk)
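The separation of the two layers can be shown with a deliberately tiny toy model. Everything in this sketch is hypothetical (the `mechanism_step`, `depth_map`, and `render` functions, the one-row "depth map", and the character palettes); it uses no learned model or ControlNet and only illustrates the design idea that style changes appearance while interaction rules live in the structure.

```python
def mechanism_step(state: dict, action: str) -> dict:
    """Toy interaction rule: a door only opens if the player holds a key."""
    new_state = dict(state)
    if action == "pick_up_key":
        new_state["has_key"] = True
    elif action == "open_door" and state["has_key"]:
        new_state["door_open"] = True
    return new_state


def depth_map(state: dict) -> list:
    """Style-free structural output: 1 marks a solid cell, 0 marks free space."""
    door = 0 if state["door_open"] else 1
    return [[1, door, 1]]


def render(depth: list, style: str) -> list:
    """Toy renderer: the style changes appearance only, never the structure."""
    palette = {"afternoon tea": ("#", "."), "cyberpunk": ("@", " ")}
    solid, free = palette[style]
    return ["".join(solid if cell else free for cell in row) for row in depth]


state = {"has_key": False, "door_open": False}
state = mechanism_step(state, "open_door")    # no key yet, so the door stays shut
locked = depth_map(state)
state = mechanism_step(state, "pick_up_key")
state = mechanism_step(state, "open_door")    # with the key, the door opens
opened = depth_map(state)

print(render(opened, "afternoon tea"), render(opened, "cyberpunk"))
```

Swapping the style string changes the rendered characters but leaves the opening in the same place, which is the property the two-layer design is meant to preserve.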

Editing Types

Example: Changing “rotating platform” to “wooden door” maintains proper interaction physics

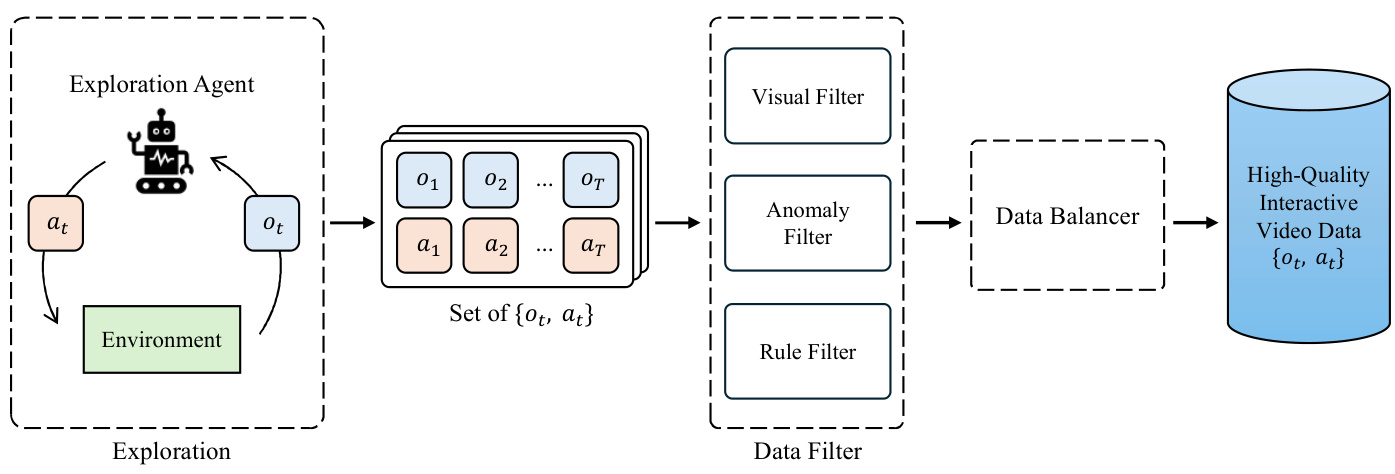

4. How Was the Training Data Built?

Data Collection Pipeline

- Intelligent Agent Exploration
  - Combines random actions with PPO reinforcement learning
  - Explores 90+ different scenarios
- Data Cleaning
  - Visual Filter: Removes low-variance images (occlusions/rendering failures)
  - Anomaly Filter: Eliminates stuttering video segments
  - Rule Filter: Excludes data that violates game mechanics
- Balanced Sampling: Ensures even distribution across coordinates, collision states, etc.
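The cleaning and sampling steps above can be sketched in plain Python. This is an illustrative sketch under stated assumptions, not the actual pipeline: the record layout (`pixels`, `timestamps`, `collision`), the thresholds, and the function names are all hypothetical. The rule filter is omitted because its rules depend on the specific game.

```python
import random
from collections import defaultdict
from statistics import pvariance


def visual_filter(segments, min_variance=1.0):
    """Drop segments whose pixel variance is too low (e.g. blank or occluded)."""
    return [s for s in segments if pvariance(s["pixels"]) >= min_variance]


def anomaly_filter(segments, max_gap=0.05):
    """Drop segments where any gap between frame timestamps exceeds max_gap seconds."""
    def stutters(ts):
        return any(b - a > max_gap for a, b in zip(ts, ts[1:]))
    return [s for s in segments if not stutters(s["timestamps"])]


def balanced_sample(segments, key, per_group, seed=0):
    """Take at most per_group segments from each value of `key`."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for s in segments:
        groups[s[key]].append(s)
    sample = []
    for members in groups.values():
        rng.shuffle(members)
        sample.extend(members[:per_group])
    return sample


smooth = [0.00, 0.03, 0.06]
segments = [
    {"id": 0, "pixels": [0, 0, 0, 0], "timestamps": smooth, "collision": False},  # blank
    {"id": 1, "pixels": [10, 200, 35, 90], "timestamps": [0.00, 0.03, 0.30], "collision": False},  # stutter
    {"id": 2, "pixels": [12, 180, 40, 95], "timestamps": smooth, "collision": False},
    {"id": 3, "pixels": [15, 170, 44, 99], "timestamps": smooth, "collision": False},
    {"id": 4, "pixels": [20, 160, 50, 70], "timestamps": smooth, "collision": True},
]
clean = anomaly_filter(visual_filter(segments))
balanced = balanced_sample(clean, key="collision", per_group=1)
print([s["id"] for s in clean], [s["collision"] for s in balanced])
```

Balancing here is per collision state only; a real pipeline would presumably balance over several attributes at once, such as coordinates.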

Dataset Scale

5. Technical Implementation Details

5.1 Model Training Process

Stage 1: VAE Training

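```python
import torch.nn as nn

# Input: two consecutive frames concatenated along channels (H=1024, W=1792, C=6)
# Output: latent space representation (h=32, w=56, C=16)
# DownBlock1, DownBlock2, and ChannelExpand are placeholder modules, not defined here.
encoder = nn.Sequential(
    DownBlock1(),
    DownBlock2(),     # Spatial compression ratio increased from 8x to 32x
    ChannelExpand(),  # Channel dimension expansion
)
```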
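The shapes quoted in the comments above can be checked with simple arithmetic. The helper below is a hypothetical convenience function written for this check, not part of Yan; it only uses the input size, the 32x spatial factor, and the 16 latent channels stated in the comments.

```python
def latent_shape(height, width, spatial_factor, latent_channels):
    """Return (h, w, C) of the latent grid for a given spatial downsampling factor."""
    if height % spatial_factor or width % spatial_factor:
        raise ValueError("frame size must be divisible by the spatial factor")
    return height // spatial_factor, width // spatial_factor, latent_channels


H, W, C_IN = 1024, 1792, 6            # two RGB frames concatenated on channels
h, w, c = latent_shape(H, W, spatial_factor=32, latent_channels=16)

input_values = H * W * C_IN           # values entering the encoder
latent_values = h * w * c             # values in the latent representation
print((h, w, c), input_values // latent_values)

# For comparison, an 8x factor leaves 16 times as many spatial positions.
h8, w8, _ = latent_shape(H, W, spatial_factor=8, latent_channels=16)
print((h8 * w8) // (h * w))
```

With these numbers the latent grid is 32 by 56, and the encoder input holds 384 times as many values as the latent representation.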

Stage 2: Diffusion Model Training

- Diffusion Forcing Strategy: Independent noise addition per frame
- Causal Attention: Current frame only attends to historical frames
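Both ideas can be sketched without a deep learning framework. The code below is a minimal illustration under assumptions: the function names, the 1000-step noise schedule, and the choice to let a frame attend to itself as well as to earlier frames are not taken from the source.

```python
import random


def per_frame_noise_levels(num_frames, num_steps=1000, seed=0):
    """Diffusion-forcing style sampling: every frame gets its own noise level."""
    rng = random.Random(seed)
    return [rng.randrange(num_steps) for _ in range(num_frames)]


def causal_mask(num_frames):
    """mask[i][j] is True when frame i may attend to frame j (j <= i)."""
    return [[j <= i for j in range(num_frames)] for i in range(num_frames)]


levels = per_frame_noise_levels(num_frames=4)
mask = causal_mask(4)
for row in mask:
    # 'x' marks an allowed attention link, '.' a blocked (future) one.
    print("".join("x" if allowed else "." for allowed in row))
```

The printed mask is lower triangular: no frame can see a later frame, which is what lets generation proceed frame by frame at inference time.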

5.2 Real-Time Inference Optimization

6. Typical Application Scenarios

6.1 Game Development

- Rapid Prototyping: Designers input text → generate interactive scenes instantly
- Dynamic Content: Players input “volcanic eruption” → scene immediately responds

6.2 Virtual Production

- Virtual Scene Expansion: Reference image → generate continuous explorable environment
- Cross-Domain Fusion: Real-world photos + game characters in real-time

7. Frequently Asked Questions

Q1: How does Yan differ from traditional game engines?

A: Unlike traditional pre-programmed engines, Yan is a generative simulation system:

- No manual collision detection coding needed
- Supports free scene modification while maintaining physics consistency
- Can generate training data for reinforcement learning

Q2: How does it maintain long video consistency?

A: It uses a hierarchical description system:

- Global context anchors world rules (unchanging)
- Local context updates dynamically (changeable)

Q3: What hardware is required?

A: Inference requirements:

- Single NVIDIA H20: 12-17 FPS
- 4x NVIDIA H20: 30 FPS
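To relate these frame rates to per-frame time budgets, the conversion is a one-line formula. The helper names below are hypothetical, and the comparison with the 0.07s latency quoted for shift window denoising is only arithmetic; the source does not say whether the two figures were measured on the same setup.

```python
def frame_time_ms(fps):
    """Time budget per frame, in milliseconds, for a given frame rate."""
    return 1000.0 / fps


def fps_from_latency(seconds_per_frame):
    """Frame rate implied by a per-frame latency in seconds."""
    return 1.0 / seconds_per_frame


for label, fps in [("1x H20, low end", 12), ("1x H20, high end", 17), ("4x H20", 30)]:
    print(f"{label}: {frame_time_ms(fps):.1f} ms per frame")

print(f"0.07 s per frame = {fps_from_latency(0.07):.1f} FPS")
```

A latency of 0.07s per frame works out to roughly 14 FPS, which falls inside the single-GPU range listed above.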

8. Technical Limitations

- Long-Term Consistency Challenges: Complex interactions may cause spatiotemporal inconsistencies
- Hardware Dependency: Requires high-end GPUs for real-time performance
- Action Space Constraints: Currently based on game engine action sets
- Text-Based Editing: Needs improved natural language interfaces

9. Future Directions

- Model Lightweighting: Develop edge device-friendly versions
- Cross-Domain Expansion: Transfer game scene generation to real-world applications
- Multimodal Input Enhancement: Support voice and gesture controls

“Yan points the way toward next-generation AI content engines” — Tencent Research

10. Further Reading

- [Project Website](project link)
- Qwen2.5-VL Technical Report
- [Rectified Flows Principles](https://proceedings.neurips.cc/paper/2024/hash/…)