WhisperLiveKit: Ultra-Low-Latency Self-Hosted Speech-to-Text with Real-Time Speaker Identification

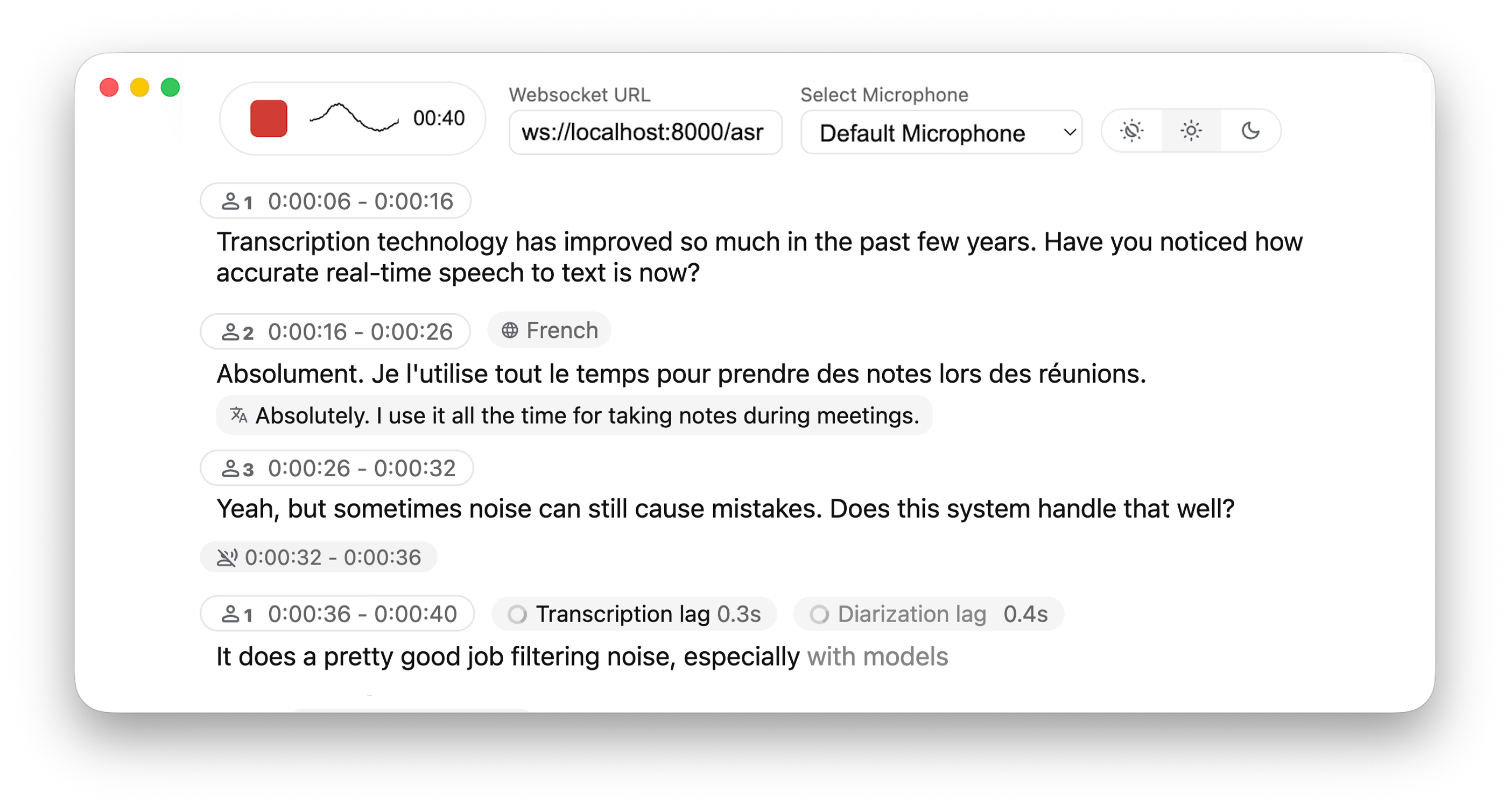

If you’re in need of a tool that converts speech to text in real time while distinguishing between different speakers, WhisperLiveKit (WLK for short) might be exactly what you’re looking for. This open-source solution specializes in ultra-low latency, self-hosted deployment, and supports real-time transcription and translation across multiple languages—making it ideal for meeting notes, accessibility tools, content creation, and more.

What Is WhisperLiveKit?

Simply put, WhisperLiveKit is a tool focused on real-time speech processing. It converts spoken language into text as you speak and identifies who is speaking, a task usually called speaker diarization (this article also refers to it as “speaker identification”). It is designed for minimal latency, so text appears almost immediately. Because you can deploy it on your own servers, audio does not have to leave your infrastructure, which addresses many data-privacy concerns.

You might wonder: Why not just use the standard Whisper model to process audio chunks? The original Whisper is designed for complete utterances, such as full recordings. Forcing it to handle small segments often leads to lost context, words cut off mid-syllable, and poor transcription quality. WhisperLiveKit addresses this by building on recent simultaneous-speech research (listed in the next section), using intelligent buffering and incremental processing to maintain accuracy and fluency.

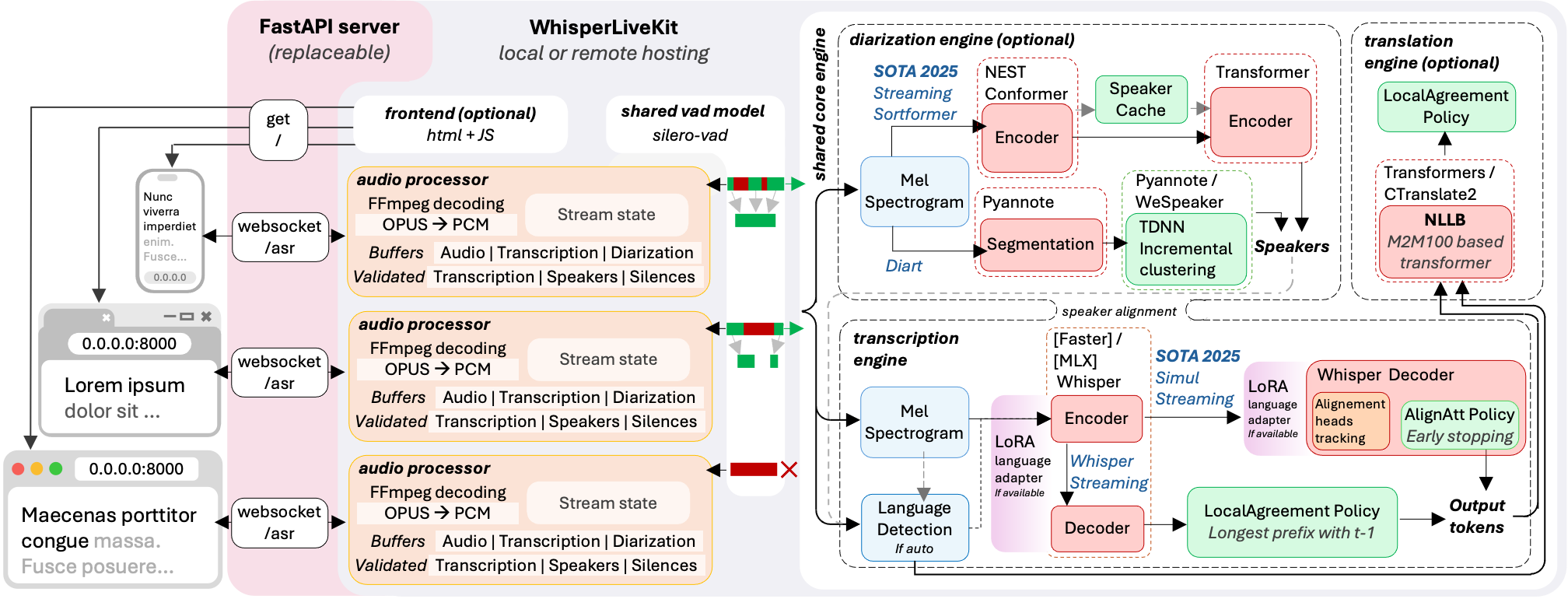

Core Technologies Powering WhisperLiveKit

WhisperLiveKit’s low latency and high accuracy are made possible by these cutting-edge technologies:

- Simul-Whisper/Streaming (SOTA 2025): Delivers ultra-low latency transcription using the AlignAtt policy, essentially processing speech “on the fly” without waiting for full sentences.
- NLLW (2025): Built on the NLLB model, supporting simultaneous translation between over 200 languages. Whether translating Chinese to French or Spanish to Japanese, it handles cross-lingual tasks seamlessly.
- WhisperStreaming (SOTA 2023): Reduces latency with the LocalAgreement policy for smoother, more consistent transcription.
- Streaming Sortformer (SOTA 2025): Advanced real-time speaker diarization technology that accurately identifies “who is speaking” in dynamic conversations.
- Diart (SOTA 2021): An alternative real-time speaker diarization tool, serving as a complementary backend.
- Silero VAD (2024): Enterprise-grade Voice Activity Detection that precisely identifies when speech is present. It pauses processing during silent periods to conserve server resources.
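To make the LocalAgreement idea more concrete, here is a minimal sketch, assuming the common LocalAgreement-2 formulation: the recognizer is re-run on a growing audio buffer, and a word is committed only once two consecutive hypotheses agree on it. The class and function names are illustrative and are not WhisperLiveKit's API. The sketch also assumes every new hypothesis starts with the words already committed.

```python
from typing import List


def common_prefix(a: List[str], b: List[str]) -> List[str]:
    """Return the longest shared prefix of two word lists."""
    prefix: List[str] = []
    for x, y in zip(a, b):
        if x != y:
            break
        prefix.append(x)
    return prefix


class LocalAgreement2:
    """Commit words only once two consecutive hypotheses agree on them."""

    def __init__(self) -> None:
        self.committed: List[str] = []
        # Uncommitted tail of the previous hypothesis.
        self.previous: List[str] = []

    def update(self, hypothesis: List[str]) -> List[str]:
        """Feed the full hypothesis for the current buffer.

        Returns the words that became committed in this step.
        Simplifying assumption: `hypothesis` begins with `self.committed`.
        """
        # Skip the part that is already committed.
        tail = hypothesis[len(self.committed):]
        # Words on which the previous and current hypotheses agree.
        agreed = common_prefix(self.previous, tail)
        self.committed.extend(agreed)
        # Keep the unconfirmed remainder for the next comparison.
        self.previous = tail[len(agreed):]
        return agreed
```

Feeding the hypotheses `["hello", "wor"]`, `["hello", "world", "how"]`, and `["hello", "world", "how", "are"]` in turn leaves `committed` at `["hello", "world", "how"]`. The unstable partial word "wor" is never emitted, which is the point of waiting for agreement.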

Architecture: How Does It Work?

Curious how WhisperLiveKit handles multiple users simultaneously? Here’s a breakdown of its architecture:

In short, the backend supports concurrent connections from multiple users. When you speak, Voice Activity Detection (VAD) first determines whether speech is present, which reduces overhead during silence. When speech is detected, the audio is sent to the processing engine, which converts it to text in real time. Speaker diarization labels each speaker, and the results are returned to the user interface as they become available.
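The gating step in this flow can be sketched as follows. This is a toy illustration only: a simple energy threshold stands in for a trained VAD model such as Silero VAD, and `transcribe` is a hypothetical callback rather than a WhisperLiveKit function. The threshold value is an arbitrary assumption.

```python
import math
from typing import Callable, Iterable, Iterator, List, Sequence

# One short chunk of PCM samples, each in the range [-1.0, 1.0].
Frame = Sequence[float]


def rms(frame: Frame) -> float:
    """Root-mean-square energy of a frame (0.0 for an empty frame)."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def is_speech(frame: Frame, threshold: float = 0.02) -> bool:
    """Toy energy-based detector; a stand-in for a trained VAD model."""
    return rms(frame) >= threshold


def gate_frames(frames: Iterable[Frame], threshold: float = 0.02) -> Iterator[Frame]:
    """Yield only frames classified as speech, so silence is never transcribed."""
    for frame in frames:
        if is_speech(frame, threshold):
            yield frame


def run_pipeline(
    frames: Iterable[Frame],
    transcribe: Callable[[Frame], str],
    threshold: float = 0.02,
) -> List[str]:
    """Pass speech frames to the (hypothetical) `transcribe` callback."""
    return [transcribe(frame) for frame in gate_frames(frames, threshold)]
```

With a stream of alternating silent and voiced frames, `transcribe` is called only for the voiced ones. That is how skipping silence saves compute on the server.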

Installation: Get Up and Running in 3 Steps

Basic Installation

Whether you’re on Windows, macOS, or Linux, the first step is installing WhisperLiveKit. The simplest method is using pip:

pip install whisperlivekit

For the latest development version, install directly from the repository:

Optional Dependencies: Boost Performance

Enhance WhisperLiveKit’s capabilities with these optional dependencies, tailored to your device and use case:

Quick Start: Experience Real-Time Transcription in 5 Minutes

Step 1: Launch the Transcription Server

Open your terminal and run the following command to start the server (using the base model and English as an example):

wlk --model base --language en

- --model: Specifies the Whisper model size (e.g., base, small, medium, large-v3). Larger models offer higher accuracy but require more computing power.
- --language: Sets the target language (e.g., en for English, zh for Chinese, fr for French). View the full list of language codes here.

Step 2: Use It in Your Browser

Open a web browser and navigate to http://localhost:8000. Start speaking—you’ll see your words appear in real time. It’s that simple!

Quick Tips

- For HTTPS support (required for production environments), add SSL certificate parameters when starting the server (see the “Configuration Parameters” section below).
- The CLI accepts both wlk and whisperlivekit-server as entry points; they work identically.
- If you encounter GPU or environment issues during setup, refer to the troubleshooting guide.

Advanced Usage: Tailor to Your Needs

1. Command Line: Customize Your Transcription Service

Beyond basic setup, use command-line parameters to customize functionality. Examples include:

- Real-Time Translation: Transcribe French and translate it to Danish.
- Public Access + Speaker Identification: Make the server accessible on all network interfaces and enable speaker diarization.
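The two scenarios above can be sketched as command lines assembled in Python. The `wlk` entry point and the `--model`, `--language`, and `--diarization` flags come from this article. The `--target-language` and `--host` flag names, and the model sizes chosen, are assumptions that should be checked against the CLI's help output before use.

```python
import shlex
from typing import List, Optional


def build_wlk_command(
    model: str = "base",
    language: str = "en",
    target_language: Optional[str] = None,
    host: Optional[str] = None,
    diarization: bool = False,
) -> List[str]:
    """Assemble an argv list for the `wlk` entry point."""
    argv = ["wlk", "--model", model, "--language", language]
    if target_language is not None:
        # Assumed flag name for the translation target; verify before use.
        argv += ["--target-language", target_language]
    if host is not None:
        # Assumed flag name; 0.0.0.0 binds to all network interfaces.
        argv += ["--host", host]
    if diarization:
        argv.append("--diarization")
    return argv


if __name__ == "__main__":
    # Transcribe French and translate it to Danish.
    print(shlex.join(build_wlk_command("large-v3", "fr", target_language="da")))
    # Listen on all interfaces with speaker diarization enabled.
    print(shlex.join(build_wlk_command("medium", "fr", host="0.0.0.0", diarization=True)))
```

Returning a list rather than a single string lets the result be passed directly to `subprocess.run` without shell quoting issues.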

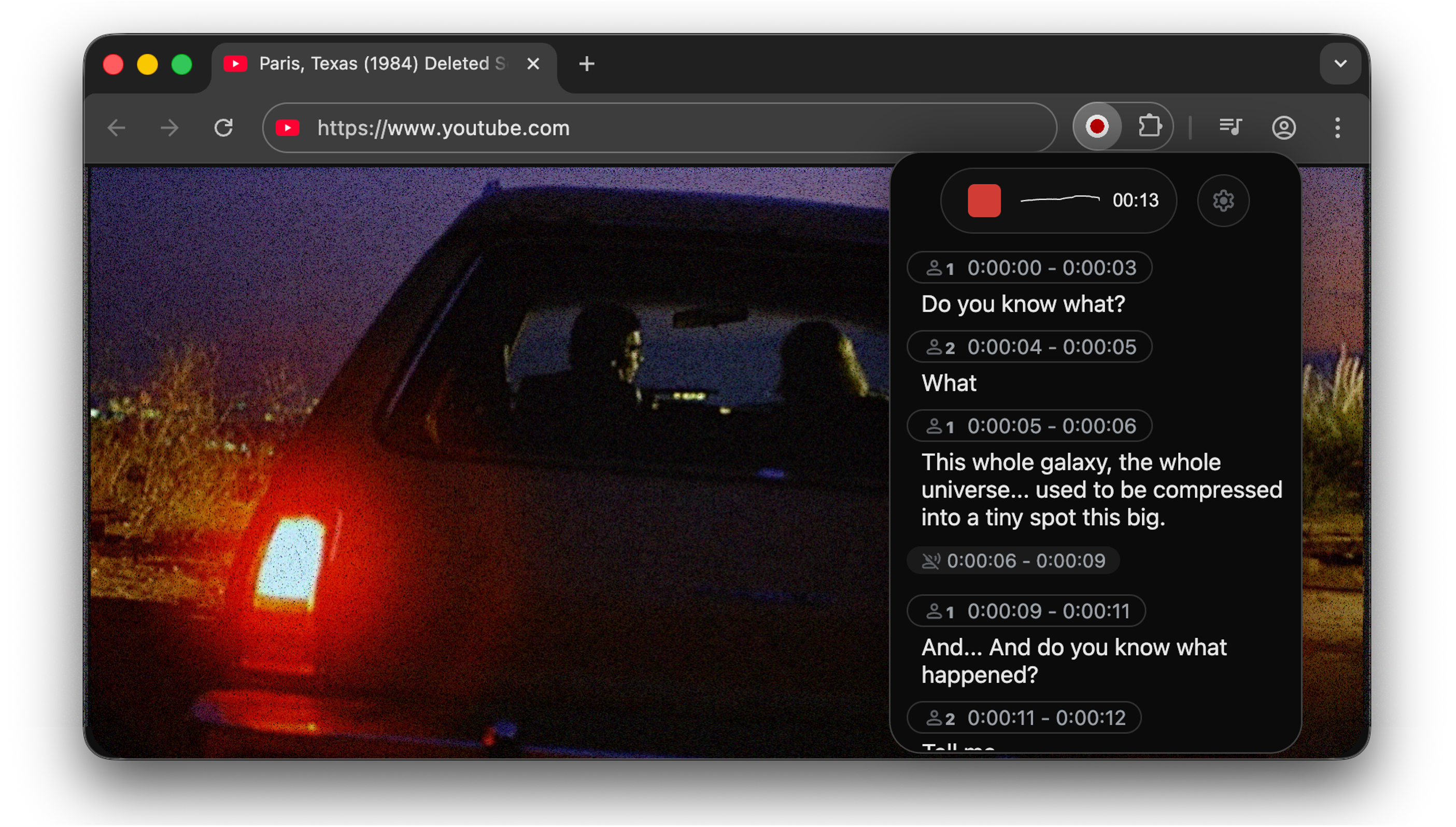

2. Web Audio Capture: Chrome Extension

Capture and transcribe audio from web pages (e.g., online meetings, webinars) using the Chrome extension:

- Navigate to the chrome-extension directory in the repository and follow the installation instructions.
- Enable the extension on the webpage you want to transcribe. Audio will be captured and converted to text in real time.

3. Python API: Integrate into Your Applications

Embed WhisperLiveKit’s functionality into your Python projects using its API. Here’s a simplified example:

For a complete example, see basic_server in the repository.

4. Frontend Implementation: Customize Your UI

WhisperLiveKit includes a pre-built HTML/JavaScript frontend in whisperlivekit/web/live_transcription.html. You can also import it directly in Python:

Integrate this code into your website to get a ready-to-use transcription interface.

Configuration Parameters: A Detailed Guide

Customize WhisperLiveKit’s behavior with these parameters when starting the server:

Basic Parameters

Translation Parameters

Speaker Diarization Parameters

SimulStreaming Backend Parameters

WhisperStreaming Backend Parameters

Production Deployment Guide

Deploy WhisperLiveKit in production (e.g., for enterprise use or product integration) with these steps:

1. Server Setup

Install a production-grade ASGI server and launch multiple workers to handle concurrent traffic:

Replace your_app:app with your Python application entry point (e.g., the basic_server example).

2. Frontend Deployment

Customize the HTML example to match your brand, and ensure the WebSocket connection points to your production domain.
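One way to keep the WebSocket endpoint aligned with the production domain is to derive it from the page URL. The helper below is a minimal sketch; the `/asr` path is an assumption about where the server exposes its WebSocket endpoint, and the function name is illustrative.

```python
from urllib.parse import urlsplit, urlunsplit


def websocket_url(page_url: str, path: str = "/asr") -> str:
    """Map an http(s) page URL to the matching ws(s) URL on the same host.

    The default path is an assumed endpoint; adjust it to your server.
    """
    parts = urlsplit(page_url)
    if parts.scheme not in ("http", "https"):
        raise ValueError(f"unsupported scheme: {parts.scheme!r}")
    # Pages served over HTTPS must use the encrypted wss:// scheme.
    scheme = "wss" if parts.scheme == "https" else "ws"
    # Keep host and port, replace the path, drop query and fragment.
    return urlunsplit((scheme, parts.netloc, path, "", ""))
```

For example, `websocket_url("https://transcribe.example.com/app")` returns `"wss://transcribe.example.com/asr"`, while a local page at `http://localhost:8000` maps to `"ws://localhost:8000/asr"`.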

3. Nginx Configuration (Recommended)

Use Nginx as a reverse proxy to handle static assets and WebSocket forwarding, improving stability:

4. HTTPS Support

For secure deployments (required for most web services), use the wss:// scheme (WebSocket over TLS) and specify SSL certificates when starting the server:

Docker Deployment: Cross-Platform Simplicity

Avoid manual environment configuration with Docker, which supports both GPU and CPU deployment.

Prerequisites

- Docker installed on your system
- NVIDIA Docker runtime (for GPU acceleration)

Quick Start

GPU Acceleration (Recommended for Speed)

CPU-Only (For Devices Without GPU)

Custom Configuration

Add parameters when running the container to customize behavior (e.g., model and language):

Advanced Build Options

Use --build-arg to add customizations during image build:

- Install extra dependencies: docker build --build-arg EXTRAS="whisper-timestamped" -t wlk .
- Preload model cache: docker build --build-arg HF_PRECACHE_DIR="./.cache/" -t wlk .
- Add Hugging Face token (for gated models): docker build --build-arg HF_TKN_FILE="./token" -t wlk .

Memory Requirements

Large models (e.g., large-v3) require significant memory—ensure your Docker runtime has sufficient resources allocated.

Frequently Asked Questions (FAQ)

1. What languages does WhisperLiveKit support?

Transcription languages are listed here. Translation supports over 200 languages, with the full list here.

2. How do I enable speaker identification?

Add the --diarization flag when starting the server:

wlk --model base --language en --diarization

For the Diart backend: Accept the model usage terms on Hugging Face (segmentation model, embedding model), then log in with huggingface-cli login.

3. Which model size should I choose?

- Small models (base, small): Fast and suitable for CPUs/low-end devices (slightly lower accuracy).
- Large models (medium, large-v3): High accuracy for complex audio (requires GPU for performance).

See model recommendations for details.

4. Can I use WhisperLiveKit outside a browser?

Yes. Beyond the web interface, you can integrate it into your own applications via the Python API. If you do work in a browser, the Chrome extension can additionally capture audio from any webpage.

5. Why is there latency in transcription?

Latency depends on model size, device performance, and network speed. Reduce latency by:

- Using a smaller model (e.g., small instead of large-v3)
- Lowering the --frame-threshold value (for SimulStreaming)
- Enabling hardware acceleration (GPU or Apple Silicon MLX)

Use Cases: How WhisperLiveKit Adds Value

- Meeting Transcription: Real-time transcription of multi-speaker meetings with automatic speaker labeling. Export text logs instantly after the call.
- Accessibility Tools: Help hard-of-hearing users follow conversations in real time, bridging communication gaps.
- Content Creation: Auto-transcribe podcasts, videos, or interviews to generate subtitles, scripts, or blog post drafts.
- Customer Service: Transcribe support calls with speaker differentiation (agent vs. customer) for quality assurance and training.

WhisperLiveKit stands out for its low latency, self-hosted flexibility, and multilingual support. If you need a locally deployable, real-time speech processing tool, it’s well worth exploring—installation is straightforward, and its open-source nature lets you customize it to your specific needs. Whether you’re building enterprise software or a personal project, WhisperLiveKit delivers reliable, efficient speech-to-text with speaker identification.