Rubrics as Rewards (RaR): Training AI to Better Align with Human Preferences

Introduction: The Challenge of Training AI for Subjective Tasks

When training AI systems to handle complex tasks like medical diagnosis or scientific analysis, we face a fundamental challenge: how do we teach models to produce high-quality outputs when there’s no single “correct” answer? Traditional reinforcement learning methods rely on either:

- Verifiable rewards (e.g., math problems with clear solutions)
- Human preference rankings (e.g., scoring multiple responses)

But real-world domains like healthcare and science often require balancing objective facts with subjective quality (clarity, completeness, safety). This creates three key problems:

- Opaque feedback: Black-box reward models make it hard to understand why certain outputs are favored
- Surface-level overfitting: Models may optimize for superficial patterns rather than true quality
- Scalability issues: Human preference data is expensive to collect and may contain biases

The Rubrics as Rewards (RaR) framework addresses these challenges by translating human evaluation criteria into structured, interpretable scoring rules.

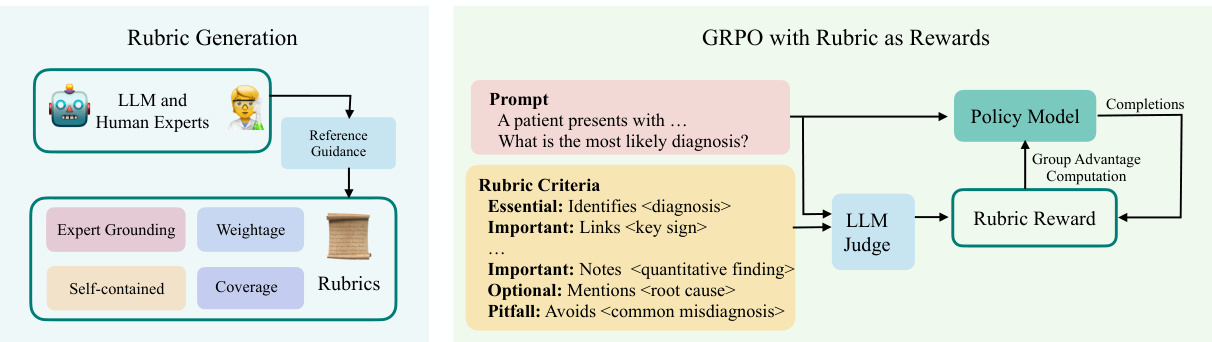

Figure 1: RaR converts expert knowledge into structured scoring checklists that guide AI training

How RaR Works: From Human Criteria to Machine Learning

The Core Idea: Structured Evaluation

RaR breaks down response quality into explicit scoring criteria organized in a checklist format. For example, a medical response might be evaluated using criteria like:

    [
      {
        "title": "Diagnosis Accuracy",
        "description": "Essential Criteria: Correctly identifies non-contrast helical CT as optimal for ureteric stones",
        "weight": 5
      },
      {
        "title": "Safety Check",
        "description": "Important Criteria: Mentions no fasting required before procedure",
        "weight": 3
      }
    ]
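To make the checklist usable in code, the JSON above can be loaded into typed records. The following is a minimal Python sketch, not the framework's actual loader: `RubricItem` and `parse_rubric` are hypothetical names, and it assumes the category is encoded as the prefix of `description` (as in "Essential Criteria: ..."), which is how the example is written.

```python
import json
from dataclasses import dataclass

# Category labels used by the rubric design described in this article.
CATEGORIES = ("Essential", "Important", "Optional", "Pitfall")


@dataclass(frozen=True)
class RubricItem:
    title: str
    description: str
    weight: float
    category: str  # derived from the description prefix (assumption)


def parse_rubric(raw_json: str) -> list[RubricItem]:
    """Load a JSON rubric list and derive each item's category."""
    items = []
    for entry in json.loads(raw_json):
        prefix = entry["description"].split(":", 1)[0]
        # "Essential Criteria" -> "Essential"; unknown prefixes are rejected.
        category = next((c for c in CATEGORIES if prefix.startswith(c)), None)
        if category is None:
            raise ValueError(f"Unrecognized category in: {entry['description']!r}")
        items.append(
            RubricItem(entry["title"], entry["description"],
                       float(entry["weight"]), category)
        )
    return items


EXAMPLE = """[
  {"title": "Diagnosis Accuracy",
   "description": "Essential Criteria: Correctly identifies non-contrast helical CT as optimal for ureteric stones",
   "weight": 5},
  {"title": "Safety Check",
   "description": "Important Criteria: Mentions no fasting required before procedure",
   "weight": 3}
]"""

rubric = parse_rubric(EXAMPLE)
print([(item.title, item.category, item.weight) for item in rubric])
```

Rejecting unknown prefixes early is a design choice of this sketch; a more lenient loader could fall back to a default category instead.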

Two Ways to Calculate Rewards

- Explicit Aggregation: Score each criterion separately, then combine the scores:
  Total Score = (Σ criterion_score × weight) / total_weight
- Implicit Aggregation: Let an LLM judge evaluate all criteria holistically:
  Score = LLM_Judge(prompt + response + rubric_list)
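The two aggregation modes can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions: the function names are hypothetical, criterion scores are assumed to be binary (1.0 if satisfied, 0.0 otherwise), and the wording of the judge input is invented for the example. The actual judge call is left out because the article does not specify its API.

```python
from typing import Sequence


def explicit_score(criterion_scores: Sequence[float],
                   weights: Sequence[float]) -> float:
    """Weighted average of per-criterion scores: sum(score * weight) / total weight."""
    if len(criterion_scores) != len(weights):
        raise ValueError("need exactly one score per criterion")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("total weight must be positive")
    return sum(s * w for s, w in zip(criterion_scores, weights)) / total_weight


def build_judge_input(prompt: str, response: str, rubric: Sequence[str]) -> str:
    """Assemble prompt + response + rubric list into one text for a holistic judge."""
    rubric_block = "\n".join(f"- {item}" for item in rubric)
    return (
        f"Question:\n{prompt}\n\n"
        f"Response:\n{response}\n\n"
        f"Rubric:\n{rubric_block}\n\n"
        "Considering all rubric items together, give one overall score."
    )


# Explicit: criterion 1 (weight 5) is satisfied, criterion 2 (weight 3) is missed.
print(explicit_score([1.0, 0.0], [5, 3]))  # (1*5 + 0*3) / 8 = 0.625

# Implicit: only the judge input is built here; sending it to a model is omitted.
print(build_judge_input(
    "Which imaging is best for suspected ureteric stones?",
    "A non-contrast helical CT is the preferred study.",
    ["Essential Criteria: Correctly identifies non-contrast helical CT",
     "Important Criteria: Mentions no fasting required before procedure"],
))
```

In the explicit mode the weighting is fixed by the formula, while in the implicit mode the judge decides how much each rubric item matters when producing its single score.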

Creating Effective Rubrics: Four Design Principles

Effective rubrics follow four design principles:

| Design Principle | Implementation | Example Medical Rubric Item |
|---|---|---|
| Expert-Grounded | Reference expert answers when generating criteria | “Matches CDC guidelines for treatment protocols” |
| Comprehensive Coverage | Include multiple quality dimensions (accuracy, safety, clarity, completeness) | “Includes 3 differential diagnoses ranked by likelihood” |
| Semantic Weighting | Assign importance levels (Essential/Important/Optional/Pitfall) | “Essential: Identifies life-threatening contraindications” |
| Self-Contained | Criteria can be evaluated without external knowledge | “Optional: Uses layman-friendly explanations” |

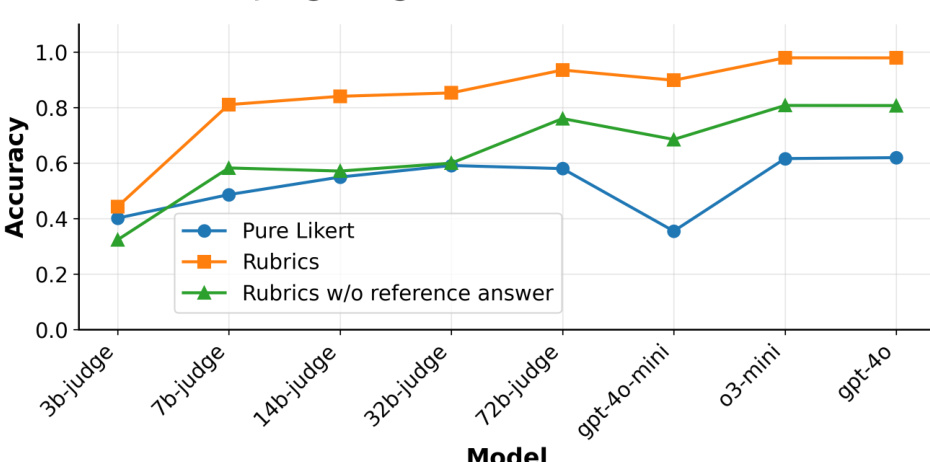

Figure 2: Rubric-based scoring (orange) outperforms simple ratings (blue) across all model sizes

Experimental Validation: Medical and Science Domains

Test Setup

Datasets Used:

- RaR-Medical-20k: 20,166 medical prompts covering diagnosis, treatment, and ethics
- RaR-Science-20k: 20,625 STEM prompts spanning physics, chemistry, and biology

Comparison Baselines:

- Simple-Likert: Direct 1-10 scoring without criteria
- Reference-Likert: Score based on similarity to expert answers
- Predefined Rubrics: Fixed generic criteria (not prompt-specific)

Key Results

| Method | Medical Score | Science Score |
|---|---|---|
| Simple-Likert | 0.2489 | 0.3409 |
| Reference-Likert | 0.3155 | 0.3775 |
| RaR-Implicit | 0.3194 | 0.3864 |

RaR achieved up to a 28% relative improvement over the Simple-Likert baseline (0.2489 → 0.3194 on the medical benchmark).
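The headline figure is a relative gain, which can be reproduced directly from the table. The short Python sketch below recomputes it for both domains and both Likert baselines; the `relative_improvement` helper is a hypothetical name used only for this illustration.

```python
# Scores copied from the Key Results table.
scores = {
    "Simple-Likert": {"medical": 0.2489, "science": 0.3409},
    "Reference-Likert": {"medical": 0.3155, "science": 0.3775},
    "RaR-Implicit": {"medical": 0.3194, "science": 0.3864},
}


def relative_improvement(new: float, baseline: float) -> float:
    """Fractional gain of `new` over `baseline`."""
    return (new - baseline) / baseline


for domain in ("medical", "science"):
    for baseline in ("Simple-Likert", "Reference-Likert"):
        gain = relative_improvement(scores["RaR-Implicit"][domain],
                                    scores[baseline][domain])
        print(f"{domain:8s} vs {baseline:16s}: {gain:+.1%}")
```

The medical comparison against Simple-Likert is the one that yields roughly 28%; the margins over Reference-Likert are much smaller, which is worth keeping in mind when reading the summary claim.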

Critical Findings

- Implicit Aggregation Works Best: Allowing AI judges to balance criteria internally outperformed rigid mathematical weighting.
- Rubric Quality Matters: Rubrics generated with expert reference answers outperformed human-created criteria, while purely synthetic rubrics underperformed by 15%.
- Smaller Models Benefit Most: A 7B parameter model using RaR achieved accuracy comparable to 32B models without rubric guidance.

Technical Implementation Details

Training Parameters

| Parameter | Value |
|---|---|
| Base Policy Model | Qwen2.5-7B |
| Judge Model | gpt-4o-mini |
| Batch Size | 96 |
| Learning Rate | 5×10⁻⁶ |
| Rollouts per Prompt | 16 |
| Training Steps | 300 |
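For reference, the table can be captured as a small typed configuration. This is a sketch only: the `RaRTrainingConfig` class and its field names are hypothetical, and the derived counts assume that the batch size counts prompts (not individual responses) and that every rollout is scored once by the judge. The article does not state either point.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RaRTrainingConfig:
    # Values copied from the Training Parameters table.
    base_policy_model: str = "Qwen2.5-7B"
    judge_model: str = "gpt-4o-mini"
    batch_size: int = 96
    learning_rate: float = 5e-6
    rollouts_per_prompt: int = 16
    training_steps: int = 300

    def responses_per_step(self) -> int:
        # Assumption: batch_size is the number of prompts per step.
        return self.batch_size * self.rollouts_per_prompt

    def total_judge_calls(self) -> int:
        # Assumption: each sampled response is judged exactly once.
        return self.responses_per_step() * self.training_steps


config = RaRTrainingConfig()
print(config.responses_per_step())  # 96 * 16 = 1536
print(config.total_judge_calls())   # 1536 * 300 = 460800
```

Under those assumptions the judge model is called several hundred thousand times per run, which may help explain the choice of a small, inexpensive judge.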

Example Rubric Generation Prompt (Medical)

You are an expert rubric writer. Create evaluation criteria for judging response quality to this medical question:

[Question]

[Reference Answer]

Requirements:

- 7-20 criteria items

- Categories: Essential/Important/Optional/Pitfall

- Self-contained descriptions

- JSON format output
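A prompt like this is typically paired with a check on what comes back. The Python sketch below fills in the template and validates a generator's JSON output against the stated requirements. It is an illustration under assumptions: the helper names are hypothetical, the field names (`title`, `description`, `weight`) are taken from the rubric example earlier in this article, and the category is assumed to appear as the prefix of each description.

```python
import json

ALLOWED_CATEGORIES = ("Essential", "Important", "Optional", "Pitfall")
REQUIRED_FIELDS = {"title", "description", "weight"}

TEMPLATE = """You are an expert rubric writer. Create evaluation criteria for judging response quality to this medical question:

{question}

{reference_answer}

Requirements:
- 7-20 criteria items
- Categories: Essential/Important/Optional/Pitfall
- Self-contained descriptions
- JSON format output"""


def build_generation_prompt(question: str, reference_answer: str) -> str:
    """Substitute the question and reference answer into the template."""
    return TEMPLATE.format(question=question, reference_answer=reference_answer)


def validate_rubric_output(raw: str) -> list:
    """Parse generator output and enforce the prompt's stated requirements."""
    items = json.loads(raw)
    if not isinstance(items, list):
        raise ValueError("rubric must be a JSON list")
    if not 7 <= len(items) <= 20:
        raise ValueError(f"expected 7-20 criteria, got {len(items)}")
    for item in items:
        missing = REQUIRED_FIELDS - item.keys()
        if missing:
            raise ValueError(f"criterion is missing fields: {sorted(missing)}")
        if not item["description"].startswith(ALLOWED_CATEGORIES):
            raise ValueError(f"unknown category in: {item['description']!r}")
    return items


sample = json.dumps([
    {"title": f"Criterion {i}", "description": "Essential Criteria: example", "weight": 5}
    for i in range(7)
])
print(len(validate_rubric_output(sample)))
```

Rubrics that fail validation could be regenerated or discarded; which policy works better is not covered by the article.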

Real-World Application Guide

Domain-Specific Tips

Medical Applications:

- Prioritize criteria for factual accuracy and safety warnings
- Include specific terminology checks (e.g., “Identifies Stage III pressure ulcer”)
- Add negative criteria for dangerous recommendations

Scientific Analysis:

- Focus on logical structure and formula application
- Include criteria for experimental validity checks
- Add units consistency checks

Common Pitfalls to Avoid

| Mistake | Better Approach |
|---|---|
| Too many criteria (>20) | Focus on 7-15 key quality dimensions |
| Complex weighting schemes | Use 3-4 importance levels maximum |
| Ignoring negative criteria | Include “Pitfall” items for common errors |
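The three pitfalls in the table lend themselves to an automated check. The Python sketch below flags each one for a rubric given as a list of dictionaries. `lint_rubric` is a hypothetical helper, the thresholds mirror the table, and it assumes Pitfall items are marked by a "Pitfall" prefix in `description`.

```python
def lint_rubric(items, max_items=20, max_weight_levels=4):
    """Return warnings for the common rubric design mistakes listed above."""
    warnings = []
    if len(items) > max_items:
        warnings.append(f"too many criteria: {len(items)} > {max_items}")
    # Distinct weight values stand in for "importance levels" (assumption).
    weight_levels = {item["weight"] for item in items}
    if len(weight_levels) > max_weight_levels:
        warnings.append(f"too many weight levels: {len(weight_levels)}")
    if not any(item["description"].startswith("Pitfall") for item in items):
        warnings.append("no Pitfall criteria for common errors")
    return warnings


rubric = [
    {"title": "Diagnosis Accuracy",
     "description": "Essential Criteria: Correctly identifies non-contrast helical CT",
     "weight": 5},
    {"title": "Safety Check",
     "description": "Important Criteria: Mentions no fasting required before procedure",
     "weight": 3},
]
print(lint_rubric(rubric))
```

For the two-item example the only warning raised is the missing Pitfall item, since it has just two weight levels and well under 20 criteria.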

Future Directions

- Dynamic Weight Learning: Automatically adjust criterion importance based on training feedback
- Multi-Modal Evaluation: Extend to evaluate code, diagrams, and mixed media outputs
- Curriculum Learning: Start with simple criteria, gradually introduce complex requirements

Conclusion

The RaR framework demonstrates that structured, human-readable evaluation criteria can effectively guide AI training in domains lacking clear ground truth. By making the reward mechanism transparent and interpretable, this approach:

- Achieves better alignment with human preferences
- Reduces dependence on massive preference datasets
- Maintains performance across different model sizes
- Enables more controllable AI development

This methodology particularly benefits high-stakes domains like healthcare and scientific research, where output quality directly impacts real-world outcomes.