2025 Q2 AI Trends Report: Smarter Models, Cheaper Compute, and the Rise of AI Agents

The artificial intelligence industry continues its rapid evolution in Q2 2025, with significant advancements in model capabilities, cost efficiency, and practical applications. This analysis draws exclusively from the Artificial Analysis State of AI Q2 2025 Highlights Report to deliver a clear, jargon-free overview of key developments.

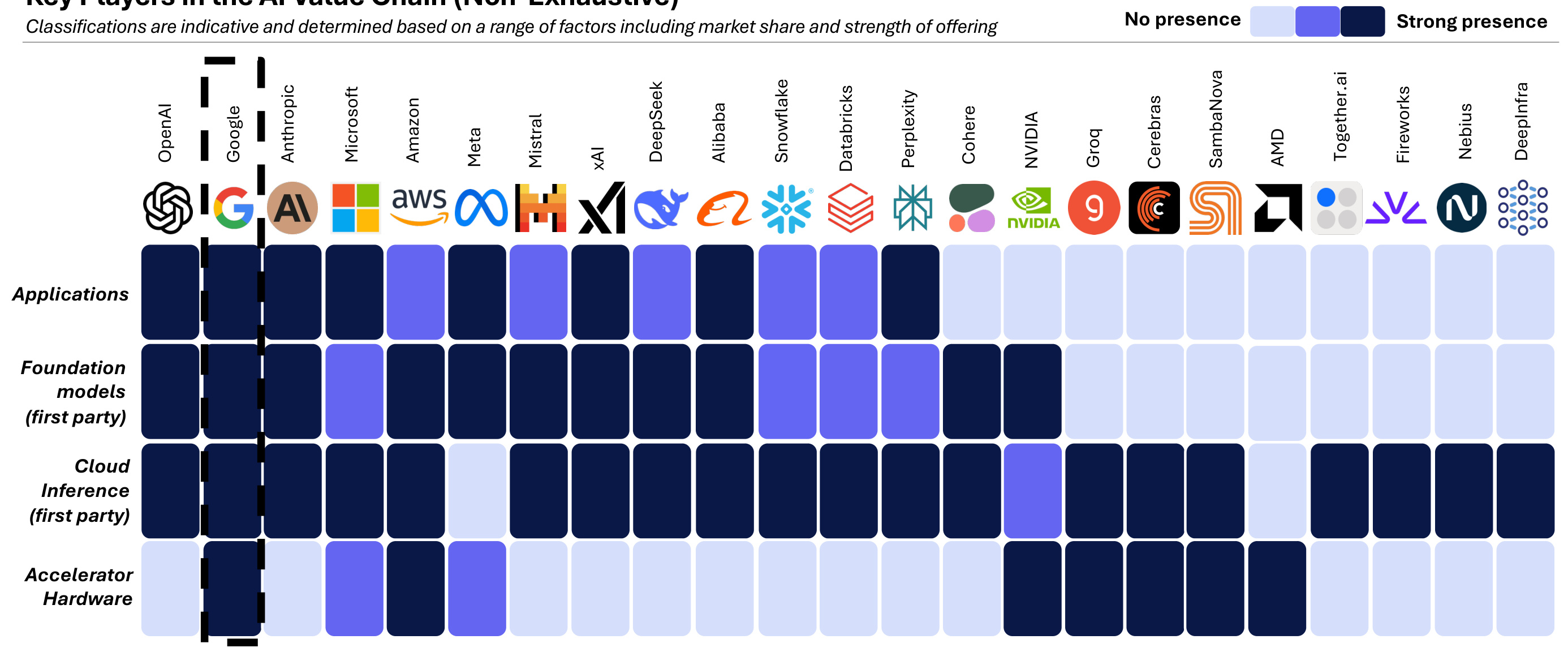

1. Industry Overview: Maturation and Market Shifts

The AI sector is entering a new phase of maturity, characterized by:

- Vertical Integration: Companies like Google maintain end-to-end control from hardware (TPUs) to consumer applications (Gemini).
- Global Competition: Chinese labs like DeepSeek and MiniMax now rival U.S. leaders in model performance.
- Hardware-Software Co-Design: System performance increasingly depends on optimized hardware-software stacks rather than raw compute power.

Key Players in the AI Ecosystem

| Company Type | Examples | Focus Area |
|---|---|---|
| Big Tech | Google, Microsoft, Meta | Full-stack AI solutions |
| Specialized AI Labs | OpenAI, xAI, DeepSeek | Cutting-edge model development |
| Infrastructure Providers | NVIDIA, AMD, Huawei | AI accelerators and chips |

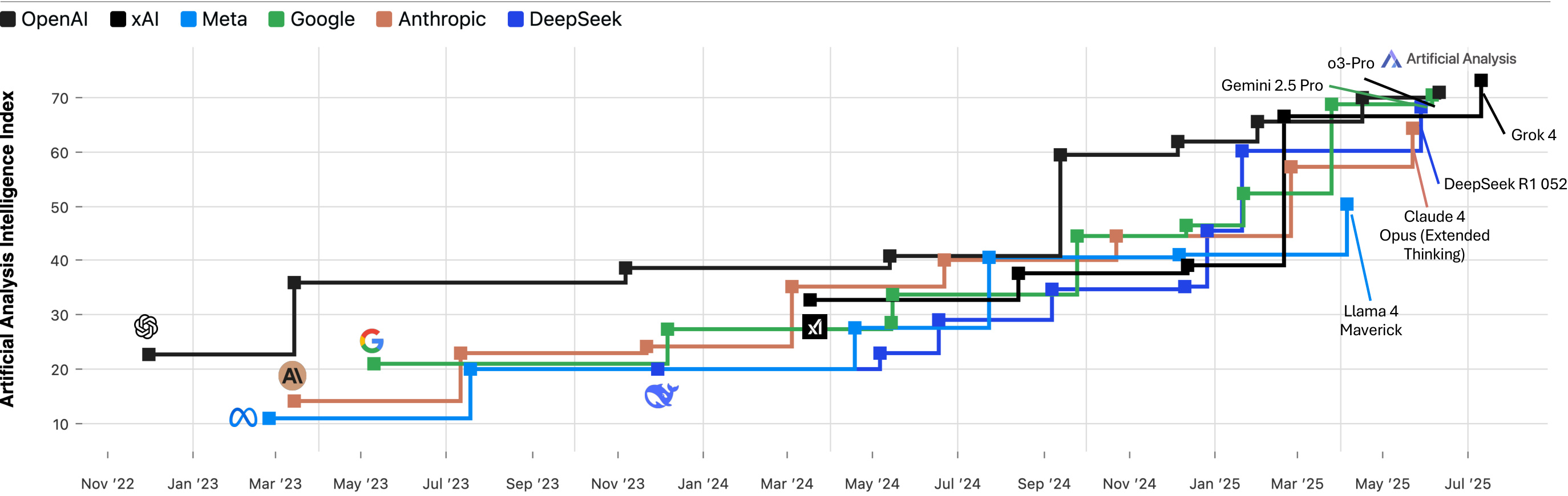

2. Language Models: xAI’s Breakthrough and Efficiency Gains

2.1 The Intelligence Leaderboard

Q2 2025 saw xAI’s Grok 4 claim the top spot in model intelligence, surpassing established leaders like OpenAI’s o3-pro and Google’s Gemini 2.5 Pro.

Top 5 Models by Intelligence (Artificial Analysis Index v2):

- xAI Grok 4 (73)
- OpenAI o3-pro (71)
- Google Gemini 2.5 Pro (70)
- DeepSeek R1 (68)
- Anthropic Claude 4 Opus (67)

Key Takeaways:

- Open-Source Progress: DeepSeek R1 demonstrates that open-weight models can compete with proprietary systems.
- Regional Balance: U.S. labs (xAI, OpenAI, Google) lead in reasoning models, while Chinese labs (DeepSeek, Alibaba) excel in cost-efficient architectures.

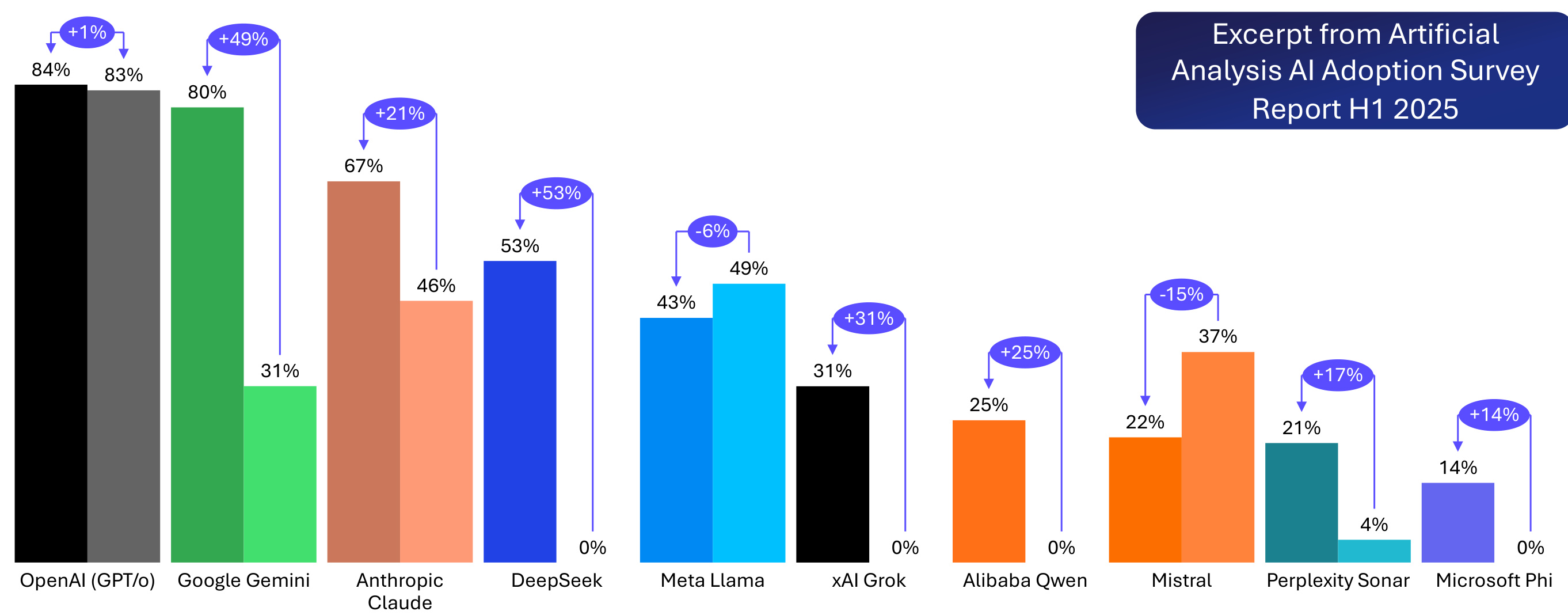

2.2 Market Demand Shifts

Data source: Artificial Analysis AI Adoption Survey (H1 2025, N=591)

Top 5 Most-Used/Considered LLM Families:

- OpenAI GPT
- Google Gemini
- DeepSeek
- Anthropic Claude
- Meta Llama

Note: Open-source models like Llama saw declining interest compared to 2024.

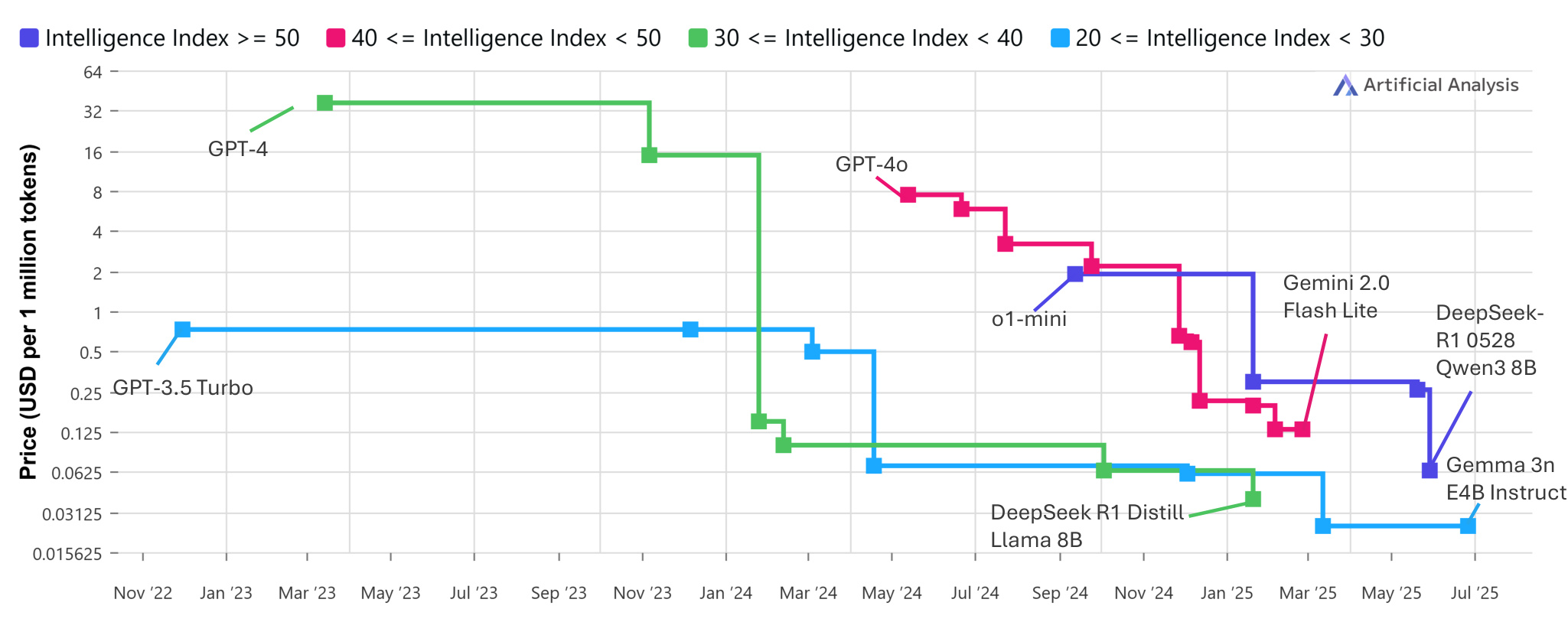

2.3 Cost Efficiency Breakthroughs

The price of frontier-level AI inference (models scoring ≥50 on the Intelligence Index) dropped 75% in Q2 2025, from 0.063 per million tokens.

Why This Matters:

- Commoditization: Capable AI is now accessible to smaller developers and businesses.
- Hidden Costs: While per-token costs fell, total compute demand rose due to longer reasoning chains (e.g., a single deep research query can cost >10x a basic GPT-4 query).
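The tension between cheaper tokens and longer reasoning chains comes down to simple arithmetic. The sketch below is illustrative only: the baseline price and the token counts are hypothetical placeholders, not figures from the report. Only the 75% price drop is taken from the text above.

```python
def query_cost(price_per_million_tokens: float, tokens: int) -> float:
    """Cost of one query in dollars; prices are quoted per million tokens."""
    return price_per_million_tokens * tokens / 1_000_000


def break_even_token_multiple(price_drop: float) -> float:
    """How many times more tokens a query can consume before a fractional
    price drop (0.75 means 75%) is fully offset."""
    return 1.0 / (1.0 - price_drop)


# Hypothetical inputs, chosen only to make the arithmetic easy to follow.
old_price = 4.00                      # dollars per million tokens (assumed)
new_price = old_price * (1 - 0.75)    # after the 75% drop cited above
basic_tokens = 2_000                  # assumed short chat-style query
reasoning_tokens = 40_000             # assumed long reasoning query

basic_before = query_cost(old_price, basic_tokens)
basic_after = query_cost(new_price, basic_tokens)
reasoning_after = query_cost(new_price, reasoning_tokens)

print(f"basic query at old price:      ${basic_before:.4f}")
print(f"basic query at new price:      ${basic_after:.4f}")
print(f"reasoning query at new price:  ${reasoning_after:.4f}")
print(f"break-even token multiple:     {break_even_token_multiple(0.75):.1f}x")
```

Under these assumptions, a 75% price cut is cancelled out once a query uses more than four times as many tokens, which is why total spend can rise even while per-token prices fall.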

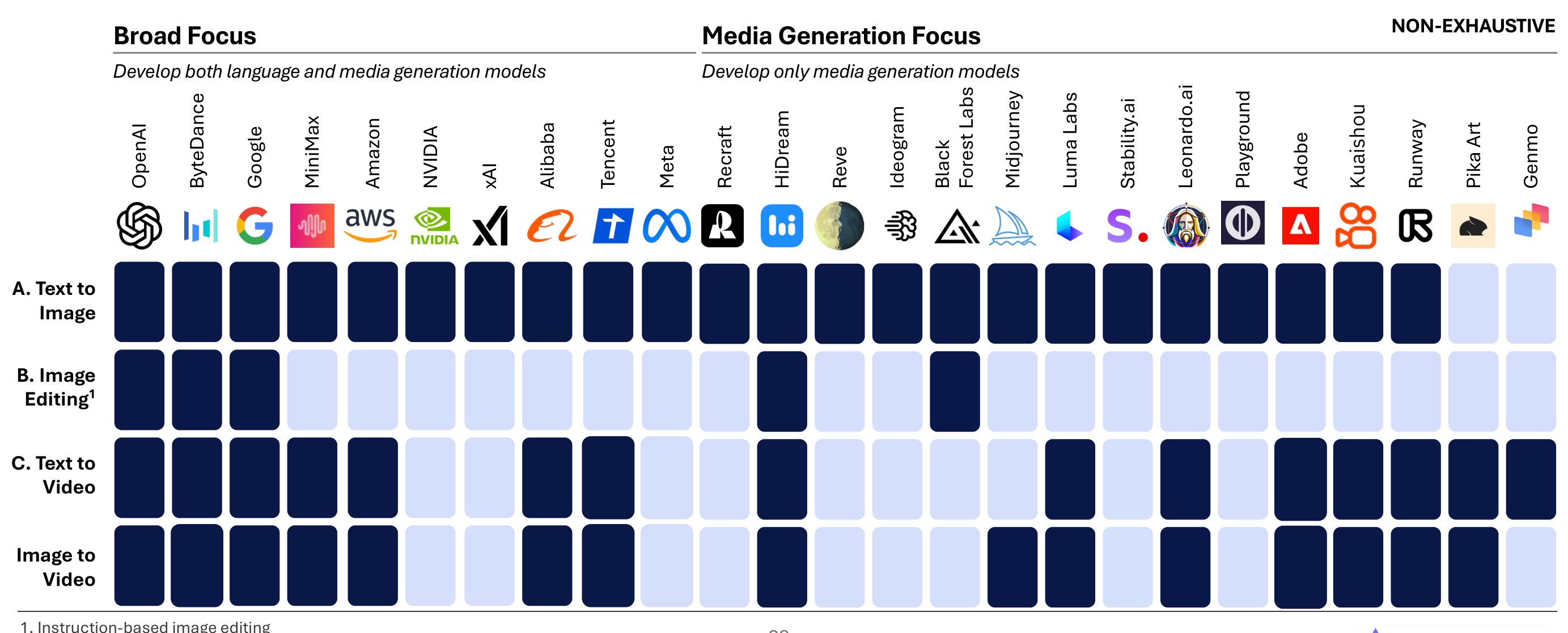

3. Image and Video Models: Quality Leaps and Chinese Leadership

3.1 Key Developments

| Trend | Example | Impact |
|---|---|---|
| Video models with native audio | Veo 3 (Google) | First mainstream model with audio |
| Quality breakthroughs | Seedance 1.0 surpasses Q1 leaders | New benchmarks in text-to-video |
| Image editing advancements | Kontext [max], HiDream-E1.1 | Enhanced precision in edits |
| Chinese leadership | ByteDance Seedream 3.0, HiDream Vivago 2.0 | Match U.S. models in quality |

Market Reality Check:

- Open-source video models lag behind proprietary alternatives (e.g., Alibaba’s Wan 2.1 ranks 16th on the Artificial Analysis leaderboard).
- Google remains the only U.S. lab with a state-of-the-art (SOTA) video model in Q2.

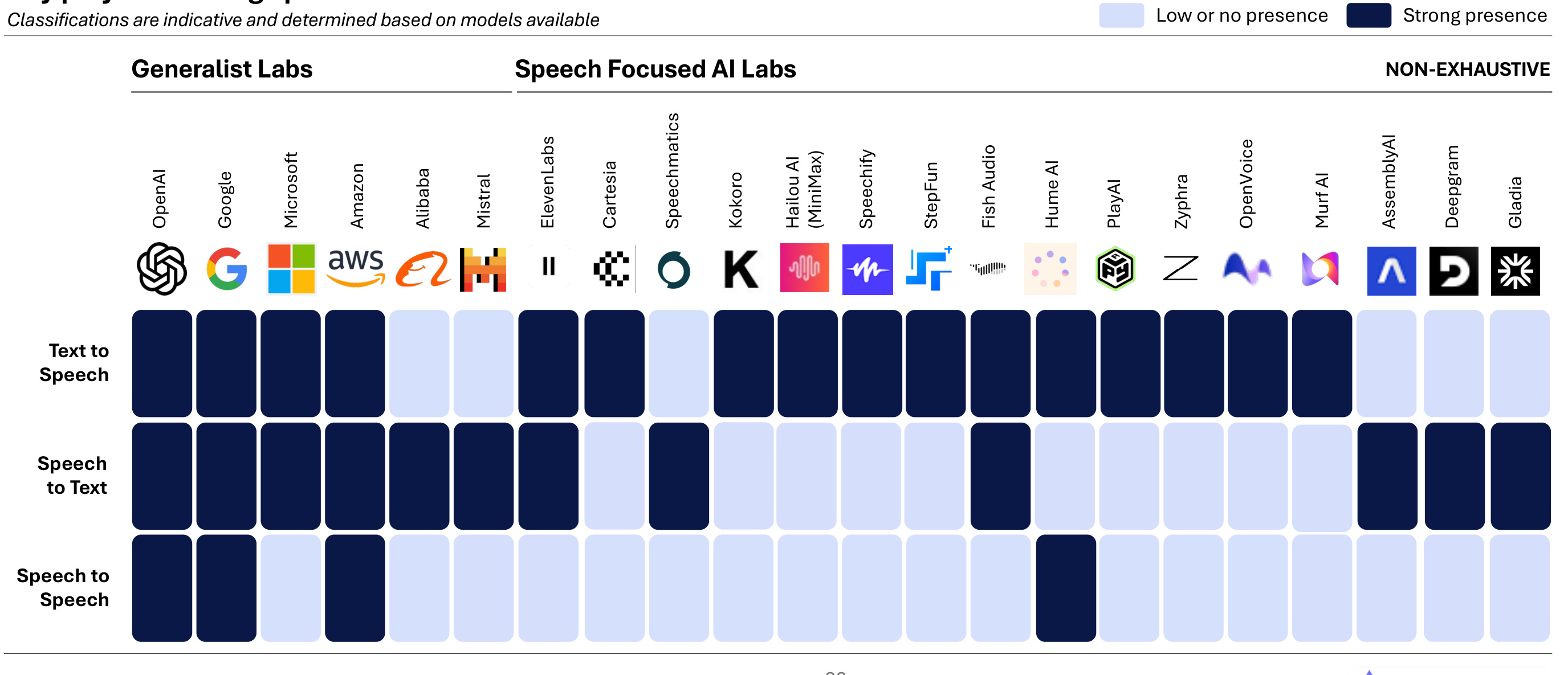

4. Speech Models: More Natural Voices, Lower Costs

4.1 Progress Highlights

- Realism: Models like Dia push toward human-like dialogue.
- Open-Source Cost Reduction: Lightweight models (e.g., Kokoro 82M, Sesame CSM 1B) slash synthesis costs.
- End-to-End Systems: Models like OpenAI’s GPT-4o and Google’s Gemini 2.0 Flash process speech directly without intermediate text conversion.

Industry Shift:

Pure-play speech companies are driving innovation, though generalist labs (OpenAI, Google) still dominate the stack.

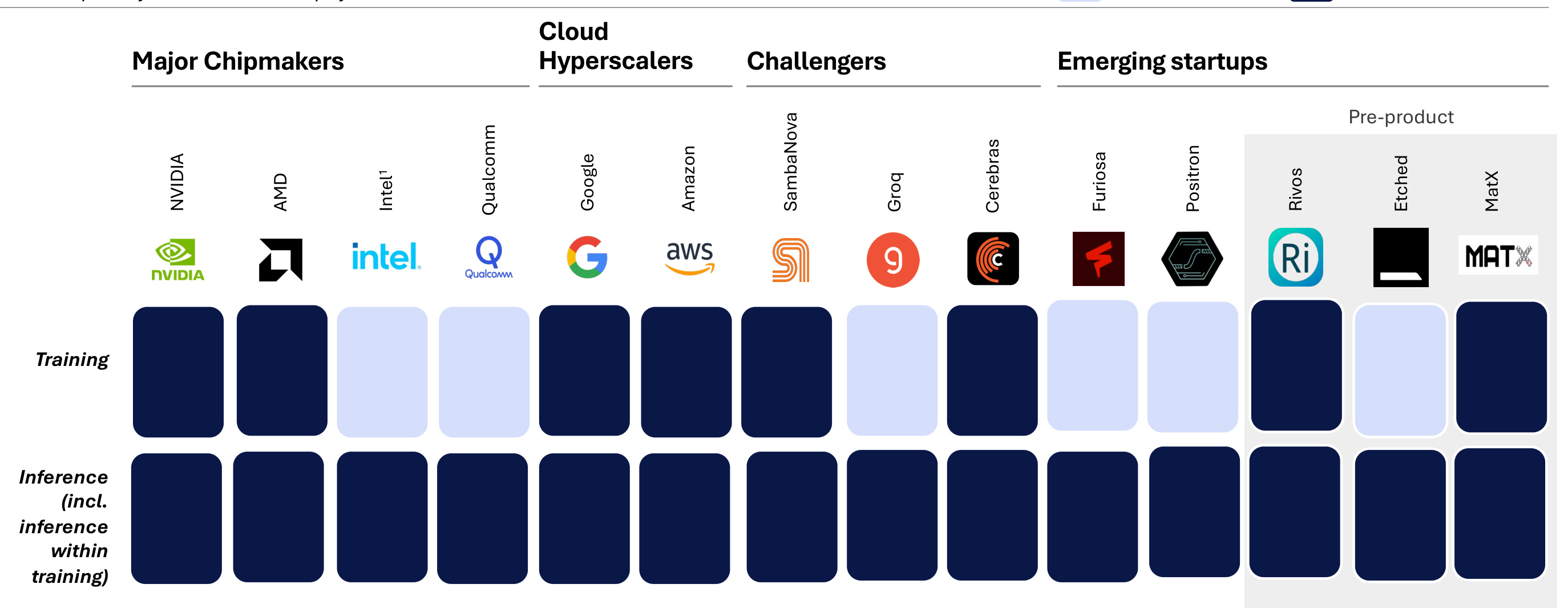

5. AI Accelerators: NVIDIA vs. The Field

5.1 Market Trends

| Trend | Details |
|---|---|
| Inference demand surges | 2025 will see 200K+ GB200 clusters |
| System performance focus | NVIDIA’s GB200 NVL72 links 72 Blackwell GPUs in a single rack-scale system |
| Distributed inference rise | Multi-node setups handle trillion-parameter models |
| U.S.-China tensions | U.S. considers H20 ban; Huawei develops alternatives |

Note: Intel has discontinued Falcon Shores, with no replacement until 2026.

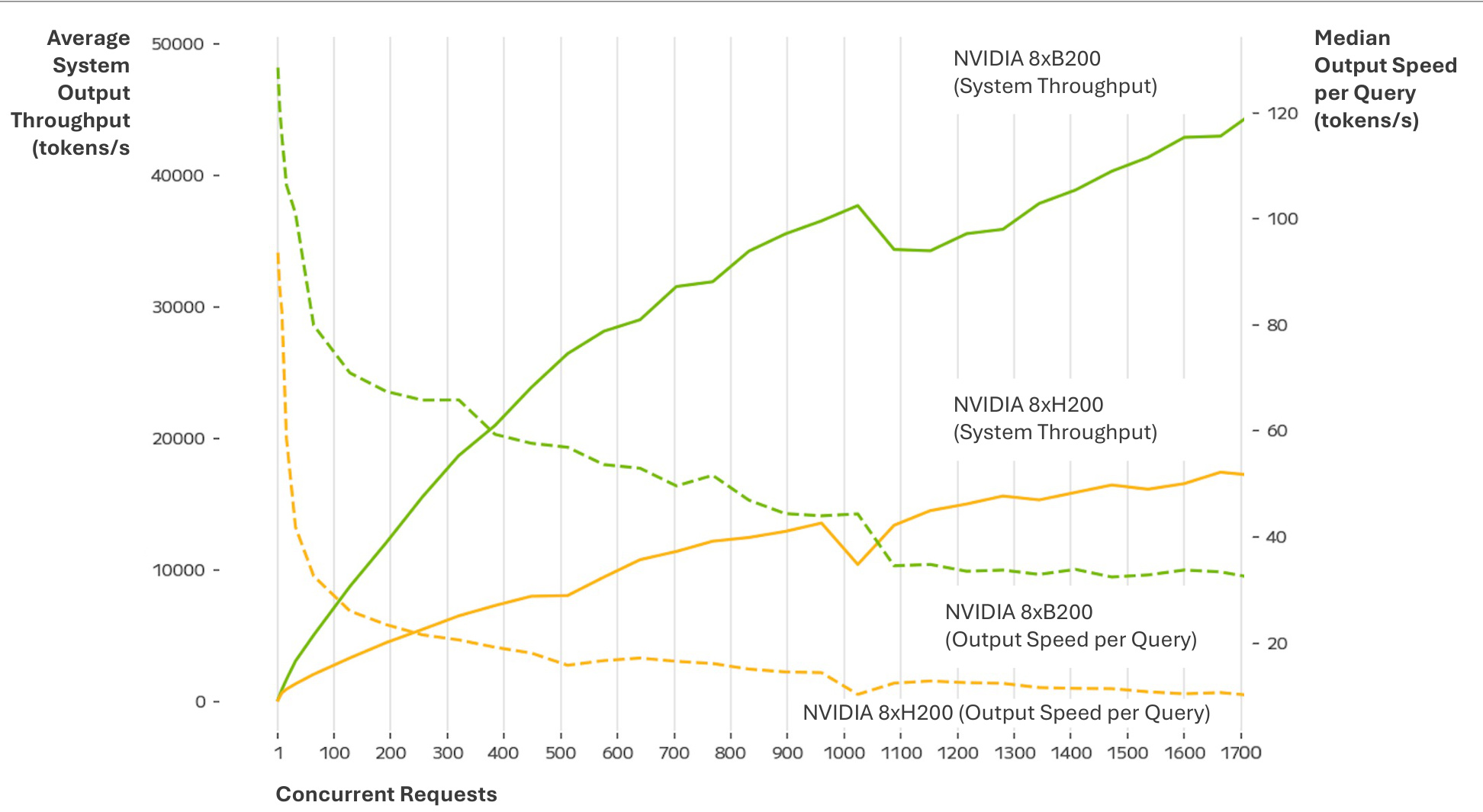

5.2 NVIDIA B200 vs. H200 Benchmark

Key Results (Llama 4 Maverick, FP8):

- 3x Throughput: B200 outputs ~39K tokens/sec vs. H200’s ~13K at 1,000 concurrent requests.
- Consistent Speed: B200 maintains 1.3x faster output at low loads and 3.5x faster under heavy load.
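The headline 3x figure follows directly from the two throughput numbers. The sketch below redoes that arithmetic and, as a simplifying assumption that is not part of the benchmark, splits system throughput evenly across concurrent requests to estimate what each request would see. The function names are illustrative.

```python
def throughput_ratio(new_tokens_per_sec: float, old_tokens_per_sec: float) -> float:
    """Speedup of one system over another at the same load."""
    return new_tokens_per_sec / old_tokens_per_sec


def per_request_speed(system_tokens_per_sec: float, concurrent_requests: int) -> float:
    """Tokens/sec seen by each request, ASSUMING throughput is shared evenly.
    Real serving stacks will not split capacity this uniformly."""
    return system_tokens_per_sec / concurrent_requests


# Approximate figures quoted above (Llama 4 Maverick, FP8).
b200_tokens_per_sec = 39_000
h200_tokens_per_sec = 13_000
concurrency = 1_000

ratio = throughput_ratio(b200_tokens_per_sec, h200_tokens_per_sec)
b200_each = per_request_speed(b200_tokens_per_sec, concurrency)
h200_each = per_request_speed(h200_tokens_per_sec, concurrency)

print(f"system throughput ratio: {ratio:.1f}x")
print(f"B200 per request: ~{b200_each:.0f} tokens/sec")
print(f"H200 per request: ~{h200_each:.0f} tokens/sec")
```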

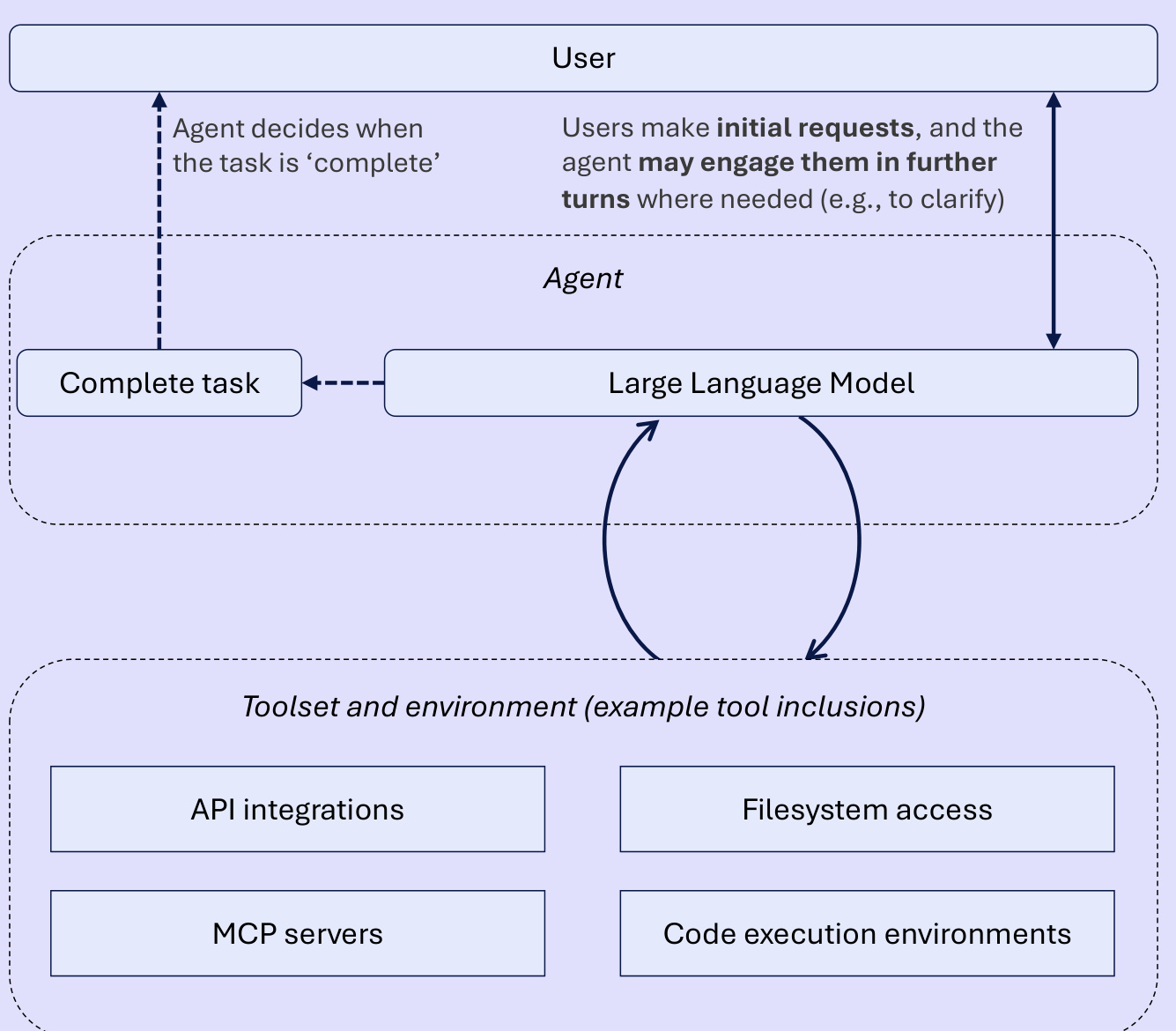

6. AI Agents: From Hype to Production

6.1 What Are Agents?

“Systems where LLMs dynamically direct their own processes and tool usage to accomplish tasks.”
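To make this definition concrete, here is a minimal sketch of the control loop it describes: at each step the model decides which tool to call next, or decides it is done. Everything in it is hypothetical. `stub_model` is a rule-based stand-in for a real LLM call, and `search` and `summarize` are toy functions rather than real integrations.

```python
from typing import Callable, Dict, List, Tuple


# Hypothetical tools: plain functions standing in for real integrations.
def search(query: str) -> str:
    return f"3 results for '{query}'"


def summarize(text: str) -> str:
    return f"summary of [{text}]"


TOOLS: Dict[str, Callable[[str], str]] = {"search": search, "summarize": summarize}


def stub_model(task: str, history: List[Tuple[str, str]]) -> Tuple[str, str]:
    """Stand-in for an LLM call; returns (action, argument).

    A real agent would send the task and history to a model and parse its
    reply. This stub only imitates that decision step with fixed rules.
    """
    if not history:
        return ("search", task)
    last_tool, last_output = history[-1]
    if last_tool == "search":
        return ("summarize", last_output)
    return ("finish", last_output)


def run_agent(task: str, max_steps: int = 5) -> str:
    """Loop until the model chooses to finish or the step budget runs out."""
    history: List[Tuple[str, str]] = []
    for _ in range(max_steps):
        action, argument = stub_model(task, history)
        if action == "finish":
            return argument
        output = TOOLS[action](argument)   # run the tool the model picked
        history.append((action, output))   # feed the result back next step
    return "stopped: step limit reached"


print(run_agent("Q2 2025 GPU benchmarks"))
```

The step limit matters in practice: because the model chooses its own path, the number of calls (and therefore the cost) is not known in advance.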

6.2 Why Agents Matter

| Benefit | Example Use Case |
|---|---|
| Dynamic planning | Coding agents debug complex repositories |
| Cross-system integration | Sales agents sync with CRM tools |
| Error recovery | Research agents verify conflicting sources |

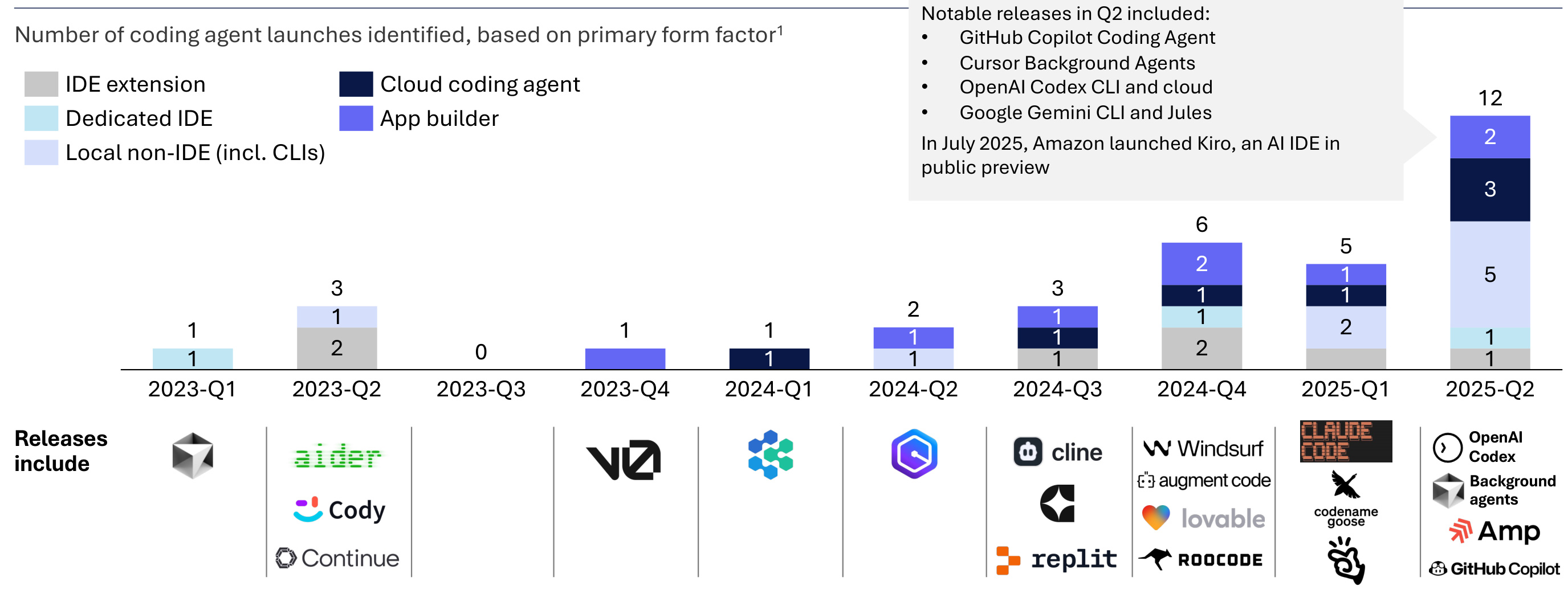

6.3 2025 Agent Trends

- Coding Agents Dominate: GitHub Copilot and Cursor lead, with Chinese tools like Kimi gaining traction.
- Cost Challenges: Complex agent queries can cost $28+ per task.
- Training Focus: Labs prioritize long-horizon tool use (e.g., agents that plan multi-step workflows).

7. Frequently Asked Questions (FAQ)

7.1 What’s driving AI cost changes?

- Software Efficiency: Smaller, optimized models (e.g., DeepSeek R1 0528) cut costs.
- Hardware Advances: NVIDIA B200 boosts throughput.
- Paradox: Cheaper per-token costs are offset by longer reasoning chains.

7.2 Are open-source models catching up?

Yes. DeepSeek R1 scores 68 on the Intelligence Index, within five points of the top proprietary model (Grok 4 at 73), showing that open-weight models can compete.

7.3 What’s the biggest barrier to AI adoption?

Cost Management: While per-token prices fell, complex agent workflows and multi-step tasks increase total compute needs.

7.4 Which regions lead in AI innovation?

- U.S.: Leads in reasoning models (xAI, OpenAI, Google).
- China: Dominates open-source efficiency (DeepSeek, MiniMax) and media generation (ByteDance).

7.5 How are AI agents being used today?

- Coding: 58% of developers use or plan to use Cursor (source: Q2 survey).
- Customer Support: Real-time voice/text agents with CRM integration.
- Research: Agents chain queries to synthesize answers from multiple sources.

8. Conclusion

Q2 2025 highlights a maturing AI industry where:

- Models are smarter and cheaper to run.
- Hardware focuses on system-level optimization.
- Agents transition from prototypes to production tools.

As Chinese labs close the innovation gap and NVIDIA faces new competition, the next phase of AI will likely center on practical deployment rather than raw capability.