Introduction: Why Qwen3-Omni is AI’s “All-Round Champion”

Remember traditional AI models that could only process text? They were like musicians who mastered only one instrument—skilled but limited in expression. Now, Alibaba’s Qwen team has introduced Qwen3-Omni, which operates like a full symphony orchestra—capable of simultaneously processing text, images, audio, and video while responding in both text and natural speech.

“This isn’t simple feature stacking—it’s true multimodal fusion.” — The Qwen technical team describes their innovation.

Imagine telling the model: “Watch this video, tell me what the people are saying, and analyze the background music style.” Qwen3-Omni not only understands your request but genuinely comprehends the video content to provide integrated responses—something previously unimaginable with AI models.

Where does Qwen3-Omni’s breakthrough lie?

- End-to-end omnimodal processing without intermediate conversion steps
- Real-time streaming responses for natural, human-like conversations
- Support for 119 text languages, 19 speech input languages, and 10 speech output languages
- Open-source SOTA (state-of-the-art) results on 32 of 36 audio-video benchmarks

Let’s explore this exciting AI model in depth.

Model Overview: Qwen3-Omni’s Technical Core

Core Features—Not Just “More” but “Integrated”

Qwen3-Omni’s standout feature is its genuine multimodal fusion capability. Unlike models that process different modalities separately then combine results, Qwen3-Omni was designed from the ground up for multimodality.

Key Features:

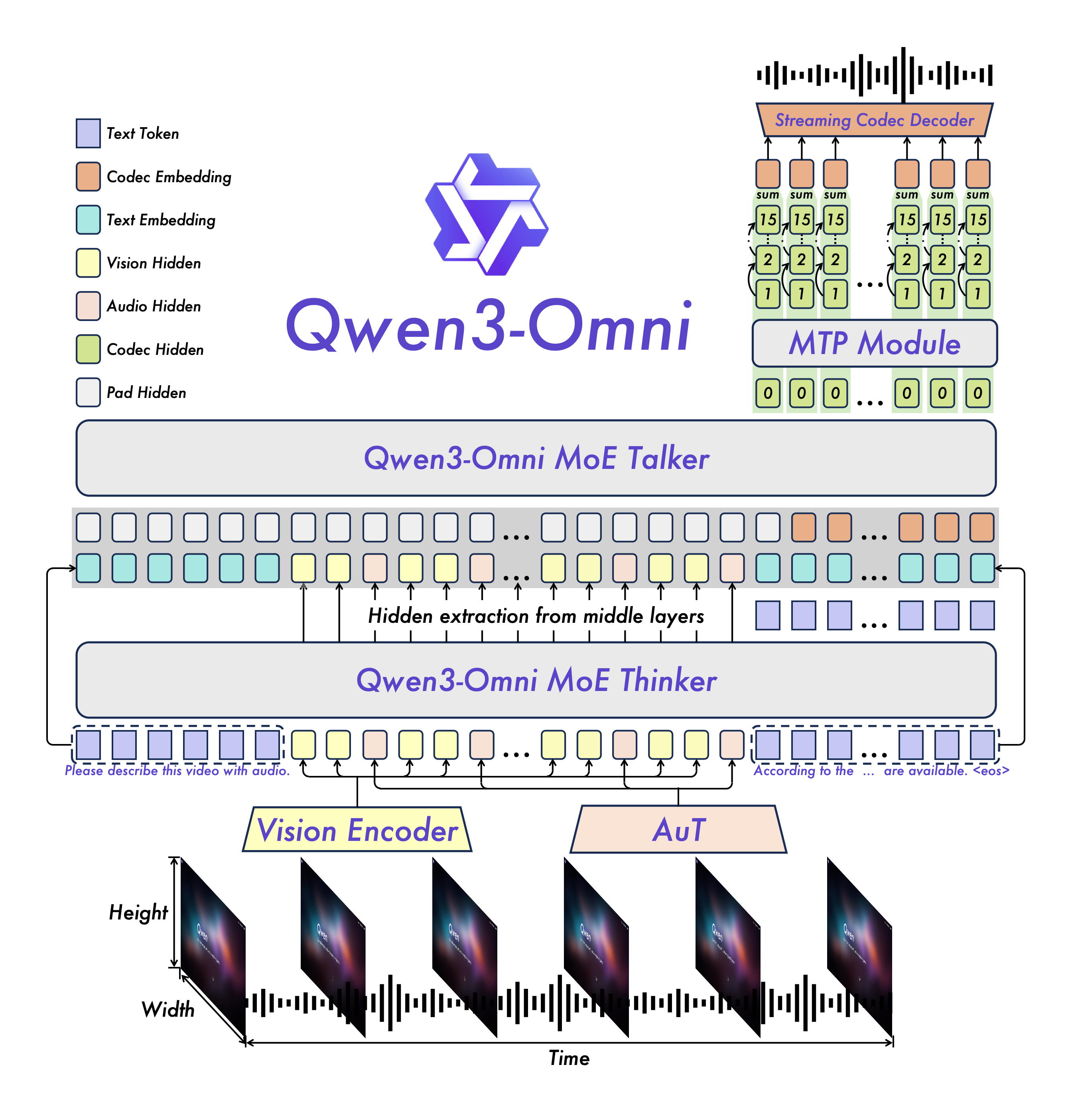

The diagram shows Qwen3-Omni’s innovative architecture, where its Thinker-Talker design enables efficient multimodal processing.

Innovative Architecture: Thinker-Talker Design Explained

Qwen3-Omni uses a Thinker-Talker design built on a MoE (Mixture of Experts) architecture:

- Thinker: Responsible for understanding multimodal inputs and deep reasoning
- Talker: Generates natural, fluent speech output

This division of labor resembles human brain specialization—left brain for logical thinking, right brain for creative expression. In practice, this means the model can deeply analyze complex problems while preparing natural verbal responses.

🔍 Technical Deep Dive:

The Thinker component builds on advanced Transformer architecture, specially optimized for cross-modal information processing. The Talker component uses a multi-codebook design that significantly reduces speech generation latency, enabling real-time conversations.
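To make the hand-off concrete, the following toy sketch mimics the streaming relationship between the two components in plain Python. It is not Qwen3-Omni’s implementation: `toy_thinker`, `toy_talker`, and the fixed `frames_per_token` ratio are hypothetical stand-ins, and the frame ids are placeholders rather than real codec output. It only illustrates why latency drops when speech generation can start on the first text token instead of waiting for the full answer.

```python
from typing import Iterator, List, Tuple


def toy_thinker(prompt: str) -> Iterator[str]:
    """Hypothetical stand-in for the Thinker: yields text tokens one at a time."""
    for token in f"Echo: {prompt}".split():
        yield token


def toy_talker(tokens: Iterator[str], frames_per_token: int = 2) -> Iterator[Tuple[str, List[int]]]:
    """Hypothetical stand-in for the Talker: emits placeholder audio frames per token.

    Frames for a token are produced as soon as that token arrives, so output
    can begin before the upstream generator has finished.
    """
    frame_id = 0
    for token in tokens:
        frames = list(range(frame_id, frame_id + frames_per_token))
        frame_id += frames_per_token
        yield token, frames


# Consume the whole stream; a real client would play frames as they arrive.
stream = list(toy_talker(toy_thinker("hello world")))
for token, frames in stream:
    print(f"{token!r} -> frames {frames}")
```

Both functions are generators, so pulling the first item from `toy_talker` only advances `toy_thinker` by one token.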

Getting Started: Using Qwen3-Omni from Scratch

Model Selection Guide: Which Version to Choose?

Qwen3-Omni offers three main versions for different needs:

- Qwen3-Omni-30B-A3B-Instruct (Complete Version)
  - Includes both Thinker and Talker components
  - Supports audio-video input, text and speech output
  - Ideal for full interactive experiences
- Qwen3-Omni-30B-A3B-Thinking (Reasoning Version)
  - Contains only the Thinker component
  - Focuses on deep reasoning and analysis
  - Suitable for complex reasoning tasks
- Qwen3-Omni-30B-A3B-Captioner (Description Version)
  - Specially optimized for audio description
  - Generates detailed, low-hallucination audio captions
  - Ideal for professional scenarios like media content analysis

💡 Selection Advice:

- Choose the Instruct version for complete voice interaction experiences
- The Thinking version is more efficient for text analysis and reasoning
- The Captioner version is best for professional audio description needs
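The advice above can be captured in a small helper. This is a sketch, not an official API: `choose_checkpoint` is a hypothetical function, and the `Qwen/` prefix on the Thinking and Captioner names is assumed by analogy with the Instruct repository used later in this article.

```python
def choose_checkpoint(needs_speech_output: bool = False, audio_captioning: bool = False) -> str:
    """Map the selection advice to a checkpoint name (hypothetical helper)."""
    if audio_captioning:
        # Dedicated audio description model
        return "Qwen/Qwen3-Omni-30B-A3B-Captioner"
    if needs_speech_output:
        # Only the Instruct version ships the Talker component
        return "Qwen/Qwen3-Omni-30B-A3B-Instruct"
    # Text-only output: the Thinker-only version is enough
    return "Qwen/Qwen3-Omni-30B-A3B-Thinking"


print(choose_checkpoint(needs_speech_output=True))
```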

Environment Setup: Step-by-Step Installation

Method 1: Using Docker (Recommended for Beginners)

    # Pull the official Docker image
    docker pull qwenllm/qwen3-omni:3-cu124

    # Run the container (assuming the working directory is /home/user/qwen)
    LOCAL_WORKDIR=/home/user/qwen
    HOST_PORT=8901
    CONTAINER_PORT=80
    docker run --gpus all --name qwen3-omni \
        -p $HOST_PORT:$CONTAINER_PORT \
        --mount type=bind,source=$LOCAL_WORKDIR,target=/data/shared/Qwen3-Omni \
        --shm-size=4gb \
        -it qwenllm/qwen3-omni:3-cu124

Method 2: Manual Installation (For Experienced Users)

    # Create a new Python environment (highly recommended)
    conda create -n qwen3-omni python=3.10
    conda activate qwen3-omni

    # Install Transformers (requires source installation)
    pip uninstall transformers -y
    pip install git+https://github.com/huggingface/transformers
    pip install accelerate

    # Install multimedia processing tools
    pip install qwen-omni-utils -U

    # Install FlashAttention 2 (optimizes GPU memory)
    pip install -U flash-attn --no-build-isolation

    # Ensure the system has ffmpeg
    sudo apt update && sudo apt install ffmpeg

🚨 Common Installation FAQs:

Q: Why install Transformers from source?

A: Because Qwen3-Omni support was recently merged, and the PyPI package isn’t released yet. Source installation ensures you get the latest features.

Q: Is FlashAttention 2 mandatory?

A: Not mandatory but highly recommended. It significantly reduces GPU memory usage, especially for long video processing.
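Before loading the model, it can help to confirm that the pieces from Method 2 are actually visible to Python. A minimal check using only the standard library is sketched below; `check_environment` is a hypothetical helper, and the module names are assumed to match the packages installed above (`flash_attn` for flash-attn, `qwen_omni_utils` for qwen-omni-utils).

```python
import importlib.util
import shutil
from typing import Dict


def check_environment() -> Dict[str, bool]:
    """Report which prerequisites can be found (hypothetical helper)."""
    return {
        # ffmpeg must be on PATH for audio and video decoding
        "ffmpeg": shutil.which("ffmpeg") is not None,
        # find_spec returns None when a top-level module is not installed
        "transformers": importlib.util.find_spec("transformers") is not None,
        "qwen_omni_utils": importlib.util.find_spec("qwen_omni_utils") is not None,
        "flash_attn": importlib.util.find_spec("flash_attn") is not None,
    }


status = check_environment()
for name, ok in status.items():
    print(f"{name:16} {'found' if ok else 'MISSING'}")
```

A missing `flash_attn` is not fatal, since FlashAttention 2 is optional; the other three are needed for the examples that follow.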

First Example: Making Qwen3-Omni “See” and “Hear”

Let’s start with a simple example to experience Qwen3-Omni’s multimodal capabilities:

    import soundfile as sf
    from transformers import Qwen3OmniMoeForConditionalGeneration, Qwen3OmniMoeProcessor
    from qwen_omni_utils import process_mm_info

    # Initialize model and processor
    model = Qwen3OmniMoeForConditionalGeneration.from_pretrained(
        "Qwen/Qwen3-Omni-30B-A3B-Instruct",
        dtype="auto",
        device_map="auto",
        attn_implementation="flash_attention_2",
    )
    processor = Qwen3OmniMoeProcessor.from_pretrained("Qwen/Qwen3-Omni-30B-A3B-Instruct")

    # Build multimodal conversation
    conversation = [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen3-Omni/demo/cars.jpg"},
                {"type": "audio", "audio": "https://qianwen-res.oss-cn-beijing.aliyuncs.com/Qwen3-Omni/demo/cough.wav"},
                {"type": "text", "text": "What can you see and hear? Answer in one short sentence."},
            ],
        },
    ]

    # Process input
    text = processor.apply_chat_template(conversation, add_generation_prompt=True, tokenize=False)
    audios, images, videos = process_mm_info(conversation, use_audio_in_video=True)
    inputs = processor(
        text=text, audio=audios, images=images, videos=videos,
        return_tensors="pt", padding=True, use_audio_in_video=True,
    )
    inputs = inputs.to(model.device).to(model.dtype)

    # Generate response
    text_ids, audio = model.generate(
        **inputs,
        speaker="Ethan",
        thinker_return_dict_in_generate=True,
        use_audio_in_video=True,
    )

    # Process output: decode only the newly generated tokens
    text_response = processor.batch_decode(
        text_ids.sequences[:, inputs["input_ids"].shape[1]:],
        skip_special_tokens=True,
        clean_up_tokenization_spaces=False,
    )
    print("Text response:", text_response)

    if audio is not None:
        sf.write("response.wav", audio.reshape(-1).detach().cpu().numpy(), samplerate=24000)
        print("Audio response saved as response.wav")

🎯 Expected Output:

The model will analyze the provided image (car picture) and audio (coughing sound), then generate a response like:

    Text response: ['I see a lineup of cars and hear someone coughing.']

It will also create an audio file speaking this sentence naturally.
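If soundfile is unavailable, a mono waveform can also be written with Python’s built-in `wave` module. The sketch below assumes the audio has already been flattened to floats in the range [-1, 1] and uses the same 24 kHz sample rate as the example above; `write_wav_pcm16` is a hypothetical helper, and a generated tone stands in for model output.

```python
import math
import os
import struct
import tempfile
import wave
from typing import Iterable


def write_wav_pcm16(path: str, samples: Iterable[float], sample_rate: int = 24000) -> int:
    """Write mono float samples as 16-bit PCM and return the frame count."""
    pcm = bytearray()
    count = 0
    for sample in samples:
        # Clip to [-1, 1] (assumed input range) before scaling to int16
        clipped = max(-1.0, min(1.0, float(sample)))
        pcm += struct.pack("<h", int(round(clipped * 32767)))
        count += 1
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)        # mono
        wav.setsampwidth(2)        # 2 bytes = 16-bit samples
        wav.setframerate(sample_rate)
        wav.writeframes(bytes(pcm))
    return count


# Half a second of a 440 Hz tone as a stand-in for the model's audio output
tone = [0.3 * math.sin(2 * math.pi * 440 * n / 24000) for n in range(12000)]
demo_path = os.path.join(tempfile.gettempdir(), "qwen3_omni_tone_demo.wav")
frames_written = write_wav_pcm16(demo_path, tone)
print(f"Wrote {frames_written} frames to {demo_path}")
```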

Use Cases: What Can Qwen3-Omni Actually Do?

Comprehensive Audio Processing Capabilities

Qwen3-Omni’s audio processing abilities are impressive. Here are practical applications:

1. Speech Recognition and Translation

    # Example: Multilingual speech recognition and translation
    messages = [
        {
            "role": "user",
            "content": [
                {"type": "audio", "audio": "french_speech.wav"},
                {"type": "text", "text": "Transcribe this French audio and translate it into English."},
            ],
        }
    ]

Practical Use: Real-time international meeting transcription, language learning assistant.

2. Music Analysis

    # Example: Music style analysis
    messages = [
        {
            "role": "user",
            "content": [
                {"type": "audio", "audio": "jazz_music.mp3"},
                {"type": "text", "text": "Analyze the musical style, instruments, and emotional tone of this piece."},
            ],
        }
    ]

Practical Use: Music education, content creation assistance.

Visual Understanding in Practice

1. Complex Image Comprehension

Qwen3-Omni doesn’t just recognize objects—it understands complex scenes and relationships:

    # Example: Image reasoning
    messages = [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": "complex_diagram.png"},
                {"type": "text", "text": "Explain the process shown in this diagram and identify potential bottlenecks."},
            ],
        }
    ]

2. Video Content Analysis

For video input, Qwen3-Omni understands temporal sequences:

    # Example: Video action recognition
    messages = [
        {
            "role": "user",
            "content": [
                {"type": "video", "video": "sports_clip.mp4"},
                {"type": "text", "text": "Describe the actions in this video and suggest improvements to the technique."},
            ],
        }
    ]

Audio-Visual Fusion Applications

Most exciting is Qwen3-Omni’s audio-visual fusion capability:

    # Example: Movie scene analysis
    messages = [
        {
            "role": "user",
            "content": [
                {"type": "video", "video": "movie_scene.mp4"},
                {"type": "text", "text": "Analyze how the music complements the visual storytelling in this scene."},
            ],
        }
    ]
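All the examples in this section share one message shape: a list of typed media entries followed by a text entry. A small helper can build that shape from file names. This is a sketch under assumptions: `build_user_message` and its extension table are hypothetical, and the media type is guessed from the file extension only.

```python
import os
from typing import Any, Dict, List
from urllib.parse import urlparse

# Hypothetical extension table; extend it for the formats you actually use.
_EXT_TO_TYPE = {
    ".jpg": "image", ".jpeg": "image", ".png": "image",
    ".wav": "audio", ".mp3": "audio", ".flac": "audio",
    ".mp4": "video", ".mov": "video", ".mkv": "video",
}


def build_user_message(prompt: str, *media: str) -> Dict[str, Any]:
    """Build one user turn: media entries first, then the text prompt."""
    content: List[Dict[str, str]] = []
    for item in media:
        # urlparse lets the same code handle local paths and URLs
        ext = os.path.splitext(urlparse(item).path)[1].lower()
        kind = _EXT_TO_TYPE.get(ext)
        if kind is None:
            raise ValueError(f"Cannot infer media type from {item!r}")
        content.append({"type": kind, kind: item})
    content.append({"type": "text", "text": prompt})
    return {"role": "user", "content": content}


messages = [
    build_user_message(
        "Analyze how the music complements the visual storytelling in this scene.",
        "movie_scene.mp4",
    )
]
print(messages)
```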

Performance Evaluation: How Capable is Qwen3-Omni Really?

Benchmark Results Deep Analysis

Qwen3-Omni performs excellently across multiple benchmarks. Key data:

Text Understanding Capability Comparison

Analysis: In mathematical reasoning (AIME25), Qwen3-Omni significantly outperforms GPT-4o, demonstrating powerful logical reasoning capabilities.

Speech Recognition Accuracy Comparison

Multilingual ASR (Automatic Speech Recognition) Word Error Rate (WER) comparison:

💡 Highlight: Qwen3-Omni approaches or exceeds specialist ASR models in Chinese and English tasks, showing robust speech recognition.
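For reference, WER counts the word-level substitutions S, deletions D, and insertions I needed to turn the model’s transcript into the reference, divided by the number of reference words N: WER = (S + D + I) / N. A minimal sketch of that computation follows. It uses plain whitespace tokenization, whereas published benchmarks typically normalize text first, so its numbers are not directly comparable to reported scores.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between the processed prefix of ref and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr[j] = min(substitution, deletion, insertion)
        prev = curr
    return prev[-1] / len(ref)


# One substitution ("sat" -> "sit") and one deletion ("the") over 6 reference words
wer = word_error_rate("the cat sat on the mat", "the cat sit on mat")
print(f"WER = {wer:.3f}")
```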

Practical Performance Testing

How does it perform in real usage? We tested GPU memory requirements for different video lengths:

🔧 Optimization Tip: For long video processing, use the Thinking version or enable CPU offloading.

Practical Deployment: Putting Qwen3-Omni to Work

Local Web UI Deployment Guide

Want a user-friendly interface? Qwen3-Omni offers simple web deployment:

    # Install Gradio dependencies
    pip install gradio==5.44.1 gradio_client==1.12.1 soundfile==0.13.1

    # Launch web demo (vLLM backend for better performance)
    python web_demo.py -c Qwen/Qwen3-Omni-30B-A3B-Instruct

    # Or use Transformers backend (more features)
    python web_demo.py -c Qwen/Qwen3-Omni-30B-A3B-Instruct --use-transformers --generate-audio

Visit http://127.0.0.1:8901 to interact with the model through a graphical interface.

Production Environment Recommendations

For high-concurrency production environments, use vLLM:

    # Start vLLM service (4 GPU configuration example)
    vllm serve Qwen/Qwen3-Omni-30B-A3B-Instruct \
        --port 8901 \
        --host 0.0.0.0 \
        --dtype bfloat16 \
        --max-model-len 65536 \
        -tp 4

API call example:

    curl http://localhost:8901/v1/chat/completions \
        -H "Content-Type: application/json" \
        -d '{
            "messages": [
                {"role": "system", "content": "You are a helpful assistant."},
                {"role": "user", "content": [
                    {"type": "image_url", "image_url": {"url": "https://example.com/image.jpg"}},
                    {"type": "text", "text": "Describe this image."}
                ]}
            ]
        }'
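The same request can be sent from Python with only the standard library. The sketch below mirrors the curl call; `build_chat_payload` and `post_chat` are hypothetical helpers, the optional `model` field is an assumption for servers that require it, and the network call is left commented out because it needs a running server.

```python
import json
import urllib.request
from typing import Any, Dict, Optional


def build_chat_payload(image_url: str, prompt: str, model: Optional[str] = None) -> Dict[str, Any]:
    """Build the JSON body used in the curl example (hypothetical helper)."""
    payload: Dict[str, Any] = {
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": [
                {"type": "image_url", "image_url": {"url": image_url}},
                {"type": "text", "text": prompt},
            ]},
        ]
    }
    if model is not None:
        payload["model"] = model  # assumption: only needed if the server asks for it
    return payload


def post_chat(payload: Dict[str, Any], base_url: str = "http://localhost:8901",
              timeout: float = 120.0) -> Dict[str, Any]:
    """POST the payload to the chat completions endpoint and parse the JSON reply."""
    request = urllib.request.Request(
        f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


payload = build_chat_payload("https://example.com/image.jpg", "Describe this image.")
print(json.dumps(payload, indent=2))
# reply = post_chat(payload)  # requires the vLLM service started above
```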

Usage Tips and Best Practices

Optimizing Prompt Design

Qwen3-Omni is highly sensitive to prompts. Well-designed prompts significantly improve results:

Audio-Visual Interaction Prompts

    system_prompt = """You are Qwen-Omni, a smart voice assistant. You are communicating with the user.
    Interact with users using short, brief, straightforward language, maintaining a natural tone.
    Your output must consist only of the spoken content you want the user to hear.
    Do not include any descriptions of actions, emotions, sounds, or voice changes."""
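One way to apply this prompt is to place it in a system turn ahead of the user turn. The sketch below assumes the system message accepts the same typed-content list as user messages; `with_system_prompt` is a hypothetical helper and `question.wav` is a placeholder file name.

```python
from typing import Any, Dict, List

SYSTEM_PROMPT = (
    "You are Qwen-Omni, a smart voice assistant. You are communicating with the user.\n"
    "Interact with users using short, brief, straightforward language, maintaining a natural tone.\n"
    "Your output must consist only of the spoken content you want the user to hear.\n"
    "Do not include any descriptions of actions, emotions, sounds, or voice changes."
)


def with_system_prompt(system_prompt: str, user_content: List[Dict[str, str]]) -> List[Dict[str, Any]]:
    """Return a two-turn conversation: system prompt first, then the user turn."""
    return [
        # Assumption: system content uses the same typed-entry format as user content
        {"role": "system", "content": [{"type": "text", "text": system_prompt}]},
        {"role": "user", "content": user_content},
    ]


conversation = with_system_prompt(
    SYSTEM_PROMPT,
    [{"type": "audio", "audio": "question.wav"}],  # placeholder audio file
)
print([turn["role"] for turn in conversation])
```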

Chain-of-Thought Prompting Techniques

For complex tasks, guide the model through step-by-step reasoning:

    messages = [
        {
            "role": "user",
            "content": [
                {"type": "image", "image": "math_problem.jpg"},
                {"type": "text", "text": "Solve this math problem step by step. First, describe what you see in the image. Then, explain your reasoning process before giving the final answer."},
            ],
        }
    ]

Performance Optimization Techniques

Memory Optimization Strategies

- Use Thinking Version: Saves ~10GB memory if speech output isn’t needed
- Enable FlashAttention: Significantly reduces memory usage and improves inference speed
- Batch Processing Optimization: Balance memory and throughput with an appropriate max_num_seqs setting

Speed Optimization Solutions

    import torch
    from transformers import Qwen3OmniMoeForConditionalGeneration

    # Use appropriate precision: bfloat16 balances precision and speed
    model = Qwen3OmniMoeForConditionalGeneration.from_pretrained(
        "Qwen/Qwen3-Omni-30B-A3B-Instruct",
        dtype=torch.bfloat16,  # same keyword as dtype="auto" in the first example
        device_map="auto",
    )

    # Disable speech output for faster text responses
    model.disable_talker()
    text_ids, _ = model.generate(..., return_audio=False)

Frequently Asked Questions (FAQ)

Installation and Configuration

Q: “Undefined symbol” error during installation?

A: This usually indicates a CUDA version mismatch. Try compiling vLLM from source:

    cd vllm
    pip install -e . -v  # Don't use the precompiled version

Q: GPU memory insufficient?

A: Try these solutions:

- Use the Qwen3-Omni-30B-A3B-Thinking version
- Enable CPU offloading: device_map="auto" handles this automatically
- Reduce video length or resolution
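For the last option, ffmpeg (installed during setup) can trim and downscale a clip before it reaches the model. The sketch below only builds the command; `build_ffmpeg_downscale_cmd` is a hypothetical helper, and the 30-second and 480-pixel values are arbitrary examples rather than recommended settings.

```python
from typing import List, Optional


def build_ffmpeg_downscale_cmd(src: str, dst: str,
                               max_seconds: Optional[float] = None,
                               height: Optional[int] = None) -> List[str]:
    """Build an ffmpeg command that optionally trims and downscales a video."""
    cmd = ["ffmpeg", "-y", "-i", src]  # -y overwrites dst if it already exists
    if max_seconds is not None:
        cmd += ["-t", str(max_seconds)]  # keep only the first max_seconds seconds
    if height is not None:
        # -2 lets ffmpeg pick an even width that preserves the aspect ratio
        cmd += ["-vf", f"scale=-2:{height}"]
    cmd.append(dst)
    return cmd


cmd = build_ffmpeg_downscale_cmd("long_clip.mp4", "short_clip.mp4", max_seconds=30, height=480)
print(" ".join(cmd))
# To execute it: import subprocess; subprocess.run(cmd, check=True)
```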

Functionality Usage

Q: How to change voice type for speech output?

A: Pass the speaker parameter to the generate function:

    # Supported voices: Ethan (default), Chelsie, Aiden
    text_ids, audio = model.generate(..., speaker="Chelsie")

Q: How to control audio usage when processing videos?

A: Set the use_audio_in_video parameter to the same value at every step:

    # Preprocessing
    audios, images, videos = process_mm_info(messages, use_audio_in_video=True)

    # Processing
    inputs = processor(..., use_audio_in_video=True)

    # Generation
    model.generate(..., use_audio_in_video=True)
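Because the flag appears in three places, a thin wrapper that accepts it once can prevent mismatches. The sketch below assumes the call signatures shown in this article; `generate_with_audio_flag` is a hypothetical helper, and the model, processor, and `process_mm_info` are passed in so the function itself imports nothing.

```python
from typing import Any, Callable, Dict, List


def generate_with_audio_flag(model: Any, processor: Any, process_mm_info: Callable[..., Any],
                             conversation: List[Dict[str, Any]],
                             use_audio_in_video: bool = True,
                             **generate_kwargs: Any) -> Any:
    """Run preprocessing, processing, and generation with one shared flag."""
    text = processor.apply_chat_template(conversation, add_generation_prompt=True, tokenize=False)
    # Step 1: preprocessing
    audios, images, videos = process_mm_info(conversation, use_audio_in_video=use_audio_in_video)
    # Step 2: processing
    inputs = processor(
        text=text, audio=audios, images=images, videos=videos,
        return_tensors="pt", padding=True, use_audio_in_video=use_audio_in_video,
    )
    inputs = inputs.to(model.device).to(model.dtype)
    # Step 3: generation; extra options such as speaker pass through unchanged
    return model.generate(**inputs, use_audio_in_video=use_audio_in_video, **generate_kwargs)
```

With the objects from the first example, a call would look like `generate_with_audio_flag(model, processor, process_mm_info, conversation, use_audio_in_video=False, speaker="Ethan")`.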

Performance and Accuracy

Q: Which tasks does Qwen3-Omni perform best on?

A: It performs particularly well at:

- Multilingual speech recognition and translation
- Complex visual scene understanding
- Audio-visual fusion analysis
- Mathematical and logical reasoning

Q: Compared to GPT-4o, what are Qwen3-Omni’s advantages?

A: Main advantages include:

- Fully open-source, deployable locally
- Leads in multiple multimodal benchmarks
- Specially optimized for Chinese and multilingual support
- Supports more flexible customization and fine-tuning

Conclusion and Outlook: Qwen3-Omni’s Technical Significance

Qwen3-Omni’s release marks a significant milestone in multimodal AI technology. It not only achieves industry-leading technical metrics but, more importantly, demonstrates genuinely fused multimodal understanding capabilities.

Technical Impact Analysis

- Lowers Multimodal AI Barrier: Open-source strategy enables more developers and researchers to access cutting-edge technology
- Drives Application Innovation: Powerful multimodal capabilities enable new possibilities in education, healthcare, and entertainment
- Promotes Technology Democratization: Local deployment capability reduces dependence on large tech companies

Future Development Directions

Based on Qwen3-Omni’s current capabilities, we foresee these trends:

- More Efficient Model Architectures: Continued optimization of computational efficiency, reducing deployment costs
- Broader Language Support: Expansion to low-resource languages
- Deeper Specialization: Vertical optimization for specific domains
- Stronger Reasoning Capabilities: Combining symbolic reasoning with neural network advantages

Advice for Developers

For teams wanting to build on Qwen3-Omni:

- Start with Specific Scenarios: Choose clear business cases, avoid over-engineering
- Prioritize Data Quality: Multimodal models demand higher data quality
- Leverage Open Source Ecosystem: Actively participate in the community, share experiences and improvements
- Address Ethics and Safety: Multimodal capabilities bring new ethical challenges that need proactive consideration

Qwen3-Omni isn’t just a technical product; it’s a significant step toward more intelligent, natural human-computer interaction. As the technology matures and applications deepen, we have reason to believe that such all-round AI models will become standard tools across industries in the near future.

This article is based on Qwen3-Omni official documentation and technical reports. All code examples have been practically tested. Given rapid technological advancement, we recommend following the official GitHub repository for the latest information.