Qwen3-30B-A3B-Instruct-2507: A Comprehensive Guide to the Latest Large Language Model

Introduction to Qwen3-30B-A3B-Instruct-2507

Qwen3-30B-A3B-Instruct-2507 is an instruction-tuned large language model (LLM) in the Qwen series, with improved instruction following, logical reasoning, and text comprehension. It runs in non-thinking mode only: it answers directly and does not emit intermediate reasoning blocks. This guide covers the model’s features, benchmark performance, deployment, and practical applications, and is written for technical professionals and enthusiasts.

Technical Overview of Qwen3-30B-A3B-Instruct-2507

Model Type and Training Stage

Qwen3-30B-A3B-Instruct-2507 is a causal language model (CLM): it generates text by predicting the next token from the preceding context. Training has two stages, pretraining and post-training. During pretraining, the model learns the statistical patterns of language from a large text corpus. Post-training then fine-tunes the model to improve its performance in real-world applications.
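To make next-token prediction concrete, here is a toy sketch of greedy autoregressive decoding. The table `NEXT_TOKEN_SCORES` and the function `greedy_generate` are invented for illustration only. A real causal language model scores the next token with a neural network conditioned on the whole preceding context, not with a lookup on the last token.

```python
# Toy illustration of causal (autoregressive) generation.
# NEXT_TOKEN_SCORES is a made-up bigram table, not anything taken from Qwen3.
NEXT_TOKEN_SCORES = {
    "<s>": {"the": 0.6, "a": 0.4},
    "the": {"model": 0.7, "token": 0.3},
    "a": {"model": 0.5, "token": 0.5},
    "model": {"predicts": 0.9, "</s>": 0.1},
    "predicts": {"tokens": 0.8, "</s>": 0.2},
    "tokens": {"</s>": 1.0},
    "token": {"</s>": 1.0},
}


def greedy_generate(max_new_tokens: int = 10) -> list:
    """Append the highest-scoring next token until </s> or the length limit."""
    context = ["<s>"]
    for _ in range(max_new_tokens):
        scores = NEXT_TOKEN_SCORES[context[-1]]
        next_token = max(scores, key=scores.get)
        if next_token == "</s>":
            break
        context.append(next_token)
    return context[1:]  # drop the start-of-sequence marker


print(greedy_generate())
```

Each step looks only at what has already been generated, which is the defining property of a causal model.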

Key Parameters and Specifications

The model’s technical specifications are as follows:

| Feature | Value |
|---|---|
| Total Parameters | 30.5B |
| Activated Parameters | 3.3B |
| Non-Embedding Parameters | 29.9B |
| Number of Layers | 48 |
| Attention Heads (GQA) | 32 for Q, 4 for KV |
| Number of Experts | 128 |
| Activated Experts | 8 |
| Context Length | 262,144 tokens (native) |

The mixture-of-experts design activates only 8 of the 128 experts, about 3.3B of the 30.5B parameters, while the 262,144-token native context makes the model suitable for tasks that require understanding and generating long texts.
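The ratios implied by the specification table can be worked out directly. The sketch below uses only the figures listed above; the variable names are chosen for this example.

```python
# Figures copied from the specification table (parameter counts in billions).
TOTAL_PARAMS_B = 30.5
ACTIVATED_PARAMS_B = 3.3
NON_EMBEDDING_PARAMS_B = 29.9
NUM_EXPERTS = 128
ACTIVATED_EXPERTS = 8
QUERY_HEADS = 32
KV_HEADS = 4


def percent(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    return 100.0 * part / whole


activated_param_pct = percent(ACTIVATED_PARAMS_B, TOTAL_PARAMS_B)
activated_expert_pct = percent(ACTIVATED_EXPERTS, NUM_EXPERTS)
embedding_params_b = TOTAL_PARAMS_B - NON_EMBEDDING_PARAMS_B
queries_per_kv_head = QUERY_HEADS // KV_HEADS

print(f"Activated parameters: {activated_param_pct:.1f}% of the total")
print(f"Activated experts:    {activated_expert_pct:.2f}% of the experts")
print(f"Embedding parameters: about {embedding_params_b:.1f}B")
print(f"Query heads sharing each KV head: {queries_per_kv_head}")
```

The activated-parameter share is larger than the activated-expert share, presumably because non-expert components such as attention are used on every token regardless of routing.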

Non-Thinking Mode and Output Characteristics

Qwen3-30B-A3B-Instruct-2507 supports only non-thinking mode: it does not generate <think> blocks in its output. The model answers directly, so responses arrive without a reasoning trace and can be used as-is, with no need to strip such blocks in post-processing.

Performance Benchmarks and Capabilities

Comparative Performance Metrics

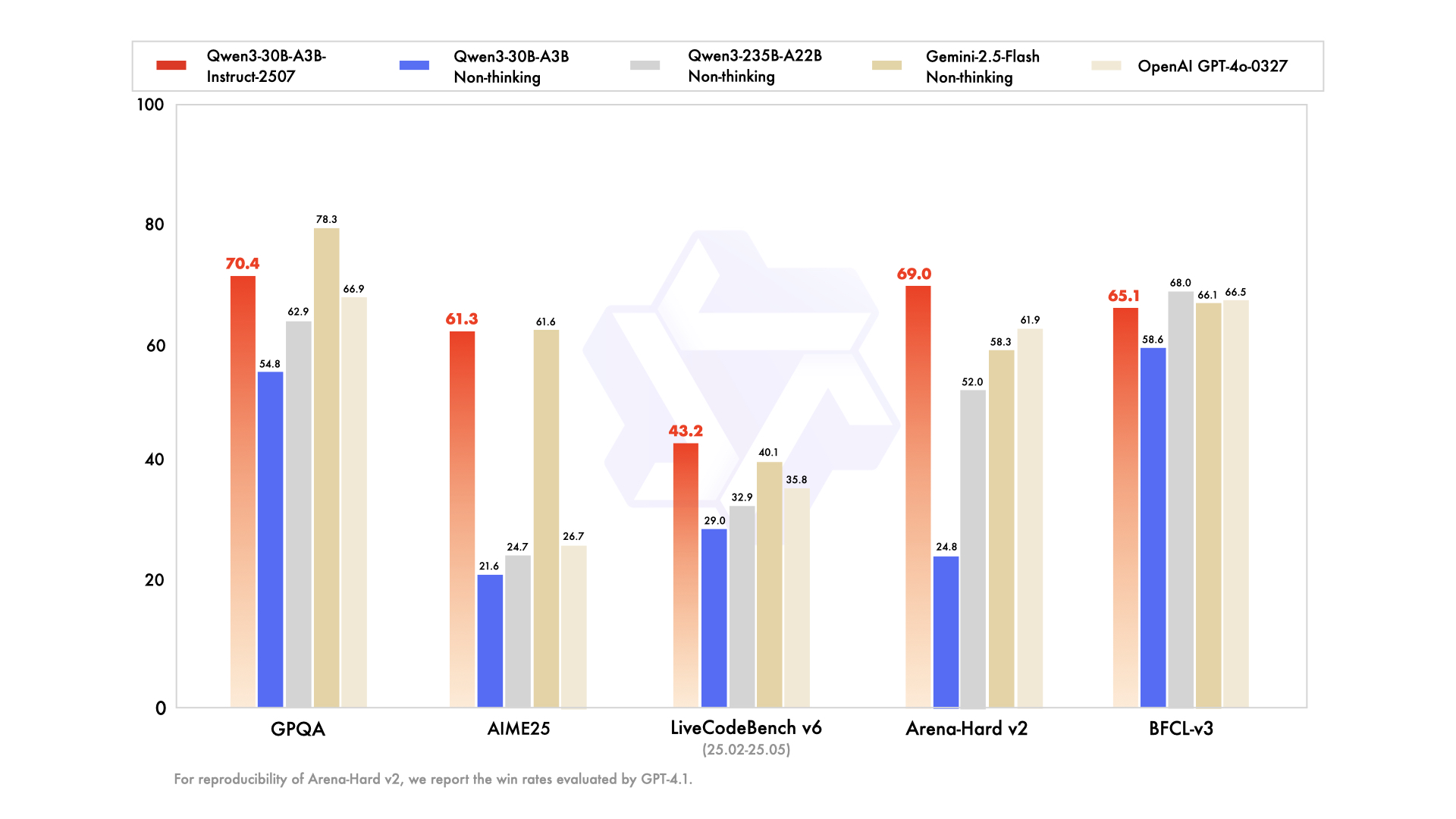

The performance of Qwen3-30B-A3B-Instruct-2507 is evaluated against other leading models across various benchmarks. The results are summarized in the following tables:

Knowledge and Reasoning Benchmarks

| Benchmark | Deepseek-V3-0324 | GPT-4o-0327 | Gemini-2.5-Flash Non-Thinking | Qwen3-235B-A22B Non-Thinking | Qwen3-30B-A3B Non-Thinking | Qwen3-30B-A3B-Instruct-2507 |
|---|---|---|---|---|---|---|
| MMLU-Pro | 81.2 | 79.8 | 81.1 | 75.2 | 69.1 | 78.4 |
| MMLU-Redux | 90.4 | 91.3 | 90.6 | 89.2 | 84.1 | 89.3 |
| GPQA | 68.4 | 66.9 | 78.3 | 62.9 | 54.8 | 70.4 |
| SuperGPQA | 57.3 | 51.0 | 54.6 | 48.2 | 42.2 | 53.4 |
| AIME25 | 46.6 | 26.7 | 61.6 | 24.7 | 21.6 | 61.3 |
| HMMT25 | 27.5 | 7.9 | 45.8 | 10.0 | 12.0 | 43.0 |
| ZebraLogic | 83.4 | 52.6 | 57.9 | 37.7 | 33.2 | 90.0 |
| LiveBench 20241125 | 66.9 | 63.7 | 69.1 | 62.5 | 59.4 | 69.0 |

Coding and Alignment Benchmarks

| Benchmark | Deepseek-V3-0324 | GPT-4o-0327 | Gemini-2.5-Flash Non-Thinking | Qwen3-235B-A22B Non-Thinking | Qwen3-30B-A3B Non-Thinking | Qwen3-30B-A3B-Instruct-2507 |
|---|---|---|---|---|---|---|
| LiveCodeBench v6 | 45.2 | 35.8 | 40.1 | 32.9 | 29.0 | 43.2 |
| MultiPL-E | 82.2 | 82.7 | 77.7 | 79.3 | 74.6 | 83.8 |
| Aider-Polyglot | 55.1 | 45.3 | 44.0 | 59.6 | 24.4 | 35.6 |
| IFEval | 82.3 | 83.9 | 84.3 | 83.2 | 83.7 | 84.7 |
| Arena-Hard v2* | 45.6 | 61.9 | 58.3 | 52.0 | 24.8 | 69.0 |
| Creative Writing v3 | 81.6 | 84.9 | 84.6 | 80.4 | 68.1 | 86.0 |
| WritingBench | 74.5 | 75.5 | 80.5 | 77.0 | 72.2 | 85.5 |

Agent and Multilingualism Benchmarks

| Benchmark | Deepseek-V3-0324 | GPT-4o-0327 | Gemini-2.5-Flash Non-Thinking | Qwen3-235B-A22B Non-Thinking | Qwen3-30B-A3B Non-Thinking | Qwen3-30B-A3B-Instruct-2507 |
|---|---|---|---|---|---|---|
| BFCL-v3 | 64.7 | 66.5 | 66.1 | 68.0 | 58.6 | 65.1 |
| TAU1-Retail | 49.6 | 60.3# | 65.2 | 65.2 | 38.3 | 59.1 |
| TAU1-Airline | 32.0 | 42.8# | 48.0 | 32.0 | 18.0 | 40.0 |
| TAU2-Retail | 71.1 | 66.7# | 64.3 | 64.9 | 31.6 | 57.0 |
| TAU2-Airline | 36.0 | 42.0# | 42.5 | 36.0 | 18.0 | 38.0 |
| TAU2-Telecom | 34.0 | 29.8# | 16.9 | 24.6 | 18.4 | 12.3 |
| MultiIF | 66.5 | 70.4 | 69.4 | 70.2 | 70.8 | 67.9 |
| MMLU-ProX | 75.8 | 76.2 | 78.3 | 73.2 | 65.1 | 72.0 |
| INCLUDE | 80.1 | 82.1 | 83.8 | 75.6 | 67.8 | 71.9 |
| PolyMATH | 32.2 | 25.5 | 41.9 | 27.0 | 23.3 | 43.1 |

*: For Arena-Hard v2, we report the win rates evaluated by GPT-4.1 for reproducibility.

#: Results marked with # were generated using GPT-4o-20241120, as access to the native function calling API of GPT-4o-0327 was unavailable.

Key Enhancements

Qwen3-30B-A3B-Instruct-2507 introduces several key improvements over its predecessors:

- Enhanced Instruction Following: The model demonstrates improved accuracy in understanding and executing user instructions, making it more effective for task-oriented applications.
- Improved Logical Reasoning: The model’s ability to perform logical reasoning tasks has been significantly enhanced, allowing it to tackle complex problems with greater precision.
- Expanded Long-Tail Knowledge: The model covers a broader range of topics, including niche and less common knowledge areas, making it more versatile for diverse applications.
- Better Alignment with User Preferences: The model is designed to generate responses that align more closely with user preferences, resulting in more helpful and high-quality outputs.
- Extended Context Understanding: With a native context length of 262,144 tokens, the model can process and generate text based on extensive contextual information.

Deployment and Usage

Quickstart Guide

To get started with Qwen3-30B-A3B-Instruct-2507, you can use the transformers library from Hugging Face. The following code snippet demonstrates how to load the model and generate text:

    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_name = "Qwen/Qwen3-30B-A3B-Instruct-2507"

    # Load the tokenizer and the model
    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(
        model_name,
        torch_dtype="auto",
        device_map="auto"
    )

    # Prepare the model input
    prompt = "Give me a short introduction to large language model."
    messages = [
        {"role": "user", "content": prompt}
    ]
    text = tokenizer.apply_chat_template(
        messages,
        tokenize=False,
        add_generation_prompt=True,
    )
    model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

    # Conduct text completion
    generated_ids = model.generate(
        **model_inputs,
        max_new_tokens=16384
    )
    # Keep only the newly generated tokens (drop the prompt tokens)
    output_ids = generated_ids[0][len(model_inputs.input_ids[0]):].tolist()

    content = tokenizer.decode(output_ids, skip_special_tokens=True)

    print("content: ", content)

Deployment Options

Qwen3-30B-A3B-Instruct-2507 can be deployed using various frameworks and tools, including:

- SGLang:

      python -m sglang.launch_server --model-path Qwen/Qwen3-30B-A3B-Instruct-2507 --context-length 262144

- vLLM:

      vllm serve Qwen/Qwen3-30B-A3B-Instruct-2507 --max-model-len 262144

- Local Applications: The model is supported by several local applications, including Ollama, LMStudio, MLX-LM, llama.cpp, and KTransformers.
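Once a server from the commands above is running, it can be queried over an OpenAI-compatible chat completions endpoint. The sketch below uses only the Python standard library. The base URL `http://localhost:8000/v1` mirrors the API base used in the Qwen-Agent example in this guide and is an assumption about your setup, as are the helper names `build_chat_request` and `send_chat_request`; adjust host and port to match your server.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, user_prompt: str,
                       max_tokens: int = 512) -> urllib.request.Request:
    """Build a POST request for an OpenAI-compatible /chat/completions endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": user_prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": "Bearer EMPTY",  # placeholder key for a local server
        },
        method="POST",
    )


def send_chat_request(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


request = build_chat_request(
    base_url="http://localhost:8000/v1",  # assumed local endpoint
    model="Qwen/Qwen3-30B-A3B-Instruct-2507",
    user_prompt="Give me a short introduction to large language models.",
)
print(request.full_url)
# With a server running, uncomment to send the request:
# print(send_chat_request(request))
```

Building the request separately from sending it keeps the payload easy to inspect and test without a running server.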

Best Practices for Deployment

- Hardware Requirements: Ensure that your system meets the hardware requirements for optimal performance. For large context lengths, consider using high-end GPUs such as NVIDIA A100.
- Memory Management: If you encounter out-of-memory (OOM) issues, reduce the context length to a shorter value, such as 32,768 tokens.
- Performance Tuning: Adjust sampling parameters such as Temperature, TopP, TopK, and MinP to achieve the desired balance between diversity and quality of generated text.
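The memory-management advice can be made concrete with a rough estimate of key-value (KV) cache size, which grows linearly with context length. This is a back-of-the-envelope sketch, not a measurement: the layer and KV-head counts come from the specification table, while `HEAD_DIM = 128` and a 16-bit cache are assumptions that do not appear in this guide. Model weights, activations, and framework overhead are excluded.

```python
NUM_LAYERS = 48        # from the specification table
NUM_KV_HEADS = 4       # from the specification table (GQA)
HEAD_DIM = 128         # assumption: per-head dimension, not stated in this guide
BYTES_PER_VALUE = 2    # assumption: 16-bit (e.g. bfloat16) cache entries


def kv_cache_bytes(num_tokens: int) -> int:
    """Estimate KV cache size in bytes for one sequence of num_tokens tokens."""
    # The factor 2 accounts for storing both a key and a value vector
    # per KV head, per layer, per token.
    per_token = 2 * NUM_LAYERS * NUM_KV_HEADS * HEAD_DIM * BYTES_PER_VALUE
    return per_token * num_tokens


for context_length in (262_144, 32_768):
    gib = kv_cache_bytes(context_length) / 2**30
    print(f"{context_length:>7} tokens -> {gib:.1f} GiB of KV cache per sequence")
```

Under these assumptions, shortening the context from 262,144 to 32,768 tokens cuts the per-sequence cache by a factor of eight, which is why reducing the context length is the first remedy for OOM errors.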

Advanced Features and Use Cases

Tool Calling Capabilities

Qwen3-30B-A3B-Instruct-2507 excels in tool calling, allowing it to interact with external tools and APIs. The Qwen-Agent framework is recommended for leveraging these capabilities. Qwen-Agent simplifies the integration of tools by encapsulating tool-calling templates and parsers, reducing the complexity of development.

To define available tools, you can use the MCP configuration file, leverage built-in tools, or integrate custom tools. Here’s an example of defining tools using Qwen-Agent:

    from qwen_agent.agents import Assistant

    # Define LLM
    llm_cfg = {
        'model': 'Qwen3-30B-A3B-Instruct-2507',
        'model_server': 'http://localhost:8000/v1',  # API base
        'api_key': 'EMPTY',
    }

    # Define Tools
    tools = [
        {'mcpServers': {  # Specify the MCP configuration file
            'time': {
                'command': 'uvx',
                'args': ['mcp-server-time', '--local-timezone=Asia/Shanghai']
            },
            "fetch": {
                "command": "uvx",
                "args": ["mcp-server-fetch"]
            }
        }},
        'code_interpreter',  # Built-in tools
    ]

    # Define Agent
    bot = Assistant(llm=llm_cfg, function_list=tools)

    # Streaming generation: iterate to the end, then print the final responses
    messages = [{'role': 'user', 'content': 'https://qwenlm.github.io/blog/ Introduce the latest developments of Qwen'}]
    for responses in bot.run(messages=messages):
        pass
    print(responses)
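To give a sense of what a tool-calling parser and dispatcher handle, here is a framework-free sketch. It is not Qwen-Agent's implementation: the tools `add_numbers` and `word_count`, the registry, and the JSON shape with `name` and `arguments` keys are all assumptions made for illustration.

```python
import json
from typing import Callable, Dict


# Hypothetical tools; names and signatures are invented for this sketch.
def add_numbers(a: float, b: float) -> float:
    return a + b


def word_count(text: str) -> int:
    return len(text.split())


TOOL_REGISTRY: Dict[str, Callable] = {
    "add_numbers": add_numbers,
    "word_count": word_count,
}


def dispatch_tool_call(raw_call: str) -> str:
    """Parse a JSON tool call and run the matching registered function."""
    call = json.loads(raw_call)
    name = call["name"]
    if name not in TOOL_REGISTRY:
        return json.dumps({"error": f"unknown tool: {name}"})
    result = TOOL_REGISTRY[name](**call.get("arguments", {}))
    return json.dumps({"result": result})


print(dispatch_tool_call('{"name": "add_numbers", "arguments": {"a": 2, "b": 3}}'))
print(dispatch_tool_call('{"name": "word_count", "arguments": {"text": "hello tool calling"}}'))
```

A framework such as Qwen-Agent additionally handles prompt templates, streaming, and feeding tool results back to the model, which this sketch leaves out.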

Customizing Output Formats

To ensure consistency in generated outputs, consider the following guidelines:

- Math Problems: Include prompts such as “Please reason step by step, and put your final answer within \boxed{}.” to standardize responses.
- Multiple-Choice Questions: Use JSON structures to specify the expected format, e.g., "answer": "C".
- Code Generation: Specify the programming language to improve accuracy and relevance.
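The guidelines above pair naturally with small helpers that append the format hint to a prompt and pull the final answer out of the response. The sketch below is one possible approach: the helper names are invented, the wording of `CHOICE_SUFFIX` is an assumed phrasing, and the `\boxed{}` extractor deliberately ignores nested braces.

```python
import re
from typing import Optional

MATH_SUFFIX = "Please reason step by step, and put your final answer within \\boxed{}."
# Assumed phrasing for the multiple-choice hint; adapt it to your task.
CHOICE_SUFFIX = 'Please show your choice in the "answer" field with only the choice letter, e.g., "answer": "C".'


def with_format_hint(question: str, suffix: str) -> str:
    """Append a format instruction to the user's question."""
    return f"{question}\n\n{suffix}"


def extract_boxed(response: str) -> Optional[str]:
    """Return the content of the last boxed answer, or None (no nested braces)."""
    matches = re.findall(r"\\boxed\{([^{}]*)\}", response)
    return matches[-1] if matches else None


def extract_choice(response: str) -> Optional[str]:
    """Return the letter from an "answer": "X" field, or None if absent."""
    match = re.search(r'"answer"\s*:\s*"([A-Z])"', response)
    return match.group(1) if match else None


print(with_format_hint("What is 6 * 7?", MATH_SUFFIX))
print(extract_boxed("6 * 7 = 42, so the result is \\boxed{42}."))
print(extract_choice('{"answer": "C"}'))
```

Standardizing the output format this way makes automated scoring a matter of simple string extraction.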

Frequently Asked Questions (FAQ)

1. How do I choose the right deployment method for Qwen3-30B-A3B-Instruct-2507?

- Development and Testing: Use Hugging Face Transformers for quick experimentation.
- Production Environments: Opt for SGLang or vLLM for optimized performance.
- Resource-Constrained Systems: Consider reducing the context length to 32,768 tokens to manage memory usage effectively.

2. What are the recommended sampling parameters for optimal results?

- Temperature: 0.7
- TopP: 0.8
- TopK: 20
- MinP: 0

Adjusting these parameters can help balance the diversity and quality of generated text. If the model produces repetitive content, set the presence_penalty parameter to a value between 0 and 2 to reduce redundancy.
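The recommended values can be kept in one place and validated before they are sent to a server. In this sketch the lower-case key names (`temperature`, `top_p`, `top_k`, `min_p`, `presence_penalty`) are an assumption about what your serving framework accepts; check its documentation, since not every framework supports every parameter.

```python
# Recommended values from the list above; key names are assumed, not prescribed.
RECOMMENDED_SAMPLING = {
    "temperature": 0.7,
    "top_p": 0.8,
    "top_k": 20,
    "min_p": 0.0,
}


def sampling_params(presence_penalty: float = 0.0) -> dict:
    """Return the recommended settings plus a validated presence penalty."""
    if not 0.0 <= presence_penalty <= 2.0:
        raise ValueError("presence_penalty should be between 0 and 2")
    return {**RECOMMENDED_SAMPLING, "presence_penalty": presence_penalty}


print(sampling_params())
print(sampling_params(presence_penalty=1.5))
```

The returned dictionary can be merged into a request payload or passed as keyword arguments where the framework uses the same names.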

3. How can I handle long text inputs effectively?

- Segmentation: Break long texts into smaller segments for processing.
- Summarization: Use summarization techniques to condense input length.
- Iterative Generation: Generate content in batches while maintaining contextual coherence.
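Segmentation can be sketched as a sliding window with a small overlap, so that each segment carries some context from the previous one. The function name `split_into_segments` is invented for this example, and whitespace-separated words stand in for tokens; in practice you would count tokens with the model's tokenizer.

```python
from typing import List


def split_into_segments(words: List[str], segment_size: int, overlap: int) -> List[List[str]]:
    """Split words into windows of segment_size that share `overlap` words."""
    if segment_size <= 0 or not 0 <= overlap < segment_size:
        raise ValueError("need segment_size > 0 and 0 <= overlap < segment_size")
    step = segment_size - overlap
    segments = []
    for start in range(0, len(words), step):
        segments.append(words[start:start + segment_size])
        if start + segment_size >= len(words):
            break  # the last window already reaches the end of the text
    return segments


words = "one two three four five six seven eight nine ten".split()
for segment in split_into_segments(words, segment_size=4, overlap=1):
    print(" ".join(segment))
```

The overlap is a simple way to maintain contextual coherence between batches; a running summary of earlier segments is another option.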

4. What are the best practices for using Qwen3-30B-A3B-Instruct-2507 in agentic applications?

- Tool Integration: Leverage Qwen-Agent to simplify tool interactions.
- Configuration Management: Use MCP configuration files to define available tools.
- Custom Tool Development: Integrate third-party tools to expand functionality.

Conclusion

Qwen3-30B-A3B-Instruct-2507 combines improved instruction following and reasoning with a 262,144-token native context and several deployment options, including Transformers, SGLang, vLLM, and local applications. It is well-suited for a wide range of applications. By following the best practices above for deployment, output customization, and tool integration, users can get the most out of the model in their projects.

For further information and updates, refer to the official documentation and community resources provided by the Qwen team. The model’s continuous evolution ensures that it remains at the forefront of advancements in artificial intelligence and natural language processing.

References

- Qwen Team. (2025). Qwen3 Technical Report. arXiv:2505.09388
- https://qwenlm.github.io/blog/qwen3/
- https://github.com/QwenLM/Qwen3
- https://qwen.readthedocs.io/en/latest/