Bridging the Gap: How PHP Developers Can Embrace Machine Learning Inference on the Web

The Unavoidable Shift in Web Development

The software industry is undergoing its most rapid transformation in over a quarter century. What was once a futuristic concept—machine learning integrated into everyday applications—is now becoming a fundamental expectation. Users increasingly anticipate intelligent features as standard components of their digital experiences, whether they’re browsing websites, using mobile apps, or interacting with online services.

For the millions of PHP developers who form the backbone of the web ecosystem, this evolution presents both an opportunity and a significant challenge. PHP is commonly cited as powering around 78% of websites whose server-side language is known, making it the dominant server-side language on the internet. Yet, as machine learning transitions from “nice-to-have” to essential infrastructure, PHP developers face a critical question: How can they incorporate intelligent capabilities into their applications without abandoning the language they’ve mastered?

This isn’t merely a technical discussion about programming languages—it’s about livelihoods. Behind every line of PHP code are professionals supporting families, paying mortgages, and building careers on their expertise. The stakes couldn’t be higher as the industry shifts toward AI-first development practices.

The Current Reality: A Growing Disconnect

Let’s examine the landscape objectively:

- PHP’s Dominance: Despite newer frameworks and languages emerging, PHP remains the workhorse of the web, powering everything from small personal blogs to enterprise-level applications like WordPress, Drupal, and Magento.
- ML’s Essential Role: Modern applications increasingly require intelligent features (personalized recommendations, content analysis, predictive capabilities) that were previously handled through external services or not at all.
- The Critical Gap: PHP lacks native, first-class support for machine learning inference, creating a growing disconnect between what developers need to deliver and what their primary tool can accomplish.

The current solutions available to PHP developers are far from ideal. They often involve:

- Adding complex microservice architectures
- Making inefficient API calls to external services
- Using cumbersome methods like Foreign Function Interface (FFI)
- Or worse, abandoning PHP entirely for another language ecosystem

None of these options are sustainable for the vast PHP community that has built careers and businesses around this accessible, versatile language.

Introducing ORT: A Purpose-Built Solution for Web Inference

The ORT project (ONNX Runtime for PHP) is a response to this challenge: not a replacement for existing AI tools, but a complementary solution designed specifically for web environments. At its core, ORT lets PHP developers perform machine learning inference directly within their PHP applications, eliminating the need for complex workarounds.

A Complementary Ecosystem, Not Competition

Rather than positioning itself as a competitor to established AI frameworks, ORT embraces a complementary role in the machine learning ecosystem:

- Python: Remains the premier language for model training, research, and development
- PHP: Excels at web inference, production serving, and real-time processing
- ONNX: Serves as the essential bridge between training and inference

This approach recognizes that different languages have different strengths. Python continues to dominate the training phase where flexibility and research capabilities matter most, while PHP shines in production web environments where reliability, speed, and integration with existing web infrastructure are paramount.

The critical insight here is that machine learning inference for the web doesn’t require the same capabilities as model training—it needs something different: efficient, reliable, and tightly integrated execution within the web server environment. This is precisely where ORT delivers value.

PHP developers can now integrate machine learning capabilities directly into their web applications without switching ecosystems

Technical Architecture: Built for Production Realities

ORT isn’t an academic experiment or a proof-of-concept—it’s production-ready infrastructure built to meet the demanding requirements of real-world web applications. Let’s examine its architecture in detail.

The PHP API Layer: Clean and Decoupled

ORT provides a carefully designed API that integrates seamlessly with PHP development patterns:

- ORT\Tensor: The fundamental building block for data representation
- ORT\Math: A functional namespace for mathematical operations
- ORT\Model: Handles model loading and management
- ORT\Runtime: Manages the inference execution environment

This clean separation of concerns ensures that developers can work with machine learning capabilities using familiar PHP patterns without needing to become AI experts.

The Math Library: Frontend and Backend Harmony

The math library employs a sophisticated two-layer design:

- Frontend: Handles dispatching and scheduling, and provides scalar fallbacks (always available)
- Backend: Implements hardware-specific optimizations using technologies like:
  - WASM (WebAssembly)
  - NEON (ARM processors)
  - AVX2, SSE4.1, SSE2 (x86 processors)

This architecture ensures that ORT works reliably across diverse hardware environments while maximizing performance where advanced instruction sets are available.
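The frontend/backend split can be pictured with a small plain-PHP sketch. It does not use ORT, and the `selectBackend` helper, the backend names as strings, and the preference order are all assumptions made for illustration. The point it shows is that the scalar path is always there, so dispatch never fails.

```php
<?php
// Hypothetical illustration of "pick the best available backend, else scalar".
// Plain PHP only; this is not ORT's internal dispatcher.

/** Element-wise addition as a portable scalar loop (the always-available path). */
function scalarAdd(array $a, array $b): array
{
    return array_map(fn ($x, $y) => $x + $y, $a, $b);
}

/**
 * Returns the first preferred backend that the host reports as supported.
 * Falls back to "scalar" when none of the preferred backends is available.
 */
function selectBackend(
    array $supported,
    array $preference = ['avx2', 'sse4.1', 'sse2', 'neon']
): string {
    foreach ($preference as $name) {
        if (in_array($name, $supported, true)) {
            return $name;
        }
    }
    return 'scalar';
}

$backend = selectBackend(['sse2', 'sse4.1']); // pretend the CPU reports these two
$sum     = scalarAdd([1.0, 2.0], [3.0, 4.0]);
```

A real dispatcher would map each backend name to an optimized kernel; here only the selection logic and the fallback are shown.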

ONNX Integration: The Standardized Bridge

ORT leverages ONNX (Open Neural Network Exchange), an open standard for representing machine learning models:

- ORT\Model: Manages model loading, metadata extraction, and model lifecycle
- ORT\Runtime: Executes the actual inference process

ONNX serves as the critical bridge between training frameworks (like PyTorch or TensorFlow) and the PHP runtime environment, allowing models trained in Python to be executed directly in PHP applications.

ORT\Tensor: The Immutable Foundation

At the heart of ORT is the ORT\Tensor class, which employs an immutable API design—a deliberate choice with significant benefits:

- Zero-copy data sharing: Eliminates unnecessary memory duplication
- Lock-free data access: Enables safe concurrent operations
- Predictable memory usage: Prevents unexpected resource consumption
- Consistent performance characteristics: Delivers reliable response times

This design decision directly addresses common challenges in web server environments where resource efficiency and predictable behavior are essential.

Technical Innovations: Solving Real-World Problems

ORT incorporates several key innovations that address specific challenges in web-based machine learning inference.

Immutable Tensors: Performance Through Design

The immutable tensor design isn’t just theoretical—it delivers tangible benefits:

- Memory efficiency: By preventing in-place modifications, ORT can implement sophisticated memory sharing strategies
- Thread safety: Immutable data structures eliminate race conditions in multi-threaded environments
- Predictable performance: Developers can reason about performance characteristics without worrying about hidden state changes
- Simplified debugging: With no unexpected state mutations, tracing issues becomes significantly easier

This approach aligns perfectly with PHP’s typical request-response cycle while providing capabilities that extend beyond traditional PHP limitations.
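As a rough analogy for what an immutable API means in practice, the sketch below models a tiny immutable vector in plain PHP (8.1 or later, for `readonly`). The `ImmutableVector` class is hypothetical and is not part of ORT; it only illustrates the pattern where every operation returns a new value and the inputs stay untouched.

```php
<?php
// Plain-PHP analogy for an immutable tensor; the class name is hypothetical.
final class ImmutableVector
{
    /** @param list<float> $values */
    public function __construct(private readonly array $values) {}

    /** Returns a NEW vector; neither operand is modified. */
    public function add(ImmutableVector $other): ImmutableVector
    {
        if (count($this->values) !== count($other->values)) {
            throw new InvalidArgumentException('Length mismatch');
        }
        return new ImmutableVector(
            array_map(fn ($x, $y) => $x + $y, $this->values, $other->values)
        );
    }

    public function toArray(): array
    {
        return $this->values;
    }
}

$a = new ImmutableVector([1.0, 2.0]);
$b = $a->add(new ImmutableVector([10.0, 20.0]));
// $a still holds [1.0, 2.0]; $b holds [11.0, 22.0].
```

Because no method can change `$values` after construction, any number of readers can share the same instance without coordination, which is the property the list above relies on.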

Dual Storage Class Tensors: Flexibility for Different Scenarios

ORT introduces two distinct tensor storage classes to address different use cases:

- ORT\Tensor\Persistent: These tensors survive beyond individual request cycles and can be shared across threads (particularly useful with multi-threaded servers like FrankenPHP)
- ORT\Tensor\Transient: Designed for single-request usage, these tensors are automatically cleaned up after the request completes

This dual approach gives developers precise control over resource management—critical for maintaining performance in high-traffic web environments.
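The difference between the two lifetimes can be sketched in plain PHP, with the caveat that this is only an analogy: `PersistentRegistry` and `handleRequest` are hypothetical names, and static state in PHP outlives a request only in long-running worker setups, not under a classic per-request process model.

```php
<?php
// Analogy only: a process-wide registry versus a per-call local value.
// Names are hypothetical; ORT's storage classes are implemented natively.
final class PersistentRegistry
{
    private static array $items = [];

    /** Builds the value once per process, then reuses it on later calls. */
    public static function getOrCreate(string $key, callable $factory): mixed
    {
        if (!array_key_exists($key, self::$items)) {
            self::$items[$key] = $factory();
        }
        return self::$items[$key];
    }
}

function handleRequest(array $requestData): float
{
    // "Persistent": created on first use, shared by later calls in this process.
    $weights = PersistentRegistry::getOrCreate('weights', fn () => [0.5, 0.25, 0.25]);

    // "Transient": exists only for the duration of this call.
    $input = array_map('floatval', $requestData);

    // Weighted sum of the request values.
    return array_sum(array_map(fn ($w, $x) => $w * $x, $weights, $input));
}

$score = handleRequest(['2', '4', '4']); // 0.5*2 + 0.25*4 + 0.25*4
```

The design question in both cases is the same: data that is expensive to build and never changes belongs in the long-lived class, while request-specific inputs belong in the short-lived one.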

Advanced Memory Management: The Foundation of Performance

Under the hood, ORT implements sophisticated memory management techniques:

- Aligned allocators: Ensures data structures are properly aligned in memory for optimal CPU cache utilization
- Optimized memcpy operations: Custom implementations that maximize data transfer efficiency

These low-level optimizations, while invisible to developers, contribute significantly to ORT’s impressive performance characteristics.
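The arithmetic behind an aligned allocation is simple to show. The helper below is a generic sketch (the name `alignUp` is made up here and is not an ORT function): it rounds a byte count up to the next multiple of a power-of-two alignment, such as 32 bytes for 256-bit AVX2 registers or 64 bytes for a typical x86 cache line.

```php
<?php
/**
 * Rounds $size up to the next multiple of $alignment.
 * $alignment must be a power of two (for example 32 or 64).
 */
function alignUp(int $size, int $alignment): int
{
    if ($alignment <= 0 || ($alignment & ($alignment - 1)) !== 0) {
        throw new InvalidArgumentException('Alignment must be a power of two');
    }
    // Adding (alignment - 1) and clearing the low bits rounds up.
    return ($size + $alignment - 1) & ~($alignment - 1);
}

// A 1000-element float32 buffer needs 4000 bytes; padded to 64-byte lines:
$padded = alignUp(1000 * 4, 64); // 4032
```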

Intelligent Thread Pool Management

ORT’s thread pool implementation includes several performance-focused features:

- Dedicated slot scheduling: Ensures efficient task distribution across available cores
- Alignment awareness: Considers memory alignment when scheduling operations
- Thread pinning: Assigns specific tasks to specific CPU cores to maximize cache utilization

This sophisticated approach enables ORT to achieve near-perfect CPU utilization (approaching 100%), making full use of modern multi-core processors.
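One way to picture slot scheduling combined with alignment awareness is a partitioning function that hands each worker a contiguous range whose size is a multiple of the SIMD width. The sketch below is an assumption about the general technique, not ORT's scheduler; the `partition` name and the default of 8 lanes are illustrative.

```php
<?php
/**
 * Splits $n elements into at most $workers contiguous [start, end) ranges.
 * Chunk sizes are rounded up to a multiple of $lanes so that every worker,
 * except possibly the last, processes whole SIMD vectors.
 */
function partition(int $n, int $workers, int $lanes = 8): array
{
    if ($workers < 1 || $lanes < 1) {
        throw new InvalidArgumentException('workers and lanes must be >= 1');
    }
    $chunk = intdiv($n + $workers - 1, $workers);          // ceil(n / workers)
    $chunk = intdiv($chunk + $lanes - 1, $lanes) * $lanes; // round up to lane multiple

    $ranges = [];
    for ($start = 0; $start < $n; $start += $chunk) {
        $ranges[] = [$start, min($start + $chunk, $n)];
    }
    return $ranges;
}

$ranges = partition(1000, 4); // [[0, 256], [256, 512], [512, 768], [768, 1000]]
```

Rounding chunks up can leave fewer ranges than workers for small inputs, which is usually acceptable because tiny workloads rarely benefit from more threads.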

Hardware Acceleration: Leveraging Modern CPUs

ORT automatically detects and utilizes available CPU instruction sets:

- Runtime detection: Identifies which instruction sets (AVX2, SSE4.1, etc.) are supported
- SIMD optimization: Implements Single Instruction Multiple Data operations for parallel processing
- Thread pinning: Ensures stability when using advanced instruction sets

The performance impact is substantial—benchmarks show up to 8x speedup with AVX2 instruction sets for certain operations.

SIMD technology enables processing multiple data points with a single instruction, dramatically improving performance
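The 8x figure is consistent with simple lane arithmetic, assuming the measured operations work on 32-bit floats (the article does not say which element type was benchmarked). A SIMD register holds register width divided by element width values, and that quotient is the theoretical ceiling for per-instruction speedup. The helper name below is made up for this sketch.

```php
<?php
/** Number of elements one SIMD register holds: register width / element width. */
function simdLanes(int $registerBits, int $elementBits): int
{
    return intdiv($registerBits, $elementBits);
}

$avx2Float32 = simdLanes(256, 32); // 8 lanes: at most 8 float32 values per instruction
$sseFloat32  = simdLanes(128, 32); // 4 lanes (SSE and NEON registers are 128-bit)
$avx2Float64 = simdLanes(256, 64); // 4 lanes
```

Real speedups depend on memory bandwidth and the operation mix, so the lane count is an upper bound rather than a prediction.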

Type System: Seamless Compatibility with NumPy

ORT’s type system is carefully designed for compatibility with the Python ecosystem:

- Direct schema extraction: Types are derived directly from NumPy runtime specifications
- No guesswork: Precise type handling prevents unexpected conversion issues
- Automatic type promotion: Handles conversions between different numeric types seamlessly

This attention to detail ensures that models developed in Python can be executed in PHP with minimal adaptation.
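To make automatic type promotion concrete, here is a small plain-PHP sketch covering four dtypes. It mirrors how NumPy promotes array operands for these particular pairs; it is not ORT's implementation, the `promote` function is hypothetical, and it deliberately ignores the many other dtypes a full table has to cover.

```php
<?php
// Illustrative subset of NumPy-style promotion for four dtypes.
// Not ORT's implementation; the function name is hypothetical.
function promote(string $a, string $b): string
{
    $bits = ['int32' => 32, 'int64' => 64, 'float32' => 32, 'float64' => 64];
    if (!isset($bits[$a], $bits[$b])) {
        throw new InvalidArgumentException('Unsupported dtype');
    }
    $isFloat = fn (string $t): bool => str_starts_with($t, 'float');

    if ($isFloat($a) === $isFloat($b)) {
        // Same kind (both int or both float): the wider type wins.
        return $bits[$a] >= $bits[$b] ? $a : $b;
    }
    // Mixed int/float: float32 cannot represent every int32 or int64 value
    // exactly, so these pairs promote to float64.
    return 'float64';
}

$r1 = promote('int32', 'int64');     // int64
$r2 = promote('float32', 'float64'); // float64
$r3 = promote('int32', 'float32');   // float64
```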

Zero-Overhead Optimizations: Performance Without Complexity

ORT implements several “zero-overhead” optimizations:

- Silent dispatch optimization: The backend optimizes execution paths without requiring developer intervention
- Call site scaling: The ORT\Math\scale function provides fine-grained control over scaling operations

These optimizations deliver performance benefits without adding complexity to the developer experience.

Modular Design: Use Only What You Need

ORT’s architecture is intentionally modular:

- Independent math system: Can be used without the ONNX components
- Independent ONNX system: Can be used without the full math library
- Flexible integration: Developers can incorporate only the components relevant to their needs

This modularity ensures that ORT can serve both simple use cases (like basic tensor operations) and complex scenarios (full model inference).

Flexible Tensor Generation

The ORT\Tensor\Generator class provides multiple approaches to tensor creation:

- Lazy loading: Generates data on-demand rather than pre-allocating memory
- Random generation: Creates tensors with various probability distributions
- Custom generation: Supports developer-defined generation patterns

This flexibility accommodates diverse application requirements while optimizing resource usage.
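The idea of lazy, on-demand generation maps naturally onto PHP's built-in generators. The sketch below uses only core PHP and does not touch ORT\Tensor\Generator, whose actual interface is not shown in this article; `lazyValues` is a hypothetical helper that illustrates the concept.

```php
<?php
/**
 * Lazily yields $count values produced by $fn(index).
 * Nothing is computed until the consumer iterates.
 */
function lazyValues(int $count, callable $fn): Generator
{
    for ($i = 0; $i < $count; $i++) {
        yield $fn($i);
    }
}

$calls = 0;
$squares = lazyValues(1_000_000, function (int $i) use (&$calls): float {
    $calls++;                 // count how many values were really computed
    return (float) ($i * $i);
});

// Take only the first three values; the rest are never computed.
$firstThree = [];
foreach ($squares as $value) {
    $firstThree[] = $value;
    if (count($firstThree) === 3) {
        break;
    }
}
```

Memory use stays constant regardless of `$count`, because only one value exists at a time.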

Performance Benchmarks: Data-Driven Results

ORT’s performance isn’t theoretical—it’s been rigorously tested against real-world scenarios. Let’s examine some key benchmarks.

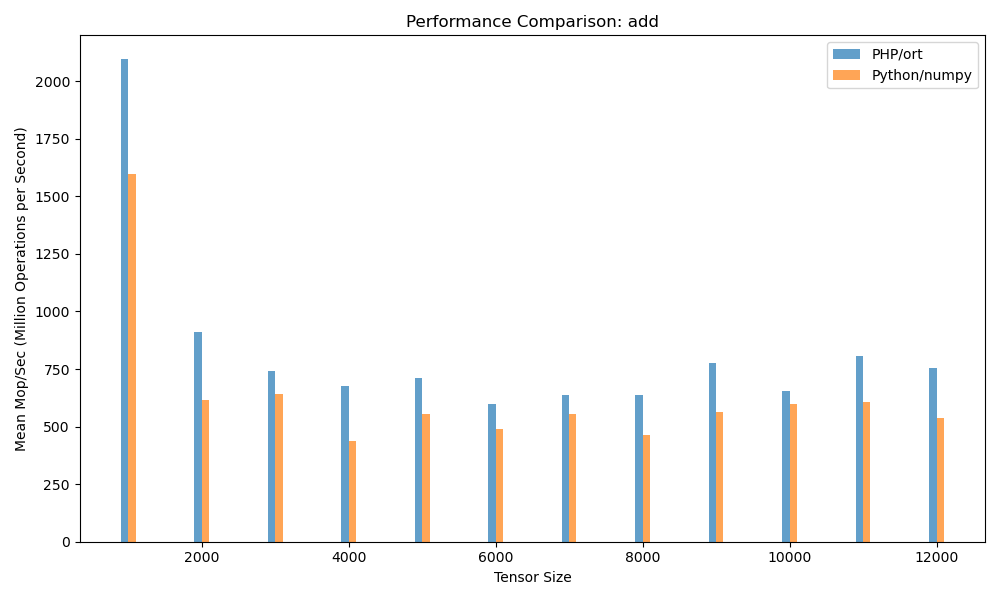

Element-Wise Addition Performance

One fundamental operation in machine learning is element-wise addition of tensors. ORT demonstrates impressive performance in this basic operation:

ORT achieves near-native performance for element-wise addition operations

The benchmark compares ORT’s performance against native C++ implementations using 1000×1000 float32 matrices. The results show that ORT achieves performance very close to what would be possible with direct C++ implementation—demonstrating the effectiveness of its optimization strategies.
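For readers who want a reference point on their own hardware, a scalar baseline in plain PHP is easy to write. The sketch below is not the project's benchmark code; it simply times an element-wise addition loop with `hrtime()`, using a smaller array than the 1000×1000 case so it runs quickly.

```php
<?php
// Scalar PHP baseline for element-wise addition, timed with hrtime().
// The size is an arbitrary choice for this sketch.
$n = 100_000;
$a = array_fill(0, $n, 1.5);
$b = array_fill(0, $n, 2.5);

$start = hrtime(true);               // monotonic clock, in nanoseconds
$c = [];
for ($i = 0; $i < $n; $i++) {
    $c[$i] = $a[$i] + $b[$i];
}
$elapsedMs = (hrtime(true) - $start) / 1e6;

printf("Added %d elements in %.3f ms\n", $n, $elapsedMs);
```

Running the same operation several times and discarding the first run gives steadier numbers than a single measurement.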

SIMD Acceleration

ORT leverages modern CPU capabilities through SIMD (Single Instruction Multiple Data) operations:

- AVX2 acceleration: Delivers up to 8x speedup for compatible operations
- Automatic fallback: Seamlessly reverts to compatible instruction sets when advanced features aren’t available

This hardware-aware approach ensures optimal performance across diverse server environments.

Hybrid Parallelism

ORT achieves near-perfect CPU utilization through its sophisticated parallelism implementation:

- Near-100% CPU utilization: Effectively uses all available cores
- Intelligent load balancing: Distributes work evenly across processing units
- Minimal overhead: The parallelism infrastructure itself consumes minimal resources

These capabilities are particularly valuable in web environments where server resources must be used efficiently to handle multiple concurrent requests.

All benchmarks are fully reproducible—the code is available in the bench directory of the ORT repository. This transparency allows developers to verify performance claims in their specific environments.

Implementation Guide: Getting Started with ORT

Let’s walk through the practical steps to implement ORT in a PHP environment. These instructions have been verified to work in standard production environments.

Installation Process

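```bash
# Install optional dependencies
wget https://.../v1.22.0/onnxruntime-linux-x64-1.22.0.tgz -O onnxruntime-linux-x64-1.22.0.tgz
sudo tar -C /usr/local --strip-components=1 -xvzf onnxruntime-linux-x64-1.22.0.tgz
sudo ldconfig
sudo apt-get install pkg-config

# Build extension
phpize
./configure --enable-ort
make
sudo make install

# Add to php.ini (Debian/Ubuntu layout: /etc/php/<major.minor>/cli/php.ini).
# php-config --version prints the full version (e.g. 8.3.6), so derive major.minor;
# sudo tee is used because a plain >> redirect does not run with root privileges.
PHP_MM=$(php -r 'echo PHP_MAJOR_VERSION . "." . PHP_MINOR_VERSION;')
echo "extension=ort.so" | sudo tee -a "/etc/php/${PHP_MM}/cli/php.ini"
```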

This installation process sets up the necessary dependencies and compiles the ORT extension for PHP. The instructions assume a standard Linux environment; minor adjustments may be needed for different operating systems.
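After installing, it is worth confirming that PHP actually picked up the extension. The check below uses the core `extension_loaded()` function; the extension name `ort` is inferred from the `ort.so` file name above and should be treated as an assumption, and `ortStatus` is a helper invented for this sketch.

```php
<?php
// Reports whether an extension named "ort" is loaded in this PHP process.
// The name is inferred from ort.so; adjust it if the extension registers differently.
function ortStatus(): string
{
    return extension_loaded('ort')
        ? 'ort extension is loaded'
        : 'ort extension is NOT loaded; check php.ini and the extension directory';
}

echo ortStatus(), PHP_EOL;
```

The command-line equivalent is `php -m`, which lists loaded modules. On Debian-style layouts the web SAPI (for example PHP-FPM) typically reads its own php.ini, so the `extension=` line may need to be added there as well.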

Basic Usage Example

Once installed, using ORT in your PHP application is straightforward:

```php
// Create input tensor
$input = ORT\Tensor::fromArray([[1.0, 2.0], [3.0, 4.0]]);

// Load pre-trained model
$model = ORT\Model::fromFile('path/to/model.onnx');

// Execute inference
$output = $model->run(['input' => $input]);

// Retrieve results
$result = $output->get();
```

This simple example demonstrates the complete workflow for performing machine learning inference:

- Prepare input data as tensors
- Load a pre-trained model
- Execute the inference process
- Retrieve and use the results
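The last step, using the results, often involves a little post-processing. As a sketch, suppose a classification model returns raw scores (logits) as a flat PHP array; that output shape is an assumption, since the article does not specify it. Softmax turns the scores into probabilities and argmax picks the predicted class, both in plain PHP.

```php
<?php
/** Numerically stable softmax: subtracts the maximum before exponentiating. */
function softmax(array $logits): array
{
    $max  = max($logits);
    $exps = array_map(fn (float $x) => exp($x - $max), $logits);
    $sum  = array_sum($exps);
    return array_map(fn (float $e) => $e / $sum, $exps);
}

/** Index of the largest value in a list. */
function argmax(array $values): int
{
    return array_search(max($values), $values, true);
}

$logits = [2.0, 1.0, 0.1];   // hypothetical raw model output
$probs  = softmax($logits);  // probabilities that sum to 1
$label  = argmax($probs);    // 0
```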

Practical Implementation Considerations

When implementing ORT in production environments, consider these best practices:

- Model optimization: Convert models to ONNX format with appropriate optimizations for inference
- Resource management: Use persistent tensors for frequently used models to minimize initialization overhead
- Error handling: Implement robust error handling for model loading and inference operations
- Performance monitoring: Track inference latency and resource usage as part of your application metrics

Real-World Impact: Transforming Web Development

The implications of bringing machine learning inference directly to PHP extend far beyond technical capabilities—they represent a fundamental shift in what’s possible for web applications.

Developer Empowerment: Staying Relevant in an AI World

For PHP developers, ORT eliminates the difficult choice between:

- Abandoning years of expertise to learn new languages
- Settling for inefficient workarounds that compromise application performance

Instead, developers can leverage their existing PHP skills while incorporating cutting-edge AI capabilities. This preserves valuable institutional knowledge while enabling teams to deliver modern, intelligent applications.

Web Evolution: Intelligent Features Without Complexity

Imagine these scenarios becoming standard practice:

- WordPress plugins that analyze content quality in real-time
- E-commerce platforms providing personalized recommendations without external APIs
- Content management systems that automatically optimize SEO elements
- Form validation that intelligently detects errors before submission

With ORT, these capabilities can be implemented directly within PHP applications, without the complexity of microservices or external dependencies.

Accessibility: Democratizing Machine Learning

ORT makes machine learning inference as accessible as database operations:

- Just as developers use PDO for database access without understanding database internals
- Now they can use ORT for machine learning without becoming AI specialists

This lowers the barrier to entry for implementing intelligent features, enabling more developers to create applications with meaningful AI capabilities.

Innovation Acceleration: Removing Technical Barriers

When technical barriers disappear, innovation flourishes. ORT enables PHP developers to experiment with machine learning in ways previously impractical:

- Rapid prototyping of AI features without infrastructure changes
- Iterative development of intelligent capabilities alongside existing functionality
- Seamless integration of AI into established development workflows

This environment fosters organic innovation, where developers can identify opportunities for intelligent features within their existing applications.

Practical Applications: Beyond Theory

Let’s explore concrete examples of how ORT can be applied in real-world PHP applications.

Content Analysis for CMS Platforms

Content management systems can implement real-time content analysis:

```php
function analyzeContentQuality(string $content): array {
    $model = ORT\Model::fromFile('/models/content-quality.onnx');
    $input = preprocessContent($content);
    $result = $model->run(['input' => $input])->get();

    return [
        'readability' => $result[0],
        'engagement' => $result[1],
        'seo_score' => $result[2]
    ];
}
```

This function could provide immediate feedback to content creators about their writing quality, helping improve overall content effectiveness without requiring external services.
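The example leaves `preprocessContent()` undefined. What it must produce depends entirely on the features the model was trained on, so the following is only a hypothetical stand-in: it turns text into three simple numeric features using core PHP string functions, and returns a plain array rather than a tensor.

```php
<?php
/**
 * Hypothetical stand-in for preprocessContent(): turns text into a small
 * numeric feature vector. A real model needs the exact features it was trained on.
 */
function preprocessContentSketch(string $content): array
{
    $words         = str_word_count($content, 1); // list of words
    $sentences     = preg_split('/[.!?]+/', $content, -1, PREG_SPLIT_NO_EMPTY);
    $wordCount     = count($words);
    $sentenceCount = max(1, count($sentences));
    $totalLetters  = array_sum(array_map('strlen', $words));

    return [
        (float) $wordCount,                                            // number of words
        (float) ($wordCount / $sentenceCount),                         // avg words per sentence
        $wordCount > 0 ? (float) ($totalLetters / $wordCount) : 0.0,   // avg word length
    ];
}

$features = preprocessContentSketch('PHP runs the web. It is fast!');
// 7 words, 2 sentences, 21 letters in total
```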

Intelligent Form Validation

Traditional form validation often relies on simple rules. With ORT, validation can become intelligent:

```php
function validateEmailIntelligence(string $email): bool {
    $model = ORT\Model::fromFile('/models/email-validation.onnx');
    $features = extractEmailFeatures($email);
    $result = $model->run(['input' => $features])->get();

    return $result[0] > 0.7; // Confidence threshold
}
```

This approach could detect sophisticated phishing attempts or identify disposable email services with greater accuracy than traditional regex patterns.
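A model-based check works best as a second stage behind a cheap syntactic one, so that malformed input never reaches inference. The sketch below shows that gating pattern with PHP's built-in `filter_var()`; the `isAcceptableEmail` name and the `$score` callable (standing in for the model call) are assumptions made for illustration.

```php
<?php
/**
 * Two-stage validation: a cheap syntax check first, then a model score.
 * $score is any callable returning a confidence in [0, 1] for the address.
 */
function isAcceptableEmail(string $email, callable $score, float $threshold = 0.7): bool
{
    if (filter_var($email, FILTER_VALIDATE_EMAIL) === false) {
        return false; // rejected by syntax alone; the model never runs
    }
    return $score($email) > $threshold;
}

// Fake scorers stand in for real inference in this demo.
$confident = fn (string $email): float => 0.9;
$doubtful  = fn (string $email): float => 0.5;

$ok       = isAcceptableEmail('jane@example.com', $confident); // true
$rejected = isAcceptableEmail('jane@example.com', $doubtful);  // false
$invalid  = isAcceptableEmail('not-an-email', $confident);     // false
```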

Personalized User Experiences

E-commerce platforms can implement real-time personalization:

```php
function getPersonalizedRecommendations(User $user, array $currentItems): array {
    $model = ORT\Model::fromFile('/models/recommendation.onnx');
    $input = prepareUserContext($user, $currentItems);
    $output = $model->run(['input' => $input]);

    return extractRecommendations($output->get());
}
```

Unlike external recommendation services, this implementation runs directly within the application, reducing latency and eliminating external API dependencies.
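The `extractRecommendations()` helper is not defined in the example. A common shape for it is a top-k selection over per-item scores; the sketch below assumes the model output has already been mapped to an array of scores keyed by item ID, which is an assumption and not something the article states. The `topK` name and the SKU values are made up.

```php
<?php
/**
 * Hypothetical stand-in for extractRecommendations(): given one score per
 * catalogue item, returns the IDs of the $k highest-scoring items.
 */
function topK(array $scoresByItemId, int $k): array
{
    arsort($scoresByItemId); // sort by score, descending, preserving keys
    return array_slice(array_keys($scoresByItemId), 0, $k);
}

$scores = ['sku-1' => 0.12, 'sku-2' => 0.91, 'sku-3' => 0.47, 'sku-4' => 0.88];
$recommended = topK($scores, 2); // ['sku-2', 'sku-4']
```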

Future Outlook: The Evolution of Web Intelligence

This isn’t a revolution—it’s a natural evolution of web development practices. PHP has consistently adapted throughout its history:

- From simple procedural scripts to object-oriented frameworks
- From basic HTML generation to sophisticated enterprise applications
- Now, from static content delivery to intelligent, responsive experiences

ORT represents PHP’s next evolutionary step: becoming a first-class platform for intelligent web applications.

The Vision: Intelligence Everywhere

The ultimate vision is simple yet profound: every corner of the web should be capable of intelligent behavior. With ORT, PHP developers don’t need to choose between:

- Staying with the language they know and love
- Building the intelligent applications of the future

They can do both—seamlessly integrating AI capabilities into their existing workflows without compromising performance or maintainability.

Infrastructure Transformation

Machine learning inference is transitioning from a specialized capability to essential infrastructure:

- Just as HTTPS became standard
- Just as responsive design became expected
- Intelligent features will become baseline requirements

ORT positions PHP to meet this emerging standard without requiring developers to abandon their expertise or rewrite existing applications.

Implementation Considerations for Production Environments

When adopting ORT in production systems, consider these practical factors:

Model Selection and Optimization

- Choose models appropriate for web inference (smaller, optimized for speed)
- Convert models to ONNX format with inference-specific optimizations
- Quantize models where appropriate to reduce size and improve speed
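To show why quantization shrinks a model, here is the basic arithmetic of 8-bit affine quantization in plain PHP: each 4-byte float32 value is mapped to a 1-byte integer through a scale and a zero point. This is a generic sketch of the technique with made-up function names; in practice quantization is applied to the model with dedicated tooling before deployment, not in application code.

```php
<?php
/** Affine 8-bit quantization parameters for values in [$min, $max]. */
function quantParams(float $min, float $max): array
{
    $scale     = ($max - $min) / 255.0;          // real-value width of one integer step
    $zeroPoint = (int) round(-$min / $scale);    // integer that represents 0.0
    return [$scale, $zeroPoint];
}

/** Maps a float to an integer in [0, 255], clamping out-of-range values. */
function quantize(float $x, float $scale, int $zeroPoint): int
{
    return max(0, min(255, (int) round($x / $scale) + $zeroPoint));
}

/** Maps a quantized integer back to an approximate float. */
function dequantize(int $q, float $scale, int $zeroPoint): float
{
    return ($q - $zeroPoint) * $scale;
}

[$scale, $zp] = quantParams(-1.0, 3.0);  // scale = 4/255, zero point = 64
$q      = quantize(1.234, $scale, $zp);
$approx = dequantize($q, $scale, $zp);   // within scale/2 of 1.234
```

The round trip loses at most half a step of precision for in-range values, which is the accuracy cost traded for the 4x reduction in storage.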

Resource Management

- Implement proper tensor lifecycle management
- Consider persistent tensors for frequently used models
- Monitor memory usage to prevent resource exhaustion

Error Handling and Fallbacks

- Implement graceful degradation when inference fails
- Provide alternative pathways when models aren’t available
- Log errors for monitoring and improvement
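These three points fit in one small wrapper. The sketch below is plain PHP (8.0 or later) and independent of ORT: `inferOrFallback` is a hypothetical helper that runs an inference callable, logs any failure through a caller-supplied logger, and returns a safe default instead of letting the request fail.

```php
<?php
/**
 * Runs $infer; on any error, records it via $log and returns $fallback() instead.
 */
function inferOrFallback(callable $infer, callable $fallback, callable $log): mixed
{
    try {
        return $infer();
    } catch (Throwable $e) {
        $log('Inference failed: ' . $e->getMessage());
        return $fallback();
    }
}

$messages = [];
$result = inferOrFallback(
    fn () => throw new RuntimeException('model file missing'), // simulated failure
    fn () => ['recommendations' => []],                         // safe default
    function (string $message) use (&$messages): void {
        $messages[] = $message;                                 // stand-in for a real logger
    }
);
```

Catching `Throwable` rather than `Exception` also covers engine-level errors, which matters when the failing code lives in a native extension.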

Performance Monitoring

- Track inference latency as part of application metrics
- Monitor resource consumption (CPU, memory)
- Establish baselines for normal operation
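A baseline is usually expressed as latency percentiles rather than averages. The following plain-PHP sketch measures a callable with `hrtime()` and computes a nearest-rank percentile; the helper names are made up, and the summed range is only a placeholder for a real inference call.

```php
<?php
/** Runs $fn and returns [result, elapsed milliseconds]. */
function timed(callable $fn): array
{
    $start  = hrtime(true);                       // monotonic clock, nanoseconds
    $result = $fn();
    return [$result, (hrtime(true) - $start) / 1e6];
}

/** Nearest-rank percentile of a non-empty list of samples. */
function percentile(array $samples, float $p): float
{
    sort($samples);
    $rank = max(1, (int) ceil($p / 100 * count($samples)));
    return (float) $samples[$rank - 1];
}

$latencies = [];
for ($i = 0; $i < 20; $i++) {
    [, $ms] = timed(fn () => array_sum(range(1, 1000))); // placeholder workload
    $latencies[] = $ms;
}
$p95 = percentile($latencies, 95);
```

Tracking p95 or p99 alongside the median makes slow outliers visible, which an average tends to hide.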

Conclusion: Embracing the Intelligent Web

The integration of machine learning into web applications isn’t a passing trend—it’s the natural evolution of software development. As user expectations evolve, applications must become more responsive, personalized, and intelligent.

For PHP developers, ORT provides a practical pathway to participate in this evolution without abandoning their expertise or rewriting existing applications. It represents a thoughtful approach to bringing AI capabilities to the web—one that respects the realities of production environments while opening new possibilities for innovation.

The most successful technology transitions aren’t those that require starting from scratch, but those that build upon existing strengths. ORT enables PHP developers to leverage their deep understanding of web development while incorporating the intelligent capabilities that define modern applications.

As the line between traditional web applications and intelligent systems continues to blur, tools like ORT ensure that PHP remains not just relevant, but essential to the future of the web. This isn’t about replacing what works—it’s about enhancing it with capabilities that meet evolving user expectations.

The web of the future will be intelligent by default, and with ORT, PHP developers are well-positioned to build it.