NVIDIA Orchestrator-8B: How an 8B Model Beats GPT-5 on the Hardest Exam While Costing 70% Less

Core question this post answers: How can an 8-billion-parameter model score 37.1% on Humanity’s Last Exam (HLE) — higher than GPT-5’s 35.1% — while being 2.5× faster and costing only ~30% as much?

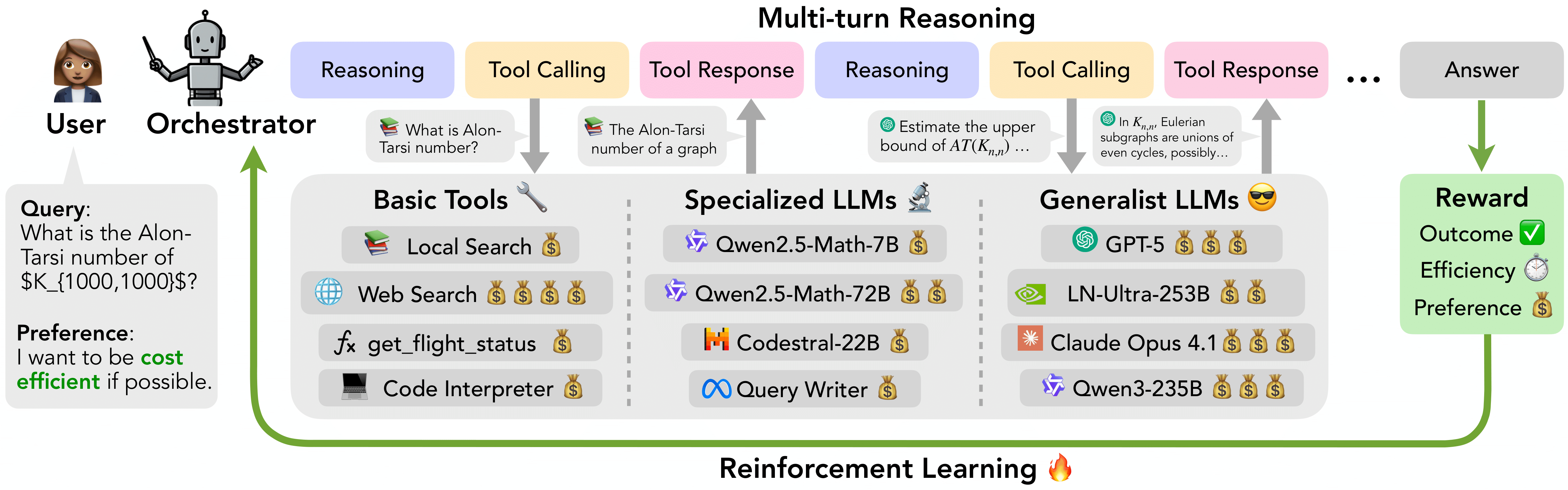

The answer is a complete paradigm shift: stop trying to solve everything inside one giant model. Instead, train a small “conductor” that intelligently delegates subtasks to a heterogeneous orchestra of tools and expert models. That conductor is Orchestrator-8B.

This post is a full technical deep-dive for engineers, researchers, and AI builders: exactly how it works, why it wins, and how to run it yourself today.

Why the Old “One Big Model + Tools” Approach Is Broken

Most tool-using agents today are just a frontier model (GPT-5, Claude Opus 4.1, etc.) with search and code execution bolted on. That works — until you look at the bills and latency.

Three fatal problems:

- Cost explosion on hard, multi-turn problems.
- Irrational tool selection: models either overuse their own family (“self-enhancement bias”) or spam the most expensive LLM at every step.
- Zero user control over spend or vendor preferences.

Orchestrator-8B fixes all three by making a tiny 8B model the decision engine and turning every other model — even GPT-5 itself — into a tool it can call.
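A toy sketch of the control flow this implies, assuming three made-up tools with made-up prices. In Orchestrator-8B the next-tool decision comes from the trained 8B model. Here a hard-coded rule (`toy_policy`, hypothetical) stands in for it, so the example only shows the shape of the loop: pick a tool, call it, record the spend, and stop when ready to answer.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Tool:
    name: str
    cost_usd: float               # hypothetical per-call price
    run: Callable[[str], str]     # query -> observation


@dataclass
class Trace:
    calls: List[str] = field(default_factory=list)
    spent_usd: float = 0.0


def toy_policy(question: str, trace: Trace, observations: List[str]) -> str:
    """Stand-in for the conductor's decision (a learned policy in the real system)."""
    if not trace.calls:
        return "search"                      # cheap retrieval first
    if trace.calls[-1] == "search":
        return "small-math-model"            # then a cheap specialist
    if trace.calls[-1] == "small-math-model" and observations[-1] == "UNSURE":
        return "gpt-5"                       # escalate only when needed
    return "answer"


def demo_tools() -> Dict[str, Tool]:
    """Hypothetical tools; the lambdas fake what a real search API or model would return."""
    return {
        "search": Tool("search", 0.001, lambda q: f"snippets about {q!r}"),
        "small-math-model": Tool("small-math-model", 0.002,
                                 lambda q: "UNSURE" if "hard" in q else "42"),
        "gpt-5": Tool("gpt-5", 0.05, lambda q: "careful answer"),
    }


def orchestrate(question: str, tools: Dict[str, Tool], policy,
                max_steps: int = 5) -> Tuple[List[str], Trace]:
    """Run the pick-call-record loop until the policy says 'answer' or steps run out."""
    trace, observations = Trace(), []
    for _ in range(max_steps):
        choice = policy(question, trace, observations)
        if choice == "answer":
            break
        tool = tools[choice]
        observations.append(tool.run(question))
        trace.calls.append(tool.name)
        trace.spent_usd += tool.cost_usd
    return observations, trace


if __name__ == "__main__":
    for q in ["easy arithmetic", "hard olympiad problem"]:
        _, trace = orchestrate(q, demo_tools(), toy_policy)
        print(q, trace.calls, round(trace.spent_usd, 3))
```

The easy question stops after search and the small model (about $0.003 spent); only the "hard" one escalates to `gpt-5`.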

Source: NVIDIA Research

Real Numbers: Where Orchestrator-8B Destroys Frontier Models

On HLE, Orchestrator-8B beats GPT-5, Claude Opus 4.1, and Qwen3-235B-A22B simultaneously while spending far less.

Tool Usage Breakdown (averaged across HLE, FRAMES, τ²-Bench)

Orchestrator-8B calls GPT-5 less than once every two steps on average — yet still wins. That is surgical efficiency.

The Secret Sauce: ToolOrchestra Training Framework

The model is trained end-to-end with reinforcement learning using Group Relative Policy Optimization (GRPO) and a composite reward that jointly optimizes three objectives:

- Outcome reward: 1 if the final answer is correct, 0 otherwise
- Efficiency reward: lower monetary cost and latency → higher reward
- Preference reward: user-specified weights p_a ∈ [0,1] for each tool a

Final reward for a trajectory τ = correctness × normalized_efficiency × preference_alignment

Wrong answers get zero reward, period. This forces the model to solve the task correctly while obsessing over cost and user preferences.
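To make the gating concrete, here is one plausible reading of the formula above as code. It is a sketch, not NVIDIA's implementation: normalizing efficiency as "best in group ÷ own" for cost and latency, weighting the two equally, and scoring preference alignment as the mean weight of the tools called are all assumptions.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Rollout:
    correct: bool
    cost_usd: float        # total spend for the trajectory (assumed > 0)
    latency_s: float       # wall-clock time (assumed > 0)
    tools_used: List[str] = field(default_factory=list)


def normalized_efficiency(r: Rollout, group: List[Rollout]) -> float:
    """1.0 for the cheapest/fastest rollout in the group, proportionally less otherwise.
    Assumption: cost and latency count equally."""
    best_cost = min(g.cost_usd for g in group)
    best_latency = min(g.latency_s for g in group)
    return 0.5 * (best_cost / r.cost_usd + best_latency / r.latency_s)


def preference_alignment(r: Rollout, prefs: Dict[str, float]) -> float:
    """Mean user weight p_a over the tools called; unlisted tools default to 1.0 (assumption)."""
    if not r.tools_used:
        return 1.0
    return sum(prefs.get(t, 1.0) for t in r.tools_used) / len(r.tools_used)


def reward(r: Rollout, group: List[Rollout], prefs: Dict[str, float]) -> float:
    """correctness x normalized_efficiency x preference_alignment."""
    if not r.correct:
        return 0.0  # wrong answers get zero, no matter how cheap they were
    return normalized_efficiency(r, group) * preference_alignment(r, prefs)


if __name__ == "__main__":
    group = [
        Rollout(True, 0.5, 10.0, ["search"]),
        Rollout(True, 2.0, 20.0, ["gpt-5"]),
        Rollout(False, 0.25, 5.0, ["search"]),
    ]
    prefs = {"search": 1.0, "gpt-5": 0.2}
    print([round(reward(r, group, prefs), 4) for r in group])
```

With these numbers the cheap, correct `search` rollout scores 0.5, the correct but slower and dispreferred `gpt-5` rollout about 0.04, and the wrong rollout 0, even though it was the cheapest.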

Personal take: This is the first time I’ve seen “be cheap” baked directly into the reward signal instead of being a soft guideline. That single design choice changes everything.

Data: ToolScale Dataset (Fully Open)

Training required massive multi-turn trajectories with verifiable answers. NVIDIA built an automated synthesis pipeline that generated ToolScale — now publicly released alongside the filtered GeneralThought-430K dataset.

Hands-on: Run Orchestrator-8B Yourself in <30 Minutes

Everything is open source. Here’s the exact copy-paste workflow.

```bash
# 1. Clone the repo
git clone https://github.com/NVlabs/ToolOrchestra.git
cd ToolOrchestra

# 2. Download evaluation indices & checkpoint (requires HF login)
git lfs install   # Hugging Face repos store large files with Git LFS
git clone https://huggingface.co/datasets/multi-train/index
export INDEX_DIR='/your/path/to/index'
git clone https://huggingface.co/multi-train/ToolOrchestrator
export CHECKPOINT_PATH='/your/path/to/checkpoint'

# 3. Create environments
conda create -n toolorchestra python=3.12 -y && conda activate toolorchestra
pip install -r requirements.txt
pip install -e training/rollout

conda create -n retriever python=3.12 -y && conda activate retriever
conda install pytorch==2.4.0 torchvision==0.19.0 torchaudio==2.4.0 pytorch-cuda=12.1 -c pytorch -c nvidia
pip install transformers datasets pyserini faiss-gpu uvicorn fastapi

conda create -n vllm1 python=3.12 -y && conda activate vllm1
pip install torch transformers vllm
# Subshell, so the working directory stays at the repo root for step 5
(cd evaluation/tau2-bench && pip install -e .)

# 4. Search API (free tier sufficient)
export TAVILY_KEY="tvly-XXXXXXXXXXXXXXXXXXXX"

# 5. Run evaluations
cd evaluation
python run_hle.py   # ~37.1% if everything is set up correctly
python run_frames.py
cd tau2-bench && python run.py
```

Want inference only (no eval servers)?

```bash
git lfs install
git clone https://huggingface.co/nvidia/Orchestrator-8B
# Load with Transformers or vLLM exactly like any other Qwen3-8B model
```
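A minimal sketch of the Transformers route, assuming the clone above sits at `./Orchestrator-8B` and that `torch`, `transformers`, and `accelerate` are installed. It sends a plain chat turn only. Driving real tool calls requires the tool definitions and prompt format used in the repo's scripts, which this sketch does not reproduce.

```python
from typing import Dict, List, Optional


def build_messages(question: str, system_prompt: Optional[str] = None) -> List[Dict[str, str]]:
    """Chat-format input; the real orchestrator prompt also lists tools (not shown here)."""
    messages: List[Dict[str, str]] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": question})
    return messages


def generate(model_dir: str, question: str, max_new_tokens: int = 512) -> str:
    # Lazy imports: build_messages stays usable without torch/transformers installed.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_dir)
    model = AutoModelForCausalLM.from_pretrained(
        model_dir,
        torch_dtype=torch.bfloat16,  # 16-bit weights
        device_map="auto",           # requires the `accelerate` package
    )
    prompt = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]  # drop the echoed prompt
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    print(generate("./Orchestrator-8B", "What is 17 * 23?"))
```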

~18–20 GB VRAM in FP16, ~10 GB with INT8 — fits on a single RTX 4090.
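The VRAM figures follow from simple arithmetic. A back-of-the-envelope sketch, assuming roughly 8.2B parameters for the Qwen3-8B backbone: the weights alone take parameters × bytes per parameter, and the KV cache, activations, and framework overhead account for the rest of the ~18–20 GB figure.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for the weights alone, in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumption: ~8.2B parameters, the published size of the Qwen3-8B backbone.
NUM_PARAMS = 8.2e9

if __name__ == "__main__":
    for label, nbytes in [("FP16/BF16", 2), ("INT8", 1)]:
        gb = weight_memory_gb(NUM_PARAMS, nbytes)
        # KV cache, activations, and the CUDA context come on top of this number.
        print(f"{label}: {gb:.1f} GB of weights")
```

This gives 16.4 GB of weights for FP16/BF16 and 8.2 GB for INT8. Both fit in the 24 GB of an RTX 4090, with the FP16 case getting tighter once a long context fills the KV cache.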

Customization Guide: Turn It Into Your Private Agent Fleet

- Replace any LLM call in `LLM_CALL.py`: point it to your internal vLLM, Ollama, or any other OpenAI-compatible endpoint.
- Edit `tool_config` in the eval scripts to add or remove tools.
- Modify `tools.json` for custom function calling.
- Change user preference weights at inference time; the model obeys them natively.
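Repointing an LLM call works the same way for any OpenAI-compatible `/v1/chat/completions` endpoint: vLLM's `vllm serve` exposes one (default port 8000), and Ollama exposes one under `http://localhost:11434/v1`. The sketch below uses only the standard library and attaches one OpenAI-style function schema. The `calculator` tool is hypothetical, and whether the repo's `tools.json` uses exactly this shape is an assumption; check the repo's files before copying it over. The internals of `LLM_CALL.py` are not reproduced here.

```python
import json
import urllib.request
from typing import Any, Dict, List, Optional

# OpenAI-style function schema. HYPOTHETICAL tool, for illustration only.
CALCULATOR_TOOL: Dict[str, Any] = {
    "type": "function",
    "function": {
        "name": "calculator",
        "description": "Evaluate an arithmetic expression and return the result.",
        "parameters": {
            "type": "object",
            "properties": {"expression": {"type": "string"}},
            "required": ["expression"],
        },
    },
}


def build_chat_request(model: str, prompt: str,
                       tools: Optional[List[Dict[str, Any]]] = None) -> Dict[str, Any]:
    """JSON body for POST {base_url}/chat/completions."""
    body: Dict[str, Any] = {"model": model,
                            "messages": [{"role": "user", "content": prompt}]}
    if tools:
        body["tools"] = tools
    return body


def post_chat(base_url: str, body: Dict[str, Any],
              api_key: str = "EMPTY", timeout: float = 60.0) -> Dict[str, Any]:
    """Send the request and return the parsed JSON response."""
    req = urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},  # often ignored by local servers
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # The model name must match whatever name your server is serving.
    body = build_chat_request("Qwen/Qwen3-32B", "What is 2**20?", [CALCULATOR_TOOL])
    reply = post_chat("http://localhost:8000/v1", body)
    print(reply["choices"][0]["message"])
```

With vLLM, structured tool calls only come back when the server is started with tool calling enabled (`--enable-auto-tool-choice` plus a `--tool-call-parser` matching the model).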

One-Page Summary

Quick Checklist to Get 37.1% on HLE Tonight

- Get a Tavily API key
- Clone the repo and the model
- Create the three conda envs
- Launch vLLM servers for any expert models you want to use
- Run `python run_hle.py` → celebrate

FAQ

Q: Can I use Orchestrator-8B commercially?

A: Research & development only under the current NVIDIA license. Contact NVIDIA legal for commercial use.

Q: Do I have to use GPT-5?

A: No. You can replace every external call with a locally served model (Llama-3.3-70B, Qwen3-235B, Mixtral, etc.). Accuracy drops, but API spend falls to nearly zero; you pay only for your own GPUs.

Q: Why GRPO instead of PPO?

A: Better stability and sample efficiency in multi-objective settings (per the paper).
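The defining step of GRPO, as introduced in the DeepSeekMath paper, is how it computes advantages: each rollout's reward is standardized against the other rollouts sampled for the same prompt, so no separate value network (critic) has to be trained, unlike PPO. Below is a minimal sketch of just that step. The full objective also includes a clipped probability ratio and a KL penalty, which are omitted here, and NVIDIA's exact normalization may differ.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """Advantage of each rollout = (reward - group mean) / (group std + eps)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population std over the group
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Four rollouts for the same question: two earned reward, two scored zero.
    print(group_relative_advantages([0.5, 0.0375, 0.0, 0.0]))
```

Rollouts above the group average get positive advantages and are reinforced. When every rollout scores the same (for example, all wrong), all advantages are zero and that group contributes no learning signal.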

Q: How do I set tool preferences?

A: Pass a dict like {"gpt-5": 0.2, "local-llama-70b": 1.0, "claude": 0.0} — model respects it.
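The post does not spell out how the dict reaches the model. One plausible route, sketched here as an assumption, is to validate the weights against the [0,1] range and render them into the orchestrator's instructions. The function names and prompt wording are hypothetical, not the repo's API.

```python
from typing import Dict


def validate_preferences(prefs: Dict[str, float]) -> Dict[str, float]:
    """Check that every per-tool weight p_a lies in [0, 1]."""
    for tool, weight in prefs.items():
        if not 0.0 <= weight <= 1.0:
            raise ValueError(f"preference for {tool!r} must be in [0, 1], got {weight}")
    return prefs


def preference_instruction(prefs: Dict[str, float]) -> str:
    """Render weights as plain-text instructions (HYPOTHETICAL wording).
    Tools are listed from most to least preferred, ties broken by name."""
    ranked = sorted(validate_preferences(prefs).items(), key=lambda kv: (-kv[1], kv[0]))
    lines = [f"- {tool}: {weight:.1f}" for tool, weight in ranked]
    return "Tool preferences (1.0 = prefer, 0.0 = avoid):\n" + "\n".join(lines)


if __name__ == "__main__":
    print(preference_instruction({"gpt-5": 0.2, "local-llama-70b": 1.0, "claude": 0.0}))
```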

Q: Is the HLE test set public?

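A: The main HLE question set is published on Hugging Face (gated access), and its authors keep an additional private held-out set. The repo provides the official eval script and the expected JSONL format.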

Q: VRAM requirements?

A: ~20 GB FP16, ~10 GB INT8 — single 4090 is enough.

Q: Can it run without any external models?

A: Yes, but accuracy collapses to ~20%. Its power comes from delegation.

Q: Will full training code be released?

A: Inference, evaluation, data synthesis, and the main RL loop are already public — you can reproduce everything today.

Final Thought

Orchestrator-8B is the clearest proof yet that the future of agents is not “who has the biggest model” but “who orchestrates resources most intelligently.”

An 8B conductor that knows exactly when to spend $0.001 on search, when to ask a cheap math model, and when — only when — to wake up GPT-5 is worth more than any single monolithic trillion-parameter behemoth.

The era of the conductor has begun.
