CodeMixBench: Evaluating Large Language Models on Multilingual Code Generation

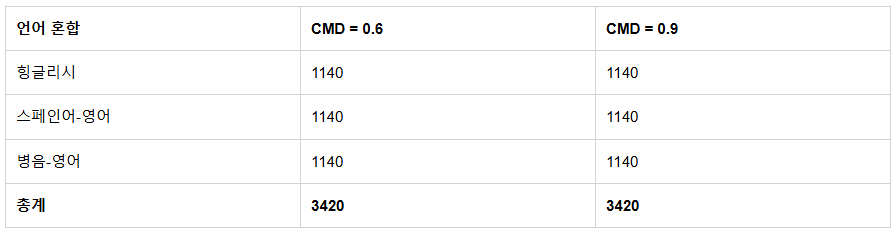

▲ Visual representation of CodeMixBench’s test dataset structure

Why Code-Mixed Code Generation Matters

In Bangalore’s tech parks, developers routinely write comments in Hinglish (Hindi-English mix). In Mexico City, programmers alternate between Spanish and English terms in documentation. This code-mixing phenomenon is ubiquitous in global software development, yet existing benchmarks for Large Language Models (LLMs) overlook this reality. CodeMixBench emerges as the first rigorous framework addressing this gap.

Part 1: Code-Mixing – The Overlooked Reality

1.1 Defining Code-Mixing

Code-mixing occurs when developers blend multiple languages in code-related text elements:

    # Validate user ka input (Hindi-English)
    def validate_input(user_input):
        if not user_input:
            raise ValueError("Khali input nahi chalega!")  # Hindi-English error message

1.2 Limitations of Current Benchmarks

Traditional code generation benchmarks like HumanEval and MBPP suffer from three critical flaws:

- Monolingual Bias: Exclusively test English-only prompts
- Real-World Disconnect: Fail to reflect multilingual developer workflows
- Model Skewness: Overestimate performance of English-centric models

Part 2: CodeMixBench’s Architectural Innovations

2.1 Core Design Features

| Feature | Description |
|---|---|
| Multilingual Support | Covers Hinglish, Spanish-English, and Pinyin-English combinations |
| Controlled Mixing | Precisely regulates the mixing ratio via the CMD (Controllable Code-Mixing Degree) parameter |
| Semantic Preservation | Maintains 90%+ semantic fidelity using the GAME scoring system |

2.2 Four-Step Dataset Construction

- Base Translation: Preserves programming terms while translating natural language components
- POS Tagging: Identifies replaceable nouns/verbs/adjectives using spaCy
- Frequency-Driven Mixing: Applies real-world code-mixing patterns from Twitter corpora
- Romanization: Ensures compatibility with LLM tokenizers through systematic transliteration
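The role of the CMD parameter in the steps above can be illustrated with a toy sketch. This is not the benchmark's implementation: the `HINGLISH_LEXICON` dictionary, the `PROTECTED_TERMS` set, the `mix_prompt` function, and the rule of swapping the first `round(cmd * n)` eligible words are all assumptions made for illustration. The real pipeline relies on POS tagging and corpus frequencies rather than a hand-written lexicon.

```python
import re

# Hypothetical mini-lexicon of romanized Hindi replacements (illustrative only).
HINGLISH_LEXICON = {
    "write": "likho",
    "sum": "jod",
    "numbers": "sankhyaon",
    "list": "suchi",
}

# Programming terms that stay in English, mirroring the "Base Translation" step.
PROTECTED_TERMS = {"function", "list", "return"}


def mix_prompt(prompt: str, cmd: float) -> str:
    """Replace roughly a `cmd` share of the eligible words with lexicon entries."""
    if not 0.0 <= cmd <= 1.0:
        raise ValueError("cmd must be between 0 and 1")
    # Split into alternating word / non-word runs so joining restores spacing.
    tokens = re.findall(r"\w+|\W+", prompt)
    eligible = [
        i for i, tok in enumerate(tokens)
        if tok.lower() in HINGLISH_LEXICON and tok.lower() not in PROTECTED_TERMS
    ]
    budget = round(cmd * len(eligible))  # number of words to swap
    for i in eligible[:budget]:
        tokens[i] = HINGLISH_LEXICON[tokens[i].lower()]
    return "".join(tokens)


PROMPT = "Write a function that returns the sum of numbers in a list"
print(mix_prompt(PROMPT, 0.0))
print(mix_prompt(PROMPT, 0.6))
print(mix_prompt(PROMPT, 1.0))
```

At `cmd=0.0` the prompt is unchanged, and at `cmd=1.0` every eligible word is swapped while the protected term `list` stays in English. Here the ratio is measured over eligible words only, which is a simplification; the benchmark may define the degree differently.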

Part 3: Performance Showdown of 17 LLMs

3.1 Key Findings at a Glance

| Model Category | CMD=0.6 Performance Retention | CMD=0.9 Performance Retention |
|---|---|---|
| Large Instruction-Tuned (7B+ params) | 85-92% | 70-78% |
| Medium Distilled (3-7B params) | 72-80% | 55-65% |
| Small Base Models (1-3B params) | 50-60% | 30-40% |
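The article does not spell out how performance retention is computed. One plausible reading, assumed here, is the ratio of a model's score on code-mixed prompts to its score on the original English prompts. The function name and the example scores below are hypothetical and are not taken from the benchmark's results.

```python
def performance_retention(score_english: float, score_mixed: float) -> float:
    """Share of the English-prompt score that survives under code-mixing.

    Assumed definition: mixed-prompt score divided by English-prompt score.
    """
    if score_english <= 0:
        raise ValueError("English baseline score must be positive")
    return score_mixed / score_english


# Hypothetical scores: 0.40 on English prompts, 0.34 on code-mixed prompts.
retention = performance_retention(0.40, 0.34)
print(f"{retention:.0%}")
```

Under this reading, a retention of 85% means the model solves 85% as many tasks with mixed prompts as it does with English ones.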

3.2 Top Performers Analysis

- OpenCoder-8B-Instruct: Maintains 39% Pass@1 accuracy at CMD=0.9, thanks to 13% code-mixed data in pre-training.
- Qwen2.5-Coder-1.5B: Achieves stable performance despite its small size, trained on 5.5T multilingual code tokens.
- DeepSeek-R1-Distill-Llama-8B: Shows a mere 8% performance drop at medium mixing levels via code distillation techniques.
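For readers unfamiliar with Pass@1, the sketch below shows the unbiased pass@k estimator commonly used with HumanEval-style benchmarks. Whether CodeMixBench computes its scores exactly this way is an assumption; the function name `pass_at_k` and the sample counts are illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate the probability that at least one of k samples passes.

    n: samples generated per task, c: samples that pass the tests, k: budget.
    Uses 1 - C(n - c, k) / C(n, k), the chance that a random size-k subset
    of the n samples contains at least one passing sample.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical task: 10 samples generated, 3 of them pass the unit tests.
print(pass_at_k(n=10, c=3, k=1))
```

With `k=1` the estimator reduces to the fraction of passing samples, `c / n`, averaged over all tasks in the benchmark.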

Part 4: Practical Guidance for Developers

4.1 Model Selection Framework

▲ Decision tree for model selection based on team requirements
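The decision tree itself is not reproduced in the text. As a rough stand-in, the sketch below filters model categories by the lower bounds of the retention ranges from the table in section 3.1. The function `categories_meeting` and the idea of selecting on a minimum retention are assumptions, not the article's own selection procedure.

```python
# Lower bounds of the retention ranges reported in section 3.1.
RETENTION_LOWER_BOUND = {
    "Large Instruction-Tuned (7B+ params)": {0.6: 0.85, 0.9: 0.70},
    "Medium Distilled (3-7B params)": {0.6: 0.72, 0.9: 0.55},
    "Small Base Models (1-3B params)": {0.6: 0.50, 0.9: 0.30},
}


def categories_meeting(min_retention: float, cmd: float) -> list:
    """Return the categories whose worst-case retention meets the target."""
    if cmd not in (0.6, 0.9):
        raise ValueError("the table only reports CMD=0.6 and CMD=0.9")
    return [
        name
        for name, bounds in RETENTION_LOWER_BOUND.items()
        if bounds[cmd] >= min_retention
    ]


# A team that expects heavy mixing and needs at least 70% retention.
print(categories_meeting(min_retention=0.70, cmd=0.9))
```

Using the lower bound is a deliberately conservative choice: a category qualifies only if even its weakest reported result meets the requirement.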

4.2 Optimization Triad

- Data Diversification: Incorporate code-mixed examples into training data
- Tokenizer Enhancement: Improve handling of romanized text
- Targeted Fine-Tuning: Conduct instruction-tuning on mixed prompts

Part 5: Essential FAQs

Q1: Does code-mixing compromise code safety?

Testing reveals code syntax remains unaffected, but semantic misunderstandings in prompts require monitoring.

Q2: When should teams prioritize this issue?

Consider it a priority if your team:

- Has multilingual members
- Develops region-specific features
- Modifies open-source models

Q3: How to adapt existing models?

Three-step implementation:

- Add code-mixed examples in prompt engineering
- Preprocess documentation with romanization
- Validate via CodeMixBench compatibility tests
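The first step, adding code-mixed examples to prompts, can be sketched as a small few-shot prompt builder. The `### Instruction` / `### Solution` template, the function `build_few_shot_prompt`, and the Hinglish instructions are all hypothetical; adapt the template to whatever format your model expects.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], task: str) -> str:
    """Prepend code-mixed (instruction, solution) pairs to a new instruction."""
    parts = []
    for instruction, solution in examples:
        parts.append(f"### Instruction\n{instruction}\n### Solution\n{solution}\n")
    # The final block is left open so the model completes the solution.
    parts.append(f"### Instruction\n{task}\n### Solution\n")
    return "\n".join(parts)


# Hypothetical Hinglish example, in the style of the snippet in section 1.1.
EXAMPLES = [
    (
        "Ek function likho jo user ka input validate kare.",
        "def validate_input(user_input):\n"
        "    if not user_input:\n"
        '        raise ValueError("Khali input nahi chalega!")\n'
        "    return user_input",
    ),
]

prompt = build_few_shot_prompt(
    EXAMPLES, "Ek function likho jo list ka sum return kare."
)
print(prompt)
```

Showing the model one or two solved code-mixed tasks before the real request gives it in-context evidence of the mixing style, without any retraining.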

Part 6: Future Landscape

6.1 Technology Roadmap

| Phase | Objective | Key Technologies |
|---|---|---|
| Short-Term (1-2 yrs) | Expand language pairs | Low-resource NLP techniques |
| Mid-Term | Support mixed variable names | Symbol-language joint modeling |
| Long-Term | Dynamic mixing adaptation | Context-aware code generation |

6.2 Industry Implications

- Dev Tools: IDE plugins with auto-complete for mixed prompts
- Tech Education: Enable mother-tongue-English hybrid coding instruction
- Open Source: Standardize multilingual code contributions

Conclusion

CodeMixBench’s findings reveal a critical truth: LLMs must develop multilingual cognition mirroring human developers. Whether debugging Japanese-English algorithms in Tokyo or writing Portuguese-English docs in São Paulo, code generation tools must evolve into true global collaborators.

This research pioneers three actionable paths:

- Establish multilingual code quality standards
- Refine version control for mixed-code projects
- Develop adaptive mixing frameworks

Great software adapts to human habits, not the other way around. CodeMixBench applies this principle to the AI era, challenging us to build truly inclusive programming intelligence.
