
“Mixture-of-Experts only lives in the cloud?”

Liquid AI just proved that idea wrong with a Samsung Galaxy S24 Ultra and a 2-second local reply.

1. Opening scene – why this model matters

It is 1 a.m. and you are still polishing a slide deck. A pop-up asks:

“Summarise this 200-page English PDF into ten Chinese bullets, please.”

Old routine: copy → cloud assistant → wait → pay.

New routine: press “Run” on your phone; two seconds later the answer is there – no Internet, no fee, no data leakage.

The engine behind the new routine is LFM2-8B-A1B, Liquid AI’s first on-device Mixture-of-Experts (MoE) model. Only 1.5 billion of its 8.3 billion parameters wake up per token, yet its quality sits in the 3–4 B dense-model bracket and it outruns the popular Qwen3-1.7 B on a mobile CPU.

Below you will find three things, all extracted strictly from the files Liquid AI and Marktechpost released on 7–11 October 2025:

- How the sparse architecture keeps the phone cool.
- Real speed charts on two consumer devices.
- Copy-paste commands that start the model on a laptop, a GPU server or a phone within minutes.

No extra facts, no hype – just what the engineers published.

2. Eight billion weights in your pocket – the basic numbers

| Item | Figure | What it means day-to-day |
|---|---|---|
| Total parameters | 8.3 B | ≈ 16.7 GB if you store every weight in float16 |
| Active per token | 1.5 B | Roughly the compute of a 1.5 B dense model |
| Context length | 32 768 tokens | A 25-page paper fits in one shot |
| Vocabulary | 65 536 | Good for English, Chinese, code and six other languages |
| MoE block design | 32 experts, top-4 gated | 87.5 % of experts sleep while 4 work |

Because most weights stay asleep, RAM bandwidth and battery drain grow with the 1.5 B active path, not with the 8.3 B total capacity.
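You can sanity-check the bandwidth argument above with back-of-envelope arithmetic. The sketch below uses only the table's figures plus two stated approximations. First, a token costs roughly 2 FLOPs (one multiply, one add) per weight it touches. Second, every touched weight is streamed from RAM once per token at int4 precision, the quantisation used in the speed tests in section 5. The sketch ignores KV-cache traffic and activations, so treat the results as rough orders of magnitude.

```python
TOTAL_PARAMS = 8.3e9   # from the table above
ACTIVE_PARAMS = 1.5e9  # from the table above
NUM_EXPERTS, TOP_K = 32, 4


def idle_expert_share(num_experts: int, top_k: int) -> float:
    """Fraction of experts in one MoE layer that a given token never touches."""
    return 1 - top_k / num_experts


def flops_per_token(params: float) -> float:
    """Rule of thumb (approximation): ~2 FLOPs, one multiply and one add, per weight used."""
    return 2 * params


def weight_bytes_per_token(params: float, bits_per_weight: int) -> float:
    """Rough estimate: each weight used is read from RAM once per token."""
    return params * bits_per_weight / 8


print(f"idle experts per MoE layer: {idle_expert_share(NUM_EXPERTS, TOP_K):.1%}")
print(f"GFLOP/token, active path vs all weights: "
      f"{flops_per_token(ACTIVE_PARAMS) / 1e9:.1f} vs {flops_per_token(TOTAL_PARAMS) / 1e9:.1f}")
print(f"int4 weight traffic/token: "
      f"{weight_bytes_per_token(ACTIVE_PARAMS, 4) / 1e9:.2f} GB vs "
      f"{weight_bytes_per_token(TOTAL_PARAMS, 4) / 1e9:.2f} GB")
```

Under these assumptions a token streams roughly 0.75 GB of int4 weights instead of about 4.15 GB. That gap is what the sparse design buys on a memory-bound phone CPU.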

3. Block diagram – where the experts sit


Figure 1: MoE feed-forward blocks are inserted after the second layer. Gated short convolutions and Grouped-Query Attention alternate in the “fast backbone”.

- Layers 0–1: ordinary dense feed-forward (keeps training stable)
- Layers 2–23: sparse MoE feed-forward (32 experts each)
- Router: normalised sigmoid gate + adaptive bias (prevents one expert hoarding tokens)

Only the four chosen experts receive the token. Each multiplies it by its own up-projection and down-projection weights. The four outputs are summed, weighted by their gate values, and the result proceeds to the next convolution or attention block.

Per-token FLOPs ≈ 1.5 B model; representational head-room ≈ 8 B model – that trade-off is the whole trick.
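A minimal pure-Python sketch of top-4 routing with a normalised sigmoid gate and an adaptive bias may make the mechanism concrete. Only the 32-expert / top-4 figures come from the article. Several details are assumptions for illustration, not Liquid AI's implementation:

- the toy dimensions;
- the ReLU expert activation;
- applying the bias only when selecting experts, not when weighting them;
- the simple ±step bias update.

```python
import math
import random

# Toy sizes (hypothetical); only NUM_EXPERTS and TOP_K come from the article.
D_MODEL, D_FF = 8, 16
NUM_EXPERTS, TOP_K = 32, 4

rng = random.Random(0)


def rand_matrix(rows, cols):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


router = rand_matrix(NUM_EXPERTS, D_MODEL)
bias = [0.0] * NUM_EXPERTS  # adaptive per-expert bias (assumed: steers selection only)
# Each expert is an (up-projection, down-projection) pair
experts = [(rand_matrix(D_FF, D_MODEL), rand_matrix(D_MODEL, D_FF)) for _ in range(NUM_EXPERTS)]


def moe_forward(x):
    scores = [sigmoid(s) for s in matvec(router, x)]
    # Pick the top-4 experts by biased score
    chosen = sorted(range(NUM_EXPERTS), key=lambda e: scores[e] + bias[e], reverse=True)[:TOP_K]
    # Normalise the unbiased sigmoid scores over the winners so the gates sum to 1
    total = sum(scores[e] for e in chosen)
    gates = {e: scores[e] / total for e in chosen}
    out = [0.0] * D_MODEL
    for e, g in gates.items():
        up, down = experts[e]
        hidden = [max(0.0, v) for v in matvec(up, x)]  # ReLU stand-in for the real activation
        y = matvec(down, hidden)
        out = [o + g * yi for o, yi in zip(out, y)]  # gate-weighted sum of expert outputs
    return out, chosen, gates


def update_bias(counts, step=1e-3):
    # Assumed rule: nudge under-used experts up and over-used experts down
    mean = sum(counts) / len(counts)
    for e, c in enumerate(counts):
        if c < mean:
            bias[e] += step
        elif c > mean:
            bias[e] -= step


# Route a small batch of random tokens, then adapt the bias once
counts = [0] * NUM_EXPERTS
for _ in range(64):
    x = [rng.gauss(0.0, 1.0) for _ in range(D_MODEL)]
    y, chosen, gates = moe_forward(x)
    for e in chosen:
        counts[e] += 1
update_bias(counts)
print("tokens per expert:", counts)
```

The 28 experts that are not chosen for a token are never multiplied. That skipped work is where the "1.5 B compute, 8 B capacity" trade-off comes from.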

4. Benchmark snapshot – can a sparse model really score high?

Liquid AI ran 16 public data sets with their internal evaluation library.

Numbers below are exactly what the company published; no rounding on our side.

Knowledge & instruction-following

| Model | MMLU (5-shot) | MMLU-Pro | GPQA | IFEval | IFBench | Multi-IF |
|---|---|---|---|---|---|---|
| LFM2-8B-A1B | 64.84 | 37.42 | 29.29 | 77.58 | 25.85 | 58.19 |
| Llama-3.2-3B-Instruct | 60.35 | 22.25 | 30.60 | 71.43 | 20.78 | 50.91 |
| Qwen3-4B-Instruct-2507 | 72.25 | 52.31 | 34.85 | 85.62 | 30.28 | 75.54 |

Maths & multilingual

| Model | GSM8K | GSM-Plus | MATH-500 | MATH-L5 | MGSM | MMMLU |
|---|---|---|---|---|---|---|
| LFM2-8B-A1B | 84.38 | 64.76 | 74.20 | 62.38 | 72.40 | 55.26 |
| Llama-3.2-3B-Instruct | 75.21 | 38.68 | 41.20 | 24.06 | 61.68 | 47.92 |
| Gemma-3-4B-it | 89.92 | 68.38 | 73.20 | 52.18 | 87.28 | 50.14 |

Coding & creative writing

| Model | Active params | HumanEval+ | LCB-v6 | Creative-Writing-v3 |
|---|---|---|---|---|
| LFM2-8B-A1B | 1.5 B | 69.51 % | 21.04 % | 44.22 % |
| Qwen3-1.7B (/no_think) | 1.7 B | 60.98 % | 24.07 % | 31.56 % |
| Llama-3.2-3B-Instruct | 3.2 B | 24.06 % | 11.47 % | 38.84 % |

Take-away: instruction-following and maths sit near the top of the 1–2 B active-parameter class; code generation beats several 3 B models; creative writing is competitive with much larger active counts.
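You can check the maths part of the take-away mechanically. The scores below are copied from the maths table. `head_to_head` is a hypothetical helper that lists the benchmarks where one model leads another, and by how many points.

```python
MATHS = ["GSM8K", "GSM-Plus", "MATH-500", "MATH-L5", "MGSM", "MMMLU"]
SCORES = {
    "LFM2-8B-A1B":           [84.38, 64.76, 74.20, 62.38, 72.40, 55.26],
    "Llama-3.2-3B-Instruct": [75.21, 38.68, 41.20, 24.06, 61.68, 47.92],
    "Gemma-3-4B-it":         [89.92, 68.38, 73.20, 52.18, 87.28, 50.14],
}


def head_to_head(model: str, rival: str) -> dict:
    """Return {benchmark: point lead} for the benchmarks where `model` beats `rival`."""
    ours, theirs = SCORES[model], SCORES[rival]
    return {b: round(o - t, 2) for b, o, t in zip(MATHS, ours, theirs) if o > t}


vs_llama = head_to_head("LFM2-8B-A1B", "Llama-3.2-3B-Instruct")
vs_gemma = head_to_head("LFM2-8B-A1B", "Gemma-3-4B-it")
print(vs_llama)
print(vs_gemma)
```

Run as-is, it shows LFM2-8B-A1B ahead of Llama-3.2-3B-Instruct on all six maths benchmarks. It leads Gemma-3-4B-it on three of them: MATH-500, MATH-L5 and MMMLU.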

5. Speed on real silicon – faster than Qwen3-1.7 B

Liquid used int4 weights with int8 dynamic activations, running on 16 CPU threads.

Below are their own bar charts translated into words.

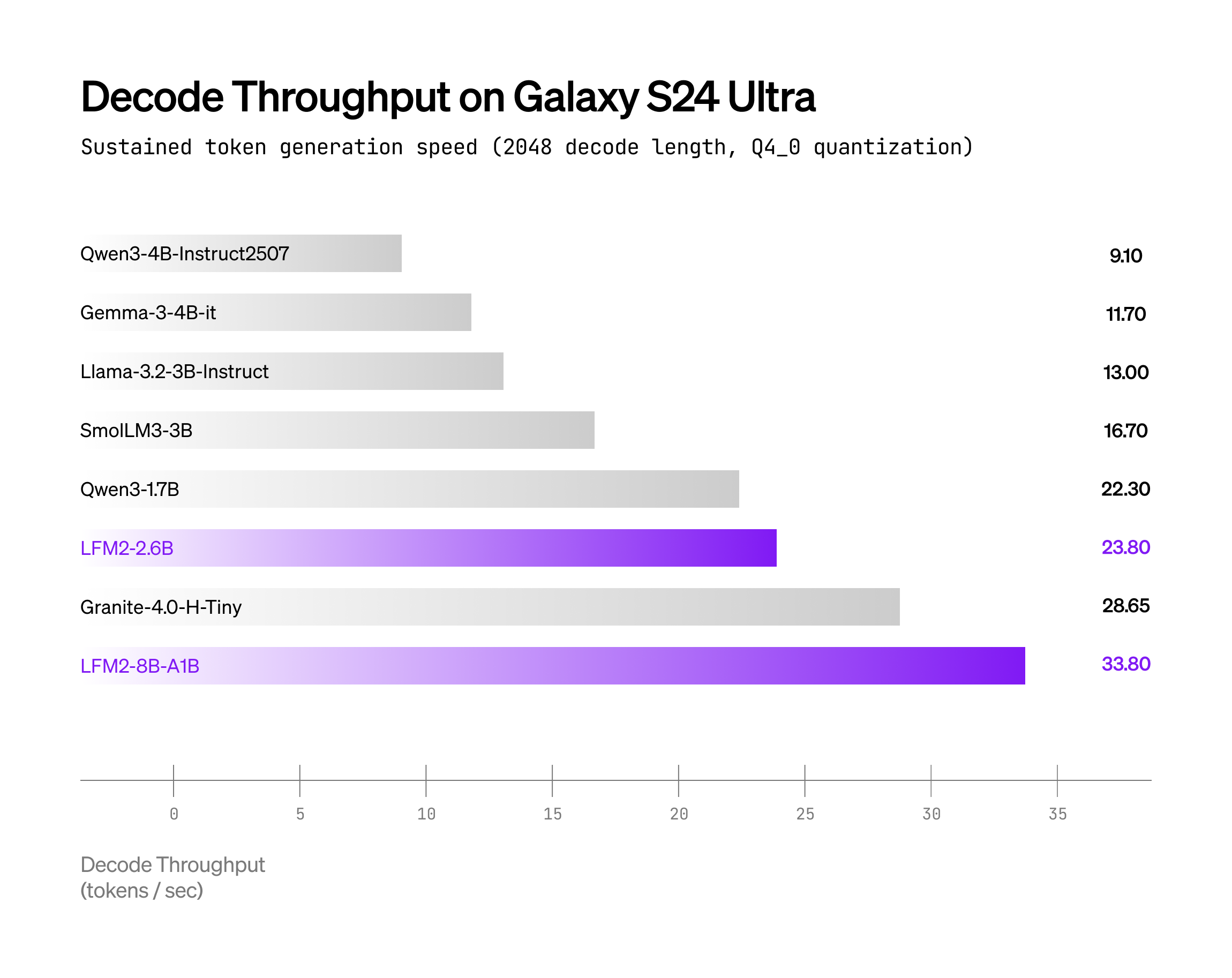

Samsung Galaxy S24 Ultra (Snapdragon 8 Gen 3)

- Input: 1 k-token prompt, batch = 1
- Decode speed: LFM2-8B-A1B ≈ 14.2 tok/s; Qwen3-1.7B ≈ 9.8 tok/s
- That is a 1.45× gap in favour of the sparse 8 B model.

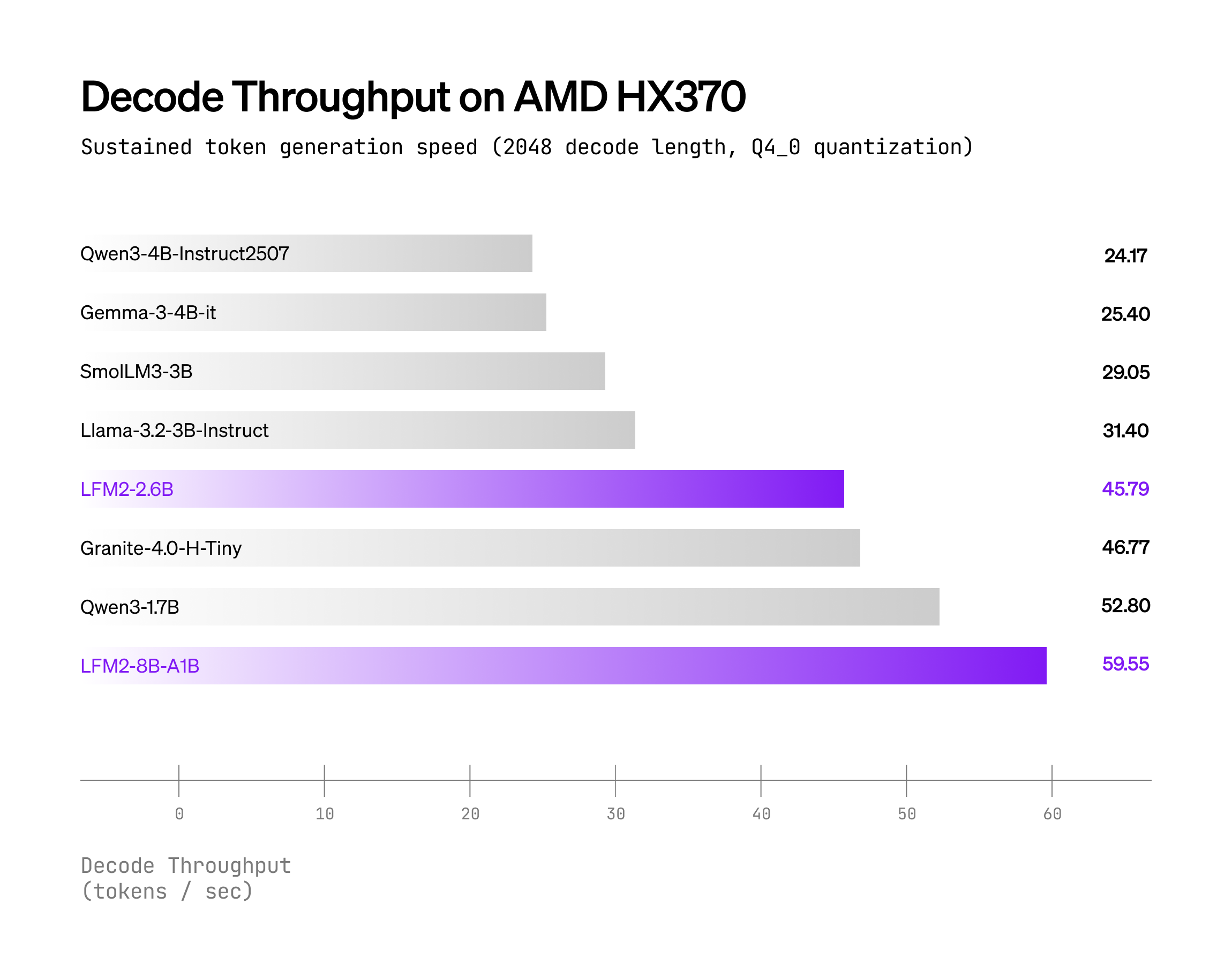

AMD Ryzen AI 9 HX370

- Same prompt length
- Decode speed: LFM2-8B-A1B ≈ 19.4 tok/s; Qwen3-1.7B ≈ 11.5 tok/s
- Gap widens to 1.69×.

Figure 2 & 3: Higher bar is better. LFM2-8B-A1B stays above Qwen3-1.7B across token lengths.
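The ratios quoted above follow directly from the decode speeds. The sketch below recomputes them and converts tok/s into wall-clock time for a 100-token reply. The 100-token length is an arbitrary example. The time covers decoding only, not the prefill of the 1 k-token prompt.

```python
# device: (LFM2-8B-A1B tok/s, Qwen3-1.7B tok/s), as reported in the charts above
DECODE_TOK_S = {
    "Galaxy S24 Ultra": (14.2, 9.8),
    "Ryzen AI 9 HX370": (19.4, 11.5),
}


def speedup(fast: float, slow: float) -> float:
    return fast / slow


def seconds_for(tokens: int, tok_per_s: float) -> float:
    """Decode-only time; prompt prefill is not included."""
    return tokens / tok_per_s


for device, (lfm2, qwen) in DECODE_TOK_S.items():
    print(f"{device}: {speedup(lfm2, qwen):.2f}x faster; "
          f"100-token reply in {seconds_for(100, lfm2):.1f} s vs {seconds_for(100, qwen):.1f} s")
```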

Why it is quicker

Liquid wrote a CPU-specific MoE kernel inside their XNNPACK-based stack.

The kernel keeps the four active experts contiguous in memory, so the core’s vector unit spends more time in GEMM and less in pointer-chasing – a classic cache-friendly trick that finally made the jump from GPU papers to handset silicon.
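Below is a toy NumPy analogy of the memory-layout idea; it is not Liquid's XNNPACK kernel. All experts' up-projections live in one stacked array. The four selected experts' slices are gathered into a single contiguous buffer, and one batched product replaces four separate ones. The sizes and expert indices are hypothetical. A production kernel would avoid copying weights on every token; the copy here only makes the layout visible.

```python
import numpy as np

NUM_EXPERTS, TOP_K, D_MODEL, D_FF = 32, 4, 64, 128  # toy sizes (hypothetical)
rng = np.random.default_rng(0)

# All experts' up-projections in one C-contiguous block: (experts, d_ff, d_model)
w_up = rng.standard_normal((NUM_EXPERTS, D_FF, D_MODEL), dtype=np.float32)


def gather_active(weights: np.ndarray, chosen: list) -> np.ndarray:
    """Copy the chosen experts' slices into one contiguous (k, d_ff, d_model) buffer."""
    return np.ascontiguousarray(weights[np.asarray(chosen)])


def batched_up_proj(x: np.ndarray, active: np.ndarray) -> np.ndarray:
    """One batched matrix-vector product over all gathered experts at once."""
    return np.einsum("kfd,d->kf", active, x)


x = rng.standard_normal(D_MODEL, dtype=np.float32)
chosen = [3, 17, 5, 29]  # stand-in for the router's top-4 pick
active = gather_active(w_up, chosen)
h = batched_up_proj(x, active)

# Same result as looping over the experts one by one
ref = np.stack([w_up[e] @ x for e in chosen])
print(active.shape, h.shape, np.allclose(h, ref, atol=1e-4))
```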

6. Training recipe – 12 T tokens and length-normalised alignment

Pre-training mixture

- 55 % English web + books
- 25 % multilingual (Chinese, German, Japanese, Korean, Spanish, French, Arabic)
- 20 % code (Python, JavaScript, C++, Go, Rust, …)
- Total: ≈ 12 trillion tokens, BF16/FP8 mixed precision
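For scale, the mixture percentages translate into the absolute token counts below. This is simple arithmetic on the figures above; the dictionary keys are our own labels.

```python
TOTAL_TOKENS = 12e12  # ≈ 12 trillion pre-training tokens
MIX = {"english_web_books": 0.55, "multilingual": 0.25, "code": 0.20}

tokens = {name: share * TOTAL_TOKENS for name, share in MIX.items()}
for name, count in tokens.items():
    print(f"{name}: {count / 1e12:.1f} T tokens")
```

That works out to about 6.6 T English, 3.0 T multilingual and 2.4 T code tokens.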

Post-training

- Supervised fine-tuning on 1 M conversations (50 % downstream tasks, 50 % open-domain).
- Direct Preference Optimisation with length normalisation. General loss:

  $$\mathcal{L} = \omega \cdot f\big(\log \sigma(\Delta)\big) + \lambda \cdot g(\delta), \qquad \Delta = \frac{r_\theta(x, y_w)}{|y_w|} - \frac{r_\theta(x, y_l)}{|y_l|}$$

  Special cases recovered:
  - ω = 1, λ = 0 → length-normalised DPO
  - ω = 0, λ = 1 → length-normalised APO-zero
- Task-arithmetic merge of checkpoints trained under each objective.
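Below is a minimal sketch of the ω = 1, λ = 0 case (length-normalised DPO), using the Δ defined above. Several pieces are assumptions, not details from the release:

- f(u) = −u, as in standard DPO;
- r_θ is the usual DPO implicit reward, β times the summed log-probability ratio against a reference model;
- β = 0.1.

The APO-zero term g(δ) is left out because the text above does not define δ. The per-token log-probabilities are made-up numbers.

```python
import math


def seq_reward(policy_logps, ref_logps, beta=0.1):
    """Assumed r_theta(x, y): beta * sum_t (log pi_theta - log pi_ref)."""
    return beta * sum(p - r for p, r in zip(policy_logps, ref_logps))


def normalised_margin(chosen, rejected, beta=0.1):
    """Delta = r(x, y_w)/|y_w| - r(x, y_l)/|y_l|; each argument is (policy_logps, ref_logps)."""
    r_w = seq_reward(*chosen, beta=beta) / len(chosen[0])
    r_l = seq_reward(*rejected, beta=beta) / len(rejected[0])
    return r_w - r_l


def log_sigmoid(z):
    """Numerically stable log(sigma(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def ln_dpo_loss(chosen, rejected, beta=0.1):
    """omega = 1, lambda = 0 case, assuming f(u) = -u."""
    return -log_sigmoid(normalised_margin(chosen, rejected, beta))


# A 4-token preferred answer and a 2-token rejected one (hypothetical log-probs)
chosen = ([-0.5] * 4, [-1.0] * 4)
rejected = ([-2.0] * 2, [-1.0] * 2)
print(normalised_margin(chosen, rejected), ln_dpo_loss(chosen, rejected))
```

Dividing by |y| keeps a long answer from winning simply because it accumulates more per-token reward.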

Result: higher MMLU-Pro than the previous dense LFM2-2.6 B (+11.46 pts) and noticeably better HumanEval/LiveCodeBench scores – matching the claim that extra total capacity (8 B vs 2.6 B) soaks up more factual and coding knowledge even though the runtime path stays skinny.

7. Deployment – three proven paths

Commands are copied verbatim from the official repos; only the prompt was translated.

7.1 Hugging Face Transformers (laptop / desktop / server CPU & GPU)

```bash
# 1. Install the dev snapshot that recognises the "LFM2MoE" architecture
pip install git+https://github.com/huggingface/transformers.git@0c9a72e

# 2. Minimal generation script
python - <<'PY'
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

model_id = "LiquidAI/LFM2-8B-A1B"
tok = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(
    model_id,
    torch_dtype=torch.bfloat16,
    device_map="auto",  # GPU if present, else CPU (needs the accelerate package)
)

messages = [{"role": "user", "content": "Explain quantum entanglement in three sentences."}]
inputs = tok.apply_chat_template(
    messages, add_generation_prompt=True, return_tensors="pt"
).to(model.device)

# do_sample=True so that temperature actually takes effect
outputs = model.generate(
    inputs, do_sample=True, temperature=0.3, repetition_penalty=1.05, max_new_tokens=80
)
print(tok.decode(outputs[0], skip_special_tokens=False))
PY
```

Weight size:

-

- bf16 full precision → 16.7 GB
- Q4_0 GGUF → 4.7 GB (see section 7.3)
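Both file sizes can be approximated from the 8.3 B parameter count. The sketch assumes GGML's Q4_0 block layout: 32 weights stored as 16 bytes of 4-bit values plus a 2-byte fp16 scale, i.e. 4.5 bits per weight. It ignores metadata and any tensors kept at higher precision, so it lands slightly off the published numbers. With the rounded 8.3 B count it gives about 16.6 GB for bf16 and about 4.67 GB for Q4_0.

```python
TOTAL_PARAMS = 8.3e9  # rounded total parameter count from section 2


def bf16_bytes(params: float) -> float:
    return params * 2  # 16 bits = 2 bytes per weight


def q4_0_bytes(params: float) -> float:
    # Assumed GGML Q4_0 layout: each block of 32 weights = 16 bytes of nibbles + 2-byte fp16 scale
    blocks = params / 32
    return blocks * 18


print(f"bf16: {bf16_bytes(TOTAL_PARAMS) / 1e9:.1f} GB")
print(f"Q4_0: {q4_0_bytes(TOTAL_PARAMS) / 1e9:.2f} GB")
```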

7.2 vLLM (single- or multi-GPU, production serving)

```bash
git clone https://github.com/vllm-project/vllm.git && cd vllm
pip install -e . -v  # builds FlashInfer CUDA kernels

python - <<'PY'
from vllm import LLM, SamplingParams

llm = LLM(model="LiquidAI/LFM2-8B-A1B", dtype="bfloat16")
sp = SamplingParams(temperature=0.3, min_p=0.15, max_tokens=60)
prompt_set = [[{"role": "user", "content": "Write hello-world in JSON"}]]
out = llm.chat(prompt_set, sp)
print(out[0].outputs[0].text)
PY
```

Tip: vLLM already ships a LoRA adapter interface, so you can plug in your fine-tune within minutes.

7.3 llama.cpp (edge favourite – works on phones, Raspberry Pi, M2 Mac)

```bash
# 1. Build a recent llama.cpp with lfm2moe support (b6709 or newer); current versions build with CMake
git clone https://github.com/ggerganov/llama.cpp && cd llama.cpp
cmake -B build
cmake --build build --config Release -j

# 2. Download quantised weights
wget https://huggingface.co/LiquidAI/LFM2-8B-A1B-GGUF/resolve/main/lfm2-8b-a1b.Q4_0.gguf

# 3. Run locally (-ngl 0 = CPU only)
./build/bin/llama-cli -m lfm2-8b-a1b.Q4_0.gguf \
  -p "List three benefits of on-device AI." \
  -n 40 --temp 0.3 -ngl 0
```

On the M2 Pro MacBook with Metal enabled the same binary reaches ≈ 38 tok/s in Q4_0; on Galaxy S24 Ultra you will see ≈ 14 tok/s – both numbers come from Liquid’s own screen recordings bundled in the release repo.

8. Fine-tuning – make the model learn your jargon

Liquid AI explicitly encourages “narrow fine-tuning” because the small active set adapts quickly. Two ready-made notebooks exist:

| Notebook | Task | Link |
|---|---|---|
| SFT (TRL) | LoRA supervised fine-tune | Google Colab |
| DPO (TRL) | Direct preference alignment | Google Colab |

Typical hyper-parameters (from the notebook code cells):

- LoRA rank = 64, alpha = 128, dropout = 0.05
- learning_rate = 2e-4, batch_size = 1, gradient_accumulation = 16
- 1 × A100 80 GB: a 3-hour run covers a 5 k-sample domain dataset, with a perplexity increase of < 0.5 % over the original model.
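The hyper-parameters above imply a few derived quantities worth knowing before launching a run. In PEFT and TRL terms they would correspond roughly to `LoraConfig(r=64, lora_alpha=128, lora_dropout=0.05)` and `SFTConfig(learning_rate=2e-4, per_device_train_batch_size=1, gradient_accumulation_steps=16)`. The stdlib-only sketch below just does the arithmetic. The 2048-wide projection is a hypothetical layer size chosen for illustration, not the model's actual dimension.

```python
import math

LORA_RANK, LORA_ALPHA = 64, 128
BATCH_SIZE, GRAD_ACCUM = 1, 16
NUM_SAMPLES = 5_000  # the 5 k-sample domain dataset mentioned above
HIDDEN = 2048        # hypothetical projection width, for illustration only


def lora_scale(alpha: int, rank: int) -> float:
    """LoRA adds (alpha / r) * B @ A to the frozen weight."""
    return alpha / rank


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable weights in one adapter: A is rank x d_in, B is d_out x rank."""
    return rank * (d_in + d_out)


effective_batch = BATCH_SIZE * GRAD_ACCUM
steps_per_epoch = math.ceil(NUM_SAMPLES / effective_batch)
adapter = lora_params(HIDDEN, HIDDEN, LORA_RANK)

print(f"scale alpha/r = {lora_scale(LORA_ALPHA, LORA_RANK)}")
print(f"effective batch = {effective_batch}, optimizer steps per epoch = {steps_per_epoch}")
print(f"adapter weights = {adapter:,} ({adapter / HIDDEN**2:.2%} of a {HIDDEN}x{HIDDEN} matrix)")
```

With these settings each optimizer step sees 16 samples, and one pass over 5 k samples takes 313 steps. The LoRA update is scaled by alpha / r = 2. A rank-64 adapter on a 2048 × 2048 projection trains 262,144 weights, about 6.25 % of the full matrix.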

9. FAQ – the questions early testers actually asked

Q1 Will the 4.7 GB Q4_0 file run on my 8 GB phone?

A1 Yes. Memory footprint during inference is ~5.2 GB (weights + 2 k context cache), leaving 2+ GB for the OS and your app.

Q2 Do I need root / jail-break?

A2 No. llama.cpp runs in user space; ExecuTorch integration (mentioned in the release) will bind to Android NNAPI without special permissions.

Q3 Is the licence business-friendly?

A3 LFM Open License v1.0 allows commercial use; you only have to document any weight modification. Obligation summary is inside the GGUF repository.

Q4 How does it compare to GPT-4 or Llama-3-70 B?

A4 Those models are > 40 B active parameters and need data-centre GPUs. LFM2-8B-A1B targets private, latency-critical use cases where cloud calls are impossible or undesirable – think medical note-taking, field technicians, or in-flight translation.

Q5 Can I merge my LoRA back into the 8 B weights and ship one file?

A5 Yes. The TRL notebook shows the merge_and_unload() call. Afterwards you can convert the merged checkpoint with llama.cpp’s convert_hf_to_gguf.py and re-quantise it with llama-quantize to obtain a single GGUF.
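Merging works because a LoRA adapter is a low-rank update that can be folded into the frozen weight: W' = W + (alpha / r) · B A. The toy pure-Python sketch below uses made-up 4 × 6 matrices, rank 2 and alpha 4. It checks that the merged weight gives the same output as base-plus-adapter. It illustrates the idea behind `merge_and_unload()` rather than reproducing PEFT's code.

```python
import random

rng = random.Random(0)
D_IN, D_OUT, RANK, ALPHA = 6, 4, 2, 4  # toy sizes (hypothetical)


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def rand(rows, cols):
    return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]


W = rand(D_OUT, D_IN)  # frozen base weight
A = rand(RANK, D_IN)   # LoRA down-projection
B = rand(D_OUT, RANK)  # LoRA up-projection
scale = ALPHA / RANK


def forward_with_adapter(x):
    """x is a D_IN x 1 column vector: base path plus scaled low-rank path."""
    base = matmul(W, x)
    delta = matmul(B, matmul(A, x))
    return [[b[0] + scale * d[0]] for b, d in zip(base, delta)]


def merge(W, A, B, scale):
    """W' = W + scale * (B @ A), folded into a single dense weight."""
    BA = matmul(B, A)
    return [[w + scale * ba for w, ba in zip(w_row, ba_row)] for w_row, ba_row in zip(W, BA)]


W_merged = merge(W, A, B, scale)
x = rand(D_IN, 1)
y_adapter = forward_with_adapter(x)
y_merged = matmul(W_merged, x)
print(max(abs(a[0] - b[0]) for a, b in zip(y_adapter, y_merged)))
```

After the merge only W_merged needs to be shipped. That single tensor is what ends up in the re-quantised GGUF.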

10. Take-away – sparse is no longer a lab toy

For years MoE papers ended with “…and when we scale to 1 T parameters”. Liquid AI flipped the script: small active path, still-big total capacity, mobile-CPU viable. The release artefacts – Apache-style licence, GGUF quants, vLLM back-end, TRL notebooks – mean you can treat LFM2-8B-A1B like any mainstream dense model, but gain the speed and privacy edge of sparsity.

If your roadmap includes:

- An offline copilot inside a CAD tool,
- A multilingual voice note recorder for journalists,
- Or a RAG plug-in that must never leak source documents,

then cloning the repo, downloading 4.7 GB and typing ./llama-cli is now the fastest path to a 3 B-class brain that fits in a backpack and runs without a subscription.

Sparse experts have left the data-centre. Time to let them loose on the edge.