OpenAI gpt-oss Models: Technical Breakdown & Real-World Applications

Introduction

On August 5, 2025, OpenAI released two open-weight large language models (LLMs) under the Apache 2.0 license: gpt-oss-120b and gpt-oss-20b. Both aim to pair strong reasoning performance with the flexibility of self-hosting and customization. This article breaks down their architecture, training methodology, and real-world use cases in plain language.

1. Model Architecture: How They’re Built

1.1 Core Design

Both models use a Mixture-of-Experts (MoE) architecture, a type of neural network that activates only parts of the model for each input. This makes them more efficient than traditional dense models.

| Component | gpt-oss-120b | gpt-oss-20b |
|---|---|---|
| Total Parameters | 116.8B | 20.9B |
| Active Parameters per Token | 5.1B | 3.6B |
| Quantization | MXFP4 (4.25 bits/parameter) | MXFP4 |

Table 1: Key specifications of the two models [citation:1]
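
The figures in Table 1 imply two back-of-envelope quantities: the share of weights active per token and a rough weight-storage size. The sketch below computes both under the simplifying assumption that every parameter is stored at 4.25 bits; real checkpoints may keep some tensors at higher precision, so the sizes are only approximate. The dictionary and function names are illustrative.

```python
# Back-of-envelope arithmetic from Table 1.
# Assumption: all parameters are stored at 4.25 bits (MXFP4). Real checkpoints
# may keep some tensors at higher precision, so sizes are rough estimates.

MODELS = {
    "gpt-oss-120b": {"total_b": 116.8, "active_b": 5.1},
    "gpt-oss-20b": {"total_b": 20.9, "active_b": 3.6},
}
BITS_PER_PARAM = 4.25


def active_fraction(total_b: float, active_b: float) -> float:
    """Share of parameters used for a single token."""
    return active_b / total_b


def weight_size_gb(total_b: float, bits_per_param: float = BITS_PER_PARAM) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    total_bytes = total_b * 1e9 * bits_per_param / 8
    return total_bytes / 1e9


if __name__ == "__main__":
    for name, spec in MODELS.items():
        frac = active_fraction(spec["total_b"], spec["active_b"])
        size = weight_size_gb(spec["total_b"])
        print(f"{name}: {frac:.1%} active per token, ~{size:.1f} GB of weights")
```

Under these assumptions the 120b model activates roughly 4% of its weights per token and the 20b model roughly 17%, which is where the efficiency gain over a dense model of the same size comes from.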

1.2 Technical Features

- Sparse Activation: Only 4 experts process each token, chosen from 128 experts in the 120b model and 32 in the 20b model.
- Extended Context: Supports up to 131,072 tokens via YaRN (a context window extension technique).
- Optimized Attention: Uses grouped query attention (GQA) to reduce memory usage.
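
To make sparse activation concrete, here is a minimal, pure-Python sketch of top-4 routing. It is not the models' actual implementation: the experts are toy scalar functions, the router logits are supplied directly (in a real model a learned layer would produce them from the token's hidden state), and normalizing the selected logits with a softmax is an assumed, commonly used choice.

```python
import math
import random
from typing import Callable, Dict, List

Expert = Callable[[float], float]


def top_k_route(router_logits: List[float], k: int = 4) -> Dict[int, float]:
    """Select the k highest-scoring experts and softmax-normalize their weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    chosen = ranked[:k]
    peak = max(router_logits[i] for i in chosen)  # subtracted for numerical stability
    exps = {i: math.exp(router_logits[i] - peak) for i in chosen}
    total = sum(exps.values())
    return {i: value / total for i, value in exps.items()}


def moe_forward(x: float, experts: List[Expert], router_logits: List[float], k: int = 4) -> float:
    """Run only the selected experts and mix their outputs by router weight."""
    weights = top_k_route(router_logits, k)
    return sum(weight * experts[i](x) for i, weight in weights.items())


if __name__ == "__main__":
    random.seed(0)
    num_experts = 32  # 32 matches the 20b configuration; use 128 for 120b
    # Toy experts: each one just scales its input by a different constant.
    experts = [lambda x, scale=i + 1: x * scale for i in range(num_experts)]
    logits = [random.gauss(0.0, 1.0) for _ in range(num_experts)]
    weights = top_k_route(logits, k=4)
    print("experts used:", sorted(weights), "of", num_experts)
    print("output:", moe_forward(1.0, experts, logits, k=4))
```

Only the four selected experts are ever called, so compute per token scales with `k` rather than with the total number of experts.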

2. Training Process: From Data to Deployment

2.1 Pre-Training

- Data Source: Trillions of tokens of text, focusing on STEM, coding, and general knowledge.
- Safety Filter: Content related to hazardous biological/chemical knowledge was filtered using GPT-4o’s CBRN filters.
- Tokenizer: Uses the o200k_harmony tokenizer (201k tokens) for multilingual support.

2.2 Post-Training

After pre-training, the models were fine-tuned using Reinforcement Learning from AI Feedback (RLAIF) with three key objectives:

- Reasoning: Three levels of reasoning effort (low/medium/high), selected via system prompts.
- Tool Use:
  - Web browsing
  - Python code execution
  - Custom function calls (e.g., for e-commerce APIs)
- Harmony Chat Format: A structured prompt format with roles like System, Developer, and User.
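
The role structure and reasoning levels described above can be sketched as a plain list of role/content messages. This is a generic illustration, not the official harmony token rendering: the `build_messages` helper is hypothetical, and the exact `Reasoning: <level>` wording in the system message is an assumption about how the level is expressed.

```python
from typing import Dict, List

VALID_EFFORTS = ("low", "medium", "high")


def build_messages(user_text: str, developer_instructions: str,
                   reasoning: str = "medium") -> List[Dict[str, str]]:
    """Assemble system, developer, and user messages in that order."""
    if reasoning not in VALID_EFFORTS:
        raise ValueError(f"reasoning must be one of {VALID_EFFORTS}, got {reasoning!r}")
    return [
        # Assumed wording: the reasoning level is stated in the system message.
        {"role": "system", "content": f"Reasoning: {reasoning}"},
        {"role": "developer", "content": developer_instructions},
        {"role": "user", "content": user_text},
    ]


if __name__ == "__main__":
    for message in build_messages(
        user_text="Summarize the return policy in two sentences.",
        developer_instructions="Answer only from the provided policy text.",
        reasoning="low",
    ):
        print(f"{message['role']}: {message['content']}")
```

Validating the reasoning level up front keeps a typo such as "hgih" from silently falling back to whatever default the serving stack uses.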

3. Performance: Benchmarks & Real-World Use

3.1 Core Capabilities

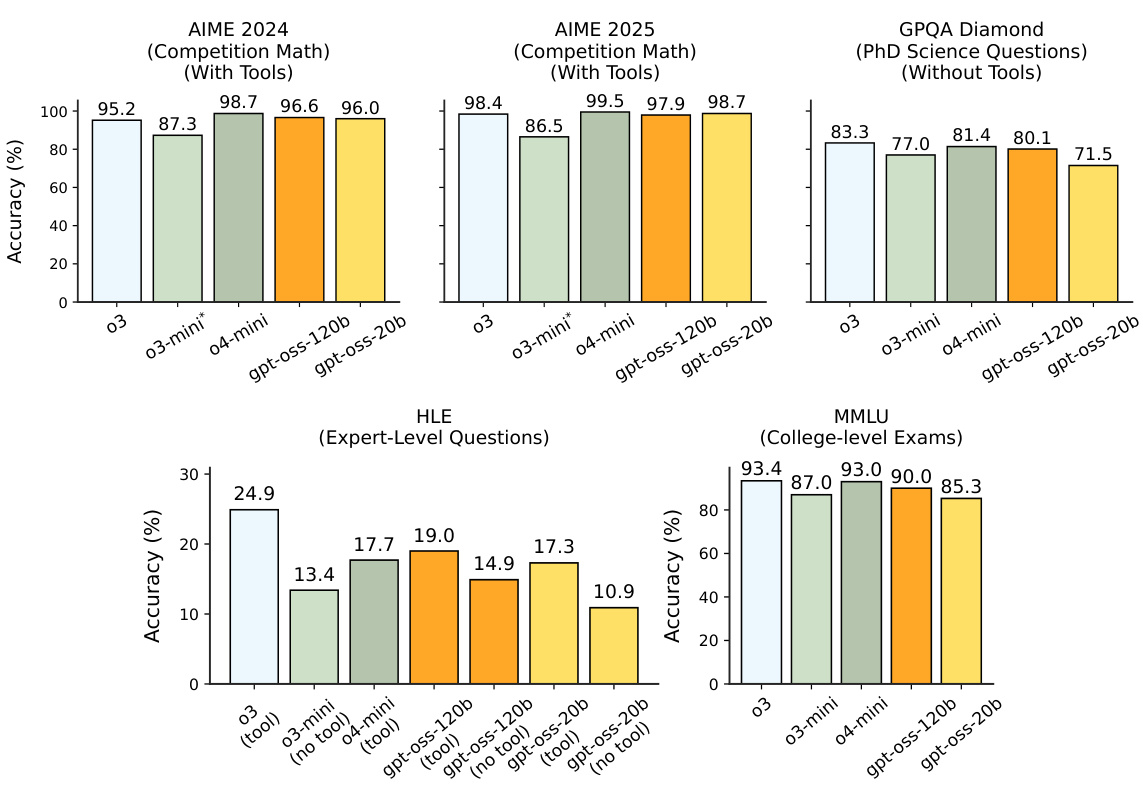

The models were tested on math, science, coding, and multilingual tasks:

| Benchmark | gpt-oss-120b (High Reasoning) | gpt-oss-20b (High) |

|---|---|---|

| AIME 2025 (Math) | 97.9% | 98.7% |

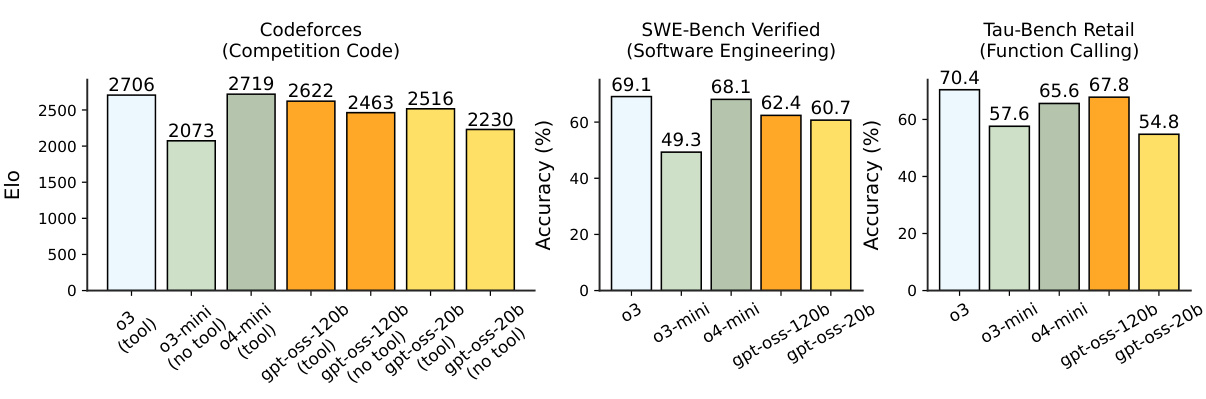

| Codeforces Elo | 2622 | 2516 |

| MMMLU (14 languages) | 81.3% avg. | 75.7% avg. |

Table 3: Performance across key benchmarks [citation:1]

3.1.1 Strengths

- Math & Logic: Excels at complex reasoning (e.g., AIME problems).
- Coding: Matches OpenAI o4-mini in Codeforces and SWE-Bench Verified scores.
- Multilingual: Supports 14 languages, with Spanish/Portuguese achieving 85%+ accuracy.

3.1.2 Limitations

- Factuality: Higher hallucination rates on SimpleQA (78.2% for 120b) compared to OpenAI o4-mini.
- Niche Knowledge: Struggles with highly specialized domains (e.g., HLE benchmark).

3.2 Health & Safety

- HealthBench: The 120b model matches OpenAI o3 performance in realistic medical conversations.
- Safety:
  - 99%+ accuracy in refusing harmful content (e.g., self-harm, violence).
  - Robust to jailbreaks, but slightly weaker than OpenAI o4-mini in instruction hierarchy tests.

4. Practical Applications

4.1 Technical SEO & Content Optimization

The models’ ability to analyze code and generate structured data makes them useful for:

- Schema Markup: Automatically generating JSON-LD for product pages.
- Content Gap Analysis: Identifying missing keywords in blog posts.
- Multilingual SEO: Translating metadata while maintaining keyword relevance.
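
Model-generated JSON-LD is worth checking before it is embedded in a page. The sketch below parses a response and verifies a handful of keys. The `parse_product_jsonld` helper, the sample output, and the choice of required keys are illustrative assumptions, not a full schema.org validation.

```python
import json
from typing import Any, Dict

# Minimal set of keys this sketch insists on; extend to suit your pages.
REQUIRED_KEYS = {"@context", "@type", "name"}

# Hand-written stand-in for a model response, for demonstration only.
SAMPLE_OUTPUT = (
    '{"@context": "https://schema.org", "@type": "Product", '
    '"name": "Trail Running Shoe", '
    '"description": "Lightweight shoe for rocky trails."}'
)


def parse_product_jsonld(raw: str) -> Dict[str, Any]:
    """Parse model output and confirm it looks like Product JSON-LD."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) if malformed
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    if data["@type"] != "Product":
        raise ValueError(f"unexpected @type: {data['@type']!r}")
    return data


if __name__ == "__main__":
    product = parse_product_jsonld(SAMPLE_OUTPUT)
    print(json.dumps(product, indent=2))
```

Rejecting malformed output at this point lets the caller retry the request instead of publishing broken markup.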

Example use case:

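# Sample code to generate meta descriptions using gpt-oss-20b.
# call_model is a placeholder for your own inference client; it is not defined here.
def generate_meta_description(keyword, content):
    """Build an SEO prompt and request a short meta description."""
    prompt = (
        f"As a technical SEO expert, write a concise meta description for a page about {keyword}.\n"
        f"Content snippet: {content}\n"
        "Requirements: Under 160 characters, include primary keyword."
    )
    # Low reasoning effort is enough for a short, formulaic output.
    return call_model(prompt, reasoning="low")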
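
The prompt above states two hard requirements (under 160 characters, primary keyword included), and a model may not always honor them. A small post-check, sketched here with a hypothetical `check_meta_description` helper, can catch violations before publishing.

```python
from typing import List


def check_meta_description(description: str, keyword: str, max_len: int = 160) -> List[str]:
    """Return a list of problems; an empty list means the description passes."""
    problems = []
    if len(description) >= max_len:
        problems.append(f"too long: {len(description)} characters (limit is under {max_len})")
    if keyword.lower() not in description.lower():
        problems.append(f"missing keyword: {keyword!r}")
    return problems


if __name__ == "__main__":
    candidate = "Compare trail running shoes by grip, weight, and durability."
    print(check_meta_description(candidate, "trail running shoes") or "OK")
```

If the list is non-empty, the caller can regenerate the description or route it to a human editor.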

4.2 YouTube & Video SEO

For video creators, the models can:

- Keyword Research: Analyze search volume for tags (e.g., using Keywords Everywhere data).
- Transcript Optimization: Generate timestamps and keyword-rich descriptions.
- Thumbnail Text: Suggest text overlays for CTR optimization.
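
For transcript optimization, the model's job is to decide where sections start and what to call them. Turning that into description-ready timestamp lines is plain formatting, sketched below. The `(start_seconds, title)` input shape and both helper names are assumptions made for illustration.

```python
from typing import List, Tuple


def format_timestamp(seconds: int) -> str:
    """Render seconds as M:SS, or H:MM:SS once the video passes an hour."""
    hours, remainder = divmod(int(seconds), 3600)
    minutes, secs = divmod(remainder, 60)
    if hours:
        return f"{hours}:{minutes:02d}:{secs:02d}"
    return f"{minutes}:{secs:02d}"


def chapter_lines(segments: List[Tuple[int, str]]) -> List[str]:
    """Sort segments by start time and format one line per chapter."""
    return [f"{format_timestamp(start)} {title}" for start, title in sorted(segments)]


if __name__ == "__main__":
    # Hypothetical segments, e.g. extracted from a model's transcript analysis.
    segments = [(75, "Installing the model"), (0, "Intro"), (3661, "Benchmark results")]
    print("\n".join(chapter_lines(segments)))
```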

4.3 E-Commerce

- Product Descriptions: Generate unique, keyword-rich copy at scale.
- FAQ Optimization: Answer common customer queries using structured data.

5. Safety & Limitations

5.1 Risks

- Hallucinations: May generate plausible but incorrect technical advice.
- Bias: Requires fine-tuning for fairness in hiring/HR applications.

5.2 Mitigation

- Guardrails: Use system prompts to restrict domains (e.g., medical advice).
- Human-in-the-Loop: Validate outputs for critical tasks.
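
Both mitigations can be wired into an application with very little code. The following is a deliberately simple sketch: the prompt wording, the keyword list, and the helper names are all illustrative assumptions, and keyword matching is a crude stand-in for a proper review policy.

```python
from typing import Iterable

# Illustrative terms that should trigger manual review; tune per application.
CRITICAL_TERMS = ("dosage", "diagnosis", "prescription")


def guardrail_system_prompt(allowed_domain: str) -> str:
    """Build a system prompt that confines the assistant to one domain."""
    return (
        f"You only answer questions about {allowed_domain}. "
        "If a request falls outside that domain, decline briefly."
    )


def needs_human_review(output: str, critical_terms: Iterable[str] = CRITICAL_TERMS) -> bool:
    """Flag outputs that mention any critical term (case-insensitive)."""
    text = output.lower()
    return any(term in text for term in critical_terms)


if __name__ == "__main__":
    print(guardrail_system_prompt("technical SEO"))
    draft = "The recommended dosage depends on body weight."
    print("send to reviewer" if needs_human_review(draft) else "auto-approve")
```

A system prompt alone does not guarantee compliance, which is why the review check runs on the output rather than trusting the instruction.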

6. How to Get Started

6.1 Access

- Download weights via OpenAI’s official channels (Apache 2.0 license).
- Deploy on AWS/GCP with 80GB+ VRAM (120b) or 16GB+ (20b).
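
The VRAM guidance above can be turned into a small selection helper. This sketch uses only the two thresholds stated in this section; the `pick_model` function is hypothetical, and it ignores extra memory needed for long contexts or batching.

```python
from typing import Optional

# Minimum VRAM per model, in GB, taken from the deployment guidance above.
MIN_VRAM_GB = {"gpt-oss-120b": 80, "gpt-oss-20b": 16}


def pick_model(available_vram_gb: float) -> Optional[str]:
    """Return the largest model that fits, or None if neither does."""
    fitting = [name for name, need in MIN_VRAM_GB.items() if available_vram_gb >= need]
    if not fitting:
        return None
    return max(fitting, key=lambda name: MIN_VRAM_GB[name])


if __name__ == "__main__":
    for vram in (8, 24, 80):
        print(f"{vram} GB -> {pick_model(vram)}")
```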

6.2 Optimization Tips

- Quantization: Keep the weights in their 4-bit MXFP4 format for faster inference and a smaller memory footprint.
- Reasoning Level: Start with low for simple tasks and switch to high for complex analysis.

Conclusion

OpenAI’s gpt-oss models offer a powerful, customizable foundation for technical SEO, content creation, and multilingual applications. They require careful deployment to mitigate risks such as hallucination and bias, but their open weights and Apache 2.0 license make them a valuable tool for developers building AI-driven solutions.