Gemini 3 Pro: The Frontier of Vision AI – From Recognition to True Reasoning

Core Question: What fundamental leaps does Google’s latest Gemini 3 Pro model deliver, and how does it move beyond traditional image recognition to solve real-world problems through genuine visual and spatial reasoning?

In late 2025, Google DeepMind introduced its most capable multimodal model to date: Gemini 3 Pro. This is far more than a routine version update. It marks a paradigm shift in how artificial intelligence processes visual information, evolving from passive “recognition” to active “understanding” and “reasoning.” Whether the input is a chaotic historical document, a dense and fast-moving video stream, or the interactive interface on a phone screen, Gemini 3 Pro parses and reasons about it with a depth earlier models did not reach. This article deconstructs the four core competencies of this “all-purpose visual brain” and shows, through concrete application scenarios, how it is poised to reshape education, medicine, law, and even how we interact with machines.

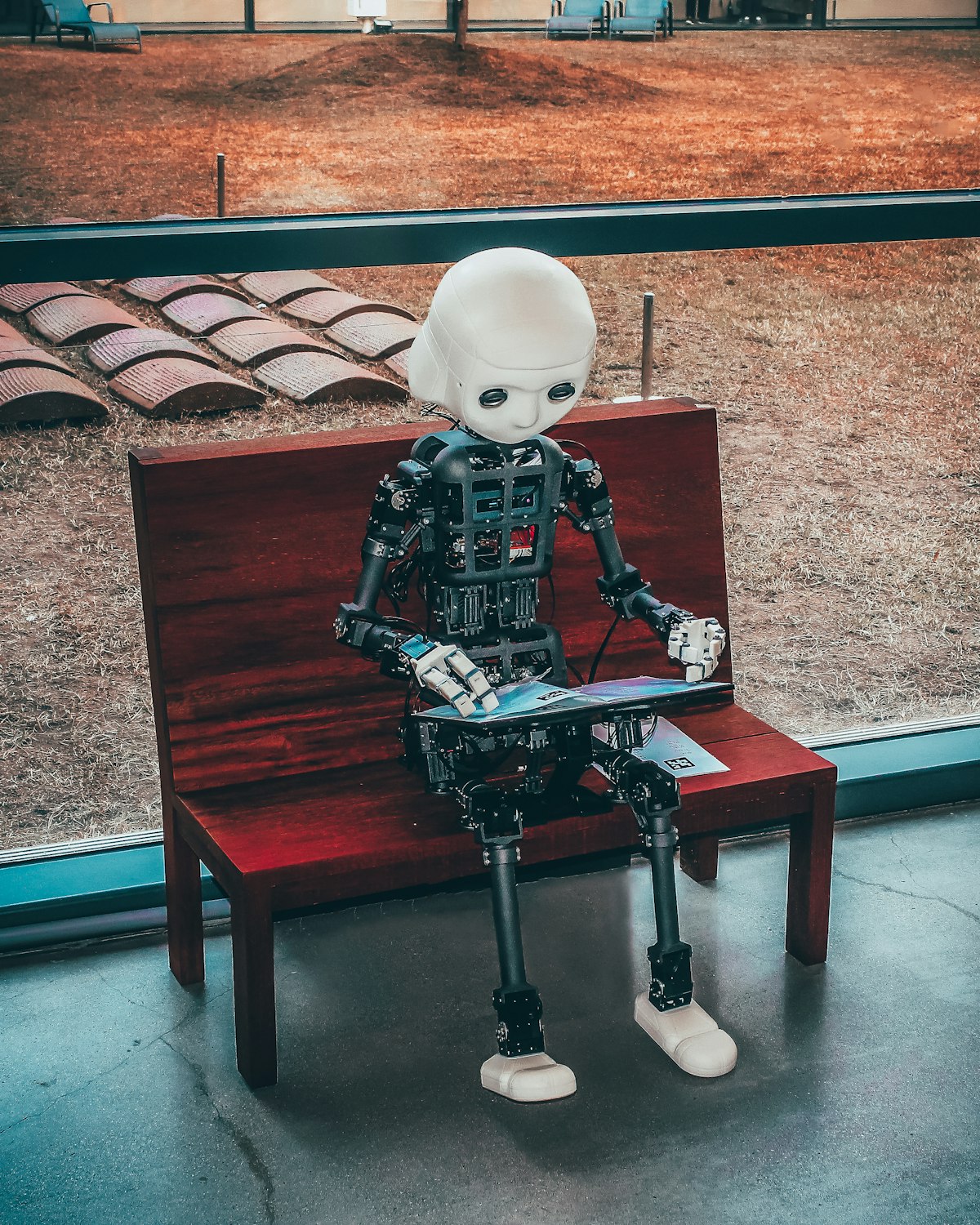

Image Source: Unsplash

1. Document Understanding: From Scanner to Intelligent Archivist

Core Question: How does Gemini 3 Pro achieve deep understanding and analysis of messy, real-world documents that goes far beyond traditional Optical Character Recognition (OCR)?

Real-world documents are messy: filled with illegible handwritten text, nested tables, complex mathematical notation, interleaved images and charts, all arranged in non-linear layouts. Traditional OCR often stops at “character recognition.” Gemini 3 Pro aims for “content comprehension.” It represents a generational leap in document processing, built on two core layers: Intelligent Perception and Sophisticated Reasoning.

1.1 Intelligent Perception: Derendering the Document’s “Source Code”

To truly understand a document, a model must accurately detect and recognize text, tables, formulas, figures, and charts, regardless of noise or irregular formatting.

A foundational and powerful capability is “derendering” – the ability to reverse-engineer a visual document back into the structured code (e.g., HTML, LaTeX, Markdown) that would recreate it. For instance, the model can transform a complex, handwritten table from an 18th-century merchant’s ledger into a structured digital table. It can also convert an image dense with mathematical annotations into precise LaTeX code. This effectively generates a “digital DNA” for any document.

Example: Input an image of an archaic merchant’s handbook, and Gemini 3 Pro outputs an accurate transcription and structured tabular data.
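To make derendering concrete, here is a minimal sketch of the downstream step, assuming the model has been prompted to return a ledger page as a single Markdown table. The function name `parse_markdown_table` and the sample rows are hypothetical and made up for illustration; they are not actual Gemini 3 Pro output.

```python
def parse_markdown_table(text: str) -> list[dict[str, str]]:
    """Turn a pipe-delimited Markdown table (e.g. a derendered ledger page) into row dicts."""
    # Keep only lines that look like table rows.
    lines = [ln.strip() for ln in text.strip().splitlines() if ln.strip().startswith("|")]
    if len(lines) < 2:
        return []

    def cells(line: str) -> list[str]:
        # Drop the outer pipes, then split on the inner ones.
        return [c.strip() for c in line.strip("|").split("|")]

    header = cells(lines[0])
    rows = []
    for line in lines[1:]:
        values = cells(line)
        # Skip the |---|:---:| separator row that follows the header.
        if all(v and set(v) <= set("-: ") for v in values):
            continue
        rows.append(dict(zip(header, values)))
    return rows


# Made-up sample standing in for a model's derendered output.
sample = """
| Item | Quantity | Price |
|------|----------|-------|
| Pepper | 12 lb | 3s 4d |
| Indigo | 5 lb | 1s 2d |
"""
rows = parse_markdown_table(sample)
```

A production pipeline would also need to handle merged cells, multi-line entries, and cells the model could not read; this sketch covers only the simple case.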

1.2 Sophisticated Reasoning: Interpreting Reports Like an Analyst

Users can rely on Gemini 3 Pro to perform complex, multi-step reasoning across charts and tables, even in reports spanning dozens of pages. Notably, it outperforms the human baseline on the expert-level CharXiv reasoning benchmark with a score of 80.5%.

Consider this scenario: An analyst needs to review the U.S. Census Bureau’s 62-page “Income in the United States: 2022” report. They could ask the model: “Compare the 2021–2022 percent change in the Gini index for ‘Money Income’ versus ‘Post-Tax Income.’ What caused the divergence in the post-tax measure? Regarding ‘Money Income,’ does the data show the lowest quintile’s share rising or falling?”

The model’s workflow showcases its reasoning power:

- Visual Extraction: It first locates the key data points among the report’s many visuals (e.g., the Gini index for “Money Income” decreased by 1.2% in Figure 3, while the Gini index for “Post-Tax Income” increased by 3.2% in Table B-3).
- Cross-referencing & Computation: It compares these trends and identifies the factors driving the divergence in the post-tax measure.
- Trend Analysis: It finds the income-share distribution data elsewhere in the report to determine the trend for the lowest quintile.
- Synthesized Answer: It delivers a structured response grounded in the extracted figures.
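The cross-referencing step ultimately compares two relative changes. Writing G for a Gini index reported for 2021 and 2022 (notation introduced here, not taken from the report), the percent change is:

```latex
\Delta G\,(\%) = \frac{G_{2022} - G_{2021}}{G_{2021}} \times 100
```

A negative value for money income (−1.2%) and a positive value for post-tax income (+3.2%) means the two measures moved in opposite directions, which is exactly the divergence the analyst’s question asks the model to explain.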

Reflection / Unique Insight: The ultimate value of advanced document understanding lies in liberating humans from the “manual labor” of sifting through vast amounts of unstructured information. The advent of Gemini 3 Pro suggests that the bottleneck in information processing is shifting from “data acquisition” to “asking the right questions.” In the future, a professional’s core competency may increasingly hinge on their ability to craft precise prompts that guide AI to uncover the most insightful conclusions.

2. Spatial Understanding: Giving AI a “Hand-Eye Coordination” System

Core Question: How does Gemini 3 Pro comprehend spatial relationships in the physical world and translate this understanding into actionable instructions for robots or AR devices?

Gemini 3 Pro is Google’s most advanced spatial understanding model to date. When combined with its robust reasoning engine, it equips AI with the foundational ability to make sense of and interact with the physical world.

2.1 Pixel-Precise Pointing Capability

The model can output pixel-precise coordinates to point at specific locations within an image. Sequences of these 2D points can be strung together to perform more complex tasks, such as estimating human poses or tracing object trajectories over time.
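Google’s spatial-understanding examples for Gemini ask the model to return points as `[y, x]` normalized to a 0–1000 grid. The sketch below assumes that convention and a JSON reply shaped like `[{"point": [y, x], "label": "..."}]`; both are assumptions to check against the current documentation for Gemini 3 Pro. It converts each point to pixel coordinates for a given image size, preserving order so a trajectory stays a trajectory.

```python
import json


def points_to_pixels(response_text: str, width: int, height: int) -> list[tuple[str, int, int]]:
    """Convert points given as [y, x] on a 0-1000 grid into (label, x_px, y_px) tuples.

    Assumes the reply is a JSON list like [{"point": [y, x], "label": "..."}].
    List order is preserved, so a sequence of points (a trajectory) stays in order.
    """
    pixels = []
    for item in json.loads(response_text):
        y_norm, x_norm = item["point"]        # assumed order: y first, then x
        x_px = round(x_norm / 1000 * width)   # scale the 0-1000 grid to image width
        y_px = round(y_norm / 1000 * height)  # ...and to image height
        pixels.append((item["label"], x_px, y_px))
    return pixels


# Hypothetical model reply for a 1920x1080 photo of a table.
reply = '[{"point": [500, 250], "label": "bottle"}, {"point": [100, 900], "label": "screwdriver"}]'
located = points_to_pixels(reply, 1920, 1080)
```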

2.2 Open-Vocabulary Referencing and Physical Task Planning

The model can use an open vocabulary to identify objects and comprehend intent. The most direct application is in robotics. A user could instruct a robot: “Given this messy table, come up with a plan to sort the trash.” The model would not only identify items like “bottle,” “cardboard box,” and “scrap paper” but also generate a spatially grounded plan involving steps like grasping, moving, and placing.

This capability extends seamlessly to Augmented Reality/Extended Reality (AR/XR) devices. A user could ask an AI assistant: “Point to the screw mentioned in step 3 of the manual.” The AI, through AR glasses, could then highlight the exact screw in the user’s real-world field of view.

Example: Given a cluttered tabletop holding a box, a bottle, a screwdriver, and other items, Gemini 3 Pro can plan a clear movement path from a measuring tape to the box.
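Before a robot executes a model-generated plan like the trash-sorting one described above, a controller would typically validate it. This is a minimal sketch under stated assumptions: the plan arrives as a list of steps with `action`, `target`, and `point` keys, the action vocabulary is limited to `grasp`, `move`, and `place`, and points use a `[y, x]` 0–1000 grid. All of these names are hypothetical, not a documented Gemini output format.

```python
from dataclasses import dataclass

ALLOWED_ACTIONS = {"grasp", "move", "place"}  # hypothetical action vocabulary


@dataclass
class Step:
    action: str
    target: str
    point: tuple[int, int]  # assumed [y, x] on a 0-1000 grid


def parse_plan(raw: list[dict]) -> list[Step]:
    """Validate a model-proposed plan before handing it to a robot controller."""
    steps: list[Step] = []
    holding = False  # tracks whether the gripper currently holds an object
    for i, item in enumerate(raw):
        action = item["action"]
        if action not in ALLOWED_ACTIONS:
            raise ValueError(f"step {i}: unsupported action {action!r}")
        y, x = item["point"]
        if not (0 <= y <= 1000 and 0 <= x <= 1000):
            raise ValueError(f"step {i}: point {item['point']} is off the 0-1000 grid")
        if action == "grasp":
            if holding:
                raise ValueError(f"step {i}: grasp while already holding an object")
            holding = True
        elif action == "place":
            if not holding:
                raise ValueError(f"step {i}: place without a prior grasp")
            holding = False
        steps.append(Step(action, item["target"], (y, x)))
    return steps
```

Rejecting a malformed plan up front is cheaper and safer than discovering the problem mid-motion; the grasp/place bookkeeping is one simple example of a physical-consistency check.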

Reflection / Lesson Learned: Spatial understanding is a critical link for achieving embodied intelligence and natural interaction. It breaks the screen barrier, allowing AI’s “thinking” to be grounded in the three-dimensional physical world. The challenge is not only technical precision but also comprehension of intent: the AI must work out how an abstract instruction like “sort the trash” translates into a series of concrete spatial actions.

3. Screen Understanding: Turning AI into Your “Digital Assistant”

Core Question: How does Gemini 3 Pro accurately interpret complex user interfaces on computer and mobile screens to enable reliable automation?

Gemini 3 Pro’s spatial prowess extends to understanding desktop and mobile operating system screens. Reliable screen understanding is key to building robust “computer use agents” capable of automating repetitive tasks.

Consider this task: “In a new worksheet (Sheet2), use the Pivot Table feature to summarize the total revenue for each promotion type, using the promotion names as column headers.”

An automated workflow powered by Gemini 3 Pro could:

- Perceive the UI: Accurately identify the Excel window, menu bars, and data regions on the screen.
- Plan Actions: Understand that it needs to select the data range, click “Insert PivotTable,” choose the location for the new sheet, and drag the appropriate fields to the Rows, Columns, and Values areas.
- Execute Interactions: Simulate mouse movements and clicks with high precision to complete all the above steps.

This UI comprehension enables other valuable applications: automated software testing (QA), generating personalized software onboarding guides for new users, and analyzing user interaction data for UX optimization.
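The perceive, plan, and execute workflow for the PivotTable task maps naturally onto a loop. The sketch below is a hypothetical harness, not Google’s computer-use API: `propose` stands in for a model call that receives the current screenshot and the action history, `execute` stands in for whatever GUI automation layer performs clicks and keystrokes, and the action vocabulary (`click`, `type`, `done`) is an assumption.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class UIAction:
    kind: str      # "click", "type", or "done" (hypothetical vocabulary)
    x: int = 0     # screen coordinates in whatever convention the model uses (assumed)
    y: int = 0
    text: str = ""


def run_agent(
    propose: Callable[[bytes, list[UIAction]], UIAction],
    screenshot: Callable[[], bytes],
    execute: Callable[[UIAction], None],
    max_steps: int = 20,
) -> list[UIAction]:
    """Perceive -> plan -> execute loop; the model sits behind `propose`."""
    history: list[UIAction] = []
    for _ in range(max_steps):
        # Perceive the current screen and let the model plan the next step.
        action = propose(screenshot(), history)
        if action.kind == "done":
            break
        # Carry out the step, e.g. through a GUI automation library.
        execute(action)
        history.append(action)
    return history
```

Capping the loop with `max_steps` guards against an agent that never declares the task finished, and passing the history back gives the model context for choosing its next action.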

4. Video Understanding: Decoding the Most Complex Dynamic Data Stream

Core Question: How does Gemini 3 Pro move beyond simple object detection in dense, fast-paced video to achieve deep temporal reasoning and knowledge extraction?

Video is the most complex data format we interact with daily: dense, dynamic, multimodal, and rich with context. Gemini 3 Pro delivers three major breakthroughs here.

4.1 High Frame-Rate Understanding: Capturing Fleeting Details

The model is optimized for understanding fast-paced actions when processing video at high frame rates (more than 1 frame per second). This is vital for tasks like analyzing golf swing mechanics. By sampling video at 10 FPS, ten times the default sampling rate of 1 FPS, the model captures every subtle shift in the swing and in weight transfer, unlocking deep insights into an athlete’s form.
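The arithmetic behind “10x the default” is easy to make concrete. In the Gemini API docs, custom sampling is exposed as an `fps` value on the video part’s metadata; the helper below is only local arithmetic under that uniform-sampling assumption, not an SDK call, and `sample_timestamps` is a hypothetical name.

```python
def sample_timestamps(duration_s: float, fps: float) -> list[float]:
    """Timestamps (in seconds) of frames sampled uniformly at `fps` from a clip."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    n_frames = int(duration_s * fps)
    # Round to milliseconds to avoid float noise such as 0.30000000000000004.
    return [round(i / fps, 3) for i in range(n_frames)]


default_frames = sample_timestamps(2.0, 1)    # a 2-second swing at the default rate
detailed_frames = sample_timestamps(2.0, 10)  # the same swing at 10 FPS
```

A 2-second swing yields 2 frames at the default rate and 20 at 10 FPS, which is why fast motion that falls between 1-second samples becomes visible. More frames also means more input tokens, so higher rates are worth reserving for clips where timing matters.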

4.2 Video Reasoning with an Enhanced “Thinking” Mode

The model’s “thinking” mode is upgraded to go beyond object recognition toward true video reasoning. It can better trace complex cause-and-effect relationships over time. This means it moves past identifying what is happening to understanding why it is happening.

4.3 From Long-Form Video to Executable Code

The model bridges the gap between video content and functional code. It can extract knowledge from long-form instructional videos and immediately translate it into a working application skeleton or structured code. For example, after watching a web design tutorial, it could generate the corresponding HTML and CSS framework.

Reflection / Unique Insight: The evolution of video understanding signals AI’s transition from a “content consumer” to a “content deconstructor” and “knowledge distiller.” In the future, hours-long professional training videos, surgical recordings, or industrial process documentation could be rapidly parsed, summarized, and distilled into actionable procedures or key insights, dramatically accelerating learning and knowledge transfer in specialized fields.

5. Powering Industry Transformation: The Gemini 3 Pro Application Landscape

Core Question: In which specific industries will Gemini 3 Pro’s advanced capabilities tangibly transform workflows?

5.1 Education: From Grading Homework to Personalized Tutoring

Gemini 3 Pro shows exceptional performance on diagram-heavy questions central to math and science, tackling the full spectrum of multimodal reasoning problems from middle school through post-secondary curricula.

A powerful practical application is its combination with generative capabilities. A student can take a photo of their worked-out math problem and prompt: “Please check my steps and tell me where I went wrong. Instead of explaining in text, show me visually on my image.” The model can then annotate the student’s original homework photo, visually highlighting errors in one color and providing corrections in another, enabling an immersive, personalized tutoring experience.
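One way an application could render such highlights is to ask the model for error regions as bounding boxes and draw them itself. The Gemini API’s object-detection examples use a `box_2d` key holding `[ymin, xmin, ymax, xmax]` normalized to 0–1000; this sketch assumes that convention plus hypothetical `label` and `kind` fields and a made-up red/green color scheme.

```python
from dataclasses import dataclass


@dataclass
class Mark:
    label: str
    color: str
    box_px: tuple[int, int, int, int]  # (left, top, right, bottom) in pixels


def to_marks(items: list[dict], width: int, height: int) -> list[Mark]:
    """Convert [ymin, xmin, ymax, xmax] boxes on a 0-1000 grid into pixel rectangles."""
    colors = {"error": "red", "correction": "green"}  # hypothetical color scheme
    marks = []
    for item in items:
        ymin, xmin, ymax, xmax = item["box_2d"]
        box = (
            round(xmin / 1000 * width),
            round(ymin / 1000 * height),
            round(xmax / 1000 * width),
            round(ymax / 1000 * height),
        )
        # Unknown kinds fall back to blue so nothing is silently dropped.
        marks.append(Mark(item["label"], colors.get(item["kind"], "blue"), box))
    return marks
```

The `(left, top, right, bottom)` order matches what Pillow’s `ImageDraw.rectangle` accepts, so each mark could be drawn onto the student’s photo with its `color` as the outline.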

5.2 Medical and Biomedical Imaging: The Expert’s Collaborative Partner

In the specialized medical domain, Gemini 3 Pro stands as a highly capable general-purpose model. It achieves state-of-the-art performance on major public benchmarks, including:

- MedXpertQA-MM: A difficult expert-level medical reasoning exam.
- VQA-RAD: Question answering based on radiology imagery.
- MicroVQA: A multimodal reasoning benchmark for microscopy-based biological research.

This enables the model to assist doctors in analyzing complex medical imagery or help researchers extract critical biological features from microscope images.

5.3 Law and Finance: Tackling Highly Complex Professional Documents

For legal and financial professionals, Gemini 3 Pro’s enhanced document understanding tackles extremely complex workflows. Financial platforms can analyze dense reports filled with charts and tables. Legal platforms benefit from its sophisticated document reasoning, particularly in understanding and editing contracts with complex redlines and revisions. This lets them handle high volumes of variable legal documents efficiently, which is of significant value to in-house legal teams.

6. Developer’s Guide: Granular Control Over Performance and Cost

Core Question: How can developers using Gemini 3 Pro flexibly balance processing quality, speed, and cost based on task requirements?

Gemini 3 Pro improves visual input processing by preserving the native aspect ratio of images, leading to across-the-board quality gains.

More importantly, developers gain fine-grained control over performance and cost through the new media_resolution parameter. This allows you to tune visual token usage to balance fidelity against consumption:

- High Resolution: Maximizes fidelity for tasks requiring fine detail, such as dense OCR or complex document understanding.
- Low Resolution: Optimizes for cost and latency on simpler tasks, such as general scene recognition or long-context tasks.

Developers should consult the official documentation to select the appropriate resolution setting for their specific application.
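As a minimal sketch of how the parameter might be wired in with the `google-genai` Python SDK: `choose_media_resolution` and its task labels are hypothetical, and the model ID `gemini-3-pro-preview` is the preview identifier at the time of writing, so check the official documentation before relying on either. The SDK is imported inside the request function so the selection helper works even without the package installed.

```python
HIGH_DETAIL_TASKS = {"dense_ocr", "document_understanding", "chart_reasoning"}  # hypothetical labels


def choose_media_resolution(task: str) -> str:
    """Pick a MediaResolution enum name: high for fine detail, low otherwise."""
    if task in HIGH_DETAIL_TASKS:
        return "MEDIA_RESOLUTION_HIGH"
    return "MEDIA_RESOLUTION_LOW"


def ask_about_image(image_bytes: bytes, prompt: str, task: str) -> str:
    """Send one PNG image plus a prompt with a task-appropriate media_resolution."""
    # Imported lazily so choose_media_resolution works without the SDK installed.
    from google import genai
    from google.genai import types

    client = genai.Client()  # reads the API key from the environment
    config = types.GenerateContentConfig(
        media_resolution=getattr(types.MediaResolution, choose_media_resolution(task)),
    )
    response = client.models.generate_content(
        model="gemini-3-pro-preview",  # preview model ID; verify against current docs
        contents=[types.Part.from_bytes(data=image_bytes, mime_type="image/png"), prompt],
        config=config,
    )
    return response.text
```

Keeping the resolution decision in a separate, pure function makes it easy to test and to tune per task without touching the request code.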

Conclusion: The Foundation for Next-Generation Multimodal Applications

The release of Gemini 3 Pro marks a critical inflection point where multimodal AI moves from “demonstration” to “utility.” It is no longer satisfied with answering “what’s in the picture” but is dedicated to solving the higher-order problem: “based on everything I see, how should I think and act?”

Whether it’s reconstructing historical texts, instructing a robot to tidy a room, automating office workflows, or distilling knowledge from video, the core achievement is the seamless fusion of visual perception, spatial reasoning, logical analysis, and code generation. For developers and enterprises, now is the ideal time to explore how to integrate these capabilities into products and services to solve real pain points and create entirely new user experiences.

Practical Summary / Actionable Checklist

- Document Intelligence: Experiment with using Gemini 3 Pro to process scanned contracts, academic papers, or historical archives for information extraction, format conversion, and cross-chart reasoning.
- Spatial Interaction: Integrate its open-vocabulary object recognition and spatial planning capabilities into robotics or AR application prototypes.
- Interface Automation: Build automated testing scripts or digital assistants to handle repetitive software operation tasks.
- Video Analysis: Develop tools to analyze instructional videos, sports training footage, or surveillance clips to extract temporal insights or generate summaries.
- Cost Optimization: Actively use the media_resolution parameter during development, switching between “high” and “low” modes based on task complexity.

One-Page Summary

- Core Breakthrough: A generational leap from visual recognition to visual and spatial reasoning.
- Four Pillars of Capability:
  - Document Understanding: Derendering + sophisticated reasoning for the messiest real-world documents.
  - Spatial Understanding: Pixel-precise pointing + physical world task planning.
  - Screen Understanding: High-precision UI perception for reliable desktop automation.
  - Video Understanding: High-frame-rate analysis + causal reasoning to extract knowledge and generate code from dynamic video.
- Key Industry Applications: Education (visual tutoring), Medical (image analysis), Law & Finance (complex document processing).
- Developer Control: Use the media_resolution parameter for granular control over processing quality and cost.

Frequently Asked Questions (FAQ)

- What type of problems is Gemini 3 Pro best suited to solve?
  It excels at complex tasks that require multi-step reasoning grounded in visual information. Examples include analyzing long reports with charts, generating code from video tutorials, and directing a robot to organize items in a cluttered environment.

- As a developer, how can I quickly start experimenting with Gemini 3 Pro?
  You can visit Google AI Studio, which provides a preview environment for interacting with Gemini 3 Pro. Detailed API documentation and guides are also available for developer integration.

- How can I control costs when using Gemini 3 Pro to process images and videos?
  Developers can use the media_resolution parameter in the API. Use “high” resolution for tasks requiring fine detail (like OCR) and “low” resolution for simpler recognition tasks to optimize costs.

- What does its application in education look like in practice?
  Students can upload photos of their handwritten homework. The model can not only judge correctness but also visually mark errors (e.g., with red annotations) directly on the original image, providing an immersive tutoring experience.

- What does Gemini 3 Pro’s spatial understanding mean for robotics?
  It enables robots to understand natural language instructions like “put the empty bottle from the table into the recycling bin” and autonomously plan the spatial execution path involving movement, grasping, and placement, significantly reducing programming complexity.

- How is its video understanding superior to previous models?
  It is stronger in three main areas: processing higher frame rates to capture fast action, performing causal reasoning to understand why events happen, and transforming long video content (like tutorials) into executable application code.

- What value does this model offer for legal tech companies?
  It can deeply understand and process legal contracts with complex redlines and revisions, efficiently extracting key clauses and comparing differences. This makes it well suited to high-volume, variable contract review workflows.

- What role does the model’s “thinking” mode play in video analysis?
  The “thinking” mode allows the model to reason internally while analyzing video, enabling it to trace chains of causality between events. For example, it can infer not just that “an athlete fell,” but that “the fall was caused by slipping on a wet court marker.”