LangChain on X: “Evaluating Deep Agents: Our Learnings”

Over the past month at LangChain, we’ve launched four applications built on top of the Deep Agents framework:

- A coding agent
- LangSmith Assist: an in-app agent to assist with various tasks in LangSmith
- Personal Email Assistant: an email assistant that learns from each user’s interactions
- A no-code agent building platform powered by meta deep agents

Developing and launching these agents required creating evaluations for each, and we gained valuable insights along the way! In this post, we’ll delve into the following patterns for evaluating deep agents.

- Deep agents demand custom test logic for each data point: each test case has its own success criteria.
- Running a deep agent for a single step is excellent for validating decision-making in specific scenarios (and it saves tokens too!)
- Full agent turns are ideal for testing assertions about the agent’s “end state”.
- Multiple agent turns simulate realistic user interactions but need to stay on track.
- Environment setup is crucial: Deep Agents require clean, reproducible test environments.

Glossary

Before we dive in, let’s define a few terms we’ll use throughout this post.

Ways to run an agent:

- Single step: Restrict the core agent loop to run for just one turn, determining the next action the agent will take.
- Full turn: Run the agent completely on a single input, which can involve multiple tool-calling iterations.
- Multiple turns: Run the agent in its entirety multiple times. Often used to simulate a “multi-turn” conversation between an agent and a user with several back-and-forth interactions.

Things we can test:

- Trajectory: The sequence of tools called by the agent, along with the specific tool arguments the agent generates.
- Final response: The final response returned by the agent to the user.
- Other state: Other values generated by the agent during operation (e.g., files, other artifacts)

#1: Deep Agents require more custom test logic (code) for each data point

Traditional LLM evaluation is straightforward:

- Build a dataset of examples
- Write an evaluator
- Run your application on the dataset to generate outputs, and score those outputs with your evaluator

Every data point is treated the same — processed through the same application logic and scored by the same evaluator.
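
To make the contrast concrete, here is a minimal sketch of that traditional loop. The dataset, the `app` stand-in, and the `exact_match` evaluator are all hypothetical and exist only for illustration; a real setup would call an LLM application and likely use a richer evaluator.

```python
from typing import Callable

# Hypothetical dataset: each example pairs an input with a reference answer.
dataset = [
    {"input": "2 + 2", "expected": "4"},
    {"input": "3 * 3", "expected": "9"},
]


def app(question: str) -> str:
    """Stand-in for the application under test (hypothetical)."""
    answers = {"2 + 2": "4", "3 * 3": "6"}  # the second answer is deliberately wrong
    return answers[question]


def exact_match(output: str, expected: str) -> float:
    """A single evaluator, applied identically to every data point."""
    return 1.0 if output.strip() == expected.strip() else 0.0


def run_eval(target: Callable[[str], str]) -> list[float]:
    """Run the same application logic and the same scorer over every example."""
    return [exact_match(target(ex["input"]), ex["expected"]) for ex in dataset]


scores = run_eval(app)
```

Note that nothing in this loop is specific to an individual example, which is exactly the assumption that stops holding for deep agents.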

Deep Agents challenge this assumption. You’ll want to test more than just the final message. The “success criteria” may also be more specific to each data point and may involve specific assertions about the agent’s trajectory and state.

Consider this example:

We have a calendar-scheduling deep agent that can remember user preferences. A user asks their agent to “remember to never schedule meetings before 9am”. We want to confirm that the calendar-scheduling agent updates its own memories in its filesystem to retain this information.

To test this, we might write assertions to verify:

- The agent called edit_file on the specified file path
- The agent informed the user of the memory update in its final message
- The file actually contains information about not scheduling early meetings. You could:
  - Use regex to look for a mention of “9am”
  - Or use an LLM-as-judge with specific success criteria for a more comprehensive analysis of the file update

LangSmith’s Pytest and Vitest integrations support this type of custom testing. You can make different assertions about the agent’s trajectory, final message, and state for each test case.

    import pytest
    from langsmith import testing as t

    # run_agent, get_agent_tool_calls, llm_as_judge_A, and llm_as_judge_B are
    # application-specific helpers that are not shown here.

    # Mark as a LangSmith test case
    @pytest.mark.langsmith
    def test_remember_no_early_meetings() -> None:
        user_input = "I don't want any meetings scheduled before 9 AM ET"
        # We can log the input to the agent to LangSmith
        t.log_inputs({"question": user_input})

        response = run_agent(user_input)
        # We can log the output of the agent to LangSmith
        t.log_outputs({"outputs": response})

        agent_tool_calls = get_agent_tool_calls(response)
        # We assert that the agent called the edit_file tool to update its memories
        assert any(
            tc["name"] == "edit_file" and tc["args"]["path"] == "memories.md"
            for tc in agent_tool_calls
        )

        # We log feedback from an llm-as-judge that the final message confirmed the memory update
        communicated_to_user = llm_as_judge_A(response)
        t.log_feedback(key="communicated_to_user", score=communicated_to_user)

        # We log feedback from an llm-as-judge that the memories file now contains the right info
        memory_updated = llm_as_judge_B(response)
        t.log_feedback(key="memory_updated", score=memory_updated)

For a general code snippet on how to use Pytest, check out the relevant resources.

This LangSmith integration automatically logs all test cases to an experiment, allowing you to view traces for failed test cases (to debug issues) and track results over time.
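
The regex option mentioned earlier could look something like the sketch below. The pattern and the `mentions_nine_am` helper are our own illustration, not part of any library, and the set of time formats it accepts is an assumption; an LLM-as-judge would handle paraphrases ("not before nine in the morning") that a pattern like this misses.

```python
import re

# Matches a standalone 9 AM time such as "9am", "9 AM", "09:00am", or "9:00 a.m".
# It does not match "19am", "9:30 am", "9 pm", or words like "9 amazing".
NINE_AM = re.compile(r"(?<!\d)0?9(:00)?\s*a\.?m\b", re.IGNORECASE)


def mentions_nine_am(text: str) -> bool:
    """Return True if the text contains a 9 AM time in one of the accepted forms."""
    return NINE_AM.search(text) is not None


# Hypothetical contents of the memory file after the agent's edit.
memory_file = "- Never schedule meetings before 9am\n- Prefers 30 minute meetings"
assert mentions_nine_am(memory_file)
```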

#2: Single-step evaluations are valuable and efficient

When running evaluations for Deep Agents, approximately half of our test cases were single-step evaluations — i.e., what did the LLM decide to do immediately after a specific series of input messages?

This is particularly useful for verifying that the agent called the correct tool with the right arguments in a specific scenario. Common test cases include:

- Did it call the right tool to search for meeting times?
- Did it inspect the right directory contents?
- Did it update its memories?

Regressions often occur at individual decision points rather than across full execution sequences. If using LangGraph, you can interrupt the agent right after it decides on a tool call, before the tool runs, and inspect that decision. This lets you catch issues early without the overhead of a complete agent sequence.

In the code snippet below, we manually introduce a breakpoint before the tools node, making it easy to run the agent for a single step. We can then inspect and make assertions about the state after that single step.

    import pytest

    # agent and inputs are defined elsewhere in the test module.

    @pytest.mark.langsmith
    @pytest.mark.asyncio  # assumes the pytest-asyncio plugin; the test must be async to use await
    async def test_single_step() -> None:
        state_before_tool_execution = await agent.ainvoke(
            inputs,
            # interrupt_before specifies nodes to stop before;
            # interrupting before the tools node allows us to inspect the tool call args
            interrupt_before=["tools"],
        )
        # We can see the message history of the agent, including the latest tool call
        print(state_before_tool_execution["messages"])
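
Once the agent has been paused after one step, the assertion itself can be quite small. The sketch below assumes messages are plain dictionaries with an optional `tool_calls` list of `{"name", "args"}` entries; that shape, the `assert_next_action` helper, and the `search_calendar` tool are all hypothetical, so adapt the accessors to whatever message objects your framework returns.

```python
from typing import Any


def last_tool_calls(messages: list[dict[str, Any]]) -> list[dict[str, Any]]:
    """Return the tool calls proposed in the most recent message, if any."""
    if not messages:
        return []
    return messages[-1].get("tool_calls", [])


def assert_next_action(
    messages: list[dict[str, Any]], name: str, expected_args: dict[str, Any]
) -> None:
    """Assert the agent's next action is one call to `name` with the given args."""
    calls = last_tool_calls(messages)
    assert len(calls) == 1, f"expected exactly one tool call, got {len(calls)}"
    call = calls[0]
    assert call["name"] == name, f"expected {name!r}, got {call['name']!r}"
    for key, value in expected_args.items():
        assert call["args"].get(key) == value, f"unexpected value for {key!r}"


# Hypothetical state captured after a single step.
messages = [
    {"role": "user", "content": "Find time with Sam on Tuesday"},
    {
        "role": "assistant",
        "content": "",
        "tool_calls": [{"name": "search_calendar", "args": {"day": "Tuesday"}}],
    },
]
assert_next_action(messages, "search_calendar", {"day": "Tuesday"})
```

Checking only the arguments you care about, rather than the whole argument dictionary, keeps the test from failing when the model adds a harmless extra field.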

#3: Full agent turns provide a complete picture

Think of single-step evaluations as your “unit tests” that ensure the agent takes the expected action in a specific scenario. Meanwhile, full agent turns are also valuable — they show you a complete picture of the end-to-end actions your agent takes.

Full agent turns let you test agent behavior in multiple ways:

-

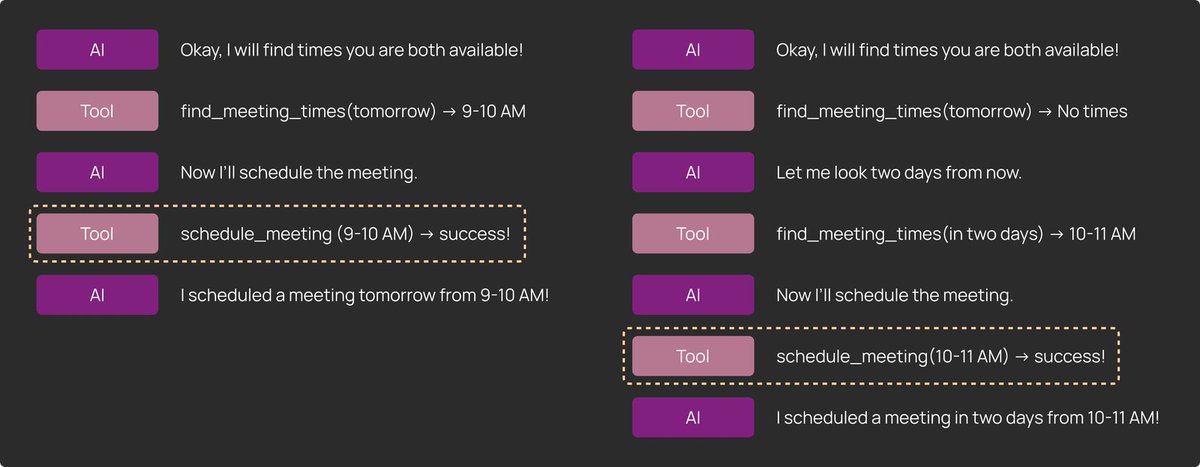

Trajectory: A very common way to evaluate a full trajectory is to ensure that a particular tool was called at some point during the action, regardless of the exact timing. In our calendar scheduler example, the scheduler might need multiple tool calls to find a suitable time slot that works for all parties.

[

](https://x.com/LangChainAI/article/2006589207196930109/media/2006584619517554688)

-

Final Response: In some cases, the quality of the final output matters more than the specific path taken by the agent. We found this to be true for more open-ended tasks like coding and research.

[

](https://x.com/LangChainAI/article/2006589207196930109/media/2006584657908002816)

-

Other State: Evaluating other state is very similar to evaluating an agent’s final response. Some agents will create artifacts instead of responding to the user in a chat format. Examining and testing these artifacts is easy by inspecting an agent’s state in LangGraph. -

For a coding agent → read and then test the files that the agent wrote. -

For a research agent → confirm the agent found the right links or sources.

-

Full agent turns give you a complete view of your agent’s execution. LangSmith makes it easy to view your full agent turns as traces, where you can see high-level metrics like latency and token usage, while also analyzing specific steps down to each model call or tool invocation.
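
As a rough illustration of the "called at some point" style of trajectory check, the sketch below counts tool calls across a whole message history without caring about order. The dictionary message shape and the tool names (`search_calendar`, `create_event`) are assumptions made for the example.

```python
from collections import Counter
from typing import Any


def tool_call_counts(messages: list[dict[str, Any]]) -> Counter:
    """Count tool calls by name across the whole message history."""
    counts: Counter = Counter()
    for message in messages:
        for call in message.get("tool_calls", []):
            counts[call["name"]] += 1
    return counts


# Hypothetical full-turn history for the calendar scheduler.
history = [
    {"role": "user", "content": "Find a slot for Sam and me next week"},
    {"role": "assistant", "tool_calls": [{"name": "search_calendar", "args": {"person": "me"}}]},
    {"role": "tool", "content": "..."},
    {"role": "assistant", "tool_calls": [{"name": "search_calendar", "args": {"person": "Sam"}}]},
    {"role": "tool", "content": "..."},
    {"role": "assistant", "tool_calls": [{"name": "create_event", "args": {"start": "Tue 10:00"}}]},
    {"role": "tool", "content": "ok"},
    {"role": "assistant", "content": "Booked Tuesday at 10:00."},
]

counts = tool_call_counts(history)
# The event must be created exactly once; how many searches it took does not matter.
assert counts["create_event"] == 1
assert counts["search_calendar"] >= 1
```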

#4: Running an agent across multiple turns simulates full user interactions

Some scenarios require testing agents across multi-turn conversations with multiple sequential user inputs. The challenge is that if you naively hardcode a sequence of inputs and the agent deviates from the expected path, the subsequent hardcoded user input may not make sense.

We addressed this by adding conditional logic in our Pytest and Vitest tests. For example:

- Run the first turn, then check the agent’s output. If the output is as expected, run the next turn.
- If not, fail the test early. (This was possible because we had the flexibility to add checks after each step.)

This approach allowed us to run multi-turn evaluations without having to model every possible agent branch. If we wanted to test the second or third turn in isolation, we simply set up a test starting from that point with appropriate initial state.
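
A minimal sketch of that conditional, fail-early structure is shown below. The `run_turns` helper and the `scripted_agent` stand-in are hypothetical; in a real test the agent would be your deep agent and each check could be an assertion on the trajectory or an LLM-as-judge call.

```python
from typing import Callable

Message = dict[str, str]
Agent = Callable[[list[Message]], str]  # takes the conversation so far, returns a reply
Check = Callable[[str], bool]  # decides whether a reply is still on track


def run_turns(agent: Agent, turns: list[tuple[str, Check]]) -> list[Message]:
    """Run scripted user turns, stopping at the first reply that goes off track."""
    history: list[Message] = []
    for i, (user_input, check) in enumerate(turns, start=1):
        history.append({"role": "user", "content": user_input})
        reply = agent(history)
        history.append({"role": "assistant", "content": reply})
        if not check(reply):
            # Later scripted inputs would no longer make sense, so fail here.
            raise AssertionError(f"turn {i} went off track: {reply!r}")
    return history


def scripted_agent(history: list[Message]) -> str:
    """Stand-in for a real agent (hypothetical)."""
    last = history[-1]["content"].lower()
    if "schedule" in last:
        return "Which day works for you?"
    if "tuesday" in last:
        return "Booked for Tuesday at 10am."
    return "Sorry, I did not understand."


history = run_turns(
    scripted_agent,
    [
        ("Please schedule a meeting with Sam", lambda r: "day" in r.lower()),
        ("Tuesday", lambda r: "booked" in r.lower()),
    ],
)
```

Because the second input is only sent once the first check passes, the test never feeds the agent a follow-up that contradicts what it just said.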

#5: Setting up the right evaluation environment is important

Deep Agents are stateful and designed to handle complex, long-running tasks — often requiring more complex environments for evaluation.

Unlike simpler LLM evaluations where the environment is limited to a few usually stateless tools, Deep Agents need a fresh, clean environment for each evaluation run to ensure reproducible results.

Coding agents illustrate this clearly. There’s an evaluation environment for TerminalBench that runs inside a dedicated Docker container or sandbox. For DeepAgents CLI, we use a more lightweight approach: we create a temporary directory and run the agent inside it for each test case.

The key point: Deep Agent evaluations require environments that reset per test — otherwise, your evaluations become unreliable and hard to reproduce.
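
The temporary-directory approach can be sketched with the standard library alone. The `run_in_fresh_workspace` helper and the `fake_agent_run` stand-in below are hypothetical; inside a pytest suite, the built-in `tmp_path` fixture gives you a comparable per-test directory without any helper.

```python
import tempfile
from pathlib import Path
from typing import Callable, TypeVar

T = TypeVar("T")


def run_in_fresh_workspace(task: Callable[[Path], T]) -> T:
    """Run `task` inside a new empty directory that is deleted afterwards."""
    with tempfile.TemporaryDirectory() as tmp:
        return task(Path(tmp))


def fake_agent_run(workspace: Path) -> list[str]:
    """Stand-in for an agent run: report existing files, then write a new one."""
    seen_before = sorted(p.name for p in workspace.iterdir())
    (workspace / "notes.md").write_text("draft")
    return seen_before


# Each run starts from an empty directory, so the file written by the
# first run is not visible to the second.
first = run_in_fresh_workspace(fake_agent_run)
second = run_in_fresh_workspace(fake_agent_run)
assert first == [] and second == []
```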

Tip: Mock your API requests

LangSmith Assist needs to connect to real LangSmith APIs. Running evaluations against live services can be slow and costly. Instead, record HTTP requests to a filesystem and replay them during test execution. For Python, relevant tools work well; for JS, we proxy fetch requests through a Hono app.

Mocking or replaying API requests makes Deep Agent evaluations faster and easier to debug, especially when the agent relies heavily on external system state.
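
To show the record-and-replay idea in isolation, here is a small standard-library sketch. It is not the API of any particular recording library: the `ReplayingFetcher` class, the `(method, url)` call shape, and the one-JSON-file-per-request layout are all assumptions made for illustration, and a real setup would also need to account for request bodies and headers.

```python
import hashlib
import json
from pathlib import Path
from typing import Callable

Fetch = Callable[[str, str], dict]  # (method, url) -> JSON-serializable response


class ReplayingFetcher:
    """Record responses to disk on first use and replay them afterwards."""

    def __init__(self, cassette_dir: Path, live_fetch: Fetch) -> None:
        self.cassette_dir = cassette_dir
        self.live_fetch = live_fetch
        self.cassette_dir.mkdir(parents=True, exist_ok=True)

    def _path(self, method: str, url: str) -> Path:
        # Key each recording by a hash of the normalized request line.
        key = hashlib.sha256(f"{method.upper()} {url}".encode()).hexdigest()
        return self.cassette_dir / f"{key}.json"

    def __call__(self, method: str, url: str) -> dict:
        path = self._path(method, url)
        if path.exists():
            # Replay: no network call is made.
            return json.loads(path.read_text())
        # Record: call the live service once and persist the response.
        response = self.live_fetch(method, url)
        path.write_text(json.dumps(response))
        return response
```

In a test, you would pass the agent's tools a `ReplayingFetcher` in place of the live client, commit the recorded files alongside the tests, and delete them whenever the upstream API changes and the recordings need refreshing.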

Evaluate Deep Agents with LangSmith

The techniques above are common patterns we observed when writing our own test suites for deep agent-powered applications. You’ll likely only need a subset of these patterns for your specific application — which is why it’s important for your evaluation framework to be flexible. If you’re building a deep agent and getting started with evaluations, check out LangSmith!