Six Practical Guidelines to Improve Software Testing Efficiency with Cursor

In modern software development workflows, the efficiency of the testing phase directly affects release speed and the quality of the user experience. In traditional models, many development teams rely heavily on dedicated QA engineers, which leads to long testing cycles and delayed feedback. As an AI-powered development tool, Cursor offers a set of features for automating testing that help keep testing from becoming a bottleneck in the development process. This article details six practical recommendations from Cursor’s QA engineers to help development teams establish systematic QA methods and automate more of their testing process.

1. Implement Automated Core Functionality Testing with Playwright MCP

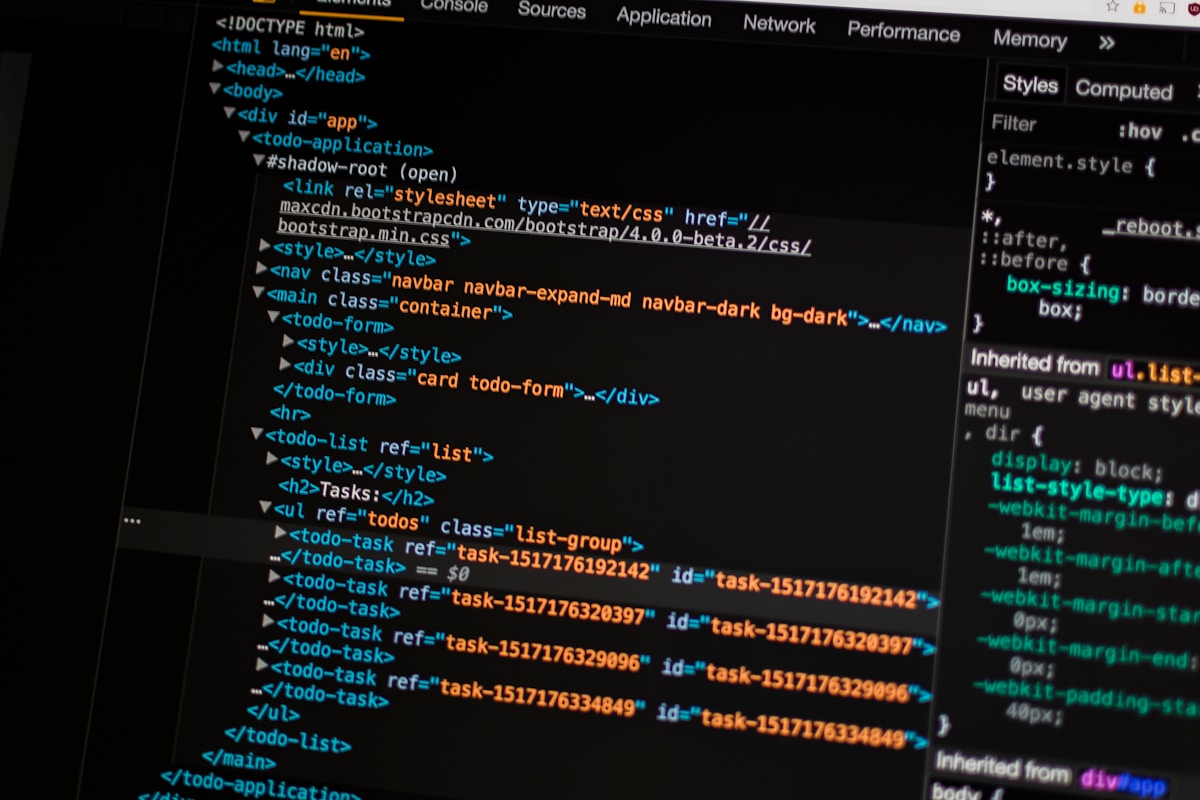

For web application developers, ensuring the stability of core functionality is the top priority of testing work. Playwright MCP is a Model Context Protocol (MCP) server that exposes Playwright’s browser automation to AI agents. Used together with Cursor, it gives you an automated way to test your web application’s core functionality.

The core value of Playwright MCP is that it lets Cursor’s agent drive a real browser, simulating real user behavior and repeatedly checking your application’s critical paths. Unlike traditional testing tools that require writing extensive scripts by hand, Cursor’s AI can use MCP to set up test scenarios quickly and help surface potential bugs and edge cases. Such edge cases are often overlooked in manual testing but can become hidden risks to the user experience.

The basic setup is not complicated. First, configure Playwright in your project and register the Playwright MCP server in Cursor; then ask Cursor’s agent to exercise the core functional modules you want to test. The agent can draft basic test cases and refine them based on how the application actually behaves when it runs. Notably, Playwright MCP supports screenshots: adding the --vision flag at startup enables a screenshot-based mode for visually checking UI elements and layouts, which is very helpful for keeping the frontend’s appearance consistent.
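As a minimal sketch, assuming the @playwright/mcp npm package and Cursor’s project-level .cursor/mcp.json file, the TypeScript helper below builds the configuration that registers Playwright MCP, optionally with the --vision flag mentioned above. The helper name buildMcpConfig is hypothetical, and flag names may differ between @playwright/mcp releases, so check the package’s README for the version you install.

```typescript
// Shape of one server entry in Cursor's MCP config: a command plus its arguments.
interface McpServerEntry {
  command: string;
  args: string[];
}

interface McpConfig {
  mcpServers: Record<string, McpServerEntry>;
}

// Hypothetical helper: builds the JSON that would go into .cursor/mcp.json.
// Assumes the server is launched with `npx @playwright/mcp@latest`.
function buildMcpConfig(vision: boolean): McpConfig {
  const args = ["@playwright/mcp@latest"];
  if (vision) {
    // Screenshot-based mode, per the --vision flag described in the text
    // (the flag name may vary by @playwright/mcp version).
    args.push("--vision");
  }
  return {
    mcpServers: {
      playwright: { command: "npx", args },
    },
  };
}

// Print the file contents; save the output as .cursor/mcp.json to use it.
console.log(JSON.stringify(buildMcpConfig(true), null, 2));
```

Generating the file from code is only a convenience here; writing the same JSON by hand works just as well.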

In practice, many development teams have found Playwright MCP particularly suitable for quickly verifying application stability. When you iterate on core functionality, rerunning the MCP-driven test suite produces a test report within minutes, covering aspects such as functional completeness, response speed, and compatibility. This fast feedback shortens the development cycle and lets developers find and fix issues right away.

Combining MCP with Cursor offers another significant advantage: easier maintenance of test cases. A major pain point of traditional automated testing is that test scripts need extensive changes whenever the application interface changes. Because Cursor’s AI can inspect the current page through MCP, it can identify changed interface elements and update the test logic accordingly, greatly reducing maintenance costs.

2. Enable Bugbot for Automated Code Issue Detection

In software development, the earlier issues are discovered, the lower the repair cost. Cursor’s built-in Bugbot tool is an automated bug detection system designed based on this principle. Initially created for Cursor’s internal development team, it has now become a trusted code quality assurance tool for many developers.

Bugbot connects to your GitHub repository and reviews code changes, promptly flagging potential issues. This preventive check helps teams resolve most problems before code is merged and deployed, significantly reducing post-release failures. In practice, Cursor’s own engineering team has found that Bugbot dramatically reduced the number of errors reaching release, improving both code quality and development efficiency.

Enabling Bugbot is straightforward. First, connect your GitHub repository to Cursor and grant the permissions Bugbot needs to access the codebase. Once configured, Bugbot reviews newly submitted code, such as pull requests, as development continues.

Bugbot analyzes code changes with AI models to identify common programming errors, security vulnerabilities, and code smells. It is particularly good at catching details humans easily overlook, such as unhandled exceptions, resource leaks, and logic errors. Compared to traditional code review, Bugbot applies its checks consistently and can work around the clock.
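To make "resource leaks" and "unhandled exceptions" concrete, here is a hypothetical TypeScript example of the kind of pattern an automated reviewer is meant to flag, alongside a fix. FakeConnection is an illustrative stand-in for any resource (file handle, socket, database connection); this is not Bugbot’s actual output.

```typescript
// Stand-in for a resource that must be released (file, socket, DB connection).
class FakeConnection {
  static open = 0; // number of connections currently open

  constructor() {
    FakeConnection.open++;
  }

  query(sql: string): string {
    if (sql.trim() === "") throw new Error("empty query");
    return `result of ${sql}`;
  }

  close(): void {
    FakeConnection.open--;
  }
}

// Leaky version: if query() throws, close() is never reached.
function runQueryLeaky(sql: string): string {
  const conn = new FakeConnection();
  const result = conn.query(sql);
  conn.close();
  return result;
}

// Fixed version: try/finally guarantees the connection is closed,
// while the error still propagates to the caller.
function runQuerySafe(sql: string): string {
  const conn = new FakeConnection();
  try {
    return conn.query(sql);
  } finally {
    conn.close();
  }
}
```

Both functions behave identically on the happy path, which is why this class of bug tends to slip through manual review and is better caught by a tool.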

When using Bugbot, refer to Cursor’s official documentation for configuration and tuning. The documentation describes a rich set of rule configuration options that let you customize detection rules to your project, so Bugbot better matches your team’s coding standards and quality requirements. It also explains how to interpret Bugbot’s reports and fix the issues it identifies.

Many teams have noticed that after using Bugbot for some time, not only has code quality improved, but team coding habits have also gradually improved. Bugbot provides consistent feedback, helping developers form good programming practices and fundamentally reducing error occurrence. This subtle influence is often more valuable than simply solving specific problems.

3. Utilize Terminal Tools for Efficient Unit Testing

Unit testing is a fundamental aspect of ensuring code quality, and Cursor’s terminal tools provide developers with an efficient unit testing environment, particularly suitable for testing newly developed backend functionalities. Through terminal tools, developers can quickly execute tests, view results, and obtain immediate feedback, significantly improving unit testing efficiency.

A major advantage of Cursor’s terminal tools is their deep integration with AI. As Cursor QA engineer Juan recommends, adding sufficiently detailed logs in your code enables AI to better understand test scenarios and execution results, thereby providing more accurate feedback and suggestions. This log-driven testing method allows developers to locate problem roots faster and reduce debugging time.
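A minimal sketch of the kind of logging Juan describes, assuming no particular logging library: each entry carries a level, a message, and structured context, so that both a developer and the AI reading terminal output can see what the code was doing when a test failed. The createLogger and applyDiscount functions are hypothetical. The verbose switch mirrors the idea of detailed logs locally and concise logs in CI; in Node.js you might set it from an environment variable such as CI.

```typescript
type Level = "debug" | "info" | "error";

interface LogEntry {
  level: Level;
  message: string;
  context: Record<string, unknown>;
}

// Hypothetical logger: records entries and writes them as JSON lines.
// When verbose is false (e.g. in CI), debug entries are dropped.
function createLogger(verbose: boolean, sink: (line: string) => void = console.log) {
  const entries: LogEntry[] = [];
  function log(level: Level, message: string, context: Record<string, unknown> = {}): void {
    if (level === "debug" && !verbose) return;
    const entry: LogEntry = { level, message, context };
    entries.push(entry);
    sink(JSON.stringify(entry));
  }
  return {
    entries,
    debug: (msg: string, ctx?: Record<string, unknown>) => log("debug", msg, ctx),
    info: (msg: string, ctx?: Record<string, unknown>) => log("info", msg, ctx),
    error: (msg: string, ctx?: Record<string, unknown>) => log("error", msg, ctx),
  };
}

// Example function under test: logs its inputs, failures, and result.
function applyDiscount(price: number, percent: number, logger = createLogger(true)): number {
  logger.debug("applyDiscount called", { price, percent });
  if (percent < 0 || percent > 100) {
    logger.error("invalid discount", { percent });
    throw new RangeError(`percent out of range: ${percent}`);
  }
  const result = price * (1 - percent / 100);
  logger.info("discount applied", { result });
  return result;
}
```

Emitting one JSON object per line keeps the output easy to read in a terminal and easy to parse later, for example when summarizing a CI run.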

When using terminal tools for unit testing, several best practices are worth following. First, establish a clear test directory structure that separates test code from business code, which simplifies management and keeps tests independent. Second, adopt a modular testing strategy by writing an independent test suite for each functional module, which allows tests to run in parallel and improves efficiency.

Terminal tools support various mainstream testing frameworks, allowing developers to choose appropriate tools based on project requirements. When executing tests, the tool displays real-time test progress and results in the terminal, including key information such as passed cases, failed cases, and execution time. For failed tests, detailed error logs appear directly in the terminal to help developers quickly locate issues.

Handling test logs is an important part of terminal testing. With appropriate log configuration you can control the level of detail: detailed logs for debugging during development and concise logs in CI environments. In Node.js, you can use exec from the built-in child_process module to capture terminal output and analyze the test results further, as shown below:

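```javascript
// Run a Jest test file and highlight its per-file PASS/FAIL lines.
const { exec } = require('child_process');

exec('npx jest your_test_file_path', (err, stdout, stderr) => {
  // Jest exits with a non-zero code when any test fails, so `err` is also set
  // in that case; report it, but still analyze the output below.
  if (err) {
    console.error(`Jest exited with code ${err.code}`);
  }

  // Jest writes its test results to stderr by default, so check both streams.
  const lines = `${stdout}\n${stderr}`.split('\n');
  lines.forEach(line => {
    if (line.includes('PASS')) {
      console.log(`✅ ${line}`);
    } else if (line.includes('FAIL')) {
      console.error(`❌ ${line}`);
    }
  });
});
```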

This log processing method can help you automatically analyze test results and can even be integrated into team notification systems to push important test status changes in real-time.
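Building on the notification idea, here is a minimal sketch that turns Jest’s summary line (for example "Tests:       1 failed, 5 passed, 6 total") into counts and a short message for a chat webhook. It assumes Jest’s default reporter format, which could change between versions; Jest’s --json flag produces machine-readable results and is the sturdier option for production use. The function names are hypothetical.

```typescript
interface TestSummary {
  passed: number;
  failed: number;
  skipped: number;
  total: number;
}

// Parses a line such as "Tests:       1 failed, 5 passed, 6 total".
// Returns null if the line is not a Jest "Tests:" summary line.
function parseJestSummary(line: string): TestSummary | null {
  const match = /^Tests:\s+(.*)$/.exec(line.trim());
  if (!match) return null;
  const summary: TestSummary = { passed: 0, failed: 0, skipped: 0, total: 0 };
  // Each comma-separated part looks like "<count> <label>", e.g. "5 passed".
  for (const part of match[1].split(",")) {
    const [count, label] = part.trim().split(/\s+/);
    if (label === "passed" || label === "failed" || label === "skipped" || label === "total") {
      summary[label] = Number(count);
    }
  }
  return summary;
}

// Builds a short status message suitable for a team notification channel.
function formatNotification(s: TestSummary): string {
  const status = s.failed > 0 ? "FAILING" : "passing";
  return `Tests ${status}: ${s.passed}/${s.total} passed, ${s.failed} failed`;
}
```

Labels the parser does not recognize (such as "todo") are ignored rather than treated as errors, so an unfamiliar summary line degrades gracefully.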

Terminal tools also support partial test execution, allowing developers to specify only specific test cases or suites to run – extremely useful when debugging specific functionalities. Combined with Cursor’s AI capabilities, you can even specify tests to run through natural language descriptions, further simplifying the testing process.
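As one concrete way to run only part of a suite, Jest treats a positional argument as a regular expression over test file paths and --testNamePattern (short form -t) as a regular expression over full test names, including the enclosing describe blocks. The sketch below builds such an argument list; buildJestArgs is a hypothetical helper, and it escapes the title so a literal test name with parentheses or dots is not misread as a regex.

```typescript
// Escapes regex metacharacters so a literal test title can be used as a pattern.
function escapeRegExp(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Hypothetical helper: builds arguments for `npx` that run only matching Jest tests.
// The positional pathPattern filters test files; --testNamePattern filters test names.
function buildJestArgs(options: { pathPattern?: string; testTitle?: string }): string[] {
  const args = ["jest"];
  if (options.pathPattern) args.push(options.pathPattern);
  if (options.testTitle) args.push("--testNamePattern", escapeRegExp(options.testTitle));
  return args;
}

// In Node.js these could be passed to child_process.execFile("npx", args, callback),
// which avoids shell quoting issues with spaces in the test title.
const args = buildJestArgs({ pathPattern: "calculator", testTitle: "should handle empty array gracefully" });
console.log(args);
```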

Long-term use of terminal tools for unit testing helps development teams establish good “test-driven development” habits. Because testing becomes so convenient, developers are more willing to write test cases alongside functional code, forming a positive cycle that continuously improves code quality.

4. Leverage AI for Automatic Test Case Generation

Writing test cases is often time-consuming and labor-intensive, especially for large projects where maintaining comprehensive test suites requires significant resources. Cursor’s AI capabilities offer an innovative solution to this problem – automatically generating test cases, allowing developers to focus more energy on feature development rather than worrying excessively about regression test coverage.

Cursor’s AI test case generation feature can understand your code logic and functional requirements, automatically creating targeted test suites. This process is fully integrated into Cursor’s workflow – simply describe the functionality you want to test in the chat interface, and AI will generate corresponding test code. This approach not only saves time but also generates more comprehensive test scenarios, including edge cases that developers might overlook.

Cursor’s QA team has experimented with various testing frameworks, including Cypress, Selenium, Eggplant, and Playwright, and found that Playwright works best with AI-generated test cases. They therefore recommend Playwright as the default testing framework, especially in combination with Playwright MCP for generating core functionality tests, where the pairing is most effective.

The process of using AI to generate test cases is very intuitive. First, clearly describe the functional modules and key scenarios you want to test in Cursor’s chat interface. Second, specify your testing framework (such as Playwright). Finally, AI will generate complete test code that you can use directly or fine-tune according to actual needs.
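The three steps above (describe the module and scenarios, name the framework, let the AI generate the code) can be captured in a reusable prompt template so requests stay consistent across a team. This is only a sketch: TestGenRequest and buildTestPrompt are hypothetical names, and the wording of the prompt is an illustrative assumption, not a format Cursor requires.

```typescript
// Inputs for a test-generation request, mirroring the steps described above.
interface TestGenRequest {
  module: string;      // the functional module under test
  scenarios: string[]; // key scenarios, including edge cases
  framework: string;   // e.g. "Playwright"
  language?: string;   // defaults to TypeScript
}

// Hypothetical helper: turns the request into a chat prompt for Cursor.
function buildTestPrompt(req: TestGenRequest): string {
  const language = req.language ?? "TypeScript";
  const scenarioList = req.scenarios.map((s, i) => `${i + 1}. ${s}`).join("\n");
  return [
    `Write ${req.framework} tests in ${language} for the ${req.module}.`,
    "Cover these scenarios:",
    scenarioList,
    "Use one test per scenario with a descriptive test name.",
  ].join("\n");
}

console.log(
  buildTestPrompt({
    module: "login module",
    scenarios: ["valid login redirects to the dashboard", "wrong password shows an error"],
    framework: "Playwright",
  }),
);
```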

To make sure these automatically generated tests fit into your continuous integration (CI) process, Cursor recommends creating a .github/workflows folder and defining the test workflows there, since that is where GitHub Actions looks for workflow files. Tests then run automatically whenever code is pushed to the GitHub repository, ensuring new changes do not break existing functionality. A typical CI configuration installs dependencies, sets up the test environment, runs the test cases, and generates test reports.
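GitHub Actions reads workflow definitions from YAML files in .github/workflows. To keep the examples in one language, the sketch below is a small TypeScript script that renders such a file for a Playwright project; the YAML it produces is the part that matters. It assumes an npm project with Playwright already installed as a dev dependency, and it uses the public actions/checkout, actions/setup-node, and actions/upload-artifact actions. The file name tests.yml and the Node.js version are arbitrary choices.

```typescript
// Hypothetical generator for .github/workflows/tests.yml.
// Steps follow the typical shape: install, set up, run tests, publish report.
function renderWorkflow(nodeVersion = "20"): string {
  return `name: tests
on: [push, pull_request]
jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-node@v4
        with:
          node-version: "${nodeVersion}"
      - run: npm ci
      - run: npx playwright install --with-deps
      - run: npx playwright test
      - uses: actions/upload-artifact@v4
        if: always()
        with:
          name: playwright-report
          path: playwright-report/
`;
}

// Redirect the output into .github/workflows/tests.yml.
console.log(renderWorkflow());
```

The if: always() condition uploads the HTML report even when tests fail, which is usually when you need it most.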

The advantages of AI-generated test cases are evident not only in initial creation but also in subsequent maintenance. When your application features change, simply have AI re-analyze the updated code, and it will automatically adjust test cases to ensure they remain synchronized with functionality. This dynamic adaptability significantly reduces test maintenance costs and solves the “test rot” problem in traditional automated testing.

In practice, many development teams have found that the quality of AI-generated test cases improves with use. As Cursor picks up the team’s testing style and project conventions, it generates increasingly relevant test code, and teams can keep refining the AI’s output through feedback, creating a virtuous cycle.

5. Prioritize TypeScript for Writing Test Code

In automated testing, choosing the right programming language affects not only test code quality but also development efficiency and maintenance costs. Cursor’s QA engineers strongly recommend using TypeScript (.ts) for writing test code because it provides an ideal foundation for AI-assisted testing while improving code readability and maintainability.

TypeScript’s static type system is one of its greatest advantages. Compared to plain JavaScript, TypeScript lets you declare types explicitly, which makes the structure of code clearer and helps AI understand its intent more accurately. Cursor’s AI supports TypeScript well, using type information to generate more precise test code and more effective refactoring suggestions, which improves overall development efficiency.

Using TypeScript for test code brings multiple benefits. First, type checking catches many potential errors at compile time, reducing runtime failures. In test code, this means problems in the test logic itself surface earlier, making the tests more reliable. Second, type definitions act as self-documentation, helping other developers (and your future self) understand the intent and structure of tests more quickly.
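A small illustration of both benefits, using a hypothetical User type: a typed fixture factory documents what a valid test object looks like, and the compiler rejects misspelled fields or invalid values before any test runs. The names User and makeUser are assumptions for this sketch.

```typescript
// Hypothetical domain type used by the tests.
interface User {
  id: number;
  email: string;
  role: "admin" | "member";
  active: boolean;
}

// Typed fixture factory: sensible defaults, overridable per test.
// Partial<User> accepts only real User fields, each with its declared type.
function makeUser(overrides: Partial<User> = {}): User {
  return { id: 1, email: "user@example.com", role: "member", active: true, ...overrides };
}

const admin = makeUser({ role: "admin" });
const inactive = makeUser({ active: false });

// Both of these would be rejected by the TypeScript compiler:
// makeUser({ role: "owner" });   // "owner" is not assignable to "admin" | "member"
// makeUser({ emial: "x@y.z" });  // 'emial' does not exist in type Partial<User>
```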

When actually writing TypeScript test code, you can draw on many mature patterns and practices. For example, using Mocha as the test runner and Chai as the assertion library creates a powerful testing combination. Below is a simple TypeScript test example:

```typescript
import { expect } from 'chai';
import { calculateTotal } from '../src/utils/calculator';

describe('Calculator Utility', () => {
  describe('calculateTotal function', () => {
    it('should return correct sum of positive numbers', () => {
      const result = calculateTotal([10, 20, 30]);
      expect(result).to.equal(60);
    });

    it('should handle empty array gracefully', () => {
      const result = calculateTotal([]);
      expect(result).to.equal(0);
    });

    it('should correctly sum negative numbers', () => {
      const result = calculateTotal([-5, 10, -3]);
      expect(result).to.equal(2);
    });
  });
});
```

This example shows the clear structure of TypeScript test code. Because calculateTotal’s parameter and return types are declared in its signature, the input-output relationship of each test case is clear at a glance, which also helps AI understand the purpose of each test.
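The test file imports calculateTotal from ../src/utils/calculator without showing it. A minimal sketch of that module, assuming it simply sums an array of numbers, makes the typed contract explicit: the signature says it takes number[] and returns number, so a call such as calculateTotal(['10']) would be rejected by the compiler before any test runs. This implementation is an assumption for illustration, not code from the article.

```typescript
// src/utils/calculator.ts (hypothetical implementation assumed by the tests above)
// Sums a list of numbers; an empty list totals 0 thanks to the initial value.
export function calculateTotal(values: number[]): number {
  return values.reduce((sum, value) => sum + value, 0);
}
```

The explicit initial value of 0 passed to reduce is what makes the "empty array" test pass; without it, reduce would throw on an empty array.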

Cursor’s QA engineers share that their own test code and most project code are written in TypeScript with remarkable results. Not only has test stability improved, but team collaboration has also become smoother, as type definitions reduce much communication overhead. New team members can understand existing test code more quickly and integrate into the development process faster.

For teams accustomed to JavaScript, migrating to TypeScript may require some learning investment but is worthwhile in the long run. Cursor’s AI can help smooth this transition by automatically converting existing JavaScript test code to TypeScript and providing type definition suggestions, significantly reducing migration difficulties.

6. Use Background Agents for End-to-End Testing

End-to-end testing is a critical stage in verifying overall application functionality. It simulates real user scenarios to ensure that the various components work together correctly. Although Cursor’s Background Agents feature is still in its early stages, it has shown great potential for end-to-end testing, giving developers an intelligent testing option.

The core idea of Background Agents is using AI agents to automatically execute testing tasks in the background. Unlike traditional end-to-end testing tools that require writing complex scripts, Background Agents can understand application business logic through AI, automatically generate test processes, and execute them. This approach significantly lowers the barrier to end-to-end testing, allowing more developers to participate in testing work.

When developing new features, Cursor recommends using Background Agents to automatically generate at least one end-to-end test. This test should cover the main user flow of the feature to ensure core functionality integrity and stability. As features iterate, you can gradually expand test coverage to form a comprehensive test suite.

The Background Agents workflow typically includes several key steps: first, AI analyzes the application structure and functionality to identify critical user paths; second, it generates corresponding test scripts to simulate user operations along these paths; third, it executes tests and records results; finally, it generates detailed test reports highlighting discovered issues.

Although Background Agents are still evolving, they already possess several useful features. For example, they can remember previous test operations to maintain contextual continuity in subsequent tests; they can handle simple dynamic content such as dates and randomly generated content; and they can recognize common UI components and interact with them. These capabilities enable Background Agents to handle testing requirements for many practical application scenarios.
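How Background Agents handle dynamic content internally is not described here. One common technique in end-to-end testing for the same problem is to normalize values that change on every run (timestamps, dates, generated IDs) into stable placeholders before comparing page content. The sketch below shows that technique; the patterns and function name are illustrative assumptions.

```typescript
// Patterns for values that change on every run, each with a stable placeholder.
// Order matters: UUIDs and full timestamps are replaced before plain dates.
const DYNAMIC_PATTERNS: Array<[RegExp, string]> = [
  // UUIDs such as 3f2b8c1e-9d4a-4e6b-8a2f-1c3d5e7f9a0b
  [/[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}/gi, "<UUID>"],
  // ISO-8601 UTC timestamps such as 2024-05-01T12:34:56.789Z
  [/\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}(?:\.\d+)?Z/g, "<TIMESTAMP>"],
  // Plain dates such as 2024-05-01
  [/\d{4}-\d{2}-\d{2}/g, "<DATE>"],
];

// Replaces dynamic values so two captures of the same page can be compared.
function normalizeDynamicContent(text: string): string {
  return DYNAMIC_PATTERNS.reduce(
    (acc, [pattern, placeholder]) => acc.replace(pattern, placeholder),
    text,
  );
}
```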

Compared to other end-to-end testing tools, Background Agents offer several distinctive advantages. First is strong adaptability – when the application interface undergoes minor changes, AI can automatically adjust test logic, reducing maintenance costs. Second is learning ability – as the number of test runs grows, Agents become more familiar with the application’s characteristics, steadily improving testing efficiency and accuracy. Finally, they scale well – developers can expand test scenarios through simple instructions without writing complex code.

In practical applications, Background Agents are particularly suitable for quickly verifying overall flows of new features. For example, when developing a new user registration feature, Background Agents can automatically generate test processes including accessing the registration page, filling out forms, submitting data, and verifying the success page. This rapid feedback mechanism allows developers to discover feature integration issues early in development.
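The registration example maps onto the workflow described earlier: an ordered list of user steps, executed one by one, with each result recorded for the report. The sketch below models that structure against a hypothetical in-memory app so it runs on its own. It is not how Background Agents work internally; in a real suite each run function would drive a browser (for example through Playwright) instead of mutating the app object.

```typescript
// One step of a user flow: a description plus an action that resolves
// to true (passed) or false (failed).
interface FlowStep {
  description: string;
  run: () => Promise<boolean>;
}

interface StepResult {
  description: string;
  passed: boolean;
  error?: string;
}

// Executes steps in order, records each result, and stops at the first
// failure, since later steps depend on earlier ones.
async function runFlow(steps: FlowStep[]): Promise<StepResult[]> {
  const results: StepResult[] = [];
  for (const step of steps) {
    try {
      const passed = await step.run();
      results.push({ description: step.description, passed });
      if (!passed) break;
    } catch (err) {
      results.push({ description: step.description, passed: false, error: String(err) });
      break;
    }
  }
  return results;
}

// Hypothetical in-memory stand-in for the application under test.
const app = { page: "", form: {} as Record<string, string>, users: new Set<string>() };

const registrationFlow: FlowStep[] = [
  { description: "open registration page", run: async () => { app.page = "/register"; return true; } },
  {
    description: "fill out form",
    run: async () => {
      app.form = { email: "new@example.com", password: "s3cret!" };
      return app.page === "/register";
    },
  },
  { description: "submit data", run: async () => { app.users.add(app.form.email); app.page = "/welcome"; return true; } },
  { description: "verify success page", run: async () => app.page === "/welcome" && app.users.has("new@example.com") },
];

runFlow(registrationFlow).then((results) => {
  for (const r of results) console.log(`${r.passed ? "PASS" : "FAIL"} ${r.description}`);
});
```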

The Cursor team is continuously improving Background Agents’ capabilities, with future plans to add more powerful visual recognition, more complex scenario handling, and deeper issue analysis features. For the current version, it’s recommended to use Background Agents as a supplementary tool for end-to-end testing, combined with traditional testing methods to achieve optimal results.

Conclusion: Building an Efficient Automated Testing System

The goal of software testing is not just to find bugs but to establish a mechanism that continuously safeguards software quality. Together, these six recommendations from Cursor form a complete automated testing system, spanning unit testing to end-to-end testing and code scanning to test generation.

Adopting these methods can bring multiple benefits to development teams. First is improved quality – through comprehensive automated testing, issues can be discovered and resolved early in development, reducing post-release failures. Second is increased efficiency – automated testing frees developers from repetitive manual testing, allowing them to focus on more valuable feature development. Finally, there are cost reductions – discovering issues early means lower repair costs, and automated testing reduces reliance on large numbers of dedicated QA personnel.

Implementing these testing methods doesn’t require doing everything at once – teams can progress gradually according to their situation. It’s recommended to start with the areas that bring immediate benefits, such as enabling Bugbot for code scanning while introducing TypeScript for writing test code, then gradually implementing more complex end-to-end testing and AI-generated test cases.

It’s important to note that automated testing isn’t meant to completely replace manual testing but to complement it. Automated testing excels at handling repetitive, logically clear scenarios, while manual testing has advantages in exploratory testing and user experience evaluation. An efficient testing strategy should organically combine both to leverage their respective strengths.

As AI technology continues to develop, software testing is evolving toward greater intelligence and automation. The tools and methods provided by Cursor embody this trend, making testing more efficient, accurate, and sustainable. For development teams, embracing these new technologies not only improves current project quality but also lays the foundation for future technological development.

Finally, establishing a good testing culture is equally important. When testing is no longer seen as a burden in the development process but as a necessary investment in ensuring and improving product quality, teams can truly realize the value of these tools and methods. Through continuous practice and optimization, every development team can find a testing strategy that suits their needs, ensuring software quality while iterating quickly and providing users with more reliable product experiences.