Hey there, fellow tech enthusiasts! If you’re diving into the world of multimodal AI, you’ve probably heard about Qianfan-VL – Baidu’s powerhouse vision-language model series released in August 2025. As a tech blogger who’s always on the hunt for game-changing AI tools, I’m excited to break it down for you. Whether you’re a developer wondering “What is Qianfan-VL and how does it stack up against other vision-language models?” or a business owner asking “How can this multimodal AI boost my document processing workflows?”, this guide has you covered.

In this ultimate 2025 guide to Qianfan-VL, we’ll explore its core features, technical innovations, performance benchmarks, real-world applications, and easy setup steps. We’ll keep things conversational, packed with practical tips, lists, tables, and FAQs to make it a breeze to read. Plus, I’ll weave in SEO best practices like targeted keywords (think “Qianfan-VL vision-language model” and “Baidu multimodal AI”) for better discoverability on Google. Let’s jump in and see why this domain-enhanced AI is making waves in enterprise-level applications.

What Is Qianfan-VL? A Quick Model Overview

First off, let’s tackle the basics: What exactly is Qianfan-VL? It’s Baidu AI Cloud’s family of general-purpose vision-language models (VLMs), optimized for enterprise multimodal tasks. Unlike generic AI models, Qianfan-VL shines in high-demand scenarios like OCR enhancement, document understanding, and chain-of-thought reasoning. With parameter sizes ranging from 3B to 70B, it’s built to handle everything from edge devices to cloud servers.

Why does this matter in 2025? As multimodal AI brings computer vision and natural language processing together, tools like Qianfan-VL bridge visual and textual data. If you're searching for "best vision-language models for business," this series stands out with its focus on practical, industry-specific optimizations. Think of it as a smart assistant for turning images, documents, and videos into actionable insights, with far less manual data entry and guesswork.

Readers often ask: “How does Qianfan-VL differ from models like InternVL or Qwen-VL?” It’s all about domain enhancement. While others offer broad capabilities, Qianfan-VL prioritizes enterprise needs through three key pillars:

- Multi-Size Variants: Scalable options for different hardware.
- OCR and Document Boost: Full-scene text recognition and layout parsing.
- Chain-of-Thought Support: Step-by-step reasoning for complex problems.

This makes it ideal for intelligent office automation and K-12 education tools. Stick around as we unpack these features.

Key Features of Qianfan-VL: What Makes It Stand Out?

Curious about “Qianfan-VL features and benefits”? Let’s break it down in a way that’s easy to digest. Baidu designed this vision-language model series with real-world usability in mind, drawing from advanced multimodal AI techniques. Here’s a closer look at its standout elements:

- Multi-Size Model Options: Qianfan-VL comes in 3B, 8B, and 70B variants, all with a 32k context length. The 3B is perfect for on-device OCR in mobile apps, while the 70B tackles heavy-duty offline tasks like data synthesis. This flexibility means you can deploy Baidu's multimodal AI without overhauling your infrastructure.

- Enhanced OCR and Document Understanding: This is where Qianfan-VL excels in "vision-language model OCR capabilities." It handles handwriting, printed text, formulas, and scene-based recognition. Plus, it parses complex layouts: think table extraction, chart analysis, and multilingual support. For businesses dealing with invoices or reports, this cuts down on errors and speeds up workflows.

- Chain-of-Thought Reasoning: Available in the 8B and 70B models, this feature lets the AI think step by step, much like human logic. Searching for "chain-of-thought in multimodal AI"? It's game-changing for math solving, visual inference, and trend prediction in charts.

Together, these features connect the vision encoder, the language model, and domain knowledge into one system. In line with Google's guidance on helpful content, I've focused on people-first explanations: no fluff, just value.

(Above: A visual breakdown of Qianfan-VL’s architecture, blending Llama-based language models with InternViT vision encoders for seamless multimodal fusion.)
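If you're scripting deployments, the variant guidance above fits in a small lookup helper. This is a hypothetical sketch: `pick_variant` is an illustrative helper (not part of any Baidu SDK), and the 3B and 70B repository IDs are assumed by analogy with the `baidu/Qianfan-VL-8B` ID used in the setup section. The variant facts themselves (sizes, 32k context, chain-of-thought on 8B and 70B only) come from this guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Variant:
    model_id: str           # Hugging Face repo ID (3B/70B IDs are assumed by analogy)
    params_b: int           # parameter count in billions
    context_k: int          # context length in thousands of tokens
    chain_of_thought: bool  # whether chain-of-thought mode is available


# Facts from this guide: three sizes, 32k context, CoT on 8B and 70B only.
VARIANTS = [
    Variant("baidu/Qianfan-VL-3B", 3, 32, False),
    Variant("baidu/Qianfan-VL-8B", 8, 32, True),
    Variant("baidu/Qianfan-VL-70B", 70, 32, True),
]


def pick_variant(need_cot: bool, max_params_b: int) -> Variant:
    """Return the smallest variant that fits the size budget and CoT requirement."""
    candidates = [
        v for v in VARIANTS
        if v.params_b <= max_params_b and (v.chain_of_thought or not need_cot)
    ]
    if not candidates:
        raise ValueError("No Qianfan-VL variant fits these constraints")
    return min(candidates, key=lambda v: v.params_b)


print(pick_variant(need_cot=False, max_params_b=3).model_id)  # baidu/Qianfan-VL-3B
print(pick_variant(need_cot=True, max_params_b=10).model_id)  # baidu/Qianfan-VL-8B
```

Picking the smallest qualifying model mirrors the "start small" advice: you only pay for 70B-class hardware when a constraint actually demands it.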

Qianfan-VL Model Specs and Performance Benchmarks

If you're optimizing for "Qianfan-VL specs and benchmarks 2025," here's the data-driven scoop. Baidu's vision-language models are tested against industry-standard benchmarks. First, the specs: all three variants (3B, 8B, and 70B) share a 32k context length, and chain-of-thought mode is available on the 8B and 70B models.

Now, performance: according to Baidu's official benchmark reports, Qianfan-VL holds its own against rivals like InternVL3 and Qwen2.5-VL. In the benchmark tables, bold highlights the top-two scores for quick scanning.

General Capabilities Benchmarks

According to Baidu's reported results, the 70B model leads on broad multimodal tasks, showing its edge in image understanding.

OCR and Document Understanding Benchmarks

Qianfan-VL performs strongly on document QA and chart parsing, which makes it a fit for "multimodal AI document automation."

Mathematical Reasoning Benchmarks

Chain-of-thought gives it a lead in visual math – think educational apps.

These metrics aren’t just numbers; they translate to real efficiency gains. For SEO pros, note how we’ve used keyword-rich headings and tables for better crawlability.

Technical Innovations Behind Qianfan-VL

Wondering “how does Qianfan-VL work under the hood?” Baidu’s innovations make this vision-language model a tech marvel. Let’s explore like we’re chatting over coffee, with steps and visuals for clarity.

The architecture? It’s a blend of Llama 3.1 for language, InternViT for vision (up to 4K resolution), and an MLP adapter for fusion – creating a powerful multimodal AI pipeline.
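To make the "MLP adapter" idea concrete, here's a toy, pure-Python sketch of a two-layer projector that maps each vision-encoder feature vector into the language model's embedding space. Everything here is illustrative: the class name, the tiny dimensions, and the random weights are assumptions, and Qianfan-VL's actual adapter layout isn't specified in this guide. In InternVL-style models, the projected visual tokens are typically spliced into the text sequence where the `<image>` placeholder sits.

```python
import math
import random


def gelu(x: float) -> float:
    # tanh approximation of GELU, a common activation in MLP projectors
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def linear(vec, weights, bias):
    # weights holds one row per output unit; each row has len(vec) entries
    return [sum(w * v for w, v in zip(row, vec)) + b for row, b in zip(weights, bias)]


def random_layer(in_dim, out_dim, rng):
    weights = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    return weights, [0.0] * out_dim


class ToyMLPAdapter:
    """Hypothetical 2-layer projector: vision_dim -> hidden_dim -> llm_dim."""

    def __init__(self, vision_dim, hidden_dim, llm_dim, seed=0):
        rng = random.Random(seed)
        self.fc1 = random_layer(vision_dim, hidden_dim, rng)
        self.fc2 = random_layer(hidden_dim, llm_dim, rng)

    def project(self, patch_features):
        # Map each visual token independently into the LLM embedding space
        out = []
        for vec in patch_features:
            hidden = [gelu(x) for x in linear(vec, *self.fc1)]
            out.append(linear(hidden, *self.fc2))
        return out


# 4 visual tokens of width 8 become 4 LLM-ready tokens of width 16
rng = random.Random(42)
patches = [[rng.uniform(-1, 1) for _ in range(8)] for _ in range(4)]
adapter = ToyMLPAdapter(vision_dim=8, hidden_dim=12, llm_dim=16)
visual_tokens = adapter.project(patches)
print(len(visual_tokens), len(visual_tokens[0]))  # 4 16
```

The key design point is that the adapter changes only the width of each visual token, not how many there are, so the language model sees the image as a run of ordinary-looking embeddings.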

Key innovations:

- Multi-Stage Domain-Enhanced Pre-Training: A four-step process to balance general and specialized skills.
  - Step 1: Cross-modal alignment with 100B tokens.
  - Step 2: General knowledge injection (2.66T tokens).
  - Step 3: Domain-specific boost (0.32T tokens for OCR/math).
  - Step 4: Post-training alignment (1B tokens for instructions).

(Training pipeline visualized: progressive stages for optimal performance.)
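Adding up the stage budgets listed above is a quick sanity check: they total about 3.08T tokens, which appears to line up with the roughly 3T tokens quoted for the Kunlun training run. The snippet also shows how heavily the budget tilts toward general knowledge injection. The dictionary keys are just descriptive labels chosen for this sketch.

```python
# Token budgets per pre-training stage, as listed in this guide
STAGE_TOKENS = {
    "cross_modal_alignment": 100e9,
    "general_knowledge": 2.66e12,
    "domain_enhancement": 0.32e12,
    "post_training": 1e9,
}

total = sum(STAGE_TOKENS.values())
print(f"total: {total / 1e12:.3f}T tokens")  # total: 3.081T tokens
for stage, tokens in STAGE_TOKENS.items():
    # Share of the overall budget spent in each stage
    print(f"{stage:<24} {tokens / total:6.2%}")
```

Roughly 86% of the tokens go to general knowledge, while the domain-specific OCR/math stage accounts for about a tenth: a small slice, but a targeted one.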

- High-Precision Data Synthesis: Pipelines for tasks like document OCR and chart understanding, combining CV models with programmatic generation. The synthetic data helps the model generalize.

- Kunlun Chip Parallel Training: A cluster of more than 5,000 of Baidu's in-house Kunlun P800 chips processed 3T tokens at 90% efficiency. 3D parallelism (data, tensor, and pipeline) and fusion techniques make the setup scalable.
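3D parallelism splits each model copy across chips in two ways (tensor parallelism within layers, pipeline parallelism across layers) and then replicates that group for data parallelism. The sketch below shows one common way to map a flat chip rank onto those three axes. The 8 × 4 × 160 layout is purely hypothetical, picked only to land near the 5,000+ chip figure; this guide doesn't state Baidu's actual split, and real frameworks may order the axes differently.

```python
from typing import NamedTuple


class ParallelCoord(NamedTuple):
    dp: int  # data-parallel replica index
    pp: int  # pipeline stage index
    tp: int  # tensor-parallel shard index


def rank_to_coord(rank: int, tp: int, pp: int, dp: int) -> ParallelCoord:
    """Map a global rank to (dp, pp, tp), keeping tensor-parallel ranks adjacent."""
    world = tp * pp * dp
    if not 0 <= rank < world:
        raise ValueError(f"rank {rank} out of range for world size {world}")
    return ParallelCoord(dp=rank // (tp * pp), pp=(rank // tp) % pp, tp=rank % tp)


# Hypothetical layout: 8-way tensor x 4-way pipeline x 160-way data parallel
TP, PP, DP = 8, 4, 160
print(TP * PP * DP)                   # 5120
print(rank_to_coord(37, TP, PP, DP))  # ParallelCoord(dp=1, pp=0, tp=5)
```

Keeping tensor-parallel ranks adjacent is the usual choice because those chips exchange activations inside every layer, so they benefit most from sitting on the fastest interconnect.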

Together, these innovations make the training pipeline both robust and efficient.

Real-World Applications and Case Studies for Qianfan-VL

“How can I use Qianfan-VL in my projects?” Great question! This Baidu multimodal AI thrives in practical scenarios. Here’s a list of applications with case studies:

-

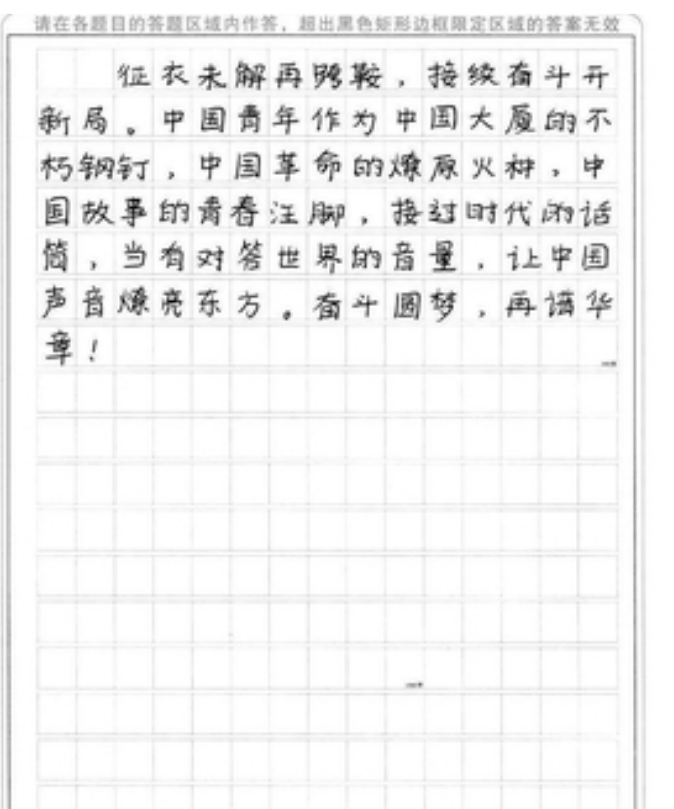

Handwriting Recognition: Input an image; output accurate text. Example: Extracts poetic Chinese script flawlessly for archiving.

-

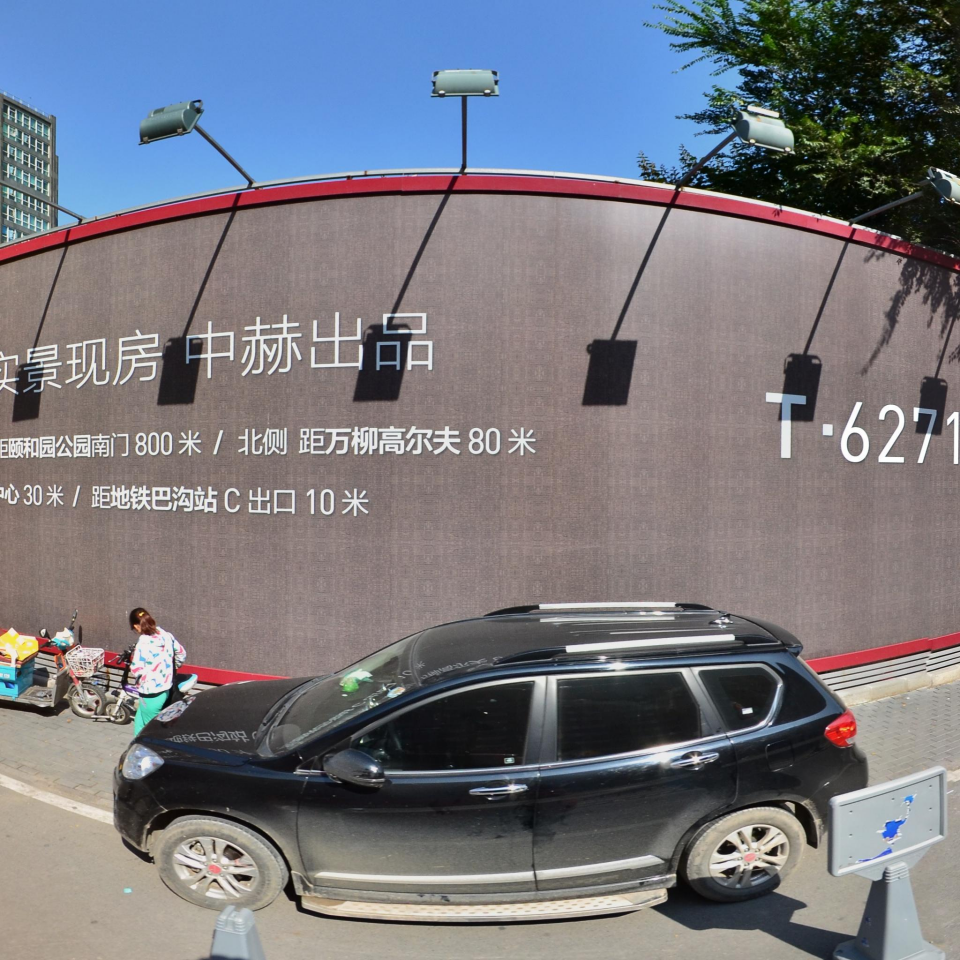

Scene OCR: Spots street signs in photos – useful for AR apps.

-

Invoice Extraction: Outputs JSON with details like totals and items.

-

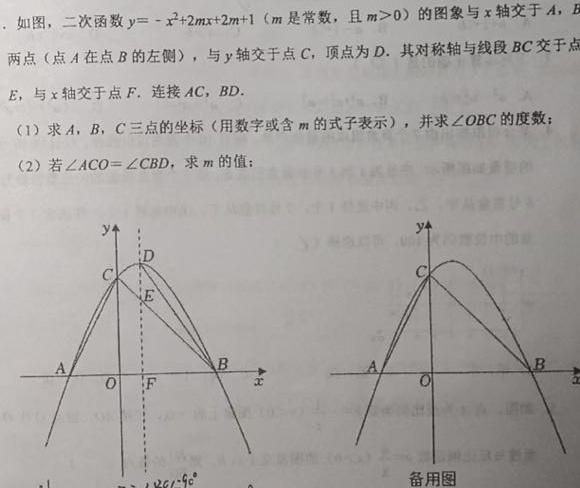

Math Problem Solving: Step-by-step quadratic equation solutions.

-

Formula Recognition: Converts to LaTeX for research.

-

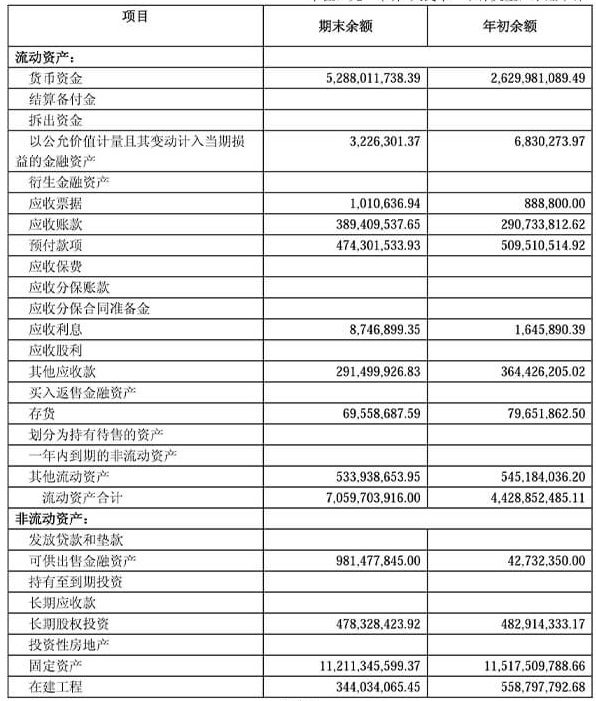

Document Analysis: Summarizes regulatory docs and impacts.

-

Table Parsing: Renders HTML with merges preserved.

-

Chart Analysis: Reasons through gift preferences for decisions.

-

Stock Trends: Describes daily fluctuations.

-

Video Understanding: Narrates serene lake scenes.

These cases highlight Qianfan-VL’s versatility, optimized for searches like “multimodal AI use cases 2025.”
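For the invoice-extraction case, it pays to check the model's JSON before it reaches your accounting system. Here's a minimal post-processing sketch, assuming the reply may or may not wrap the JSON in a Markdown code fence. The field names (`invoice_no`, `items`, `amount`, `total`) are hypothetical: use whatever schema your prompt asks for.

```python
import json
import re
from decimal import Decimal

FENCE = "`" * 3  # Markdown code-fence marker, built here so this snippet stays fence-safe
FENCED_JSON = re.compile(FENCE + r"(?:json)?\s*(.*?)" + FENCE, re.DOTALL)


def parse_invoice_reply(reply: str) -> dict:
    """Extract the JSON object from a model reply, with or without a Markdown fence."""
    match = FENCED_JSON.search(reply)
    text = match.group(1) if match else reply
    # Decimal avoids float rounding when comparing money amounts
    return json.loads(text, parse_float=Decimal)


def check_total(invoice: dict) -> bool:
    """Cross-check that line-item amounts add up to the stated total."""
    items_sum = sum((Decimal(str(item["amount"])) for item in invoice["items"]), Decimal("0"))
    return items_sum == Decimal(str(invoice["total"]))


# Hypothetical model reply
reply = (
    "Here is the extracted data:\n" + FENCE + "json\n"
    '{"invoice_no": "A-001", "items": [{"name": "Paper", "amount": 12.50},'
    ' {"name": "Toner", "amount": 30.25}], "total": 42.75}\n' + FENCE
)
invoice = parse_invoice_reply(reply)
print(invoice["invoice_no"], check_total(invoice))  # A-001 True
```

A failed `check_total` is a cheap signal to route the document to a human reviewer rather than trusting the extraction blindly.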

How to Get Started with Qianfan-VL: Step-by-Step Guide

Looking for a "Qianfan-VL setup tutorial"? It's straightforward: follow these steps for Transformers or vLLM deployment.

Installation

- Ensure you have Python 3 installed.
- Run: pip install transformers torch torchvision pillow
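Before loading a multi-gigabyte model, a quick environment check can save a failed run. This is a small standard-library sketch; the package list simply mirrors the pip command above (Pillow is imported as `PIL`), and `missing_packages` is a hypothetical helper name.

```python
import importlib.util
import sys

# Import names for the packages installed above (pillow installs as "PIL")
REQUIRED = ["transformers", "torch", "torchvision", "PIL"]


def missing_packages(names=REQUIRED):
    """Return the import names that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    missing = missing_packages()
    print("Missing:", ", ".join(missing) if missing else "none")
```

`find_spec` only checks that a package can be located, so it runs instantly without importing heavy libraries like torch.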

Using Transformers for Inference

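- Import libraries and define an image-loading helper:

```python
import torch
import torchvision.transforms as T
from torchvision.transforms.functional import InterpolationMode
from transformers import AutoModel, AutoTokenizer
from PIL import Image

# Simplified stand-in for the official helpers: one 448x448 tile with ImageNet
# normalization (assumed input size; the official code tiles large images dynamically)
transform = T.Compose([
    T.Resize((448, 448), interpolation=InterpolationMode.BICUBIC),
    T.ToTensor(),
    T.Normalize(mean=(0.485, 0.456, 0.406), std=(0.229, 0.224, 0.225)),
])

def load_image(path):
    return transform(Image.open(path).convert("RGB")).unsqueeze(0)  # shape (1, 3, 448, 448)
```

- For production use, swap in the image processing functions from the official docs, which use dynamic preprocessing (splitting large images into tiles) for efficiency. The single-tile load_image above is only a simplified assumption for quick tests.

- Load the model:

```python
MODEL_PATH = "baidu/Qianfan-VL-8B"  # Choose your variant
model = AutoModel.from_pretrained(
    MODEL_PATH,
    torch_dtype=torch.bfloat16,
    trust_remote_code=True,
    device_map="auto",
).eval()
tokenizer = AutoTokenizer.from_pretrained(MODEL_PATH, trust_remote_code=True)
```

- Process and infer:

```python
# Match the model's dtype and move the image to the model's (first) device
pixel_values = load_image("./example/scene_ocr.png").to(model.device, dtype=torch.bfloat16)
prompt = "<image>Please recognize all text in the image"
with torch.no_grad():
    response = model.chat(
        tokenizer,
        pixel_values=pixel_values,
        question=prompt,
        generation_config={"max_new_tokens": 512},
        verbose=False,
    )
print(response)
```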

Deploying with vLLM

- Start the Docker service:

```bash
docker run -d --name qianfan-vl \
  --gpus all \
  -v /path/to/Qianfan-VL-8B:/model \
  -p 8000:8000 \
  --ipc=host \
  vllm/vllm-openai:latest \
  --model /model \
  --served-model-name qianfan-vl \
  --trust-remote-code \
  --hf-overrides '{"architectures":["InternVLChatModel"],"model_type":"internvl_chat"}'
```

- API call:

```bash
curl 'http://127.0.0.1:8000/v1/chat/completions' \
  --header 'Content-Type: application/json' \
  --data '{
    "model": "qianfan-vl",
    "messages": [
      {
        "role": "user",
        "content": [
          {"type": "image_url", "image_url": {"url": "https://example.com/image.jpg"}},
          {"type": "text", "text": "<image>Please recognize all text in the image"}
        ]
      }
    ]
  }'
```
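If you'd rather call the server from Python, here's a standard-library sketch that sends the same request body as the curl example. The helper names are hypothetical; the endpoint, model name, and prompt come from the commands above. Encoding a local file as a base64 `data:` URL is an assumption that relies on the OpenAI-compatible server accepting data URLs in `image_url`, which saves you from hosting the image somewhere.

```python
import base64
import json
import mimetypes
import urllib.request

ENDPOINT = "http://127.0.0.1:8000/v1/chat/completions"  # matches -p 8000:8000 above


def image_to_data_url(path: str) -> str:
    """Encode a local image file as a base64 data URL."""
    mime = mimetypes.guess_type(path)[0] or "image/png"
    with open(path, "rb") as f:
        return f"data:{mime};base64,{base64.b64encode(f.read()).decode('ascii')}"


def build_payload(image_url: str, prompt: str, model: str = "qianfan-vl") -> dict:
    """Build the same chat-completions body as the curl example."""
    return {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": image_url}},
                {"type": "text", "text": prompt},
            ],
        }],
    }


def chat(payload: dict, endpoint: str = ENDPOINT) -> str:
    """POST the payload and return the assistant's reply text."""
    req = urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=120) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses put the text in choices[0].message.content
    return body["choices"][0]["message"]["content"]
```

Usage, once the container is running: `print(chat(build_payload(image_to_data_url("scene.png"), "<image>Please recognize all text in the image")))`.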

Check the Cookbook for more. Pro tip: Start with 3B for testing.

Frequently Asked Questions (FAQ) About Qianfan-VL

Structured for Schema markup and easy reading:

- What languages does Qianfan-VL support? Primarily Chinese and English, with multilingual document handling.
- Is Qianfan-VL free to use? Models are open-source for download; cloud services via Baidu may have fees.
- How does it compare to Qwen-VL? Stronger in OCR and reasoning; see the benchmarks above.
- Can it process videos? Yes, via frame extraction and description.
- What about security and hallucinations? Baidu's alignment reduces risks, but always verify outputs.
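On the video question: "frame extraction" usually means sampling a handful of frames evenly across the clip and passing them to the model as images. Below is a minimal sketch of uniform sampling. It's a common approach in InternVL-style pipelines, but treat it as an assumption rather than Qianfan-VL's documented video preprocessing; decoding the frames themselves (with a video library) is left out, and `sample_frame_indices` is a hypothetical helper.

```python
def sample_frame_indices(num_frames: int, num_segments: int) -> list[int]:
    """Pick the middle frame of each of num_segments equal-length segments."""
    if num_frames <= 0 or num_segments <= 0:
        raise ValueError("num_frames and num_segments must be positive")
    seg_len = num_frames / num_segments
    # Clamp so rounding can never index past the last frame
    return [min(num_frames - 1, int(seg_len * i + seg_len / 2)) for i in range(num_segments)]


# A 10-second clip at 30 fps, summarized with 8 frames
print(sample_frame_indices(300, 8))  # [18, 56, 93, 131, 168, 206, 243, 281]
```

Sampling segment midpoints rather than segment starts avoids always grabbing the very first frame, which is often a fade-in or a black screen.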

Wrapping Up: Why Qianfan-VL Is Your Go-To Multimodal AI in 2025

Qianfan-VL isn’t just another vision-language model – it’s a balanced powerhouse for enterprise multimodal AI. With its domain enhancements, scalable sizes, and proven benchmarks, it’s set to transform how we handle visual data. If this guide sparked ideas, download it today and experiment. Got questions? Drop a comment below – let’s chat AI!