Breaking the Real-Time Video Barrier: How MirageLSD Generates Infinite, Zero-Latency Streams

Picture this: During a video call, your coffee mug transforms into a crystal ball showing weather forecasts as you rotate it. While gaming, your controller becomes a lightsaber that alters the game world in real time. This isn’t magic – it’s MirageLSD technology in action.

The Live-Stream Diffusion Revolution

We’ve achieved what was previously considered impossible in AI video generation. In July 2025, our team at Decart launched MirageLSD – the first real-time video model that combines three breakthrough capabilities:

Why This Changes Everything

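Unlike previous systems, MirageLSD operates through a causal autoregressive framework: each output frame is generated from the incoming frame, the current prompt, and the frames already generated, and is then fed back as context for the next frame.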

This loop enables continuous transformation of live video feeds – whether from cameras, games, or video calls – with imperceptible delay.
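As a rough illustration of the loop described above, here is a minimal Python sketch. It is not the production implementation: `stream`, `generate_next`, `get_prompt`, `context_len`, and `toy_model` are hypothetical names chosen for this example, and a frame is represented as a plain list of floats rather than an image tensor.

```python
from collections import deque
from typing import Callable, Deque, Iterable, Iterator, List

Frame = List[float]  # stand-in for an image tensor (assumption for this sketch)


def stream(
    input_frames: Iterable[Frame],
    generate_next: Callable[[List[Frame], Frame, str], Frame],
    get_prompt: Callable[[], str],
    context_len: int = 4,
) -> Iterator[Frame]:
    """Yield one output frame per input frame, conditioning only on the past."""
    # Sliding window of previously generated frames (hypothetical context size).
    history: Deque[Frame] = deque(maxlen=context_len)
    for frame in input_frames:
        prompt = get_prompt()  # the prompt may change between frames
        out = generate_next(list(history), frame, prompt)
        history.append(out)  # the output is fed back as context
        yield out


def toy_model(history: List[Frame], frame: Frame, prompt: str) -> Frame:
    # Placeholder "model": blend the input frame with the last output frame.
    prev = history[-1] if history else frame
    return [0.5 * a + 0.5 * b for a, b in zip(frame, prev)]


frames = [[float(i)] * 2 for i in range(3)]
outputs = list(stream(frames, toy_model, lambda: "steampunk"))
```

Because the generator never looks at future input frames, it can emit each output as soon as the matching input arrives, which is the property that makes a causal design suitable for live feeds.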

Solving Two Fundamental Challenges

Challenge 1: The 30-Second Video Wall

Prior video models collapsed around 30 seconds due to error accumulation – where tiny imperfections compound until outputs become incoherent:
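A toy recurrence makes the compounding effect concrete. This is only an illustration with made-up numbers: `drift`, `per_frame_error`, `gain`, and the 24 frames-per-second rate are assumptions for this sketch, not measurements of any real model.

```python
from typing import List


def drift(num_frames: int, per_frame_error: float = 0.01, gain: float = 1.01) -> List[float]:
    """Toy model: each frame inherits the previous error, scaled by `gain`, plus a new one."""
    errors = []
    e = 0.0
    for _ in range(num_frames):
        e = gain * e + per_frame_error
        errors.append(e)
    return errors


# 30 seconds at an assumed 24 frames per second.
errors = drift(24 * 30)

# With a gain below 1 (errors are damped rather than amplified) the total stays bounded.
corrected = drift(24 * 30, gain=0.9)
```

When the gain is above 1, every frame amplifies what it inherited and the error grows without bound. When the gain is below 1, the error levels off, which is the behavior that error-correcting training aims for.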

Our Solution: History Augmentation

- Diffusion Forcing: Trains the model to denoise individual frames independently
- Controlled Corruption: Artificially introduces errors during training to build error-correction capabilities

Result: Continuous generation exceeding 120 minutes without quality degradation
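The two ideas above can be sketched together as a training-sample builder: each past frame gets its own noise level, and the history the model sees is deliberately corrupted while the target stays clean. This is a simplified sketch under stated assumptions, not the actual training code. `corrupt_history`, `make_training_example`, `max_noise`, and the use of Gaussian noise are all choices made for this example.

```python
import random
from typing import Dict, List, Tuple

Frame = List[float]  # stand-in for an image tensor (assumption for this sketch)


def corrupt_history(
    history: List[Frame], max_noise: float, rng: random.Random
) -> Tuple[List[Frame], List[float]]:
    """Corrupt each past frame with its own independently sampled noise level."""
    noisy, levels = [], []
    for frame in history:
        level = rng.uniform(0.0, max_noise)  # per-frame noise level
        noisy.append([x + rng.gauss(0.0, level) for x in frame])
        levels.append(level)
    return noisy, levels


def make_training_example(clip: List[Frame], max_noise: float = 0.3, seed: int = 0) -> Dict:
    """Split a clip into (corrupted context, clean target) for one training step."""
    rng = random.Random(seed)
    *history, target = clip
    noisy_history, levels = corrupt_history(history, max_noise, rng)
    # The model would be asked to predict the clean target from imperfect context.
    return {"context": noisy_history, "noise_levels": levels, "target": target}


clip = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
example = make_training_example(clip)
```

Training on imperfect context means the model has already seen inputs like its own slightly flawed outputs, so at inference time it has learned to correct them rather than build on them.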

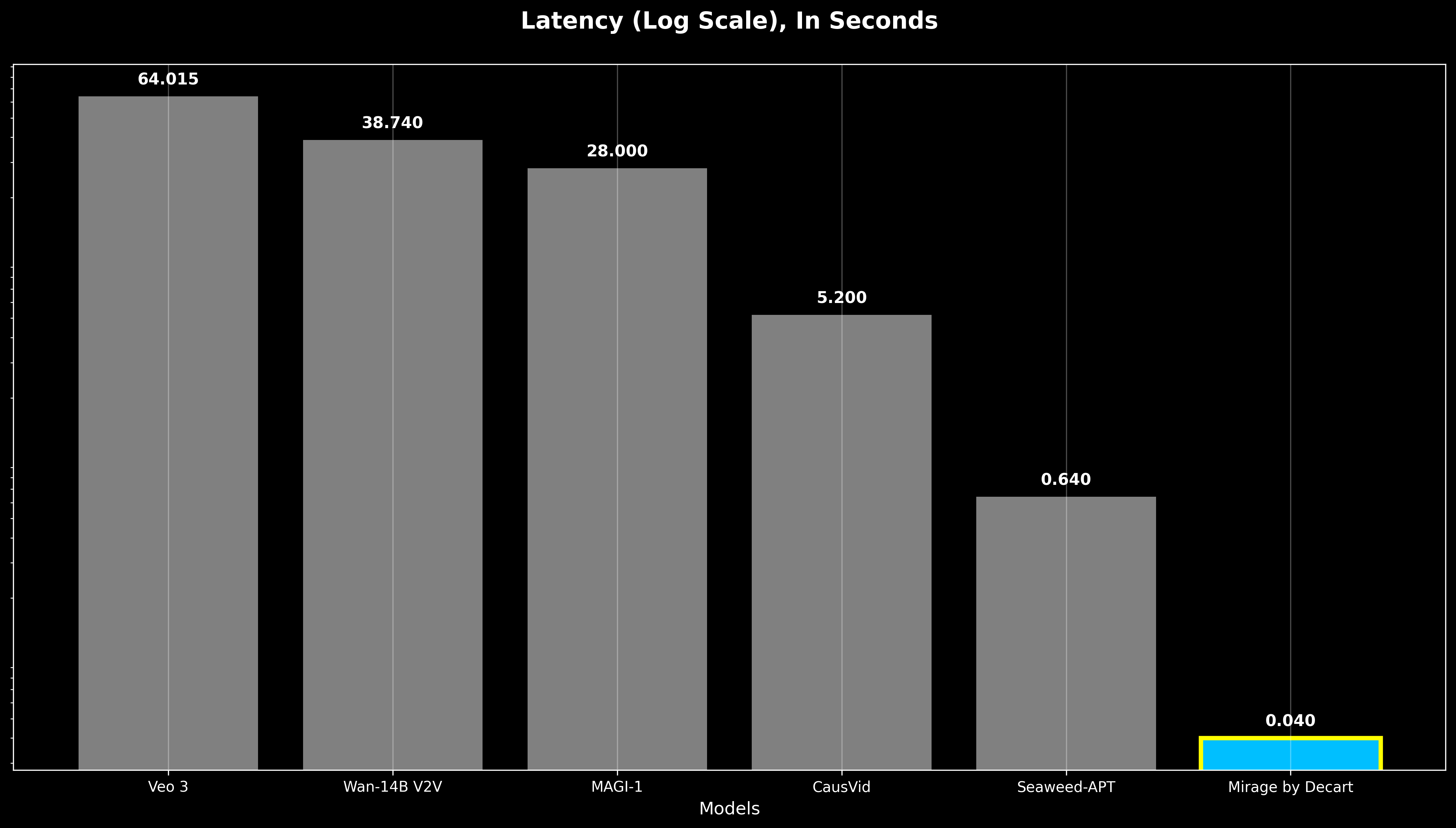

Challenge 2: The 40ms Real-Time Barrier

For video to feel seamless, each frame must arrive in under 40ms, roughly the interval between frames at 25 frames per second. Previous “real-time” systems were 16x slower.

Triple-Layer Optimization

Technical breakthroughs:

- Hopper GPU Kernels: Direct GPU-to-GPU communication eliminates data transfer bottlenecks
- Architecture-Aware Pruning: Aligns parameter matrices with GPU tensor cores
- Shortcut Distillation: Compresses 12 denoising steps into 3 (based on Frans et al. 2024)
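Some back-of-the-envelope arithmetic shows why the step count and matrix shapes matter. The sketch below is illustrative only: `per_step_budget_ms` ignores every cost other than denoising, and the multiple of 64 used in `align_down` is an assumed value for this example, not a figure from the MirageLSD implementation.

```python
def per_step_budget_ms(frame_budget_ms: float, denoise_steps: int) -> float:
    """Time available per denoising step if the whole frame budget went to denoising."""
    return frame_budget_ms / denoise_steps


def align_down(dim: int, multiple: int = 64) -> int:
    """Largest multiple of `multiple` not exceeding `dim` (never below one multiple)."""
    return max(multiple, (dim // multiple) * multiple)


budget_12 = per_step_budget_ms(40.0, 12)  # about 3.33 ms per step
budget_3 = per_step_budget_ms(40.0, 3)    # about 13.33 ms per step

# Pruning a hypothetical layer width of 1000 down to a hardware-friendly size.
pruned_width = align_down(1000)
```

Cutting 12 steps to 3 gives each step four times as much of the 40ms budget. Pruning to an aligned width rather than an arbitrary one keeps matrix dimensions in shapes that the GPU's matrix units handle efficiently.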

Transforming Real-World Applications

How Interactive Generation Works

Current implementation examples:

- 📱 Mobile AR: Transform surroundings through phone cameras (iOS/Android supported)
- 🎮 Gaming: Convert Minecraft blocks to steampunk mechanics in real-time
- 💻 Video Conferencing: Dynamically replace backgrounds with prompt-based scenes

Limitations and Development Roadmap

Current Constraints

2025 Release Schedule

Technical FAQ

How does this differ from Stable Diffusion?

Architectural contrast:

How is 40ms latency guaranteed?

Hardware-software co-design:

- Kernel optimization: Combined GPU operations
- Architecture tuning: GPU-aligned tensor shapes
- Distillation: 12-step → 3-step denoising
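A latency target like this is usually verified by measurement. The following is a generic timing harness, not part of MirageLSD: `measure_latencies_ms`, `meets_budget`, and the 99th-percentile threshold are assumptions for this sketch. When timing GPU work, the device would also need to be synchronized before each clock read, which this CPU-only example omits.

```python
import time
from typing import Callable, List


def measure_latencies_ms(step: Callable[[], None], runs: int = 50, warmup: int = 5) -> List[float]:
    """Time `step` repeatedly and return per-call latencies in milliseconds."""
    for _ in range(warmup):
        step()  # warm-up calls are not recorded
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        step()
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples


def meets_budget(samples: List[float], budget_ms: float = 40.0, quantile: float = 0.99) -> bool:
    """Check a high quantile of the latency samples against the budget, not just the mean."""
    ordered = sorted(samples)
    index = min(len(ordered) - 1, int(quantile * len(ordered)))
    return ordered[index] < budget_ms


samples = measure_latencies_ms(lambda: None, runs=10)
```

Checking a high quantile rather than the average matters for live video, because a single slow frame is visible as a stutter even when the mean latency looks fine.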

Why doesn’t the video degenerate?

Error-resistant training maintains stability even with significant input noise.

References and Resources

Further reading: