MetaAgent: A Self-Evolving AI System That Learns Through Practice

Introduction

Imagine an AI system that starts with basic skills but gradually becomes an expert through continuous practice and reflection, much like humans do. This is the core idea behind MetaAgent, an AI agent framework designed for complex knowledge-discovery tasks.

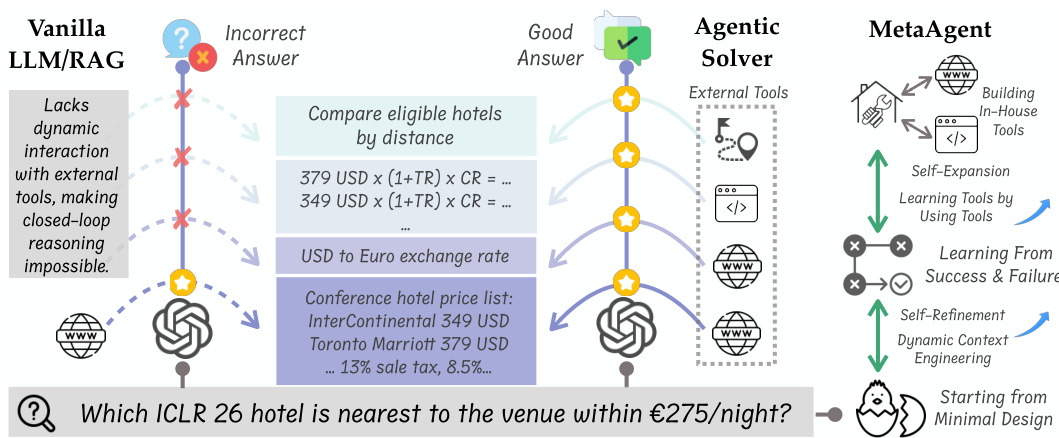

Figure 1: MetaAgent evolves through task completion

What Makes MetaAgent Unique?

Traditional AI systems either:

- Follow rigid, pre-programmed workflows, or
- Require massive training datasets.

MetaAgent takes a different approach by:

- Starting with minimal capabilities
- Learning through real-world task execution
- Continuously improving via self-reflection

Core Design Principles

1. Minimal Viable Workflow

MetaAgent begins with three simple steps:

1. Reason using current knowledge

2. Ask for help when stuck

3. Combine information to solve problems

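This modular design separates reasoning from tool execution: the central agent states what help it needs, and a tool router decides which tool handles the request. The agent can therefore focus on problem-solving without handling tool-specific details.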
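The three-step workflow can be sketched as a loop. This is a minimal illustration under stated assumptions, not MetaAgent's implementation: `central_agent` and `tool_router` are hypothetical callables, the `Step` record is an invented schema, and "asking for help" is modeled as the agent returning a natural-language request that the router turns into a tool result.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Step:
    # Either a final answer or a natural-language help request (hypothetical schema)
    answer: Optional[str] = None
    help_request: Optional[str] = None


def solve(question: str,
          central_agent: Callable[[str, List[str]], Step],
          tool_router: Callable[[str], str],
          max_turns: int = 5) -> Optional[str]:
    """Reason, ask for help, combine; repeat until an answer appears."""
    evidence: List[str] = []
    for _ in range(max_turns):
        # 1. Reason using current knowledge plus evidence gathered so far
        step = central_agent(question, evidence)
        if step.answer is not None:
            return step.answer
        # 2. Ask for help: the router, not the agent, decides which tool runs
        if step.help_request is not None:
            # 3. Combine: the tool result joins the evidence for the next turn
            evidence.append(tool_router(step.help_request))
    return None  # no answer within max_turns


# Toy stand-ins so the sketch runs end to end (hypothetical behaviour)
def toy_agent(question: str, evidence: List[str]) -> Step:
    if evidence:
        return Step(answer=f"Answer based on: {evidence[-1]}")
    return Step(help_request=f"Search the web for: {question}")


def toy_router(request: str) -> str:
    return f"result of [{request}]"


print(solve("Which building matches the constraints?", toy_agent, toy_router))
```

Keeping tool selection inside the router means the agent's prompt never has to describe tool signatures; swapping or adding tools only changes the router.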

2. Meta Tool Learning

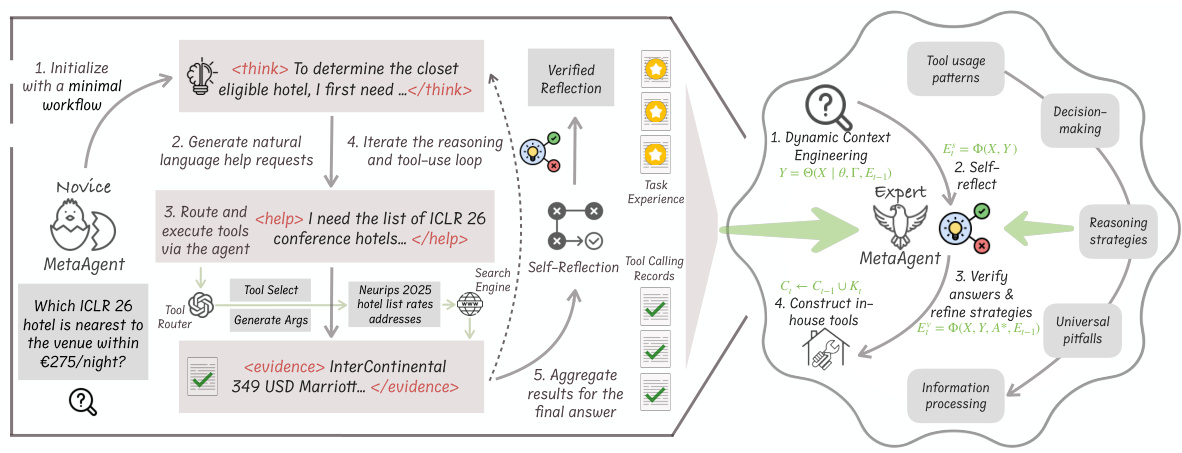

The system improves through two reflection mechanisms:

(1) Self-Reflection

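```python
# Simplified sketch: `llm` is any callable that maps a prompt string to a
# text reply; this parameter is an assumption added to make the code runnable
def self_reflection(task, solution, llm):
    prompt = (
        f"Task: {task}\nProposed solution: {solution}\n"
        "1. Analyze whether the reasoning is valid.\n"
        "2. Identify gaps or errors.\n"
        "3. Write short improvement notes."
    )
    feedback = llm(prompt)  # improvement notes; no ground truth needed
    return feedback
```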

(2) Verified Reflection

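```python
# Simplified sketch: `llm` is any callable that maps a prompt string to a
# text reply; this parameter is an assumption added to make the code runnable
def verified_reflection(task, solution, correct_answer, llm):
    prompt = (
        f"Task: {task}\nProposed solution: {solution}\n"
        f"Correct answer: {correct_answer}\n"
        "1. Compare the solution with the ground truth.\n"
        "2. Extract the reasoning patterns that worked.\n"
        "3. Identify the reasons for any failure."
    )
    actionable_insights = llm(prompt)
    return actionable_insights
```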

Figure 2: Performance improvement over time

3. Dynamic Context Engineering

The AI builds context for each task:

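```
Task context = {
    "Question": q,
    "Instructions": p,
    "Experience": ξ_{t-1}
}
```

Here q is the question, p is the instruction prompt, and ξ_{t-1} is the experience accumulated up to the previous step (t-1).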
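One way to turn this context into a single prompt for the agent is shown below. The three fields follow the task-context dictionary; the function name `build_context` and the section layout of the prompt are assumptions for illustration only.

```python
from typing import List


def build_context(question: str, instructions: str,
                  experience: List[str]) -> str:
    """Assemble the task context {q, p, ξ_{t-1}} into one prompt string.

    `experience` holds the notes accumulated so far (ξ_{t-1});
    the section headers are an illustrative layout, not a fixed format.
    """
    exp_lines = "\n".join(f"- {item}" for item in experience) or "- (none yet)"
    return (
        f"Question:\n{question}\n\n"
        f"Instructions:\n{instructions}\n\n"
        f"Experience:\n{exp_lines}"
    )


print(build_context(
    "Which building matches all constraints?",
    "Reason step by step; ask for help when stuck.",
    ["Check site size before accepting a candidate."],
))
```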

Experience accumulates through:

- Real-time reflection during tasks
- Post-task verification with known answers
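The two sources of experience can be folded into a simple update from ξ_{t-1} to ξ_t. In the sketch below, `reflect` and `verify` play the roles of `self_reflection` and `verified_reflection`; storing experience as a de-duplicated list of text notes is an assumption for illustration, not a description of MetaAgent's actual storage format.

```python
from typing import Callable, List, Optional


def update_experience(experience: List[str],
                      reflect: Callable[[str, str], List[str]],
                      verify: Callable[[str, str, str], List[str]],
                      task: str, solution: str,
                      known_answer: Optional[str] = None) -> List[str]:
    """Return ξ_t built from ξ_{t-1} by appending new, non-duplicate notes."""
    # Real-time reflection: always available, no ground truth needed
    new_notes = list(reflect(task, solution))
    # Post-task verification: only when a known answer exists
    if known_answer is not None:
        new_notes += verify(task, solution, known_answer)
    updated = list(experience)  # leave ξ_{t-1} untouched
    for note in new_notes:
        if note not in updated:  # skip duplicates so ξ stays compact
            updated.append(note)
    return updated


# Toy reflection functions (hypothetical) so the sketch runs
def toy_reflect(task: str, solution: str) -> List[str]:
    return ["Check every constraint before answering."]


def toy_verify(task: str, solution: str, answer: str) -> List[str]:
    return [f"Expected answer was {answer}; compare before finalizing."]


xi_0: List[str] = []
xi_1 = update_experience(xi_0, toy_reflect, toy_verify, "task 1", "draft")
xi_2 = update_experience(xi_1, toy_reflect, toy_verify, "task 2", "draft",
                         known_answer="Copper")
print(xi_2)
```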

4. In-House Knowledge Base

MetaAgent maintains a persistent memory:

Knowledge Base ← Knowledge Base ∪ (Web Data ∪ Code Results)

The knowledge base grows with each task, so later tasks can retrieve information gathered during earlier ones.
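The update rule is a set union, so re-adding a page that is already stored has no effect. The sketch below mirrors it with a Python set and adds a naive retriever. The class name is hypothetical, and the word-overlap score is a stand-in for the BGE-M3 embedding similarity mentioned in the deployment notes, not how MetaAgent actually ranks documents.

```python
from typing import Iterable, List, Set


class KnowledgeBase:
    """Store following KB ← KB ∪ (Web Data ∪ Code Results)."""

    def __init__(self) -> None:
        self.docs: Set[str] = set()

    def add(self, web_data: Iterable[str], code_results: Iterable[str]) -> None:
        # Set union: duplicates collapse, so the KB only grows with new items
        self.docs |= set(web_data) | set(code_results)

    def retrieve(self, query: str, k: int = 2) -> List[str]:
        # Naive lexical overlap, standing in for embedding similarity;
        # ties are broken alphabetically so results are deterministic
        q = set(query.lower().split())
        ranked = sorted(self.docs,
                        key=lambda d: (-len(q & set(d.lower().split())), d))
        return ranked[:k]


kb = KnowledgeBase()
kb.add(["Hudson Yards Vessel overview page"], ["computed site area: 19.76 acres"])
kb.add(["Hudson Yards Vessel overview page"], [])  # no-op: already stored
print(len(kb.docs), kb.retrieve("hudson yards vessel", k=1))
```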

Experimental Results

Test Datasets

| Benchmark | Focus Area | Key Challenge |
|---|---|---|
| GAIA | Multi-step reasoning | Complex tool chains |
| WebWalkerQA | Web navigation | Long-horizon search |
| BrowseComp | Deep browsing | Hundreds of pages per query |

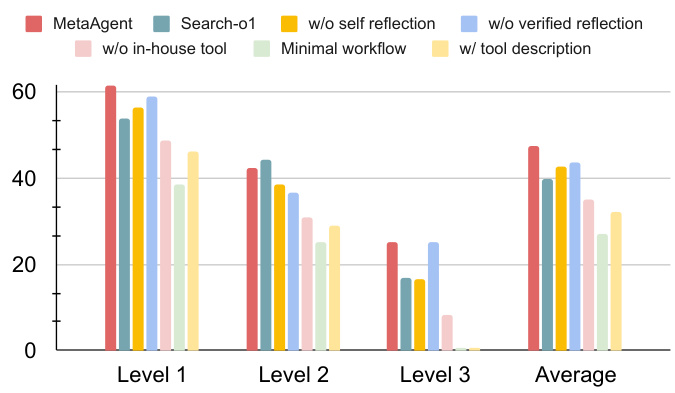

Performance Comparison

Accuracy (%) on each benchmark:

| Method Type | Example | GAIA | WebWalkerQA | BrowseComp |
|---|---|---|---|---|
| Direct LLM | Qwen2.5 | 13.6 | 3.1 | 0.0 |
| Retrieval-Augmented | RAG | 32.0 | 31.2 | 0.0 |
| Expert Workflow | Search-o1 | 39.8 | 34.1 | 1.9 |
| End-to-End Trained | WebThinker | 48.5 | 46.5 | 2.7 |
| MetaAgent | QwQ-32B | 47.6 | 47.9 | 7.1 |

Figure 3: Ablation study results

Case Study: Building Identification

Task: Find a building that:

- Opened in the 2010s and closed before 2023
- Has a 15 m base width and a length of 1-3 km
- Was designed by an architect whose studio was founded in the 1990s
- Occupies a 5-10 acre site
- Includes parts made in Europe

Solution Process:

- First attempt:
  - Search: “2010s opened 2023 closed building”
  - Found a bridge in Shanghai as a candidate
  - Self-reflection: site size mismatch (19.76 acres, outside the 5-10 acre range)
- Second attempt:
  - Targeted search: “Hudson Yards Vessel”
  - Verified all constraints
  - Final answer: Copper
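The self-reflection in the first attempt amounts to checking a candidate against each constraint and rejecting it on a mismatch. The minimal sketch below encodes only the site-size constraint, because 19.76 acres is the one measured value the case study reports; the `Candidate` fields, the constraint label, and the candidate name are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Candidate:
    name: str
    site_acres: float


# Each constraint maps a label to a predicate; only site size is encoded here
CONSTRAINTS: Dict[str, Callable[[Candidate], bool]] = {
    "5-10 acre site": lambda c: 5 <= c.site_acres <= 10,
}


def failed_constraints(candidate: Candidate) -> List[str]:
    """Return the constraints the candidate violates (empty list = accept)."""
    return [label for label, ok in CONSTRAINTS.items() if not ok(candidate)]


first_try = Candidate(name="Shanghai bridge candidate", site_acres=19.76)
print(failed_constraints(first_try))  # → ['5-10 acre site']
```

The other constraints (opening and closing years, dimensions, architect, origin of parts) would be added as further predicates in the same dictionary.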

Technical Advantages

| Feature | Traditional Workflows | End-to-End Training | MetaAgent |
|---|---|---|---|
| Adaptability | Low | Medium | High |
| Data Needs | Low | High | Minimal |
| Knowledge Updates | Difficult | Difficult | Natural |
| Cross-Task Performance | Weak | Medium | Strong |

Common Questions

Q: Does MetaAgent need lots of labeled data?

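A: No. It learns through task execution and self-reflection rather than from a manually labeled training set. Verified reflection does use known answers when they are available, but only to check completed tasks.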

Q: How do I deploy MetaAgent?

A: Basic requirements:

- Central reasoning agent (QwQ-32B recommended)
- Tool router (configurable for web search and code execution)
- Knowledge base storage (BGE-M3 embeddings)
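The three components can be captured in a small configuration object. Every field name, default path, and tool label below is hypothetical, chosen only to mirror the list above; the model names are plain labels, not exact model-hub identifiers.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ToolRouterConfig:
    # Tools the router may dispatch help requests to (illustrative names)
    tools: List[str] = field(default_factory=lambda: ["web_search", "code_execution"])


@dataclass
class KnowledgeBaseConfig:
    embedding_model: str = "BGE-M3"  # embedding model named in the FAQ
    storage_path: str = "./kb"       # hypothetical local directory


@dataclass
class MetaAgentConfig:
    central_agent_model: str = "QwQ-32B"  # recommended reasoning model
    tool_router: ToolRouterConfig = field(default_factory=ToolRouterConfig)
    knowledge_base: KnowledgeBaseConfig = field(default_factory=KnowledgeBaseConfig)

    def validate(self) -> None:
        # A router with no tools cannot answer any help request
        if not self.tool_router.tools:
            raise ValueError("tool_router.tools must not be empty")


config = MetaAgentConfig()
config.validate()
print(config.central_agent_model, config.tool_router.tools)
```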

Q: Does it support multiple languages?

A: Yes. MetaAgent automatically adapts to the user’s language.

Q: How is performance evaluated?

A: Three key metrics:

- Task completion accuracy
- Tool call efficiency
- Experience accumulation rate
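The answer above names the metrics without defining them. One plausible reading, sketched here as an assumption: accuracy is the fraction of tasks answered correctly, tool-call efficiency is correct answers per tool call, and experience accumulation rate is the average number of new experience notes added per task. The `TaskLog` record is hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class TaskLog:
    correct: bool      # was the final answer right?
    tool_calls: int    # tool invocations made while solving
    new_insights: int  # experience notes added after the task


def evaluate(logs: List[TaskLog]) -> Dict[str, float]:
    """Compute the three metrics under the definitions assumed above."""
    if not logs:
        raise ValueError("need at least one task log")
    n = len(logs)
    n_correct = sum(log.correct for log in logs)
    total_calls = sum(log.tool_calls for log in logs)
    return {
        "accuracy": n_correct / n,
        # Correct answers per tool call; higher means fewer wasted calls
        "tool_call_efficiency": n_correct / total_calls if total_calls else 0.0,
        "experience_rate": sum(log.new_insights for log in logs) / n,
    }


logs = [
    TaskLog(True, 4, 2),
    TaskLog(False, 6, 1),
    TaskLog(True, 2, 0),
    TaskLog(True, 8, 1),
]
print(evaluate(logs))
```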

Conclusion

MetaAgent demonstrates a new paradigm for AI development through:

- Low initial requirements: starts with minimal capabilities
- Continuous improvement: learns through task execution
- Knowledge retention: builds persistent memory
- Tool optimization: dynamically adjusts tool usage

This framework shows promise for real-world applications requiring adaptive problem-solving, particularly in knowledge discovery scenarios.