Building an Enterprise AI Assistant: Moltbot AWS Deployment, Feishu Integration, and Multi-Model Setup Guide

With the widespread adoption of Large Language Models (LLMs), many teams are no longer satisfied with interacting with AI inside a web browser; they want to embed AI capabilities deeply into their daily workflows. However, turning a “toy” chatbot into an “enterprise-grade” AI assistant involves significant hurdles: security audits, 24/7 availability, and multi-platform integration.

Drawing on recent hands-on practice, this guide walks through using the Amazon Web Services (AWS) one-click deployment solution to stand up your own AI assistant, Moltbot (formerly Clawdbot), in about 8 minutes. It then covers integrating with Feishu (Lark), configuring MiniMax to reduce the risk of account bans, and connecting Kimi K2.5, so you can build a stable, controllable, and cost-effective enterprise AI solution.

1. Why Choose Moltbot and the Official AWS Solution?

Core Question: How can you securely and rapidly build a long-term, controllable AI assistant in an enterprise environment?

What you need is not just another chatbot, but an AI assistant that can run 24/7, be audited, and integrate deeply with enterprise collaboration tools. Moltbot is an open-source project designed for exactly this. It connects to platforms such as WhatsApp, Slack, Discord, Telegram, and Feishu (Lark), which is widely used in China, so teams can complete complex tasks such as Q&A, information processing, and browser automation through familiar chat interfaces.

When it comes to deployment, we strongly recommend the official AWS one-click solution over piecing together servers yourself. Many teams get stuck on the “running securely” hurdle when trying to self-host. The value of the official solution lies in turning key enterprise-ready capabilities into default settings, drastically reducing security risks and maintenance overhead.

1.1 Core Advantages of Enterprise-Grade Deployment

AWS’s standardized solution is built on core services such as Amazon EC2, IAM, CloudFormation, and AWS Systems Manager (SSM), and offers several advantages:

- Compute and Security Foundation: Built on AWS’s global infrastructure, so data storage and computation more easily meet enterprise compliance requirements and remain under your control.
- No API Key Management: The instance calls Amazon Bedrock through an IAM Role, so no sensitive API Keys need to be hardcoded in code or config files. Because the role supplies short-lived credentials, there is no long-lived key to leak.
- Secure Access Mechanism: Access and port forwarding go through SSM Session Manager, so no inbound public ports need to be opened. This “zero public exposure” approach aligns with enterprise security policies and shrinks the attack surface.
- One-Click Automation: A CloudFormation template creates and configures all related resources, giving a clear, repeatable deployment chain.
- Flexible Model Switching: Switch between Claude, Nova, DeepSeek, and other Bedrock-supported models without changing code, so you can pick the best model for each business scenario.
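To see what “no API Key” and “model switching” look like in practice, here is a minimal sketch meant to run on the deployed instance (for example, inside an SSM session). It relies only on the credentials the AWS CLI picks up from the instance's IAM Role. Assumptions: AWS CLI v2 with the `bedrock-runtime converse` command, a role that is allowed to invoke Bedrock models, and a model ID that is enabled in your Region. The helper names `run`, `whoami_aws`, and `ask_bedrock` are hypothetical, and setting `DRY_RUN=1` prints the commands instead of executing them.

```bash
# Sketch only: hypothetical helpers; assumes AWS CLI v2 on an EC2 instance whose
# IAM role may call Amazon Bedrock. No access keys are configured anywhere.

# Execute a command, or just print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# With an instance profile, the ARN should contain "assumed-role", showing that
# temporary role credentials (not a stored key) are in use.
whoami_aws() {
  run aws sts get-caller-identity --query Arn --output text
}

# Send one prompt through the Bedrock Converse API. Switching models only means
# passing a different model ID; the request shape stays the same.
# Note: this sketch does not escape double quotes inside the prompt.
ask_bedrock() {
  local model_id="$1" prompt="$2"
  run aws bedrock-runtime converse \
    --model-id "$model_id" \
    --messages "[{\"role\":\"user\",\"content\":[{\"text\":\"$prompt\"}]}]" \
    --query 'output.message.content[0].text' \
    --output text
}
```

For example, `ask_bedrock amazon.nova-lite-v1:0 "Summarize today's tasks"` and the same call with a Claude model ID differ only in the first argument. Available model IDs vary by Region, and some newer models must be called through an inference profile ID, so copy the exact ID from the Bedrock console.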

1.2 Use Cases: Who Should Pilot This First?

Moltbot is well suited to teams that want to validate AI's value in a small, well-defined scenario. Piloting it in one specific business workflow lets you see the ROI (Return on Investment) quickly. Typical pilot scenarios include:

- Marketing & Strategy: Automated tracking of competitive intelligence and generation of daily industry briefs.
- Sales & Customer Support: A rapid-response tool for high-frequency questions that helps with document retrieval and draft generation to speed up replies.
- Executive Assistant: Aggregating information across channels, preparing for meetings, and sending smart reminders for to-do items.

Author’s Reflection:

What turns a tool into an assistant is mostly two things: being “always on” and not depending on any one person's environment. If an AI assistant only works while someone's personal computer is switched on, it is just a tool. Only when it stands by in the cloud 24/7 and responds instantly to messages on the team's collaboration platform does it truly become a member of the team.

1.3 The 8-Minute Rapid Deployment Walkthrough

If you want to try it quickly, follow these three steps to get Moltbot running in your own AWS account in about 8 minutes.

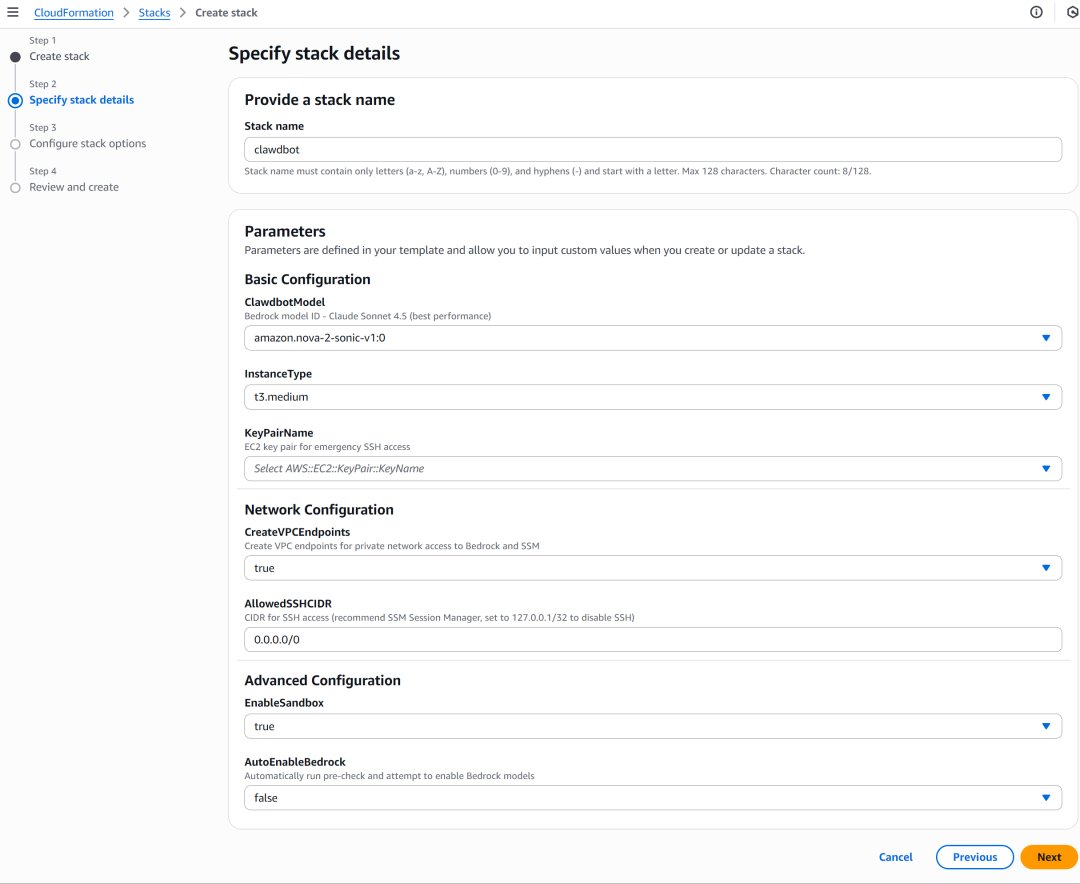

Step 1: Launch CloudFormation Deployment

Click the official deployment button to go directly to the CloudFormation page. In the configuration form, you only need to select an EC2 Key Pair (for emergency maintenance login). The creation of all other resources (VPC, Security Groups, IAM Roles, EC2 instances) is handled automatically by the template.
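If you prefer the command line to the console button, the same launch can be sketched with `aws cloudformation create-stack`. Two details are assumptions to check against the official template: the template URL (copy it from the link behind the deployment button) and the parameter key `KeyPairName`, which is only a placeholder for whatever the template's key-pair parameter is actually called. Both IAM capabilities are acknowledged because the template creates IAM roles. The helper names are hypothetical, and `DRY_RUN=1` prints the command instead of running it.

```bash
# Sketch: CLI equivalent of the one-click launch. The template URL and the
# "KeyPairName" parameter key are placeholders; check the official template.

# Execute a command, or just print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Create the stack; CloudFormation then provisions the VPC, security group,
# IAM role, and EC2 instance defined in the template.
launch_moltbot_stack() {
  local stack_name="$1" template_url="$2" key_pair="$3"
  run aws cloudformation create-stack \
    --stack-name "$stack_name" \
    --template-url "$template_url" \
    --parameters "ParameterKey=KeyPairName,ParameterValue=$key_pair" \
    --capabilities CAPABILITY_IAM CAPABILITY_NAMED_IAM
}
```

Usage: `launch_moltbot_stack moltbot "<template URL from the button>" my-key-pair`.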

Step 2: Wait ~8 Minutes and Check Outputs

The CloudFormation stack creation takes about 8 minutes. Once complete, open the “Outputs” tab in the CloudFormation console. This displays critical instructions for subsequent access and configuration, including the Instance ID and access methods.
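From a terminal with AWS credentials for the same account, the wait and the contents of the Outputs tab can also be fetched with the AWS CLI. A minimal sketch with a hypothetical helper name; the stack name is whatever you chose at launch, and `DRY_RUN=1` prints the commands instead of running them.

```bash
# Sketch: wait for stack creation, then print the stack Outputs.

# Execute a command, or just print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

wait_and_show_outputs() {
  local stack_name="$1"
  # Polls until the stack reaches CREATE_COMPLETE; returns non-zero if creation fails.
  run aws cloudformation wait stack-create-complete --stack-name "$stack_name" || return 1
  # Same information as the console's Outputs tab (instance ID, access commands, ...).
  run aws cloudformation describe-stacks \
    --stack-name "$stack_name" \
    --query 'Stacks[0].Outputs[].[OutputKey,OutputValue]' \
    --output table
}
```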

Step 3: Access Web UI via SSM

Follow the instructions in Outputs: install the Session Manager plugin for the AWS CLI on your local machine and run the port forwarding command provided there. This securely maps a local port (e.g., 8080) to the Moltbot service in the cloud. Then open the specified URL in your local browser to reach the Moltbot Web UI and start configuring.
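The Outputs tab gives the exact command for your stack; a typical Session Manager port-forwarding command looks roughly like the sketch below. Assumptions: AWS CLI v2 with the Session Manager plugin installed, local AWS credentials that are allowed to start sessions on the instance, and a remote port taken from Outputs (8080 only follows the example in the text). The helper name is hypothetical, and `DRY_RUN=1` prints the command instead of running it.

```bash
# Sketch: forward a local port to the Moltbot service on the instance via SSM.

# Execute a command, or just print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

ssm_port_forward() {
  local instance_id="$1" remote_port="$2" local_port="${3:-$2}"
  # The AWS CLI hands the session to this plugin; warn early if it is missing.
  if ! command -v session-manager-plugin >/dev/null 2>&1; then
    echo "warning: session-manager-plugin not found on PATH" >&2
  fi
  # No inbound security-group rule is needed: the SSM agent on the instance
  # connects outbound to the SSM service.
  run aws ssm start-session \
    --target "$instance_id" \
    --document-name AWS-StartPortForwardingSession \
    --parameters "{\"portNumber\":[\"$remote_port\"],\"localPortNumber\":[\"$local_port\"]}"
}
```

Usage: `ssm_port_forward i-0123456789abcdef0 8080`, then open `http://localhost:8080` while the session stays open.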

2. Deep Integration: Connecting Moltbot to the Feishu (Lark) Workflow

Core Question: How do you seamlessly integrate a cloud AI assistant into the Feishu workflow your team uses daily?

Once Moltbot is deployed, the easiest way to get the team using it is to connect it to an instant messaging tool they already use. For teams in China, Feishu (Lark) is usually the first choice. The steps below connect Moltbot to Feishu.

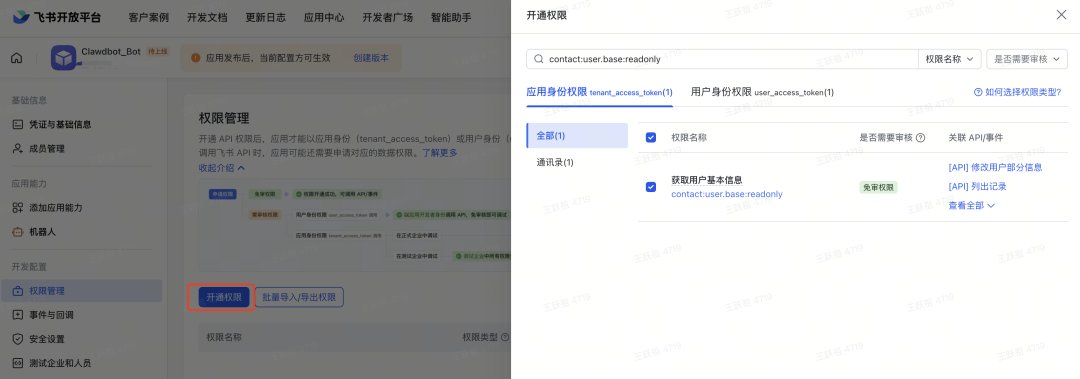

2.1 Feishu Side: Create App and Bot

First, you need to create a self-built app on the Feishu Open Platform and configure its bot capabilities.

- Create a Self-Built App: Log in to the Feishu Open Platform developer console, create a new enterprise self-built app, and note its `App ID` and `App Secret`.
- Add Bot Capability: On the app’s features page, enable the “Bot” capability so the app can receive and send messages.
- Set Permissions: Grant the API permissions your use case needs. Reading group information, receiving messages, and sending messages are the basic set.

2.2 Moltbot Side: Configure Feishu Channel

Next, you need to enter Feishu credentials in Moltbot and establish the connection.

(1) Obtain and Configure Credentials

Use the clawdbot CLI tool or edit the config file directly to enter Feishu credentials. Key configurations include App ID, App Secret, and the enabled state.

```bash
# Set Feishu App ID
clawdbot config set channels.feishu.appId "cli_xxxxx"

# Set Feishu App Secret
clawdbot config set channels.feishu.appSecret "your_app_secret"

# Enable Feishu Channel
clawdbot config set channels.feishu.enabled true
```

(2) Copy and Paste the Credentials

After copying the credentials from the Feishu developer console, paste them into Moltbot’s configuration interface or config file (or set them with the commands above). This gives Moltbot what it needs to authenticate to Feishu as your app.
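Before blaming Moltbot for a failed connection, you can check that the App ID and App Secret themselves are valid by requesting a tenant access token directly from the Feishu Open Platform; a successful response contains `"code":0` and a `tenant_access_token`. A minimal sketch assuming `curl` is available; the helper name is hypothetical, and Lark (international) tenants would point `FEISHU_BASE` at `https://open.larksuite.com` instead.

```bash
# Sketch: validate Feishu app credentials outside Moltbot.
# Feishu endpoint by default; Lark (international) tenants use https://open.larksuite.com.
FEISHU_BASE="${FEISHU_BASE:-https://open.feishu.cn}"

# Execute a command, or just print it when DRY_RUN is set.
# Careful: dry-run output includes the App Secret.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

feishu_check_credentials() {
  local app_id="$1" app_secret="$2"
  run curl -sS -X POST "$FEISHU_BASE/open-apis/auth/v3/tenant_access_token/internal" \
    -H 'Content-Type: application/json; charset=utf-8' \
    -d "{\"app_id\":\"$app_id\",\"app_secret\":\"$app_secret\"}"
}
```

A non-zero `code` in the response usually points to a wrong App ID/Secret pair or the wrong platform domain.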

2.3 Message Event Subscription and Verification

The final step is configuring Feishu’s event callbacks so it can push user messages to Moltbot in real-time.

- Event Subscription Config: In the app's event subscription/callback settings, choose to receive events over a long connection and subscribe to the message-received event (`im.message.receive_v1`). In this mode Moltbot opens an outbound connection to Feishu, so no public callback URL is required, which suits instances in private subnets or reached only via SSM.
- Verification and Publish: Complete the signature verification and retry settings, then publish the app.
- Integration Testing: @mention the bot in a Feishu group chat, or start a private chat with it, and check that it responds correctly. You can also send card messages to test rich-text interactions.
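If the bot stays silent, it can help to test the outbound path on its own: obtain a tenant access token from Feishu's `/open-apis/auth/v3/tenant_access_token/internal` endpoint using the app's App ID and App Secret, then post a plain text message to the test group through the messaging API. A minimal sketch, assuming the bot has already been added to the group, the send-message permission is granted, and you know the group's `chat_id` (an `oc_...` value); the helper name is hypothetical.

```bash
# Sketch: send a text message as the bot, bypassing Moltbot entirely.
FEISHU_BASE="${FEISHU_BASE:-https://open.feishu.cn}"

# Execute a command, or just print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# "content" must itself be a JSON string, hence the escaped inner quotes.
# This sketch does not escape quotes inside the message text.
feishu_send_text() {
  local token="$1" chat_id="$2" text="$3"
  run curl -sS -X POST "$FEISHU_BASE/open-apis/im/v1/messages?receive_id_type=chat_id" \
    -H "Authorization: Bearer $token" \
    -H 'Content-Type: application/json; charset=utf-8' \
    -d "{\"receive_id\":\"$chat_id\",\"msg_type\":\"text\",\"content\":\"{\\\"text\\\":\\\"$text\\\"}\"}"
}
```

If this message arrives but Moltbot still does not answer, the problem is more likely on the inbound side (event subscription or long connection) than in permissions for sending.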

Author’s Reflection:

When configuring Feishu integration, the biggest hurdle is often not code but permission management and callback configuration. With long connection mode in particular, you must make sure the cloud instance can make outbound connections to Feishu. The setup feels tedious at first, but once connected the user experience is very smooth, and users never see the backend complexity.

3. Model Advancement: Configuring MiniMax to Avoid Bans and Reduce Costs

Core Question: When integrating LLMs, how do you avoid the risk of personal subscription account bans and achieve cost control?

With Moltbot's rising popularity, many users are connecting it to various model providers. However, plugging credentials from personal Claude or ChatGPT subscriptions into an automated assistant carries real risk: there are numerous reported cases of vendors such as Anthropic banning accounts used in ways that violated their Terms of Service. As a result, many teams now start with a compliant, stable, and cost-effective provider such as MiniMax.

3.1 Obtaining the API Key

MiniMax is divided into a Domestic (China) version and an International version. Both can connect to Moltbot, but registration addresses and configuration details differ.

- Domestic Version: Suited to teams whose business is mainly in China; offers fast access from within China.
- International Version: Suited to scenarios that involve overseas business or need specific models.

After registering and choosing a suitable payment plan, you can retrieve the API Key in the Account Management interface. Please keep this Key safe.

3.2 Configuring Moltbot to Connect MiniMax

Depending on the version you choose, the configuration process falls into two cases.

Case A: Using International API (Standard Process)

This is the simplest configuration method for scenarios using the official standard interface.

- Start the configuration wizard from the terminal: `clawdbot configure`
- Go to `Model/auth` and select `MiniMax M2.1`.
- Paste your API Key.
- When prompted for “Models in /model picker (multi-select)”, press Enter; the system selects the default model automatically.
- When the wizard reports “Updated ~/.clawdbot/clawdbot.json”, select Continue to finish.

Case B: Using Domestic API

The Domestic API requires manually editing the config file to adapt to specific endpoints and auth headers.

- Generate the Base Config First: Run through the “Case A” steps once, entering the Key but not setting MiniMax as the default. This lets the system generate the base JSON structure so you don't have to write the config file by hand.
- Edit the Config File: Open the config file in an editor such as nano: `nano ~/.clawdbot/moltbot.json` (if the wizard reported updating `~/.clawdbot/clawdbot.json`, edit that file instead).
- Change the Address Manually: Find the `baseUrl` field, change it to the Domestic API service address, and add the `"authHeader": true` setting required for authentication.
- Save and Exit: Press `Ctrl+O` and Enter to save, then `Ctrl+X` to exit the editor.
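Because one missing comma can stop the gateway from loading the file, it can be worth backing up the config before editing and checking that it is still valid JSON afterwards. A minimal sketch with hypothetical helper names; `CONFIG` defaults to the path used in this guide and should point at whichever file the wizard reported updating. It only locates the `baseUrl` lines; take the domestic address itself from the MiniMax domestic console. Validation assumes `python3` is installed.

```bash
# Sketch: hypothetical helpers around a manual edit of the Moltbot config file.
# Adjust CONFIG if the wizard reported a different path.
CONFIG="${CONFIG:-$HOME/.clawdbot/moltbot.json}"

# Keep a timestamped copy so a bad edit can be rolled back with cp.
backup_config() {
  local backup
  backup="$CONFIG.bak.$(date +%Y%m%d%H%M%S)"
  cp "$CONFIG" "$backup" && echo "$backup"
}

# Show every baseUrl line with its line number, so you know where to edit.
show_base_urls() {
  grep -n '"baseUrl"' "$CONFIG"
}

# Return non-zero (and print the parse error) if the file is no longer valid JSON.
validate_config() {
  python3 -m json.tool "$CONFIG" >/dev/null && echo "config OK"
}
```

A typical sequence is `backup_config`, `show_base_urls`, edit the file, then `validate_config` before restarting.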

Finally, restart the service so the new config takes effect:

```bash
clawdbot gateway restart
```

3.3 Real-World Case: Building a Multi-Timezone Mini-App in 2 Minutes

After configuration, we can verify MiniMax’s tool execution accuracy with a real task.

Scenario: We need a small web app that displays global multi-timezone times.

Operation Steps:

- Ask for a Plan: First, ask Moltbot in the chat window how to implement this. In this example, it suggested deploying a static page with Nginx.
- Execute the Deployment: Let the AI install Nginx and write the code automatically. In this run, the whole process, from installing Nginx to generating the HTML/CSS, took about 2 minutes without errors.
- Verify the Results: Open the generated page in a browser and check the times shown for the different time zones.
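The page itself was written by the agent, so its code is not reproduced here. To spot-check the times it displays, you can compare them with the server's own clock for the same zones. A small sketch with a hypothetical helper name, using the standard `TZ` variable with `date`; zone names come from the system's time zone database.

```bash
# Sketch: print the current time in each IANA time zone given as an argument.
world_clock() {
  local tz
  for tz in "$@"; do
    # TZ applies only to this one date call.
    printf '%-20s %s\n' "$tz" "$(TZ="$tz" date '+%Y-%m-%d %H:%M %Z')"
  done
}
```

For example, run `world_clock UTC Asia/Shanghai America/New_York` on the instance next to the page, and `curl -I http://localhost/` to confirm Nginx is answering.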

This case shows how efficiently Moltbot and MiniMax handle everyday tasks. Compared with pricier models such as Claude Opus or GPT-4, this combination is highly cost-effective for non-production demos and small tools.

Author’s Reflection:

Many developers doubt the capability of domestic models, but in specific tool-use scenarios MiniMax often exceeds expectations. In structured tasks such as writing code or deploying services, its accuracy and speed fully meet the needs of day-to-day assisted development. The lesson: when choosing a model, look beyond benchmark scores and ask whether it is “good enough” and cost-efficient for the specific scenario.

4. Quick Access: Connecting Kimi K2.5 to Moltbot

Core Question: How do you leverage Kimi K2.5’s coding power to rapidly enhance Moltbot’s code generation capabilities?

With the buzz around Kimi K2.5, many users want to integrate its powerful coding abilities into Moltbot. Here is the step-by-step guide to help you get connected in minutes.

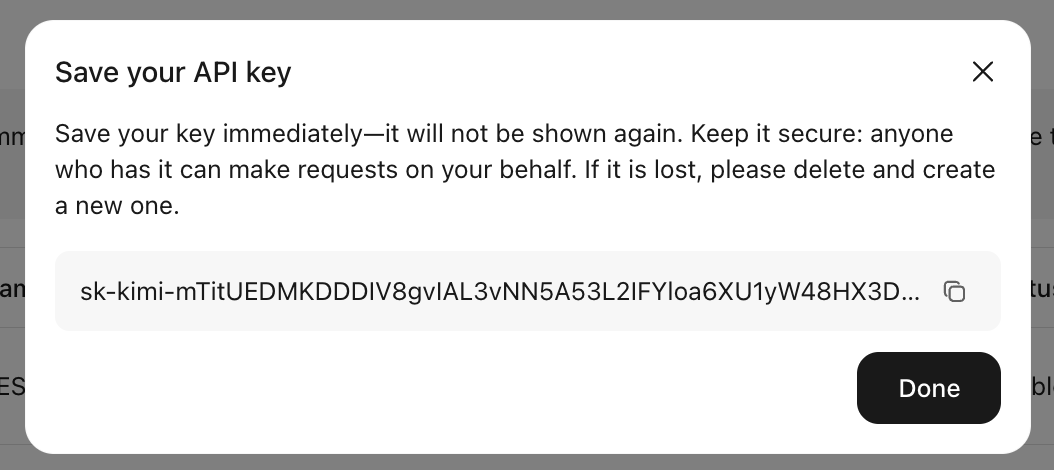

4.1 Get Kimi Code Access

- Get a Plan: Visit the Kimi Code platform and sign up for a plan that suits you.
- Copy the API Key: Get your dedicated API Key on the account page. Keys are usually displayed only once, so copy and save it immediately.

4.2 Install and Log In to Moltbot

If you haven’t installed Moltbot yet, use the official quick-start script:

```bash
curl -fsSL https://molt.bot/install.sh | bash
```

Running this command automatically downloads and installs the necessary components.
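On machines where piping a remote script straight into `bash` is not allowed, a common alternative is to download the installer first, read it, and only then run it. A minimal sketch with a hypothetical helper name and output file; it uses the same URL as above and does not execute anything itself. `DRY_RUN=1` prints the download command instead of running it.

```bash
# Sketch: fetch the installer to a file for review instead of piping it to bash.

# Execute a command, or just print it when DRY_RUN is set.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

fetch_installer_for_review() {
  local url="${1:-https://molt.bot/install.sh}"
  local dest="${2:-./moltbot-install.sh}"
  run curl -fsSL "$url" -o "$dest" || return 1
  # Nothing is executed here; the reader decides after reviewing the script.
  echo "Saved installer to $dest; review it, then run: bash $dest"
}
```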

When installation finishes and the configuration wizard appears, you can start setting up your agent (named “Jarvis” in this example).

4.3 Configure Kimi Model and Hooks

- Paste the API Key: Paste the API Key you obtained from Kimi Code into the configuration interface.
- Select the Model: In the model list, choose `kimi-code/kimi-for-coding`. Channel selection is optional at this point; you can skip it for now.
- Configure Hooks: Hooks let you customize session behavior; select the ones you need:
  - Inject markdown on startup: Injects README-like content when a session starts (useful for setting context and rules).
  - Command/operation logging: Records the commands and operations executed during the session for auditing and backtracking.
  - Context continuity: Saves a summary of the current session's context so you can pick up seamlessly in a new session.
- Restart the Service: If a Gateway is already running locally, restart it so the new config takes effect: `clawdbot gateway restart`
- Hatch the Bot: Run `clawdbot hatch` (or the corresponding start command), and the system redirects you to the bot's chat page.

At this point, you have successfully connected Kimi K2.5 and can start enjoying high-quality code generation and assistance services.

Author’s Reflection:

Moltbot's design philosophy is interesting: it uses the word “Hatch” for starting the bot, as if bringing a living digital assistant into being. Connecting Kimi K2.5 goes smoothly largely thanks to its standardized API and the clear configuration wizard. This reaffirms a point: good tools make complex configuration feel like filling in blanks, so users can focus on how to use the tool rather than how to install it.

5. Implementation Services: When Teams Need “Same-Day Out-of-the-Box”

Core Question: If the team lacks Ops or Dev resources, how can we quickly land and verify Moltbot’s value?

The deployment and configuration steps above are technically straightforward, but non-technical business teams and time-critical projects often get stuck on details. Common pain points include complex model permission setup, port forwarding that depends on each person's local environment, debugging callbacks for the various Channels, and not knowing which scenario to pilot.

To address these pain points, professional technical service providers (like Juyun Tech, an AWS Premier Tier Partner) offer “Same-Day Out-of-the-Box” technical service packages to help enterprises quickly bridge the technical gap.

5.1 Service Package A: Same-Day Out-of-the-Box (Recommended)

This is the quick-start solution most suitable for teams, aiming to show results on the very first day.

- Managed Deployment: Experts deploy Moltbot with the one-click template directly into your AWS account (or a managed account).
- Managed Integration: They connect one messaging entry point of your choice (Slack, Telegram, WhatsApp, or Discord).
- Demo Script: A “Pilot Scenario Demo” script guides you in showing the AI assistant’s capabilities to business stakeholders in 15 minutes.
- Standard Deliverables: Accessible URLs, detailed user manuals, and basic security recommendations.

Goal: Usable today, demo-able today, pilot-able today.

5.2 Service Package B: Enterprise Pilot Enhancement (1-2 Weeks)

For teams with higher governance and scaling needs, this package provides deeper support.

- Multi-Entry Integration: Connect more messaging platforms, especially internal enterprise tools such as Feishu or DingTalk.
- Basic Governance: Configure usage scope limits, group whitelists, and least-privilege permissions.
- Managed Operations (Optional): Log monitoring, alerting, version upgrade window management, and more.

5.3 Pilot Advice: Start with “1 Scenario”

Whether you self-host or buy a service package, focus on just one thing during the initial pilot:

- Pick 1 Entry: What is the team’s most-used communication tool (e.g., Slack or Feishu)?
- Lock 1 Scenario: Solve one specific pain point (e.g., “Competitive Intelligence Radar” or “Sales Support Speedup”).
- Verify Fast: Get it running and demo it on day one, then validate ROI within 1-2 weeks.

Only after validating value in a small scope should you consider expanding to more entries and applying it to more scenarios; this is the rational path for technical implementation.

Conclusion & Practical Summary

Moltbot (formerly Clawdbot) is not just an open-source AI chatbot; it is a powerful foundation for building enterprise-grade AI assistants. Through AWS’s CloudFormation one-click deployment solution, we solved infrastructure and security challenges; by integrating collaboration platforms like Feishu, we achieved workflow integration; and by flexibly configuring multi-models like MiniMax and Kimi K2.5, we balanced performance, security, and cost.

For technical teams, following the steps in this article, you can complete infrastructure setup in 8 minutes and gradually integrate various models. For business teams, leveraging professional technical services allows you to have a demo-able, pilot-ready AI assistant on the very same day. The key is to start small, letting AI truly become a part of team productivity.

Practical Summary / Action Checklist

- Deployment Prep: Make sure you have an AWS account and an EC2 Key Pair.
- Infrastructure: Click the CloudFormation link -> Wait ~8 mins for stack creation -> Note the info in Outputs.
- Local Access: Install the Session Manager plugin, run the port forwarding command, open the local Web UI.
- Feishu Integration: Create a Bot app on Feishu -> Configure Permissions -> Enter credentials in Moltbot -> Enable long connection callback.
- Config MiniMax: Register to get a Key -> International: `clawdbot configure` -> Select MiniMax M2.1 -> Enter Key; Domestic: Edit `~/.clawdbot/moltbot.json` -> Change `baseUrl` -> Add `authHeader` -> Restart the gateway.
- Config Kimi: Register with Kimi Code to get a Key -> Run the install script -> Select `kimi-code/kimi-for-coding` -> Configure the Hooks you need.
- Security Tip: Prefer IAM Role calls to Bedrock and avoid exposing API Keys; configure permission whitelists for production environments.

One-Page Summary

| Component/Stage | Recommended Solution | Key Steps/Commands | Core Value |
|---|---|---|---|
| Infrastructure | AWS CloudFormation | Click Deploy -> Select Key Pair -> Wait ~8 mins | Enterprise security foundation, no API Key management, no public port exposure |
| Collaboration Entry | Feishu (Lark) | Create Self-Built App -> Configure Bot Permissions -> Enter creds in Moltbot -> Enable Long Connection | Fits into the daily workflow, boosts team adoption |
| Model Choice (A) | MiniMax (International) | `clawdbot configure` -> Select MiniMax M2.1 -> Enter Key | Avoids Claude/ChatGPT ban risks, high cost-efficiency |
| Model Choice (B) | MiniMax (Domestic) | Edit `~/.clawdbot/moltbot.json` -> Change `baseUrl` -> Add `authHeader` -> Restart | Better access within China, adapts to the domestic interface |
| Model Choice (C) | Kimi K2.5 | Run install script (`https://molt.bot/install.sh`) -> Enter Key -> Select `kimi-code/kimi-for-coding` | Powerful code generation, ideal for dev assistance |
| Implementation Service | Juyun Service Package | Buy Package A -> Provide Account Info -> Wait for Delivery | Deployed, integrated, and demoed same-day; rapid ROI validation |

Frequently Asked Questions (FAQ)

Q1: Do I need to prepare my own model API Key to deploy Moltbot on AWS?

A: No. With the AWS-recommended Bedrock setup, the instance authenticates to Bedrock through its IAM Role, so there is no model API Key to manage or secure. (Third-party providers such as MiniMax or Kimi still require their own keys.)

Q2: Do I need to open public server ports for Moltbot deployment?

A: No. The official recommendation is to use SSM Session Manager for port forwarding and access. This method does not require opening any inbound rules in the security group, greatly enhancing security.

Q3: How long does it usually take from clicking deploy to Moltbot being usable?

A: It usually takes only about 8 minutes. Once the CloudFormation stack is created, follow the instructions in Outputs for local port forwarding to start using it.

Q4: What if our team doesn’t have Ops or Dev staff to complete these configurations?

A: Consider purchasing the “Same-Day Out-of-the-Box” technical service. Service providers can handle the full process of deployment, integration, and demo scripting, letting you see results on the very first day.

Q5: What are the main configuration differences between MiniMax Domestic and International versions?

A: The International version can be configured directly via the CLI wizard. The Domestic version requires manually editing the moltbot.json file after generating the base config to change the baseUrl to the domestic service address and add authHeader: true.

Q6: What is the purpose of Hooks when connecting Kimi K2.5?

A: Hooks let you customize session behavior. For example, “Inject markdown on startup” can preset prompts or rules, “Command/operation logging” records operation logs, and “Context continuity” carries context across multiple sessions.

Q7: Besides Feishu, what other platforms can Moltbot connect to?

A: Moltbot natively supports multiple mainstream platforms including WhatsApp, Slack, Discord, and Telegram. The configuration logic is similar to Feishu, all based on the Channel adaptation mechanism.

Q8: What scenario is recommended for an enterprise pilot?

A: We recommend “Pick one entry, pick one scenario.” For example, integrate Slack or Feishu, and choose “Competitive Intelligence Tracking” or “Customer Support Speedup” as a single pilot scenario. Get the process running and demoed first, then expand.