Elysia: Revolutionizing AI Data Interaction with Decision Tree-Powered Agents

The Current State of AI Chatbots and Their Limitations

Chatbots have become ubiquitous in today’s rapidly evolving AI landscape, yet most remain confined to a basic “text in, text out” paradigm. They cannot choose how to display results based on the content, they lack a deep understanding of the data they work with, and their decision-making is completely opaque. As a result, users rarely get a truly intelligent, interactive experience.

It was precisely to address these pain points that the Weaviate team developed Elysia—an open-source, decision tree-based Retrieval Augmented Generation (RAG) framework that redefines how humans interact with data through AI.

The Fundamental Limitations of Traditional RAG Systems

Most traditional RAG systems suffer from a fundamental flaw: they are essentially “blind.” These systems receive user questions, convert them into vectors, then look for some “similar” text and hope for the best results. This is like asking a blindfolded person to recommend a restaurant—they might get lucky, but are more likely to fail.

These systems typically also provide all available tools to the AI at once, which is as unrealistic as giving a toddler a complete toolbox and expecting them to build a bookshelf. The result is often AI confusion and ineffective problem-solving.

Elysia’s Innovative Solution

Elysia takes a completely different approach. It is not just a simple question-answering system, but an intelligent agent framework that understands data structure, intelligently selects tools, and dynamically decides how to present results.

Core Installation and Usage

Elysia’s installation process is extremely simple:

```shell
pip install elysia-ai
elysia start
```

These two commands start the complete web interface. Developers can also use Elysia as a Python library:

```python
from elysia import tool, Tree

tree = Tree()

# Register an async function as a tool the decision tree can choose to call
@tool(tree=tree)
async def add(x: int, y: int) -> int:
    return x + y

tree("What is the sum of 9009 and 6006?")
```

If you already have Weaviate data, usage is even simpler:

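```python
import elysia

tree = elysia.Tree()

# Ask about an existing Weaviate collection; the call returns the response and the retrieved objects
response, objects = tree(
    "What are the 10 most expensive items in the Ecommerce collection?",
    collection_names=["Ecommerce"],
)
```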

The Three Core Pillars of Elysia

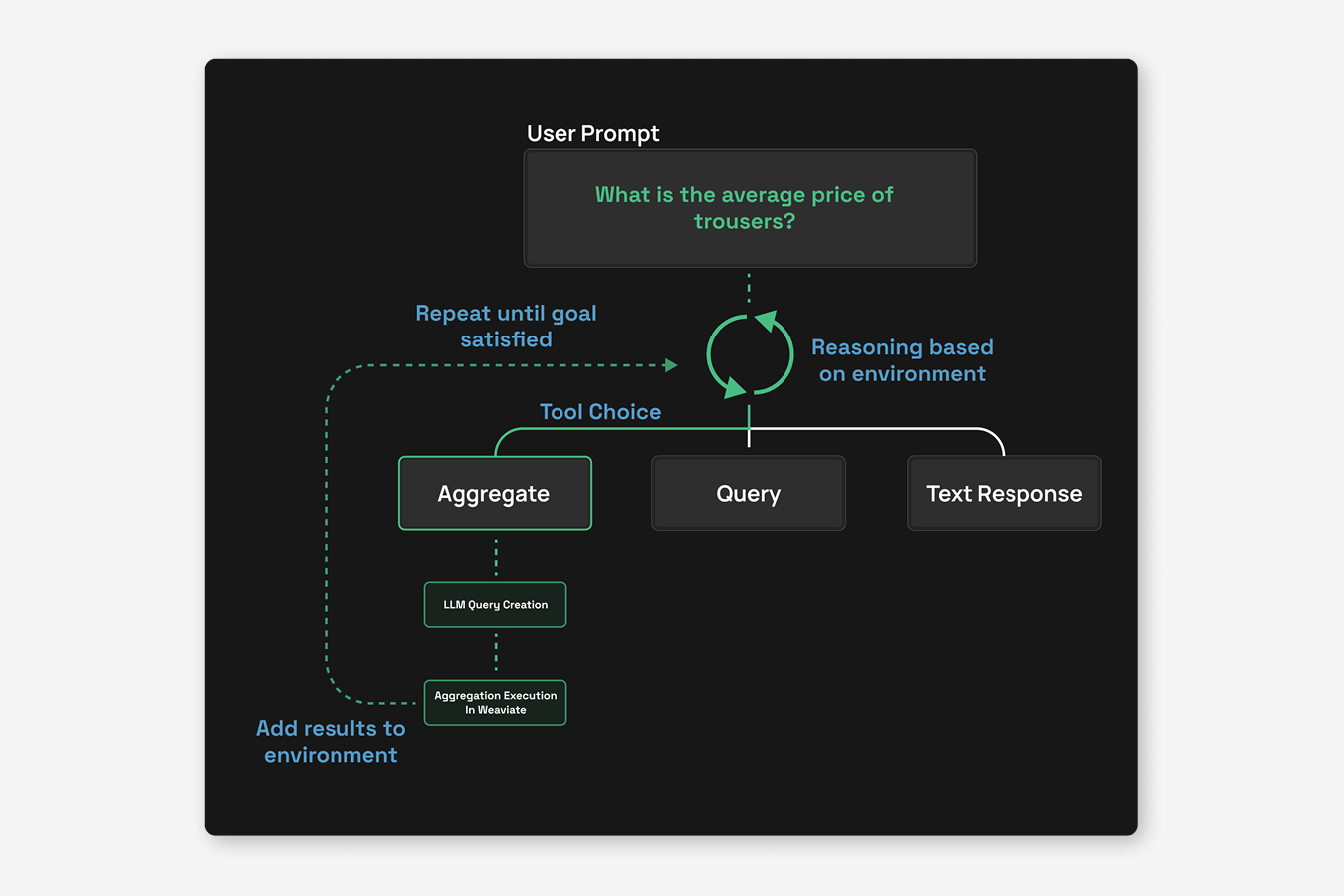

1. Decision Trees and Decision Agents

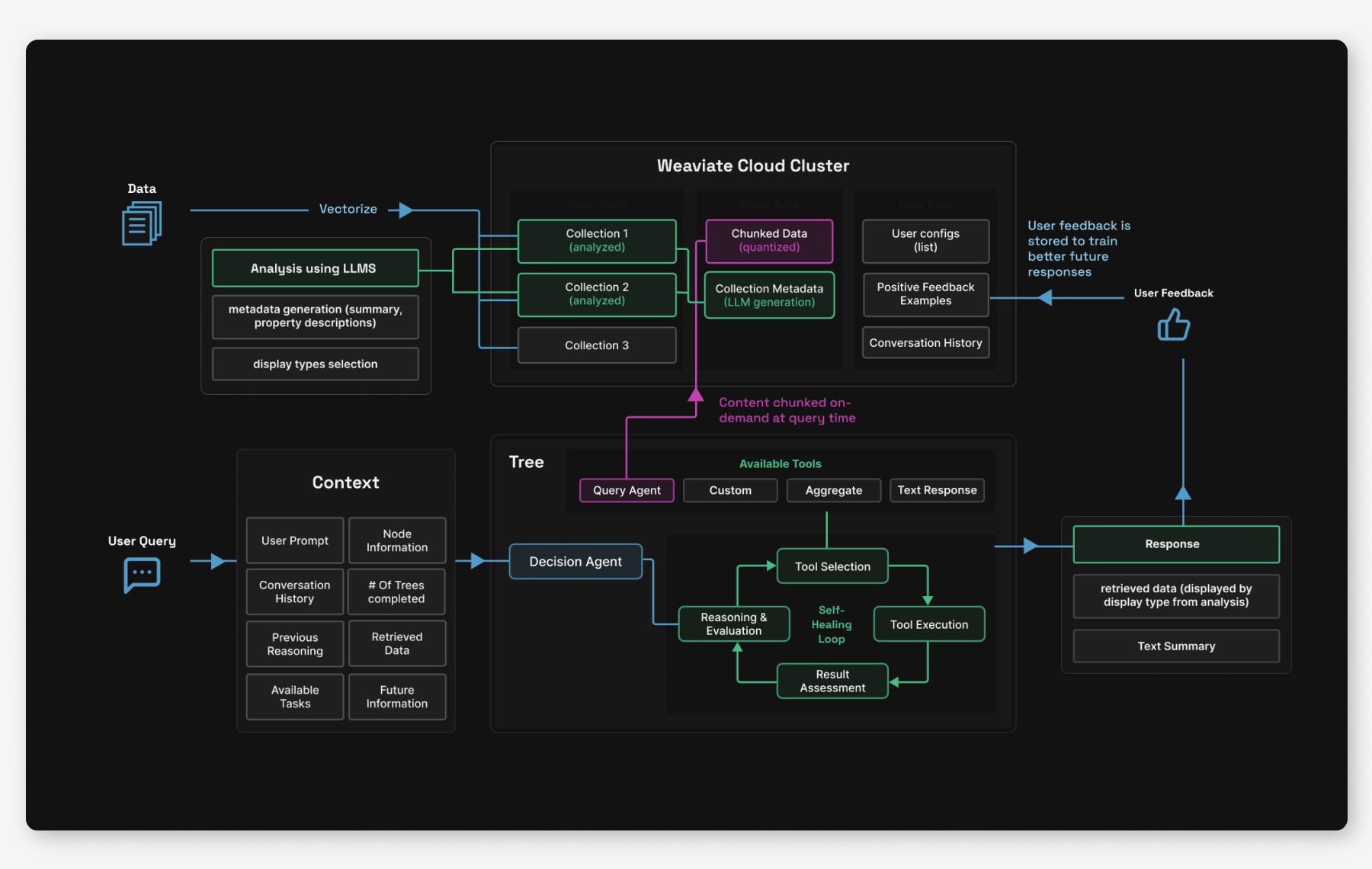

At the heart of Elysia is its decision tree architecture. Unlike simple agent platforms that access all possible tools at runtime, Elysia has a predefined network of nodes, each with corresponding operations. Each node in the tree is coordinated by a decision agent with global environmental awareness.

The decision agent evaluates its environment, available operations, past actions, and future actions to develop strategies for using the best tools. It also outputs reasoning that is passed to subsequent agents to continue working toward the same goal. Each agent understands the intentions of previous agents.
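The sketch below shows the traversal in code: a decision tree whose agents pass their reasoning forward. It is written in Python and is not Elysia's implementation. `Node`, `TreeState`, `traverse`, and `keyword_decide` are hypothetical names. The keyword-overlap decision function is a toy stand-in for the LLM-backed decision agent.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Node:
    """Hypothetical tree node: a description the decision agent reads, plus the
    child options (sub-branches or tools) it can choose between."""
    name: str
    description: str
    options: List["Node"] = field(default_factory=list)


@dataclass
class TreeState:
    """Shared environment that every decision agent in the tree can see."""
    user_prompt: str
    reasoning_log: List[str] = field(default_factory=list)  # handed to later agents
    actions_taken: List[str] = field(default_factory=list)


# Stand-in signature for an LLM-backed decision agent: (node, state) -> (choice, reasoning)
DecideFn = Callable[[Node, TreeState], Tuple[Node, str]]


def traverse(root: Node, state: TreeState, decide: DecideFn) -> List[str]:
    """Walk from the root until a leaf (a tool) is chosen, logging each decision."""
    node = root
    while node.options:
        choice, reasoning = decide(node, state)
        state.reasoning_log.append(f"{node.name} -> {choice.name}: {reasoning}")
        state.actions_taken.append(choice.name)
        node = choice
    return state.actions_taken


def keyword_decide(node: Node, state: TreeState) -> Tuple[Node, str]:
    """Toy decision agent: pick the option whose description shares the most
    words with the prompt. A real agent would prompt an LLM with the full state."""
    words = set(state.user_prompt.lower().split())
    best = max(node.options, key=lambda o: len(words & set(o.description.lower().split())))
    return best, f"best keyword match after {len(state.reasoning_log)} earlier step(s)"


# A small example tree: base -> {search -> {query, aggregate}, text_response}
query = Node("query", "search retrieve items from a collection")
aggregate = Node("aggregate", "count average statistics over a collection")
search = Node("search", "retrieve or aggregate data", [query, aggregate])
respond = Node("text_response", "reply to user in plain text")
root = Node("base", "root decision", [search, respond])
```

Every choice is appended to a shared `reasoning_log`. Each later decision can therefore see why the earlier ones were made.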

This tree structure also supports advanced error handling mechanisms and completion conditions. For example, when an agent determines that a task cannot be completed with available data, it can set an “impossible flag” during a tree step. If you ask about trouser prices in an e-commerce collection that only has jewelry, the agent will identify this mismatch and report back to the decision tree that the task is impossible.

Similarly, if Elysia queries and finds irrelevant search results, this does not constitute failure. When returning to the decision tree, the agent can recognize that it should query again with different search terms or less strict filters.

When tools encounter errors, for example because of connection issues or typos in generated queries, these errors are caught and propagated back through the decision tree. The decision agent can then choose whether to retry with corrections or try a completely different approach. To prevent infinite loops, the number of passes through the decision tree is strictly limited.
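The recovery behaviour described in the last three paragraphs can be sketched as a bounded loop. This is an illustrative assumption, not Elysia's code:

- `ToolResult`, `run_with_recovery`, `search_catalog`, and `revise_plan` are hypothetical names.
- The pass limit of 5 is arbitrary.
- `revise_plan` is a hard-coded stand-in for the decision agent choosing its next move.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple


@dataclass
class ToolResult:
    ok: bool
    data: Optional[list] = None
    error: Optional[str] = None
    impossible: bool = False  # hypothetical stand-in for the "impossible flag"


def run_with_recovery(tool: Callable[[dict], ToolResult],
                      plan: dict,
                      revise: Callable[[dict, ToolResult], dict],
                      max_passes: int = 5) -> Tuple[str, List[Tuple[dict, ToolResult]]]:
    """Re-enter the decision step until the tool succeeds, is judged impossible,
    or the pass limit is hit. max_passes=5 is an arbitrary illustrative value."""
    history = []
    for _ in range(max_passes):
        try:
            result = tool(plan)
        except Exception as exc:  # caught and fed back to the agent, not raised
            result = ToolResult(ok=False, error=f"{type(exc).__name__}: {exc}")
        history.append((plan, result))
        if result.impossible:
            return "impossible", history
        if result.ok and result.data:
            return "done", history
        plan = revise(plan, result)  # retry with corrections or looser filters
    return "limit_reached", history


# Toy collection that only contains jewelry (mirrors the trousers example above)
CATALOG = [
    {"name": "silver ring", "category": "jewelry", "price": 80},
    {"name": "gold chain", "category": "jewelry", "price": 250},
]


def search_catalog(plan: dict) -> ToolResult:
    if plan["max_price"] < 0:
        raise ValueError("max_price must be non-negative")  # e.g. a bad generated query
    if plan["category"] not in {item["category"] for item in CATALOG}:
        return ToolResult(ok=False, impossible=True)  # the data can never answer this
    hits = [i for i in CATALOG
            if i["category"] == plan["category"] and i["price"] <= plan["max_price"]]
    return ToolResult(ok=True, data=hits)  # may be empty, which is not a failure


def revise_plan(plan: dict, result: ToolResult) -> dict:
    """Toy stand-in for the decision agent's next choice."""
    new_plan = dict(plan)
    if result.error:
        new_plan["max_price"] = abs(plan["max_price"])  # correct the bad value
    else:
        new_plan["max_price"] = plan["max_price"] * 2  # relax the filter
    return new_plan
```

A trousers query returns `"impossible"` on the first pass. A jewelry query with a negative `max_price` takes three passes: it is corrected, then relaxed, then finds a result.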

This structure provides developers with great flexibility. Users can add custom tools and branches, making the tree as complex or simple as needed. Tools can be configured to run automatically based on specific criteria—for example, a summarization tool might activate when the chat context exceeds 50,000 tokens. Other tools can remain hidden until certain conditions are met, only appearing as options when relevant to the current state.
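This is a minimal sketch of tools that either run automatically or stay hidden until relevant. It assumes each tool carries two predicates over the current tree state. `ToolSpec`, `plan_step`, and the `context_tokens`/`has_results` keys are hypothetical. Only the 50,000-token threshold comes from the text above.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Env = dict  # hypothetical tree state, e.g. {"context_tokens": int, "has_results": bool}


@dataclass
class ToolSpec:
    name: str
    # Hidden from the decision agent until this returns True
    is_available: Callable[[Env], bool] = lambda env: True
    # Runs automatically, without an agent decision, when this returns True
    run_if: Callable[[Env], bool] = lambda env: False


SUMMARIZE_THRESHOLD = 50_000  # tokens, the example threshold from the text

TOOLS = [
    ToolSpec("query"),
    ToolSpec("summarize_context",
             is_available=lambda env: False,
             run_if=lambda env: env["context_tokens"] > SUMMARIZE_THRESHOLD),
    ToolSpec("visualize", is_available=lambda env: env["has_results"]),
]


def plan_step(env: Env, tools: List[ToolSpec] = TOOLS) -> Tuple[List[str], List[str]]:
    """Return (tools to auto-run now, tools the decision agent may choose from)."""
    auto_run = [t.name for t in tools if t.run_if(env)]
    visible = [t.name for t in tools if t.is_available(env)]
    return auto_run, visible
```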

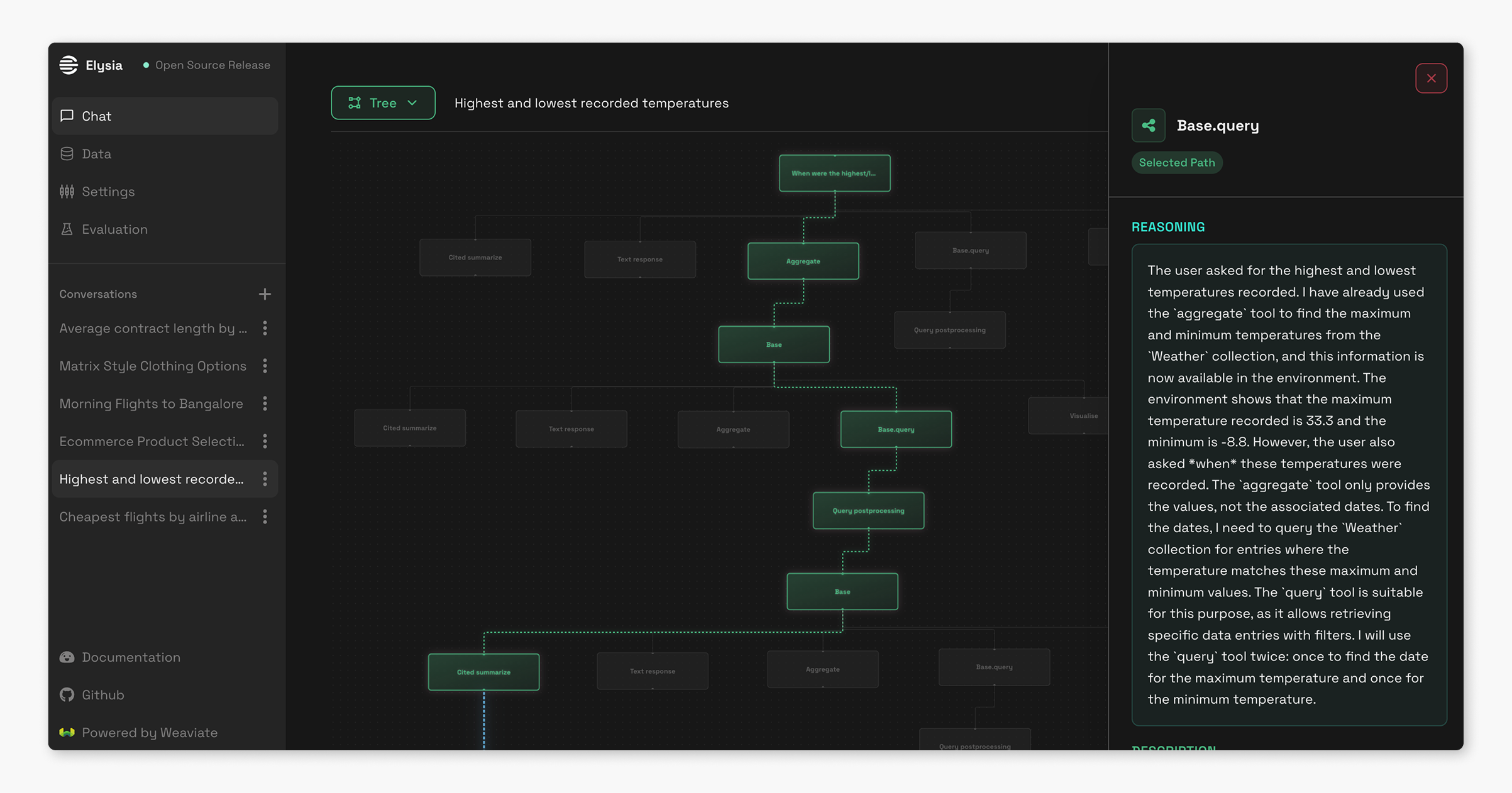

Real-time observability is one of the features that distinguishes Elysia from other black-box AI systems. The frontend displays the entire decision tree as it is traversed, allowing you to observe the LLM’s reasoning process within each node. This transparency helps users understand exactly why the system made specific choices and fix issues when they arise.

2. Dynamic Data Display Formats

While other AI assistants are limited to text responses (and sometimes images and text), Elysia can dynamically select how to display data based on what makes the most sense for the content and context. The system currently has seven different display formats: generic data display, tables, e-commerce product cards, (GitHub) tickets, conversations and messages, documents, and charts.

But how does Elysia know what display type to use for your specific data?

Before using any Weaviate tools, Elysia analyzes your collections. An LLM examines the data structure by sampling, checking fields, creating summaries, and generating metadata. Based on this analysis, it recommends the most appropriate display formats from available options. Users can also manually adjust these display mappings to better suit their needs.
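The sketch below illustrates the input and output of this step by ranking display types from a collection's field names. Elysia uses an LLM for this. The keyword rules, the display identifiers, and `recommend_displays` are hypothetical stand-ins, chosen so the example runs without a model.

```python
from typing import Iterable, List

# Hypothetical field-name hints per display type. Elysia itself asks an LLM to
# make this choice; these rules only illustrate the input -> recommendation shape.
DISPLAY_HINTS = {
    "product_card": {"price", "image", "brand", "product_name"},
    "ticket": {"issue_number", "status", "assignee", "labels"},
    "conversation": {"message", "author", "timestamp", "thread_id"},
    "document": {"title", "content", "author"},
}
FALLBACKS = ["table", "generic"]  # formats that suit any collection


def recommend_displays(fields: Iterable[str], min_overlap: int = 2) -> List[str]:
    """Rank display types by how many of their hint fields the collection has."""
    names = {f.lower() for f in fields}
    scored = [(len(names & hints), display) for display, hints in DISPLAY_HINTS.items()]
    ranked = sorted(scored, key=lambda pair: pair[0], reverse=True)
    return [d for score, d in ranked if score >= min_overlap] + FALLBACKS
```

Users can still override the result. This matches the manual adjustment of display mappings described above.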

In the future, this structure will also enable us to build features that allow different displays to perform different follow-up actions. A hotel display might include booking capabilities, a Slack conversation display could enable direct replies, and a product card might offer add-to-cart functionality. This could be a step further in transforming Elysia from a passive information retriever into an active assistant that helps users take action based on their data.

We will continue to add more display types to increase possible customization levels, enabling Elysia to adapt to virtually any use case or industry-specific need.

3. Automatic Data Expertise

Simple RAG systems like our own Verba struggle with complex data, multiple data types or locations, or repeated or similar data because they lack a comprehensive understanding of the environment they’re working with. After seeing the community struggle with this (and struggling ourselves), we decided this was one of the main things Elysia needed to help solve.

As mentioned above, after connecting your Weaviate Cloud instance to Elysia, an LLM analyzes your collections to examine the data structure, create summaries, generate metadata, and select display types. This is not only useful information for users—it significantly enhances Elysia’s ability to handle complex queries and provide knowledgeable responses. This capability was lacking in previous systems like Verba, which often failed with ambiguous data due to their blind search approach.

Generating metadata has proven crucial for handling complex queries and tasks within the tree. Other traditional RAG and querying systems we’ve seen often perform blind vector searches without knowing the overall structure and meaning of the data they’re searching through, simply hoping for relevant results. But with Elysia, we built the system to understand and consider the structure and content of your specific data before performing operations like querying.

The web platform also features a comprehensive data explorer with BM25 search, sorting, and filtering capabilities. It automatically groups unique values within fields and provides min/max ranges for numeric data, giving both Elysia and users a clear understanding of what’s available.
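The field overview the explorer shows can be sketched over an in-memory sample of objects: grouped unique values for categorical fields and min/max ranges for numeric ones. `summarize_fields` is a hypothetical helper. Against a real collection, the same numbers could come from aggregation queries instead.

```python
from collections import Counter
from numbers import Number
from typing import Any, Dict, List


def summarize_fields(objects: List[Dict[str, Any]], top_n: int = 5) -> Dict[str, dict]:
    """Per-field overview: min/max for numeric fields, grouped unique values otherwise."""
    summary = {}
    field_names = sorted({key for obj in objects for key in obj})
    for name in field_names:
        values = [obj[name] for obj in objects if obj.get(name) is not None]
        # bool is a subclass of int, so exclude it explicitly from "numeric"
        numeric = bool(values) and all(
            isinstance(v, Number) and not isinstance(v, bool) for v in values
        )
        if numeric:
            summary[name] = {"type": "number", "min": min(values), "max": max(values)}
        else:
            groups = Counter(str(v) for v in values)
            summary[name] = {"type": "text", "unique": len(groups),
                             "top": groups.most_common(top_n)}
    return summary
```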

The data dashboard provides a high-level overview of all available collections, while the collection explorer allows detailed inspection of individual datasets. When viewing table data, we provide a complete object view for selected entries, enabling structured, readable display of large and nested data objects. Additionally, in the metadata tab, users can edit LLM-generated metadata, display types, and summaries, since as we all know, LLMs are far from perfect.

Additional Innovative Features

Feedback System: AI That Learns from Users

Our implemented feedback system goes far beyond simple ratings. Each user maintains their own set of feedback examples stored in their Weaviate instance. When you make a query, Elysia first uses vector similarity matching to search for similar past queries you’ve rated positively.

The system can then use these positive examples as few-shot demonstrations, enabling smaller models to provide better responses. If you’ve been using larger, more expensive models for complex tasks and rating the outputs positively, Elysia can use these high-quality responses as examples for smaller, faster models on similar queries. Over time, this reduces costs and improves response speed while maintaining quality.

Keeping interactions independent for individual users ensures that personal preferences don’t affect others’ experiences and that data remains secure. The feature is enabled with a simple configuration checkbox and operates transparently in the background, continuously improving Elysia’s responses for you based solely on your own interactions.
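This is a minimal sketch of the retrieval half of the feedback loop, assuming query embeddings are already available. `FeedbackStore`, `few_shot`, `build_prompt`, and the 0.8 similarity cutoff are hypothetical. Elysia stores feedback in the user's Weaviate instance and implements few-shot learning with DSPy. This version uses plain Python lists and cosine similarity instead.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class FeedbackExample:
    query: str
    vector: List[float]  # embedding of the query
    response: str
    positive: bool


class FeedbackStore:
    """In-memory stand-in for per-user feedback kept in the user's own Weaviate instance."""

    def __init__(self) -> None:
        self._by_user: Dict[str, List[FeedbackExample]] = {}

    def add(self, user_id: str, example: FeedbackExample) -> None:
        self._by_user.setdefault(user_id, []).append(example)

    def few_shot(self, user_id: str, query_vector: Sequence[float],
                 k: int = 3, min_similarity: float = 0.8) -> List[FeedbackExample]:
        """Top-k positively rated past queries from this user only."""
        candidates = [e for e in self._by_user.get(user_id, []) if e.positive]
        scored = sorted(((cosine(query_vector, e.vector), e) for e in candidates),
                        key=lambda pair: pair[0], reverse=True)
        return [e for score, e in scored[:k] if score >= min_similarity]


def build_prompt(examples: List[FeedbackExample], new_query: str) -> str:
    """Prepend retrieved examples as few-shot demonstrations for a smaller model."""
    shots = "".join(f"Q: {e.query}\nA: {e.response}\n\n" for e in examples)
    return f"{shots}Q: {new_query}\nA:"
```

Lookups are keyed by `user_id`, so one user's ratings never become another user's examples.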

On-Demand Chunking: Smarter Document Processing

Traditional RAG systems pre-chunk every document, which can significantly increase storage requirements. This was another issue we saw the community struggling with, so instead of settling on a pre-chunking strategy, we chunk at query time. Initial searches use document-level vectors, which capture a document’s main points but not the specific sections within it. When a document exceeds a token threshold and proves relevant to the query, Elysia steps in and chunks it dynamically.

The system stores these chunks in a parallel, quantized collection with cross-references to original documents. This means subsequent queries for similar information can leverage previously chunked content, making the system more efficient over time. This approach reduces storage costs while maintaining or even improving retrieval quality.
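The query-time flow can be sketched as follows, under several assumptions:

- Whitespace word counts stand in for a tokenizer.
- Keyword overlap stands in for chunk-level vector search.
- `TOKEN_THRESHOLD`, `CHUNK_SIZE`, `ChunkStore`, and `retrieve` are hypothetical names and values.

The `source_doc_id` field plays the role of the cross-reference. The store persists between calls, so each document is chunked only once.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

TOKEN_THRESHOLD = 400  # hypothetical; the real threshold is not stated here
CHUNK_SIZE = 200       # hypothetical chunk length, in whitespace "tokens"


def count_tokens(text: str) -> int:
    return len(text.split())  # crude proxy for a real tokenizer


@dataclass
class Chunk:
    text: str
    source_doc_id: str  # cross-reference back to the original document


@dataclass
class ChunkStore:
    """Stand-in for the parallel chunk collection; chunks persist across queries."""
    chunks: Dict[str, List[Chunk]] = field(default_factory=dict)
    chunking_calls: int = 0

    def get_or_chunk(self, doc_id: str, text: str) -> Optional[List[Chunk]]:
        if count_tokens(text) <= TOKEN_THRESHOLD:
            return None  # short documents are used whole
        if doc_id not in self.chunks:  # chunk only the first time it proves relevant
            self.chunking_calls += 1
            words = text.split()
            self.chunks[doc_id] = [
                Chunk(" ".join(words[i:i + CHUNK_SIZE]), doc_id)
                for i in range(0, len(words), CHUNK_SIZE)
            ]
        return self.chunks[doc_id]


def retrieve(query: str, relevant_docs: List[Tuple[str, str]],
             store: ChunkStore, top_k: int = 3) -> List[Chunk]:
    """relevant_docs: (doc_id, text) pairs returned by a document-level search.
    Keyword overlap stands in for a chunk-level vector search."""
    q = set(query.lower().split())
    candidates: List[Chunk] = []
    for doc_id, text in relevant_docs:
        chunks = store.get_or_chunk(doc_id, text)
        candidates.extend(chunks if chunks else [Chunk(text, doc_id)])
    candidates.sort(key=lambda c: len(q & set(c.text.lower().split())), reverse=True)
    return candidates[:top_k]
```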

Looking ahead to future versions, this architecture could also enable flexible chunking strategies. Different document types could use different chunking methods—code might be chunked by function or class boundaries, while prose might use semantic or simply fixed-size chunking.
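Below is a sketch of type-specific chunkers, assuming documents arrive tagged with a type.

- `chunk_python` uses the standard library `ast` module to cut at top-level function and class boundaries.
- `chunk_prose` is plain fixed-size chunking.

The names and the dispatch table are hypothetical and not part of Elysia.

```python
import ast
from typing import Callable, Dict, List


def chunk_python(source: str) -> List[str]:
    """Split Python source at top-level function and class boundaries
    (decorators included). Module-level statements between them are skipped."""
    lines = source.splitlines()
    chunks = []
    for node in ast.parse(source).body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef, ast.ClassDef)):
            # node.lineno is the def/class line; decorators start earlier
            start = min([d.lineno for d in node.decorator_list] + [node.lineno])
            chunks.append("\n".join(lines[start - 1:node.end_lineno]))
    return chunks


def chunk_prose(text: str, size: int = 100) -> List[str]:
    """Fixed-size chunking by word count."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


CHUNKERS: Dict[str, Callable[[str], List[str]]] = {
    "python": chunk_python,
    "prose": chunk_prose,
}


def chunk_document(text: str, doc_type: str) -> List[str]:
    return CHUNKERS.get(doc_type, chunk_prose)(text)  # unknown types fall back to prose
```

Adding a new strategy, such as semantic chunking, would only mean registering another function in `CHUNKERS`.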

Serving Frontend Through Static HTML

Another problem we wanted to solve was how to serve a NextJS frontend without having to spin up both backend and frontend servers. We discovered we could serve Elysia’s frontend as static HTML through FastAPI, eliminating the need for a separate Node.js server. This architectural change means everything can run from a single Python package, helping simplify the deployment process and reducing operational complexity. A simple pip install provides a complete, production-ready application that can run anywhere Python runs. Pretty cool, right?

Multi-Model Strategy

In addition to the feedback system, which lets smaller models stand in for larger ones without losing quality, Elysia routes different tasks to appropriately sized models based on task complexity. Small, lightweight models handle decision agents and simple tasks, while larger, more powerful models are reserved for complex tool operations that require deeper reasoning. We used Gemini as the default during development because of its strong performance, very large context window, speed, and cost-effectiveness.

However, one of the things we love most about the Weaviate ecosystem is the freedom to choose providers, tools, and integrations. So, of course, all model choices remain fully customizable through a configuration file, supporting almost any provider, including local models. Going further, users can configure different models for different parts of the system, optimizing for their specific performance, security, cost, and latency requirements.
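Per-task model selection can be sketched as a small lookup with per-task overrides. Everything here is hypothetical. The provider and model names are placeholders, and `TASK_TIER` and `route` are not Elysia's configuration format.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ModelChoice:
    provider: str
    model: str


# Hypothetical configuration: placeholder provider/model names, not Elysia's
# real defaults or config keys.
MODEL_CONFIG: Dict[str, ModelChoice] = {
    "base": ModelChoice("provider-a", "small-fast-model"),          # decision agents, simple tasks
    "complex": ModelChoice("provider-a", "large-reasoning-model"),  # complex tool operations
}

# Hypothetical mapping from task to model tier.
TASK_TIER: Dict[str, str] = {
    "decision": "base",
    "summarize": "base",
    "query_generation": "complex",
    "chart_generation": "complex",
}


def route(task: str,
          config: Dict[str, ModelChoice] = MODEL_CONFIG,
          overrides: Optional[Dict[str, ModelChoice]] = None) -> ModelChoice:
    """Per-task overrides win; unknown tasks default to the complex tier."""
    if overrides and task in overrides:
        return overrides[task]
    return config[TASK_TIER.get(task, "complex")]
```

Defaulting unknown tasks to the larger model trades cost for safety. The opposite default would be cheaper but riskier.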

Customize Your Blob

Personalization of the entire app UI is one of the features we’ll continue to work on for future versions, but for now, you can get a taste of it by customizing your own Elysia blob that’s saved persistently in your app! In the future, these customization features will allow users to rebrand Elysia to fit their own company.

Technical Stack

The technical stack behind Elysia is relatively simple. Elysia’s retrieval is powered exclusively by Weaviate: agents build custom queries or aggregations, Weaviate’s fast vector search retrieves similar past conversations, and Weaviate also stores conversation histories. Beyond Weaviate, we use DSPy, NextJS, and FastAPI, with Gemini as our go-to testing model.

Weaviate provides all the features we needed to build a robust application, such as named vectors, different search types (vector, keyword, hybrid, aggregation), and filters. Its native support for cross-references was the basis for the on-demand chunking feature, so Elysia could maintain relationships between original documents and their dynamically generated chunks. Weaviate’s quantization options also helped manage storage costs for data in our Alpha testing phase release, and the cloud collection setup allows us to easily store generated metadata and user information.

DSPy serves as the LLM interaction layer. We chose DSPy because it provides a flexible, future-proof framework for working with language models. Beyond basic prompt management, DSPy makes it straightforward to implement few-shot learning, which powers Elysia’s feedback system. The framework also supports prompt optimization, which could be included in future releases.

But the core logic of Elysia is written in pure Python. Doing this gave us complete control over the implementation and kept the tools we had to work with to a minimum. While the pip install does bring in dependencies from DSPy and other libraries, the core Elysia logic is lean and comprehensible.

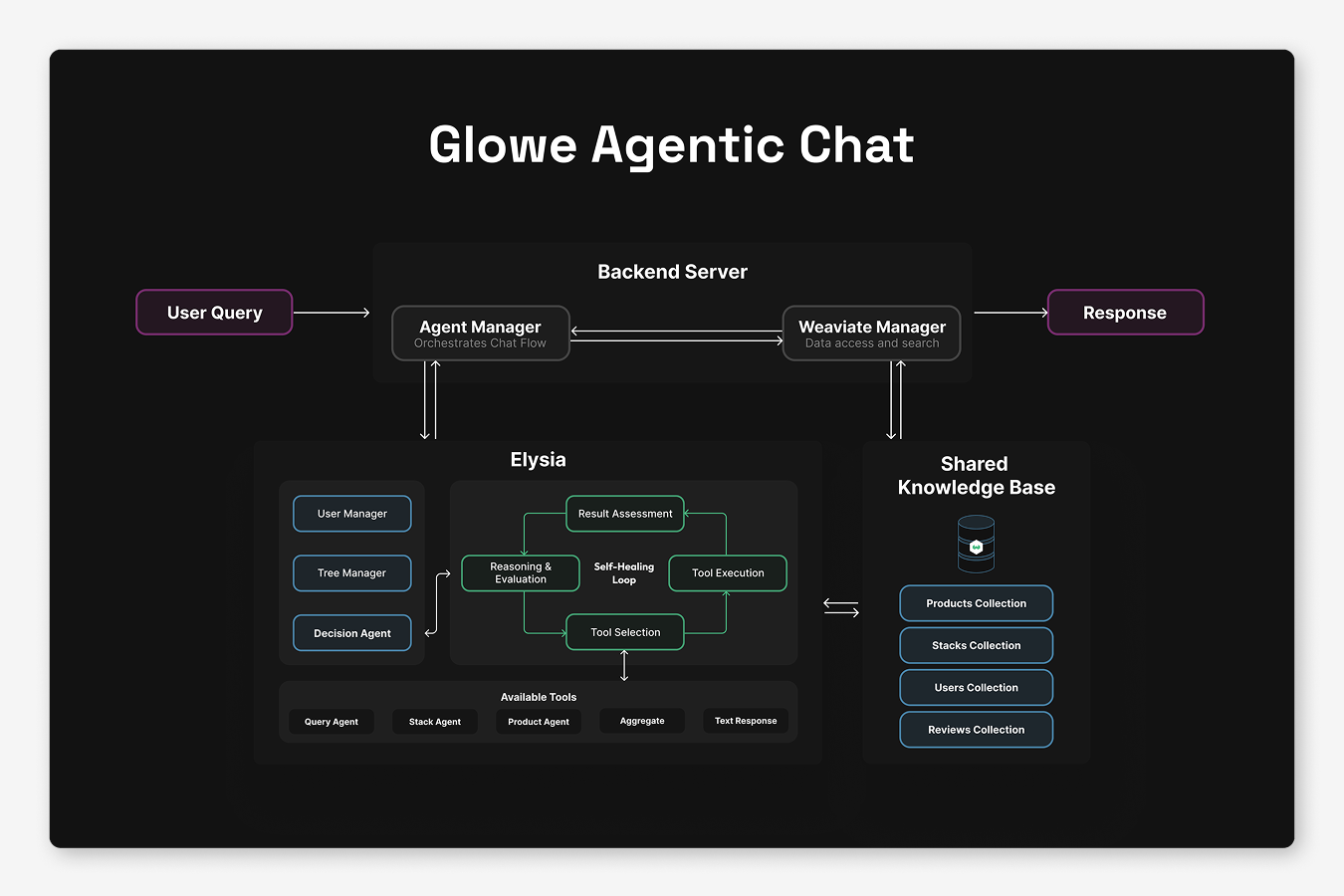

Real-World Example: Powering Glowe’s Chat

To test whether Elysia actually worked as a flexible agentic framework the way we envisioned it, we decided to use it to power the chat interface in our AI-powered skincare ecommerce app, Glowe.

For the Glowe-Elysia tree, we created three custom tools specifically for the app’s needs:

- A query agent tool for finding the right products with complex filters (powered by the Weaviate Query Agent)
- A stack generation tool that creates product collections specific to a user through natural language
- A similar products tool that provides recommendations based on the current context and individual user

All the complex functionality—text responses, recursion, self-healing, error handling, and streaming—came built-in with Elysia. We could focus entirely on implementing the logic specific to the app, rather than building all the boring AI infrastructure.

How to Get Started

Web Application

Step 1: Install dependencies and start app

Run these commands to launch the web interface:

```shell
python3.12 -m venv .venv
source .venv/bin/activate
pip install elysia-ai
elysia start
```

Step 2: Get some data

If you don’t already have a Weaviate Cloud cluster with some data in it, head over to the Weaviate Cloud console and create a free Sandbox cluster.

Then, you can either add data to your cluster by following the quickstart tutorial or use the provided import script template.

Step 3: Add your configuration settings

In the config editor, you can add your Weaviate Cluster URL and API key and set your models and model provider API keys. You can also create multiple configs that allow you to switch between data clusters or model providers easily.

In the left menu bar, you can also customize your very own blob!

Step 4: Analyze your data

Under the Data tab in the menu bar on the left-hand side, you can analyze your collections, which will prompt an LLM to generate property descriptions, a dataset summary, example queries, and select display types for each collection.

When you click on a data source, you can view all the items in the collection and edit any of the metadata, including choosing additional display types or configuring the property mappings.

Step 5: Start chatting

It’s time to start asking questions! Head over to the Chat tab to create a new conversation and chat with your data. If you want to view the decision tree, click the green button on the top left of the chat view to switch to the tree view, and hover over any of the nodes to get the description of the node, instructions for the LLM, and the LLM’s reasoning. Each new user query will generate a new decision tree within this view.

Settings are also configurable on a per-chat level. You can add more detailed Agent instructions or change up your model settings.

As a Python Library

Simply install with:

```shell
pip install elysia-ai
```

And then in Python, using Elysia is as easy as:

```python
from elysia import Tree, preprocess

# Analyze the collection (summaries, metadata, display types) before querying it
preprocess("<your_collection_name>")

tree = Tree()
tree("What is Elysia?")
```

Using Elysia requires access to LLMs and your Weaviate cloud details, which can be set in your local environment file or configured directly in Python. The full documentation provides detailed configuration options and examples.

Future Development

We’re still cooking 🧑‍🍳

We have several features planned and in progress, including custom theming similar to Verba that will allow users to match Elysia’s appearance to their brand. But beyond that, you’ll have to wait and see 👀

Conclusion: The Future of Agentic RAG

Elysia is more than just a RAG implementation: we built it to demonstrate what AI applications can become. By combining transparent agent decision-making, dynamic displays, and personalization and optimization that improve over time, we believe we’re on track to create an AI assistant that understands not just what you’re asking, but how to present the answer effectively.

Elysia will eventually replace Verba, our original RAG application, as our next step in developing cutting-edge applications with vector databases at their core. Going beyond simple Ask-Retrieve-Generate pipelines, we’ve created an infrastructure for developing sophisticated, agentic AI applications while keeping the developer and user experiences simple and straightforward.

Whether you’re building an e-commerce chatbot, an internal company knowledge expert, or something entirely new, Elysia provides the foundation for AI experiences that extend beyond just text generation. We can’t wait to see what you build!

So, ready to get started? Visit the demo, check out the GitHub repository, or dive into the documentation to start building.