EdgeBox: Revolutionizing Local AI Agents with Desktop Sandbox – Unlock “Computer Use” Capabilities On Your Machine

Picture this: You’re hunkered down in a cozy coffee shop, laptop screen glowing with a Claude or GPT chat window. You prompt it: “Analyze this CSV file for me, then hop into the browser and pull up the latest AI papers.” It fires back a confident response… and then? Crickets. Cloud sandboxes crawl with latency, privacy concerns nag at you like an itch you can’t scratch, and those open-source CLI tools? They nail code execution but choke the second your agent needs to click around in VS Code or drag a file in Chrome.

I get it—I’ve been there, fuming at half-baked tools that promise the world but deliver a terminal tease. That’s until EdgeBox hit my radar: an open-source powerhouse that ports E2B’s cloud magic straight to your local setup, topped off with a full-blown GUI desktop. It’s not just a code interpreter; it’s the gateway to turning your LLM agents into “digital workers” that type, click, and screenshot like pros. Built on Anthropic’s Model Context Protocol (MCP)—an open standard launched in November 2024 for seamless AI-to-system connections—EdgeBox keeps everything local: zero latency, ironclad privacy. Today, I’ll walk you through why it’s a game-changer, how to dive in, and the gotchas to sidestep. By the end, you’ll be itching to spin it up and watch your agents “use the computer” for real.

Why EdgeBox Stands Out in the AI Sandbox Crowd

Traditional sandboxes? E2B shines in the cloud but racks up costs and risks data leaks; local open-source tools like codebox keep things private but trap you in CLI purgatory, and no GUI means no “real” computer use. Enter EdgeBox: it spins up an Ubuntu desktop in Docker on your rig, and agents drive it over VNC to browse GitHub, edit code, or automate drag-and-drop. All data stays put, there is no network round trip to a remote sandbox, and it’s MCP-ready for plug-and-play with Claude Desktop or OpenWebUI.

Here’s a quick side-by-side (pulled from hands-on tests and official specs):

| Feature | EdgeBox | Typical CLI Sandbox (e.g., codebox) |
|---|---|---|
| Environment | Local Docker + GUI Desktop | CLI Terminal Only |
| Interface | MCP HTTP + VNC Viewer | CLI API Only |
| Capabilities | Code Exec + Computer Use | Code Interpreter Only |
| Privacy | 100% Local, No Cloud | 100% Local, But No GUI |
| Latency | Near-Zero (Local) | Near-Zero, But Feature-Limited |

No hype: it fixed my agent dev woes by letting me test “computer use” scenarios without cloud waits or manual tweaks. It is forked from E2B’s open-source interpreter and extended with MCP; the latest release, v0.8.0, dropped just yesterday on September 27 and patches Docker quirks for smoother sailing.

Prime use cases? Data pros crunching Python scripts with instant viz screenshots; web scrapers mimicking human browser flows; even game AI sims with keyboard/mouse mocks. If your AI’s stuck in chat mode, EdgeBox flips the script.

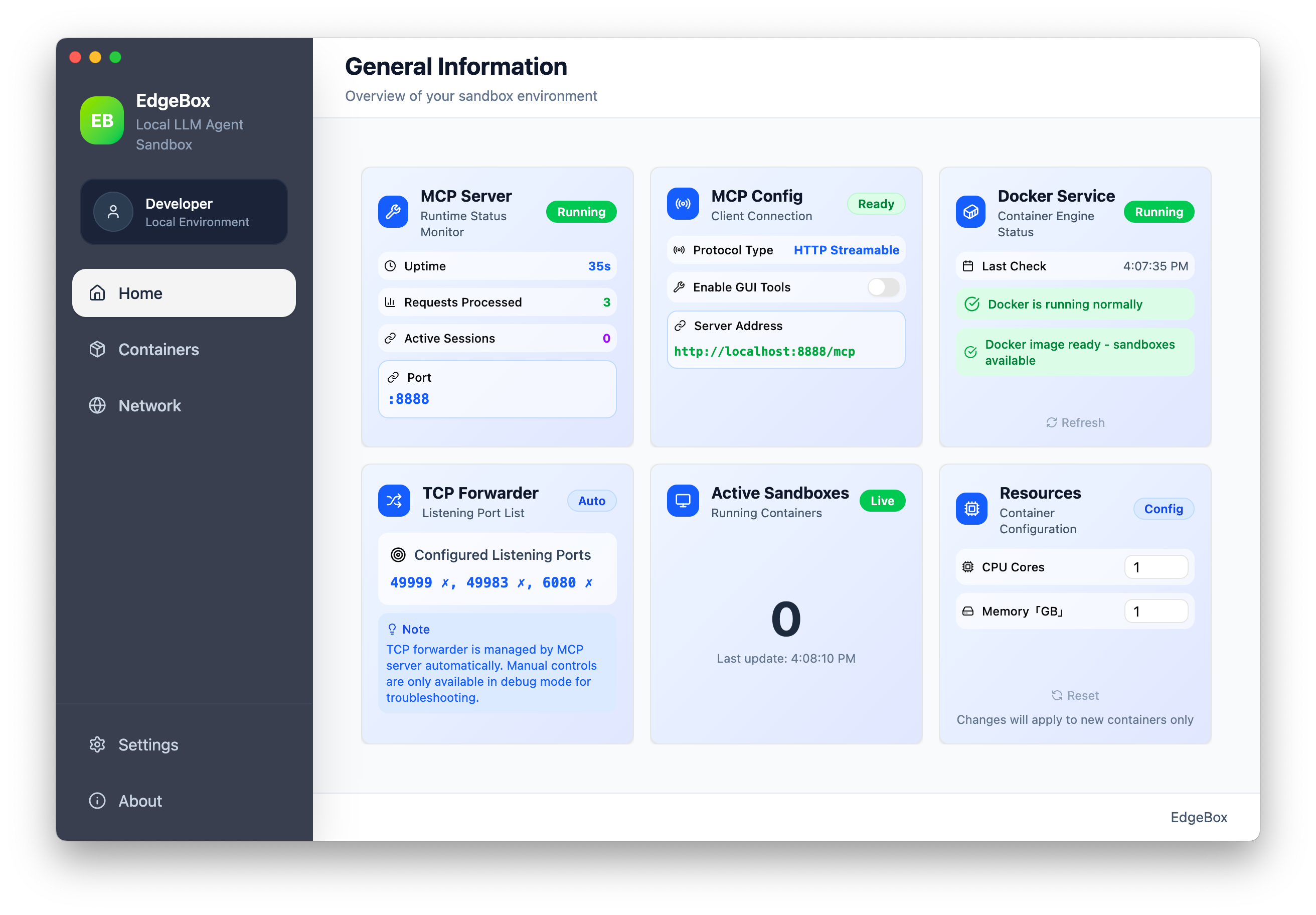

The EdgeBox dashboard at a glance: Monitor Docker and MCP server health, then connect your agent client in seconds.

Breaking Down the Core Features: Shell to Human-Like Ops

EdgeBox packs a “trifecta” punch—code execution, shell access, GUI automation—all wired through MCP. This protocol, per Anthropic’s vision, tackles the “N×M integration nightmare” with JSON-RPC over HTTP: bidirectional streams, permission gates, and SDK nods from OpenAI and Google. Your LLM just pings an HTTP endpoint, and the sandbox jumps.
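To make “pings an HTTP endpoint” a little more concrete, here is a minimal sketch of the JSON-RPC 2.0 envelope that MCP uses for a tool call. The `tools/call` method and the `name`/`arguments` params come from the MCP spec; the `code` argument name is my assumption for illustration, so check the tool’s real schema (for example via `tools/list`) before relying on it.

```python
import json
from itertools import count

# JSON-RPC requests carry an id so responses can be matched to calls.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> dict:
    """Build a JSON-RPC 2.0 envelope for an MCP `tools/call` request."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


# "code" is a hypothetical argument name, not confirmed by the EdgeBox docs.
request = build_tool_call("execute_python", {"code": "print(1 + 1)"})
print(json.dumps(request, indent=2))
```

A real client would first run the MCP initialize handshake and then POST this body to the server; the sketch only shows the shape of the message.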

Start with Full Desktop Environment (Computer Use)—the star here. Agents get a VNC-linked Ubuntu rig preloaded with Chrome, VS Code, and essentials. Need it to “Google something”? It hovers the mouse, types the URL, hits Enter, snaps a screenshot for context. I once tasked Claude with tweaking a React demo: It fired up VS Code, dragged files, built and ran—all autonomous.

Live VNC action: Agent flips between VS Code and browser, window-switching like a human multitasker.

Next, Code Interpreter & Shell: Docker silos keep rogue code contained, supporting Python, JS, R, Java, Bash with persistent state. Drop a CSV? Pandas parses, Matplotlib plots—sandbox-only. Shell runs stateful (pip install sticks around), files get full CRUD plus real-time watches. No more “agent nukes my host filesystem” horror stories.

Tying it all together: MCP integration is the glue. Tools are exposed as MCP endpoints, and multiple sessions are kept isolated via x-session-id headers, so one session can handle data viz while another handles scraping. It hooks into LobeChat effortlessly.
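Here is a rough sketch of what session isolation looks like at the HTTP level, using only the Python standard library. It prepares (but deliberately does not send) two requests that differ only in their `x-session-id` header. The URL matches the client config shown later in this post; the `scraping` session name and the `Accept` header value are my assumptions about a typical MCP-over-HTTP setup.

```python
import json
import urllib.request

MCP_URL = "http://localhost:8888/mcp"  # endpoint from the article's client config


def session_request(session_id: str, payload: dict) -> urllib.request.Request:
    """Prepare (but do not send) a POST tagged with an x-session-id header."""
    return urllib.request.Request(
        MCP_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Accept": "application/json, text/event-stream",  # assumed
            "x-session-id": session_id,
        },
        method="POST",
    )


# tools/list is a standard MCP method; the same body goes to both sessions.
list_tools = {"jsonrpc": "2.0", "id": 1, "method": "tools/list"}
viz = session_request("data-viz", list_tools)
scrape = session_request("scraping", list_tools)  # hypothetical session name

for req in (viz, scrape):
    print(req.get_method(), req.full_url, dict(req.header_items()))
```

Passing either object to `urllib.request.urlopen` would actually send it, which only makes sense with EdgeBox running and after the MCP handshake.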

Computer Use in motion: Agent types a URL, enters, screenshots—AI finally “gets” the desktop.

MCP Toolkit: Supercharging Your Agent’s Skillset

Tools split into CLI basics (always on) and GUI unlocks (toggle in settings). It’s like an RPG tree: Core execute_python for scripts, advanced desktop_mouse_drag for drags.

CLI Core Tools (Reliable Staples):

- Code: execute_python for isolated runs, execute_bash for shell scripts.
- Files: fs_list to scan dirs, fs_write to create, fs_watch for live changes.
- Shell: shell_run sequential, shell_run_background for async.

GUI Desktop Tools (Enabled for Power):

- Mouse/Keyboard: desktop_keyboard_type texts (clipboard for Unicode), desktop_mouse_click positions, desktop_keyboard_combo like Ctrl+C.
- Windows: desktop_get_windows lists, desktop_switch_window focuses, desktop_launch_app fires Chrome.
- Vision: desktop_screenshot PNG grabs, desktop_wait for timing.

| Category | Sample Tool | Description | Mode |
|---|---|---|---|
| CLI | execute_python | Isolated Python Execution | Always |
| CLI | fs_read | Read File Contents | Always |
| GUI | desktop_screenshot | Desktop Screenshot | Enabled |
| GUI | desktop_mouse_move | Cursor to Coordinates | Enabled |

Prompt naturally: “Launch browser, search ‘EdgeBox GitHub’, screenshot it.” The agent chains the tools and streams the outputs back. To flip GUI on, enable it in the app settings; Docker then pulls an X11 image.
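To picture how one natural-language prompt turns into a tool chain, here is one plausible decomposition written out as data. The tool names are the ones listed above; every argument name (`app`, `keys`, `text`, `seconds`) is hypothetical, and the actual sequence is whatever your LLM decides at runtime.

```python
from dataclasses import dataclass, field


@dataclass
class ToolStep:
    tool: str  # tool name as listed in the article
    arguments: dict = field(default_factory=dict)  # hypothetical argument names


def plan_search_and_screenshot(query: str) -> list[ToolStep]:
    """One plausible decomposition of 'launch browser, search, screenshot'."""
    return [
        ToolStep("desktop_launch_app", {"app": "chrome"}),
        ToolStep("desktop_wait", {"seconds": 2}),
        ToolStep("desktop_keyboard_combo", {"keys": ["ctrl", "l"]}),  # focus URL bar
        ToolStep("desktop_keyboard_type", {"text": query}),
        ToolStep("desktop_keyboard_combo", {"keys": ["enter"]}),
        ToolStep("desktop_wait", {"seconds": 2}),
        ToolStep("desktop_screenshot"),
    ]


for i, step in enumerate(plan_search_and_screenshot("EdgeBox GitHub"), start=1):
    print(i, step.tool, step.arguments)
```

The waits between steps reflect why a desktop_wait tool exists at all: GUI actions need time to land before the next keystroke or screenshot.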

Architecture Deep Dive: Electron + Docker Harmony

Under the hood: Frontend Electron+React+TypeScript for the dash, backend Node.js+Dockerode for containers. Flow: LLM → MCP Stream → EdgeBox → Docker Sandbox (Shell + VNC).


Logo nod: EdgeBox evokes “edge computing in a boxed sandbox.”

Cross-platform bliss: .exe for Windows, .app for macOS, deb/AppImage for Linux. Cap resources? Tune CPU/RAM, bridge networks optionally. Extensible via MCP—roll custom endpoints quick.

Getting Started: Zero to Agent “Onboarding”

Prereq: Docker Desktop humming (grab from official site). Snag v0.8.0 from Releases—exe for Win, app for macOS, AppImage/deb for Linux.

Fire up the app; dashboard greens (Docker OK, MCP on 8888). Client config? Slot this JSON:

    {
      "mcpServers": {
        "edgebox": {
          "url": "http://localhost:8888/mcp"
        }
      }
    }
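If your client already has other MCP servers configured, pasting JSON by hand can clobber them. Below is a small sketch that merges the EdgeBox entry into an existing config file while keeping whatever is already there. The helper name and the file name are hypothetical; point it at wherever your MCP client actually stores its config.

```python
import json
import tempfile
from pathlib import Path


def add_mcp_server(config_path: Path, name: str, url: str) -> dict:
    """Merge one server entry into a client config, keeping existing entries."""
    config = json.loads(config_path.read_text()) if config_path.exists() else {}
    config.setdefault("mcpServers", {})[name] = {"url": url}
    config_path.write_text(json.dumps(config, indent=2))
    return config


# Demo against a throwaway file so nothing real is touched.
with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "client_config.json"  # hypothetical file name
    existing = {"mcpServers": {"other": {"url": "http://localhost:9000/mcp"}}}
    path.write_text(json.dumps(existing))
    merged = add_mcp_server(path, "edgebox", "http://localhost:8888/mcp")
    print(sorted(merged["mcpServers"]))  # ['edgebox', 'other']
```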

Multi-session? Header tweak:

    {
      "mcpServers": {
        "analysis": {
          "url": "http://localhost:8888/mcp",
          "headers": { "x-session-id": "data-viz" }
        }
      }
    }

Test prompt: “Plot a sine curve in Python, save PNG; open browser to ‘MCP protocol,’ screenshot.” Logs and image streams flow back. Snags? For a port clash, swap 8888 for a free port; for Docker permission errors, restart Docker with sudo.
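For the port-clash case, a quick way to see whether something is already listening on 8888 is a plain TCP probe. This is a generic standard-library sketch, not an EdgeBox feature; the helper names and the 20-port scan window are arbitrary choices of mine.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """Return True if something already accepts TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
        probe.settimeout(0.5)
        return probe.connect_ex((host, port)) == 0


def first_free_port(start: int = 8888, attempts: int = 20) -> int:
    """Scan upward from `start` and return the first port nobody is listening on."""
    for port in range(start, start + attempts):
        if not port_in_use(port):
            return port
    raise RuntimeError(f"no free port in {start}..{start + attempts - 1}")


print("8888 busy:", port_in_use(8888))
print("candidate port:", first_free_port())
```

Whatever port you settle on, remember to update the URL in your client config to match.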

Security Essentials: Isolation That Delivers

Each session gets its own Docker container, resource throttles guard against hogs, and network controls shield the host. Local-only means no cloud snoops, which helps with GDPR. Pro tip: run docker system prune routinely, and use read-only mounts for sensitive files.
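EdgeBox manages its own containers, so you normally never type these flags yourself. Still, if you’re curious what resource caps and read-only mounts look like in plain Docker terms, here is a sketch that assembles an equivalent `docker run` command. The `--cpus`, `--memory`, and `-v …:ro` options are standard Docker CLI flags; the image name, paths, and helper name are placeholders, and this is not a claim about how EdgeBox launches its sandbox internally.

```python
import shlex


def hardened_run_args(image: str, cpus: float, memory: str,
                      readonly_mounts: dict[str, str]) -> list[str]:
    """Assemble `docker run` arguments with resource caps and read-only mounts."""
    args = ["docker", "run", "--rm", f"--cpus={cpus}", f"--memory={memory}"]
    for host_path, container_path in readonly_mounts.items():
        args += ["-v", f"{host_path}:{container_path}:ro"]  # :ro = read-only
    return args + [image]


# Placeholder image and paths; 2 CPUs / 4 GB mirrors the baseline in the FAQ.
cmd = hardened_run_args("ubuntu:22.04", 2, "4g", {"/srv/datasets": "/data"})
print(shlex.join(cmd))
```

The script only prints the command; nothing is executed, so it is safe to run without Docker installed.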

FAQ: Quick Hits on EdgeBox Essentials

Q: Which LLM clients work with EdgeBox?

A: Any MCP-compatible client, like Claude Desktop, OpenWebUI, or LobeChat. GPT-based clients join the party following OpenAI’s 2025 MCP rollout.

Q: GUI tools ghosting me?

A: Toggle “Enable GUI Tools” in settings, restart Docker. Linux? Might need apt install xvfb for X11.

Q: Performance on a budget rig?

A: 4GB RAM baseline, 2-core Docker cap suffices. VNC smooth, interactions under 50ms.

Q: What’s the license?

A: MIT—fork freely (check GitHub LICENSE).

Wrapping Up: The Dawn of Local AI “Colleagues”

EdgeBox isn’t merely a tool; it’s the bridge from chatty bots to desktop-savvy sidekicks. With MCP’s ecosystem blooming, imagine agent swarms collaborating across sandboxes. Give it a whirl: Download, configure, prompt—and see it spring to life. What’s your first “computer use” experiment? Drop it in the comments; let’s ride this wave together.