Conar.app: Revolutionizing How Developers Interact with Databases Through AI-Powered Tools

In today’s data-driven development landscape, interacting with databases remains one of the most fundamental yet challenging aspects of software engineering. From crafting complex SQL queries to optimizing database performance, developers often find themselves navigating a maze of technical complexities that can slow down productivity and innovation. Enter Conar.app – an open-source solution that’s redefining how developers interact with their databases by harnessing the power of artificial intelligence while maintaining uncompromising security standards.

Understanding the Database Interaction Challenge

Before diving into how Conar.app addresses these challenges, let’s take a moment to understand why database interactions remain difficult even for experienced developers. Working with databases typically involves multiple layers of complexity:

- Writing syntactically correct and efficient SQL queries
- Understanding database schema structures and relationships
- Optimizing queries for performance
- Managing secure connections to database instances
- Switching between different database systems with varying syntax and capabilities

These challenges become even more pronounced when developers need to work across multiple database types or when team members have varying levels of database expertise. The cognitive load of remembering syntax details, optimization techniques, and security best practices can significantly impact development velocity.

Conar.app was born from the recognition that these challenges shouldn’t stand in the way of building great software. By combining AI assistance with a secure, open-source foundation, Conar.app creates a bridge between human intention and database execution, allowing developers to focus on solving business problems rather than wrestling with database intricacies.

What Exactly Is Conar.app?

At its core, Conar.app is an AI-powered open-source application designed to simplify database interactions. The project currently focuses on PostgreSQL, with support for MySQL and MongoDB in development. Concentrating on one database first lets the team deliver depth for PostgreSQL users while building a foundation that can accommodate other database systems.

Unlike many proprietary database tools on the market, Conar.app embraces an open-source philosophy that prioritizes transparency, community contribution, and user trust. This commitment extends beyond just making the code available – it permeates every aspect of the application’s design, particularly in how it handles sensitive database connection information.

The application’s architecture separates concerns effectively: your database connections and credentials remain under your control, while AI assistance operates within strict boundaries to enhance your productivity without compromising security. This thoughtful design ensures that developers can leverage cutting-edge AI capabilities without relinquishing control over their critical infrastructure.

The Pillars of Conar.app: Features That Matter

Security as a Foundation

In the realm of database tools, security isn’t a feature – it’s the foundation upon which everything else is built. Conar.app recognizes this fundamental truth and implements security at multiple levels:

Open-source codebase transparency: By making the entire codebase publicly available, Conar.app invites scrutiny from security experts worldwide. This transparency model, known as “security through transparency,” allows vulnerabilities to be identified and patched quickly by the community. Unlike closed-source alternatives where security claims must be taken on faith, Conar.app’s open nature means anyone can verify exactly how their data is handled.

Encrypted connection strings: Perhaps the most critical security feature is how Conar.app handles database connection information. Rather than storing raw connection strings that could expose your databases to unauthorized access, Conar.app stores them in encrypted form. Someone who obtained the stored connection data would still need the encryption key to read it, which is why protecting that key matters as much as the choice of algorithm.
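The article does not say which cipher or key-management scheme Conar.app uses. As an illustration of encrypted connection strings, here is a minimal sketch that uses AES-256-GCM from Node's built-in `node:crypto` module. The function names, the 32-byte key requirement, and the storage layout (nonce, then authentication tag, then ciphertext, all base64-encoded) are assumptions. This is not Conar.app's implementation.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

// Hypothetical helpers illustrating authenticated encryption of a connection
// string; not taken from the Conar.app codebase.
const IV_LENGTH = 12; // 96-bit nonce, the recommended size for GCM
const TAG_LENGTH = 16; // 128-bit authentication tag (GCM default)

function encryptConnection(plaintext: string, key: Buffer): string {
  if (key.length !== 32) throw new Error("Invalid encryption key length");
  const iv = randomBytes(IV_LENGTH); // fresh nonce every time; never reuse one with the same key
  const cipher = createCipheriv("aes-256-gcm", key, iv);
  const ciphertext = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
  const tag = cipher.getAuthTag();
  // Store nonce, tag and ciphertext together as a single base64 string.
  return Buffer.concat([iv, tag, ciphertext]).toString("base64");
}

function decryptConnection(encoded: string, key: Buffer): string {
  if (key.length !== 32) throw new Error("Invalid encryption key length");
  const data = Buffer.from(encoded, "base64");
  const iv = data.subarray(0, IV_LENGTH);
  const tag = data.subarray(IV_LENGTH, IV_LENGTH + TAG_LENGTH);
  const ciphertext = data.subarray(IV_LENGTH + TAG_LENGTH);
  const decipher = createDecipheriv("aes-256-gcm", key, iv);
  decipher.setAuthTag(tag); // final() throws if the data, tag, or key is wrong
  return Buffer.concat([decipher.update(ciphertext), decipher.final()]).toString("utf8");
}
```

GCM is an authenticated mode. If the ciphertext has been modified or the key is wrong, decryption throws an error instead of returning garbage. The sketch does not cover where the key itself is kept.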

Password protection: Beyond encryption, Conar.app implements additional authentication layers that require password verification before accessing sensitive database connections. This defense-in-depth approach ensures that multiple barriers exist between potential attackers and your database credentials.

These security measures aren’t afterthoughts; they’re designed into the application’s architecture from the ground up. For organizations operating under compliance requirements like GDPR, HIPAA, or SOC 2, this security-first approach makes Conar.app easier to evaluate for use across development teams, although each organization still has to confirm that the tool meets its own obligations.

Multi-Database Support Strategy

While many database tools focus exclusively on a single database system, Conar.app takes a more inclusive approach that acknowledges the reality of modern development environments:

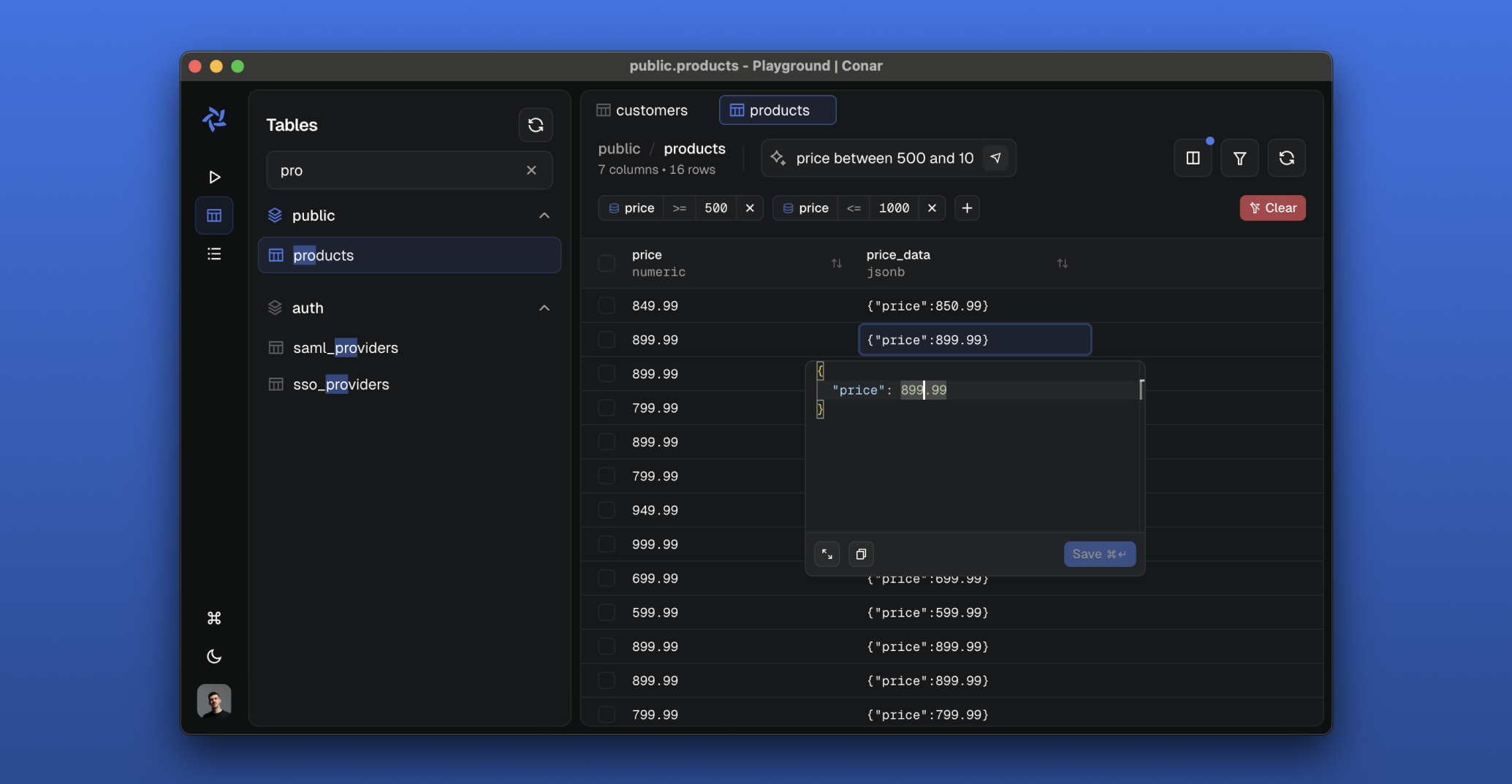

PostgreSQL excellence: As the initial focus, PostgreSQL support in Conar.app is comprehensive and deeply integrated. This includes full support for PostgreSQL’s advanced features like JSONB data types, full-text search capabilities, and complex query optimization. The AI assistant understands PostgreSQL-specific syntax and best practices, providing relevant suggestions that respect the database’s unique characteristics.

MySQL on the horizon: Recognizing MySQL’s widespread adoption, particularly in web applications and enterprise environments, Conar.app has MySQL support actively in development. This will bring the same AI-powered assistance and security features to MySQL users, creating consistency across different database environments.

MongoDB future integration: For teams working with document-based databases, MongoDB support represents the next frontier for Conar.app. This will extend the AI assistance capabilities to NoSQL paradigms, helping developers navigate the different mental models required for document database interactions.

This multi-database strategy reflects Conar.app’s philosophy that developers shouldn’t be limited by their tooling. The ultimate vision is a unified interface that understands the nuances of each database system while providing consistent, intelligent assistance regardless of the underlying technology.
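One way to picture a unified interface over several databases is a small dialect object per engine that holds its syntax differences. The sketch below is hypothetical: the `SqlDialect` interface and the `previewQuery` helper are invented for illustration. It covers only identifier quoting, which genuinely differs between engines. PostgreSQL quotes identifiers with double quotes and MySQL uses backticks. A document database such as MongoDB has no SQL text to generate, so it would need a different abstraction altogether.

```typescript
// Hypothetical dialect abstraction; Conar.app's internal design is not documented here.
interface SqlDialect {
  name: string;
  quoteIdent(identifier: string): string;
}

const postgres: SqlDialect = {
  name: "postgresql",
  // PostgreSQL: double quotes, with embedded double quotes doubled.
  quoteIdent: (id) => `"${id.replace(/"/g, '""')}"`,
};

const mysql: SqlDialect = {
  name: "mysql",
  // MySQL: backticks, with embedded backticks doubled.
  quoteIdent: (id) => "`" + id.replace(/`/g, "``") + "`",
};

// Shared logic stays dialect-agnostic; only quoting is delegated.
function previewQuery(dialect: SqlDialect, table: string, limit: number): string {
  if (!Number.isInteger(limit) || limit < 0) {
    throw new RangeError("limit must be a non-negative integer");
  }
  return `SELECT * FROM ${dialect.quoteIdent(table)} LIMIT ${limit}`;
}
```

Both engines accept `LIMIT`, so only the quoting changes. For example, `previewQuery(postgres, "user", 10)` yields `SELECT * FROM "user" LIMIT 10`.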

AI-Powered Capabilities: Beyond Simple Query Generation

The AI capabilities in Conar.app represent more than just a novelty feature – they fundamentally change how developers interact with databases:

Intelligent SQL assistance: At its most basic level, the AI assistant can help generate SQL queries from natural language descriptions. Conar.app aims to go beyond simple query generation by giving the model context such as schema structures. The goal is suggestions that are syntactically correct and that also account for indexing strategies, join efficiency, and database-specific best practices.

Flexible AI model selection: Different AI models excel at different tasks, and organizations have varying requirements and budgets. Conar.app therefore supports multiple AI providers. Users can switch between models from Anthropic, OpenAI, Google (Gemini), and xAI based on their specific needs, budget constraints, or organizational policies. This flexibility keeps Conar.app adaptable as the AI landscape and user preferences change.

Continuous enhancement pipeline: The “More coming soon” tag on AI features isn’t just marketing speak – it reflects Conar.app’s commitment to evolving alongside AI advancements. The architecture is designed to incorporate new AI capabilities as they emerge, from query optimization suggestions to schema design assistance and anomaly detection in query patterns.

What makes Conar.app’s AI implementation particularly valuable is its focus on augmenting developer expertise rather than replacing it. The AI provides suggestions and explanations, helping developers understand why certain approaches work better than others. This educational component transforms routine database interactions into learning opportunities, gradually improving the developer’s database skills over time.

Technical Architecture: The Engine Behind the Experience

Understanding what powers Conar.app requires examining its technical architecture across multiple layers. This isn’t just academic interest – the technology choices directly impact performance, security, and extensibility.

Frontend Experience Layer

The user interface of Conar.app is built using a modern frontend stack that prioritizes responsiveness, accessibility, and developer experience:

React with TypeScript: The core UI framework leverages React’s component architecture combined with TypeScript’s type safety. This combination ensures that the interface remains consistent and bug-resistant while allowing for rapid iteration. TypeScript’s static typing catches potential errors during development rather than at runtime, significantly improving application stability.

Electron application framework: By building on Electron, Conar.app delivers a native desktop application experience while leveraging web technologies. This approach provides the performance and integration capabilities of desktop applications with the development velocity of web technologies. Users benefit from features like system tray integration, native notifications, and offline functionality without sacrificing the responsiveness expected from modern applications.

TailwindCSS and shadcn/ui: For styling and UI components, Conar.app employs TailwindCSS for utility-first CSS development alongside shadcn/ui for accessible, customizable component primitives. This combination allows for rapid UI development while maintaining visual consistency and accessibility standards. The design system is built with developer experience in mind, making it easier to maintain and extend the interface over time.

Vite build system: The development experience is powered by Vite, which provides lightning-fast hot module replacement and near-instantaneous server startup times. This dramatically improves developer productivity during the development process and translates to optimized production builds that load quickly for end users.

TanStack ecosystem: To handle routing, server-state management, data fetching, form handling, and virtualized lists, Conar.app leverages the TanStack family of libraries (TanStack Query was formerly known as React Query). This includes:

- TanStack Router for type-safe routing
- TanStack Query for server state management
- TanStack Form for form state management
- TanStack Virtual for efficiently rendering large lists

This comprehensive ecosystem ensures that the frontend remains performant even when handling complex database interactions and large datasets.
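List virtualization is the technique behind TanStack Virtual. Only the rows that intersect the viewport are rendered, plus a small overscan buffer above and below. For fixed-height rows, the arithmetic fits in a few lines. The function below is a hypothetical sketch, not TanStack Virtual's API, which also handles variable row heights and scroll measurement.

```typescript
// Hypothetical sketch of windowing math for fixed-height rows.
interface VisibleRange {
  start: number; // first row index to render (inclusive)
  end: number; // last row index to render (exclusive)
}

function visibleRange(
  scrollTop: number,
  viewportHeight: number,
  rowHeight: number,
  rowCount: number,
  overscan = 3, // extra rows above and below to avoid blank flashes while scrolling
): VisibleRange {
  const first = Math.floor(scrollTop / rowHeight);
  const last = Math.ceil((scrollTop + viewportHeight) / rowHeight);
  return {
    start: Math.max(0, first - overscan),
    end: Math.min(rowCount, last + overscan),
  };
}
```

Take 100,000 result rows of 30px each in a 600px viewport, scrolled to the top. `visibleRange(0, 600, 30, 100_000)` returns `{ start: 0, end: 23 }`, so only 23 rows are mounted.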

Backend and Data Layer

Behind the polished interface lies a sophisticated backend architecture designed for performance, security, and scalability:

Arktype validation system: For runtime type checking and validation, Conar.app employs Arktype, which bridges the gap between TypeScript’s compile-time type checking and runtime validation needs. This ensures that data flowing through the application maintains its expected structure at every layer, preventing errors that could compromise security or functionality.

Bun runtime environment: Instead of the traditional Node.js runtime, Conar.app leverages Bun, a JavaScript runtime designed for speed and efficiency. Bun aims for faster startup and lower overhead than Node.js, particularly for I/O-bound work. That can make the application’s own request handling quicker and lighter on resources. The execution time of a SQL query, however, is determined by the database, not by the runtime.

Hono web framework: The API layer is built using Hono, a lightweight and ultrafast web framework optimized for edge environments. Hono’s minimal overhead and excellent TypeScript support make it ideal for building the backend services that power Conar.app’s features. Its middleware architecture allows for clean separation of concerns while maintaining exceptional performance characteristics.

oRPC remote procedure calls: For type-safe remote procedure calls between frontend and backend components, Conar.app uses oRPC, a TypeScript RPC library. oRPC keeps function calls across service boundaries type-safe. This reduces integration errors and improves developer experience when working across the frontend-backend divide.
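The core idea of a type-safe RPC library such as oRPC is that the client's call signatures are derived from the server's procedure definitions. A mismatched input therefore fails at compile time. The dependency-free sketch below imitates that idea with a TypeScript mapped type and a `Proxy`. The `router`, `Client`, and `transport` names are hypothetical, and this is not oRPC's API. A JSON round trip stands in for the network hop.

```typescript
// Hypothetical illustration of type-safe RPC; not oRPC's API.
type Procedure = (input: any) => Promise<unknown>;

// "Server" side: plain async procedures keyed by name.
const router = {
  listTables: async (input: { schema: string }) => [`${input.schema}.users`, `${input.schema}.orders`],
  countRows: async (input: { table: string }) => ({ table: input.table, rows: 42 }),
};
type Router = typeof router;

// Client type derived from the router: same names, inputs, and outputs.
type Client = {
  [K in keyof Router]: (input: Parameters<Router[K]>[0]) => ReturnType<Router[K]>;
};

// Stand-in transport: serialize as an HTTP call would, then dispatch in-process.
async function transport(name: string, input: unknown): Promise<unknown> {
  const wireInput = JSON.parse(JSON.stringify(input));
  const handler = (router as Record<string, Procedure>)[name];
  if (!handler) throw new Error(`Unknown procedure: ${name}`);
  return JSON.parse(JSON.stringify(await handler(wireInput)));
}

function createClient(): Client {
  return new Proxy({} as Client, {
    get: (_target, name) => (input: unknown) => transport(String(name), input),
  });
}
```

The input type flows from `router` to `Client`, so a call like `createClient().listTables({ schema: 1 })` would be rejected by the compiler.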

Drizzle ORM: Database interactions are handled through Drizzle ORM, a modern TypeScript ORM that provides type-safe database queries while maintaining performance. Unlike heavier ORMs that can introduce significant overhead, Drizzle strikes a balance between developer ergonomics and runtime efficiency. Queries are type-checked against a schema defined in TypeScript, and migrations are generated from that same definition. Together these help keep the code and the real database in sync, reducing (though not eliminating) runtime errors caused by schema drift.

Better Auth authentication system: Security extends to user authentication through the Better Auth system, which provides robust identity management capabilities while maintaining simplicity. This system handles password hashing, session management, and authentication flows with security best practices built-in, reducing the risk of common authentication vulnerabilities.
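As a generic illustration of password hashing done with security best practices, here is salted hashing with scrypt from Node's `node:crypto`, verified with a constant-time comparison. The key length, the `salt:hash` storage format, and the function names are assumptions. This is not Better Auth's code or configuration.

```typescript
import { randomBytes, scryptSync, timingSafeEqual } from "node:crypto";

// Generic sketch of salted password hashing; parameters are illustrative.
const KEY_LENGTH = 64;

function hashPassword(password: string): string {
  const salt = randomBytes(16); // unique per password, defeats precomputed tables
  const hash = scryptSync(password.normalize("NFKC"), salt, KEY_LENGTH);
  return `${salt.toString("hex")}:${hash.toString("hex")}`;
}

function verifyPassword(password: string, stored: string): boolean {
  const [saltHex, hashHex] = stored.split(":");
  if (!saltHex || !hashHex) return false;
  const expected = Buffer.from(hashHex, "hex");
  if (expected.length !== KEY_LENGTH) return false;
  const actual = scryptSync(password.normalize("NFKC"), Buffer.from(saltHex, "hex"), KEY_LENGTH);
  // Constant-time comparison avoids leaking how many bytes matched.
  return timingSafeEqual(actual, expected);
}
```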

AI Integration Layer

The AI capabilities of Conar.app are powered by a sophisticated integration layer that abstracts away the complexities of working with multiple AI providers:

AI SDK abstraction: Rather than tightly coupling to a single AI provider, Conar.app implements an SDK layer that normalizes interactions across different AI models. This abstraction allows the application to switch between providers without disrupting the user experience or requiring significant code changes. The SDK handles provider-specific nuances while exposing a consistent interface to the rest of the application.

Multi-provider support: The integration supports models from Anthropic, OpenAI, Google (Gemini), and xAI, each with its own strengths and characteristics. This diversity allows users to select the AI provider that best matches their needs, whether that’s cost efficiency, response quality, specific domain expertise, or organizational compliance requirements.
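The provider abstraction described above can be sketched as a single interface that every vendor adapter implements, with application code depending only on that interface. Everything below is hypothetical: `ModelProvider`, `registry`, `generateSql`, and the provider IDs. It is not the API of the AI SDK or of Conar.app. Stub providers replace real network calls so that the sketch runs offline.

```typescript
// Hypothetical provider abstraction; not the AI SDK's API or Conar.app's code.
type ProviderId = "anthropic" | "openai" | "google" | "xai";

interface CompletionRequest {
  system: string;
  prompt: string;
}

// The one interface the rest of the application depends on.
interface ModelProvider {
  id: ProviderId;
  complete(req: CompletionRequest): Promise<string>;
}

// A real adapter would translate the request into its vendor's API format and
// call the network; this stub just echoes so the sketch runs offline.
function stubProvider(id: ProviderId): ModelProvider {
  return { id, complete: async (req) => `[${id}] ${req.prompt}` };
}

const providerIds: ProviderId[] = ["anthropic", "openai", "google", "xai"];
const registry = new Map<ProviderId, ModelProvider>(
  providerIds.map((id): [ProviderId, ModelProvider] => [id, stubProvider(id)]),
);

// Switching vendors is a lookup; calling code does not change.
async function generateSql(providerId: ProviderId, question: string): Promise<string> {
  const provider = registry.get(providerId);
  if (!provider) throw new Error(`Provider not configured: ${providerId}`);
  return provider.complete({
    system: "Translate the user's request into a PostgreSQL query.",
    prompt: question,
  });
}
```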

Context management: Perhaps most importantly, the AI integration includes sophisticated context management that ensures AI models have appropriate information to generate relevant SQL suggestions without exposing sensitive data. This context management system carefully curates schema information, query history, and user preferences to provide the AI with enough context to be helpful while maintaining strict data boundaries.
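Here is a minimal sketch of the data-boundary idea. Only schema metadata (table names, column names, types, and nullability) is serialized into the prompt. The connection string and row data are never included. The `Table`/`Column` shapes and the `schemaContext` function are hypothetical. In PostgreSQL, metadata of this kind can be read from the `information_schema.columns` view.

```typescript
// Hypothetical sketch: build prompt context from schema metadata only.
interface Column {
  name: string;
  type: string;
  nullable: boolean;
}

interface Table {
  schema: string;
  name: string;
  columns: Column[];
}

function schemaContext(tables: Table[], maxTables = 50): string {
  return tables
    .slice(0, maxTables) // bound prompt size for very large schemas
    .map((t) => {
      const cols = t.columns
        .map((c) => `${c.name} ${c.type}${c.nullable ? "" : " NOT NULL"}`)
        .join(", ");
      return `${t.schema}.${t.name}(${cols})`;
    })
    .join("\n");
}
```

For a `users` table with a non-null `id uuid` column and a nullable `created_at timestamptz` column, the context line is `public.users(id uuid NOT NULL, created_at timestamptz)`.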

Infrastructure and Service Integration

Conar.app’s architecture extends beyond just application code to include critical infrastructure components:

Supabase backend services: For managed backend functionality, Conar.app leverages Supabase – an open-source Firebase alternative built on PostgreSQL. This provides reliable database storage, authentication services, and real-time capabilities without requiring the Conar.app team to build and maintain these complex systems themselves. The Supabase integration handles user data storage, authentication tokens, and synchronization across devices.

Railway deployment platform: The application is deployed and managed using Railway, which provides infrastructure automation and scaling capabilities. This allows the Conar.app team to focus on application development rather than infrastructure management, while ensuring the service remains available and performant as usage grows.

PostHog analytics: To understand usage patterns and improve the product based on real user behavior, Conar.app integrates PostHog for product analytics. This data-driven approach helps the development team prioritize features and fixes based on actual user needs rather than assumptions. Importantly, this analytics integration is designed with privacy in mind, collecting only anonymized usage data necessary for product improvement.

Loops communication system: For user notifications, onboarding communications, and feature announcements, Conar.app employs Loops. This system ensures that users stay informed about important updates while maintaining control over their communication preferences. The integration respects user privacy by allowing granular control over notification types and frequencies.

This comprehensive architecture – spanning frontend experience, backend services, AI integration, and infrastructure – creates a cohesive system where each component plays a specific role in delivering a seamless database interaction experience. The thoughtful selection of technologies reflects Conar.app’s commitment to performance, security, and developer experience at every level.

Setting Up Conar.app for Development: A Comprehensive Guide

For developers interested in contributing to Conar.app or deploying customized versions for their organizations, understanding the development setup process is essential. The project has been designed with developer experience in mind, but certain prerequisites and steps must be followed precisely to ensure a smooth setup process.

Prerequisites and Environment Preparation

Before beginning the installation process, ensure your development environment meets the following requirements:

- A modern operating system (macOS, Windows 10/11, or Linux)
- Node.js version 18 or higher
- pnpm package manager (preferred over npm or yarn for this project)
- Docker and Docker Compose installed and properly configured
- Git for version control operations
- At least 4GB of available RAM (8GB recommended for comfortable development)
- 2GB of free disk space for dependencies and Docker images

These prerequisites form the foundation upon which Conar.app will be built and run. The specific requirement for pnpm rather than other package managers stems from the project’s optimization for pnpm’s efficient dependency management and disk space utilization.

Step 1: Package Installation with pnpm

The first step in setting up Conar.app locally involves installing all required dependencies using pnpm:

```bash
pnpm install
```

This command initiates the process of downloading and installing all JavaScript and TypeScript dependencies specified in the project’s package.json files. The installation process typically takes 2-5 minutes depending on your internet connection speed and system performance.

During installation, pnpm keeps each package version once in a global content-addressable store. It hard-links files from that store into the project and uses symlinks to build the node_modules tree. This significantly reduces disk usage compared to traditional npm installations. It also speeds up later installations, because packages already in the store don’t need to be downloaded again.

The installation process includes both runtime dependencies needed for the application to function and development dependencies required for building, testing, and linting the codebase. This comprehensive dependency installation ensures that all tools and libraries necessary for development are available immediately after completion.

Step 2: Starting Database Services with Docker Compose

Conar.app relies on PostgreSQL for persistent data storage and Redis for caching and session management. Rather than requiring developers to manually install and configure these database systems, the project leverages Docker Compose to containerize these dependencies:

```bash
pnpm run docker:start
```

Executing this command triggers a series of automated steps:

- Docker Compose reads the docker-compose.yml configuration file
- It downloads the official PostgreSQL and Redis Docker images if they are not already present locally
- It creates and starts two containers, one for PostgreSQL and one for Redis
- It configures networking between the containers and maps ports to the host machine
- It initializes the PostgreSQL database with necessary extensions and configurations
- It sets up persistent volumes so that data survives container restarts

This Docker-based approach provides several significant advantages:

- Environment consistency: Every developer works with identical database versions and configurations
- Isolation: Database services run in isolated containers, preventing conflicts with other applications
- Reproducibility: The exact database environment can be recreated on any machine with Docker installed
- Simplified cleanup: Removing the containers together with their volumes (for example, with `docker compose down -v`) eliminates all traces of the database setup

The PostgreSQL container is configured to listen on port 5432 (the standard PostgreSQL port) on the host machine, while Redis listens on port 6379. These ports can be modified in the docker-compose.yml file if they conflict with existing services on your machine.
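Before running `pnpm run docker:start`, it can help to check whether port 5432 or 6379 is already taken. The hypothetical helper below is not part of the Conar.app scripts. It tries to bind the port with Node's `node:net` module and reports `false` on `EADDRINUSE`. Treat it as a heuristic: on some platforms, a service bound to one specific interface may not block a bind on all interfaces.

```typescript
import { createServer } from "node:net";

// Hypothetical helper: resolves true if the port can be bound, false if it is in use.
function isPortFree(port: number): Promise<boolean> {
  return new Promise((resolve, reject) => {
    const server = createServer();
    server.once("error", (err: Error) => {
      if ((err as NodeJS.ErrnoException).code === "EADDRINUSE") resolve(false);
      else reject(err); // e.g. EACCES for privileged ports
    });
    // Bound successfully: release the port immediately and report it as free.
    server.once("listening", () => server.close(() => resolve(true)));
    server.listen(port); // no host given: binds on all interfaces
  });
}
```

Usage: `isPortFree(5432).then((free) => console.log(free ? "5432 is free" : "5432 is in use"));`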

Step 3: Database Schema Preparation with Drizzle Migrations

After starting the database services, the next critical step involves preparing the database schema. Conar.app uses Drizzle ORM for database operations, which includes a migration system for managing schema changes over time:

```bash
pnpm run drizzle:migrate
```

This command executes the database migration scripts that define the application’s data structure. The migration process performs several important operations:

- Schema creation: It creates all necessary tables, including users, connections, query history, and settings
- Index establishment: It sets up database indexes to optimize query performance for common operations
- Relationship definition: It establishes foreign key relationships between related tables
- Initial data seeding: Where the migrations include it, it inserts essential configuration data and default values needed for application startup
- Version tracking: It records each applied migration in a dedicated table to prevent duplicate executions

The migration system follows a versioned approach: each schema change is captured in its own migration file, which Drizzle Kit prefixes with a sequence number by default. The database schema can then evolve alongside the application code, and the files provide a clear audit trail of structural changes.
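The bookkeeping behind versioned migrations is simple enough to sketch in memory. Sort migrations by their numeric prefix, skip any whose ID is already recorded, and record each one after it runs. The `Migration` shape and the `migrate` function are hypothetical, and a `Set` stands in for the tracking table. Drizzle Kit's actual implementation differs in detail.

```typescript
// Hypothetical in-memory sketch of versioned migration bookkeeping.
interface Migration {
  id: string; // e.g. "0000_init": the zero-padded prefix makes string order match creation order
  sql: string;
}

function pendingMigrations(all: Migration[], applied: Set<string>): Migration[] {
  return [...all]
    .sort((a, b) => (a.id < b.id ? -1 : a.id > b.id ? 1 : 0))
    .filter((m) => !applied.has(m.id));
}

function migrate(all: Migration[], applied: Set<string>, execute: (sql: string) => void): string[] {
  const ran: string[] = [];
  for (const m of pendingMigrations(all, applied)) {
    execute(m.sql); // in a real database, this and the next step share a transaction
    applied.add(m.id); // stands in for inserting a row into the tracking table
    ran.push(m.id);
  }
  return ran;
}
```

A second call with the same `applied` set runs nothing, which is exactly the guarantee against duplicate executions.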

Understanding the database schema is crucial for developers contributing to Conar.app, as it defines the foundation upon which all application features are built. The migrations ensure that every development environment, testing environment, and production deployment shares an identical database structure, eliminating one of the most common sources of environment-specific bugs.

Step 4: Launching the Development Environment

With dependencies installed, database services running, and schema prepared, the final step involves launching the development servers that power Conar.app’s frontend and backend:

```bash
pnpm run dev
```

This command utilizes Turbo, a high-performance build system, to orchestrate the simultaneous startup of multiple development servers:

- Frontend development server: Serves the React application with hot module replacement (HMR) enabled, so code changes appear in the browser without full page reloads
- Backend API server: Starts the Hono-based API server with live reloading, automatically restarting when backend code changes are detected
- Type watching processes: Monitors TypeScript files for type errors and provides real-time feedback during development

The development environment is configured to run on specific ports by default:

- Frontend application: http://localhost:3000
- Backend API: http://localhost:3001

These ports can be customized through environment variables if needed. The servers are configured with CORS (Cross-Origin Resource Sharing) settings that allow the frontend to communicate seamlessly with the backend during development.

The development experience is further enhanced by integrated tooling:

- ESLint for code quality enforcement
- Prettier for consistent code formatting
- TypeScript compiler for type checking
- Vitest for unit testing

This comprehensive development setup creates an environment where developers can iterate quickly while maintaining code quality and catching errors early in the development process. The hot reloading capabilities significantly reduce the feedback loop between code changes and visible results, accelerating the development workflow.

Troubleshooting Common Setup Issues

Despite careful design, development environment setup can sometimes encounter obstacles. Common issues and their solutions include:

Docker permission errors: If you encounter permission issues when starting Docker containers, ensure your user account has proper permissions to interact with the Docker daemon. On Linux systems, this typically involves adding your user to the docker group:

```bash
sudo usermod -aG docker $USER
```

After making this change, you’ll need to log out and back in for it to take effect.

Port conflicts: If the default ports (5432 for PostgreSQL, 6379 for Redis, 3000/3001 for application servers) are already in use by other applications, you can modify the port mappings in docker-compose.yml and the application configuration files. For example, to change the PostgreSQL port mapping:

```yaml
services:
  postgres:
    ports:
      - "5433:5432" # Maps host port 5433 to container port 5432
```

Insufficient memory: Docker containers and the development servers require adequate memory to function properly. If you experience crashes or slow performance, consider closing other memory-intensive applications or increasing Docker’s memory allocation in Docker Desktop settings.

Network connectivity issues: The initial pnpm install requires downloading numerous packages from the npm registry. If you experience timeouts or failures, check your network connection or configure a registry mirror if you’re in a region with restricted access to npm packages.

Database migration failures: If the migration command fails, check that the PostgreSQL container is running properly and accessible. You can verify container status with:

```bash
docker ps
```

Look for the postgres container in the list and ensure its status shows as “Up.”
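Migrations often fail simply because they start before PostgreSQL accepts connections. The hypothetical wait-for-port helper below uses only Node's `node:net` and polls until a TCP connection to the port succeeds. An open port only shows that something is listening, not that PostgreSQL has finished initializing. `docker ps` or PostgreSQL's `pg_isready` utility remain the more reliable checks.

```typescript
import { connect } from "node:net";

// Hypothetical helper: poll host:port until a TCP connect succeeds or the timeout passes.
async function waitForPort(
  port: number,
  host = "127.0.0.1",
  timeoutMs = 30_000,
  intervalMs = 500,
): Promise<void> {
  const deadline = Date.now() + timeoutMs;
  while (true) {
    const reachable = await new Promise<boolean>((resolve) => {
      const socket = connect({ port, host });
      socket.once("connect", () => { socket.destroy(); resolve(true); });
      socket.once("error", () => { socket.destroy(); resolve(false); }); // e.g. ECONNREFUSED
    });
    if (reachable) return;
    if (Date.now() >= deadline) {
      throw new Error(`Nothing listening on ${host}:${port} after ${timeoutMs} ms`);
    }
    await new Promise((r) => setTimeout(r, intervalMs));
  }
}
```

A setup script could `await waitForPort(5432)` before invoking `pnpm run drizzle:migrate`.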

These troubleshooting steps address the most common obstacles encountered during setup. The Conar.app community and documentation provide additional support for more complex issues.

Quality Assurance: Testing Strategies in Conar.app

Maintaining high software quality requires systematic testing approaches that catch regressions before they reach users. Conar.app implements a multi-layered testing strategy that covers different aspects of the application through specialized testing methodologies.

Unit Testing: Validating Individual Components

Unit tests form the foundation of Conar.app’s testing strategy, focusing on verifying the correctness of individual functions, components, and modules in isolation:

```bash
pnpm run test:unit
```

This command executes the unit test suite using Vitest, a Vite-native testing framework that reuses Vite’s configuration and transform pipeline. Vitest runs test files in parallel, and its watch mode reruns only the tests affected by a change. That makes it practical to run the suite frequently during development.

The unit tests in Conar.app follow several important principles:

Isolation: Each test focuses on a single unit of functionality without dependencies on external systems. Database calls, network requests, and file operations are mocked or stubbed to ensure tests run quickly and deterministically.
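The isolation principle can be shown without any test framework. The function under test receives its query function as a parameter, so a test can pass a deterministic in-memory fake. The names (`QueryFn`, `countConnections`, `fakeQuery`, and the `connections` table) are hypothetical. In a Vitest suite, `vi.fn()` could play the role of `fakeQuery`.

```typescript
// Hypothetical sketch of dependency injection for isolated unit tests.
type QueryFn = (sql: string, params: unknown[]) => Promise<{ rows: Record<string, unknown>[] }>;

// Code under test: depends only on the injected QueryFn, not on a live database.
async function countConnections(query: QueryFn, userId: string): Promise<number> {
  const { rows } = await query("SELECT count(*) AS n FROM connections WHERE user_id = $1", [userId]);
  // PostgreSQL count(*) is bigint; drivers such as node-postgres return it as a string.
  return Number(rows[0]?.n ?? 0);
}

// Fake that records each call and returns canned rows: no network, fully deterministic.
function fakeQuery(result: Record<string, unknown>[]) {
  const calls: { sql: string; params: unknown[] }[] = [];
  const fn: QueryFn = async (sql, params) => {
    calls.push({ sql, params });
    return { rows: result };
  };
  return { fn, calls };
}
```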

Comprehensive coverage: Critical paths through the application logic have high test coverage, particularly for security-sensitive functions, complex algorithms, and core business logic. The test suite includes edge case testing to verify behavior under unusual or unexpected conditions.

Behavior verification: Rather than testing implementation details, unit tests focus on verifying observable behavior and outputs. This approach makes tests more resilient to internal refactoring while ensuring the external contract remains intact.

Type safety validation: With TypeScript at the core of the codebase, many unit tests verify that type contracts are maintained across module boundaries. This ensures that the type safety promised by TypeScript actually manifests in runtime behavior.

A typical unit test in Conar.app might validate the encryption function used for database connection strings:

```typescript
import { describe, expect, it } from 'vitest';
// Illustrative: assumes a './security' module exporting encryptConnection(plaintext, key)
import { encryptConnection } from './security';

describe('security/encryption', () => {
  it('should encrypt connection strings with valid key', () => {
    const testConnection = 'postgres://user:pass@localhost:5432/db';
    // 32 ASCII characters, i.e. a 32-byte key
    const encryptionKey = '0123456789abcdef0123456789abcdef';

    const encrypted = encryptConnection(testConnection, encryptionKey);

    expect(encrypted).toBeTypeOf('string');
    expect(encrypted).not.toEqual(testConnection);
    expect(encrypted.length).toBeGreaterThan(0);
  });

  it('should throw error with invalid encryption key', () => {
    const testConnection = 'postgres://user:pass@localhost:5432/db';
    const invalidKey = 'short-key';

    expect(() => encryptConnection(testConnection, invalidKey))
      .toThrow('Invalid encryption key length');
  });
});
```

This example demonstrates how unit tests verify both success cases and failure conditions, ensuring the function behaves correctly across various inputs. The comprehensive unit test suite provides confidence that individual components work as expected before they’re integrated into the larger system.

End-to-End Testing: Validating User Workflows

While unit tests ensure individual components function correctly, end-to-end (E2E) tests verify that the entire application works together as expected from the user’s perspective. Conar.app’s E2E testing strategy simulates real user interactions with the complete application stack.

Before executing E2E tests, a dedicated test environment must be prepared:

```bash
pnpm run test:start
```

This command starts a specialized test server configured with test-specific settings. The tests connect to the database at postgresql://postgres:postgres@localhost:5432/conar. That is the same local port the Docker Compose PostgreSQL container uses, so test data lives on your local development server rather than on a separate one. It is, however, kept well away from any production data.
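E2E fixtures create and drop data, so a cheap safeguard is to parse the test connection string and refuse to proceed unless it points at a local host. The `assertLocalTestDatabase` helper below is a hypothetical sketch that uses the standard WHATWG `URL` parser available in Node. The accepted host list is an assumption; adjust it for your setup.

```typescript
// Hypothetical guard: only allow E2E setup against a local PostgreSQL database.
const LOCAL_HOSTS = new Set(["localhost", "127.0.0.1", "[::1]"]);

interface TestDbInfo {
  host: string;
  port: number;
  database: string;
}

function assertLocalTestDatabase(connectionString: string): TestDbInfo {
  const url = new URL(connectionString);
  if (url.protocol !== "postgresql:" && url.protocol !== "postgres:") {
    throw new Error(`Unexpected protocol: ${url.protocol}`);
  }
  if (!LOCAL_HOSTS.has(url.hostname)) {
    throw new Error(`Refusing to run E2E tests against non-local host: ${url.hostname}`);
  }
  return {
    host: url.hostname,
    port: Number(url.port || 5432), // default PostgreSQL port when none is given
    database: url.pathname.slice(1), // strip the leading "/"
  };
}
```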

With the test environment running, the E2E test suite can be executed:

```bash
pnpm run test:e2e
```

These tests typically use a browser automation framework like Playwright or Cypress to simulate user interactions such as:

- Logging into the application
- Creating and managing database connections
- Generating SQL queries with AI assistance
- Executing queries against test databases
- Reviewing query results and execution plans
- Managing application settings and preferences

A typical E2E test might verify the complete workflow of connecting to a database and generating a query:

```typescript
import { test, expect } from '@playwright/test';

// Illustrative only: assumes `baseURL` is set in playwright.config and that the
// selectors below exist in the UI; they are placeholders, not Conar.app's markup.
test('user can connect to database and generate query with AI', async ({ page }) => {
  // Log in to the application
  await page.goto('/login');
  await page.fill('#email', 'test@example.com');
  await page.fill('#password', 'securepassword123');
  await page.click('button[type="submit"]');

  // Create a new database connection
  await page.click('button:has-text("New Connection")');
  await page.fill('#connection-name', 'Test PostgreSQL');
  await page.fill('#connection-string', 'postgresql://test:test@localhost:5432/testdb');
  await page.click('button:has-text("Save Connection")');

  // Verify the connection appears in the list
  await expect(page.getByText('Test PostgreSQL')).toBeVisible();

  // Use AI to generate a query
  await page.getByText('Test PostgreSQL').click();
  await page.fill('#ai-prompt', 'Show all users created in the last month');
  await page.click('button:has-text("Generate Query")');

  // Verify SQL was generated (web-first assertions retry until the text appears)
  const editor = page.locator('#sql-editor');
  await expect(editor).toContainText('SELECT');
  await expect(editor).toContainText('FROM users');
  await expect(editor).toContainText('created_at');

  // Execute the query
  await page.click('button:has-text("Execute")');

  // Verify results are displayed with at least one row
  await expect(page.locator('.query-results')).toBeVisible();
  await expect(page.locator('.query-results table tr').first()).toBeVisible();
});
```

This example illustrates how E2E tests validate the complete user journey, ensuring that features work together correctly in realistic scenarios. These tests catch integration issues that unit tests cannot detect, such as UI rendering problems, API communication failures, or database permission issues.

The E2E test environment is designed to be self-contained and disposable:

- Test databases are created and destroyed with each test run
- Authentication tokens are generated specifically for testing
- No persistent data remains after tests complete
- Parallel test execution is supported for faster feedback
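Parallel execution only stays isolated if each worker gets its own database. A minimal sketch of one way to arrange that derives a unique database name per worker and per run from the base connection string. The workerDatabaseUrl helper and the runId parameter are hypothetical; in Playwright the worker number is available as testInfo.workerIndex, and a CI run ID could serve as runId:

```typescript
// Hypothetical helper: one database per test worker per run, so parallel
// workers never share state and every run starts from an empty database.
function workerDatabaseUrl(baseUrl: string, workerIndex: number, runId: string): string {
  const url = new URL(baseUrl);
  const baseName = url.pathname.replace(/^\//, '') || 'postgres';
  // Keep the name a plain, unquoted PostgreSQL identifier: lowercase letters, digits, underscores.
  const safeRunId = runId.toLowerCase().replace(/[^a-z0-9_]/g, '_');
  url.pathname = `/${baseName}_${safeRunId}_w${workerIndex}`;
  return url.toString();
}

const base = 'postgresql://postgres:postgres@localhost:5432/conar';
console.log(workerDatabaseUrl(base, 2, 'Run-42'));
// postgresql://postgres:postgres@localhost:5432/conar_run_42_w2
```

A global setup step would then issue CREATE DATABASE for each derived name and a teardown step DROP DATABASE. PostgreSQL truncates identifiers longer than 63 bytes, so a real implementation should keep runId short.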

This isolation ensures that tests remain reliable and repeatable regardless of environment or execution order. The comprehensive testing strategy – combining unit tests for component validation and E2E tests for workflow verification – provides confidence that Conar.app functions correctly at every level before changes are released to users.

The Open Source Philosophy: Licensing and Community

Conar.app’s identity as an open-source project extends far beyond simply making the code publicly available. The choice of the Apache-2.0 License reflects a deliberate philosophy about software freedom, community collaboration, and sustainable development practices.

Understanding the Apache-2.0 License

The Apache License 2.0 is a permissive open-source license that provides specific rights and protections to users, contributors, and the original creators. When Conar.app states it’s “licensed under the Apache-2.0 License — see the LICENSE file for details,” it’s making a commitment to specific principles of software freedom:

Freedom to use: Anyone can use Conar.app for any purpose – personal projects, educational institutions, commercial enterprises, or government agencies – without requiring permission or paying licensing fees. This freedom extends to using the software as part of larger applications or integrated systems.

Freedom to modify: Users can adapt Conar.app to meet their specific needs by modifying the source code. This might include adding organization-specific security requirements, integrating with internal systems, or customizing the user interface to match brand guidelines.

Freedom to distribute: Modified versions of Conar.app can be shared with others, whether within an organization or publicly. This enables teams to create specialized distributions tailored to their workflows while still benefiting from the core application.

Patent protection: The Apache-2.0 License includes an explicit patent grant. Each contributor licenses any of their patents that their contribution necessarily infringes, and that license terminates for anyone who files patent litigation claiming the work infringes a patent. This provision is particularly important for enterprise adoption, where patent risk can be a significant barrier to open-source adoption.

These freedoms come with specific responsibilities:

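- Copyright, patent, trademark, and attribution notices from the source must be retained in all copies
- Modified files must carry prominent notices stating that they were changed
- A copy of the license must be included in distributions, along with the NOTICE file if the project provides one
- The license grants no rights to the project's trademarks, so its name and logo cannot be used to imply endorsement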

The Apache-2.0 License strikes a thoughtful balance between permissiveness and protection. Unlike copyleft licenses such as the GPL, it doesn't require derivative works to be released as open source, so the code can be incorporated into proprietary commercial products. Unlike shorter permissive licenses such as MIT, it includes an explicit patent grant and explicit terms for how contributions are licensed.

Community Contributions and Sustainable Development

The open-source nature of Conar.app creates opportunities for community involvement that extend beyond just code contributions:

Code contributions: Developers can submit pull requests to fix bugs, add features, or improve documentation. The project likely maintains contribution guidelines that outline coding standards, testing requirements, and the review process for proposed changes.

Issue reporting: Users can identify bugs, request features, or suggest improvements through the project’s issue tracking system. Well-documented issues with reproduction steps significantly accelerate the resolution process.

Documentation improvement: Technical writing skills are as valuable as coding abilities in open-source projects. Improving documentation, tutorials, and user guides makes the project more accessible to newcomers.

Community support: Experienced users can help answer questions from newcomers, share best practices, and create learning resources that benefit the entire community.

Security vulnerability reporting: The open nature of the codebase allows security researchers to identify and responsibly disclose vulnerabilities before they can be exploited.

This collaborative model creates a virtuous cycle: more users lead to more contributors, which improves the software, attracting even more users. However, sustainable open-source development requires careful management to prevent burnout of core maintainers.

Conar.app’s architecture choices reflect this sustainability focus:

- The modular design allows contributors to work on isolated components
- Comprehensive test coverage reduces the risk of introducing regressions
- Clear documentation lowers the barrier to entry for new contributors
- Modern tooling streamlines the development workflow

The “Built with ❤️” tagline at the end of the README isn’t mere decoration – it acknowledges the human effort and passion that drives open-source projects. This emotional investment is what transforms code repositories into communities and tools into movements.

For organizations considering adopting Conar.app, the open-source license provides assurance against vendor lock-in. Even if the original development team were to stop maintaining the project, the community could fork the codebase and continue development. This longevity protection is particularly valuable for mission-critical tools like database clients.

Frequently Asked Questions About Conar.app

How does Conar.app handle database security compared to other tools?

Security in Conar.app is implemented at multiple levels rather than relying on a single protection mechanism. Unlike many database tools that store connection strings in plaintext configuration files, Conar.app encrypts all connection information before storage. The open-source nature means this encryption implementation can be independently verified by security experts rather than taking vendor claims at face value. Additionally, the password protection feature adds an operational security layer that requires authentication before accessing sensitive connections.
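To make "encrypts all connection information before storage" concrete, here is a minimal sketch of authenticated encryption for a connection string using AES-256-GCM from Node's built-in crypto module. It illustrates the general technique under stated assumptions and is not Conar.app's actual scheme: the function names and record shape are hypothetical, and key management (for example, deriving the key from a user password with scryptSync) is left out.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from 'node:crypto';

// Hypothetical shape of a stored, encrypted connection record.
interface EncryptedConnection {
  iv: string; // base64 nonce, unique per encryption
  tag: string; // base64 GCM authentication tag
  data: string; // base64 ciphertext
}

function encryptConnectionString(plaintext: string, key: Buffer): EncryptedConnection {
  if (key.length !== 32) throw new Error('AES-256-GCM requires a 32-byte key');
  const iv = randomBytes(12); // 96-bit nonce, the recommended size for GCM
  const cipher = createCipheriv('aes-256-gcm', key, iv);
  const data = Buffer.concat([cipher.update(plaintext, 'utf8'), cipher.final()]);
  return {
    iv: iv.toString('base64'),
    tag: cipher.getAuthTag().toString('base64'),
    data: data.toString('base64'),
  };
}

function decryptConnectionString(record: EncryptedConnection, key: Buffer): string {
  const decipher = createDecipheriv('aes-256-gcm', key, Buffer.from(record.iv, 'base64'));
  decipher.setAuthTag(Buffer.from(record.tag, 'base64'));
  // final() throws if the ciphertext, nonce, or tag was altered
  const plain = Buffer.concat([decipher.update(Buffer.from(record.data, 'base64')), decipher.final()]);
  return plain.toString('utf8');
}

const key = randomBytes(32); // in practice: a managed or password-derived key
const original = 'postgresql://app:s3cret@db.internal:5432/app';
const stored = encryptConnectionString(original, key);
console.log(decryptConnectionString(stored, key) === original); // true
```

GCM is chosen here because it authenticates as well as encrypts: a tampered record fails to decrypt instead of yielding a silently corrupted connection string.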

The architecture separates concerns: when you use the AI features, your database credentials are not sent to the AI provider. The AI assistance works from schema metadata and query patterns rather than your raw data. This defense-in-depth approach means that compromising one security layer doesn't automatically grant access to your databases.

Can I use Conar.app without connecting to the cloud?

The current architecture of Conar.app involves storing encrypted connection information in the cloud service, which enables features like cross-device synchronization and AI assistance. However, the open-source nature means technically sophisticated users could potentially modify the application to store connections locally only. The project’s GitHub repository would be the place to discuss or contribute such functionality.

For users with strict air-gapped requirements, the recommended approach would be to evaluate whether the security model meets compliance needs or to contribute code that adds local-only storage options while maintaining the application’s security guarantees.

How does the AI assistance actually work when generating SQL queries?

The AI assistance in Conar.app operates by analyzing your database schema (table structures, relationships, indexes) along with your natural language description of what you want to accomplish. Rather than sending your actual data to AI providers, the system provides the AI model with metadata about your database structure and the context of your request.

When you ask the AI to “show me recent user signups,” the system translates this into relevant context for the AI model: the tables available, their columns, relationships between tables, and examples of appropriate SQL syntax for your specific database type (PostgreSQL in the current implementation). The AI then generates SQL that conforms to your database structure while accomplishing your stated goal.
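The schema-to-prompt step described above could look roughly like the following sketch. The types and the buildSchemaContext function are hypothetical, and Conar.app's actual prompt format may differ; the point is that only structural metadata (table names, column types, foreign keys) reaches the model, never row data or credentials. In PostgreSQL, this metadata can be read from the standard information_schema.columns view.

```typescript
// Hypothetical shapes for the metadata a client might collect.
interface Column { name: string; type: string; nullable: boolean; }
interface ForeignKey { column: string; references: string; } // e.g. "users.id"
interface Table { name: string; columns: Column[]; foreignKeys?: ForeignKey[]; }

// Builds a text context from schema metadata only: no rows, no connection details.
function buildSchemaContext(tables: Table[], request: string, dialect = 'PostgreSQL'): string {
  const lines: string[] = [`Dialect: ${dialect}`, 'Schema:'];
  for (const table of tables) {
    const cols = table.columns
      .map((c) => `${c.name} ${c.type}${c.nullable ? '' : ' NOT NULL'}`)
      .join(', ');
    lines.push(`- ${table.name}(${cols})`);
    for (const fk of table.foreignKeys ?? []) {
      lines.push(`  ${table.name}.${fk.column} -> ${fk.references}`);
    }
  }
  lines.push(`Request: ${request}`, 'Respond with a single SQL query.');
  return lines.join('\n');
}

const context = buildSchemaContext(
  [
    {
      name: 'users',
      columns: [
        { name: 'id', type: 'integer', nullable: false },
        { name: 'created_at', type: 'timestamptz', nullable: false },
      ],
    },
    {
      name: 'orders',
      columns: [
        { name: 'id', type: 'integer', nullable: false },
        { name: 'user_id', type: 'integer', nullable: true },
      ],
      foreignKeys: [{ column: 'user_id', references: 'users.id' }],
    },
  ],
  'show me recent user signups',
);
console.log(context);
```

Stating the dialect explicitly matters because the same request translates differently across systems, for example date arithmetic in PostgreSQL versus MySQL.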

The ability to switch between AI providers (Anthropic, OpenAI, Gemini, xAI) lets you choose based on quality, cost, or organizational policies. This flexibility is particularly valuable as AI capabilities evolve rapidly: new providers can be added without significant application changes.
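Adding providers without touching the rest of the application usually comes down to coding against a small interface. A minimal sketch, assuming hypothetical ModelProvider and ProviderRegistry types (not Conar.app's actual API), with a stub standing in for real vendor SDK calls:

```typescript
// Hypothetical abstraction; real providers would wrap each vendor's SDK.
interface SqlRequest {
  schemaContext: string; // metadata only, e.g. table and column definitions
  prompt: string;
}

interface ModelProvider {
  readonly id: string; // e.g. "anthropic", "openai", "gemini", "xai"
  generateSql(request: SqlRequest): Promise<string>;
}

class ProviderRegistry {
  private readonly providers = new Map<string, ModelProvider>();

  register(provider: ModelProvider): void {
    this.providers.set(provider.id, provider);
  }

  ids(): string[] {
    return Array.from(this.providers.keys());
  }

  // Callers select a provider by id, e.g. from user settings or organization policy.
  get(id: string): ModelProvider {
    const provider = this.providers.get(id);
    if (!provider) throw new Error(`Unknown AI provider: ${id}`);
    return provider;
  }
}

// Stub provider for illustration and tests; it makes no network calls.
const stubProvider: ModelProvider = {
  id: 'stub',
  async generateSql({ prompt }) {
    return `-- ${prompt}\nSELECT 1;`;
  },
};

const registry = new ProviderRegistry();
registry.register(stubProvider);
registry.get('stub').generateSql({ schemaContext: '', prompt: 'smoke test' }).then(console.log);
```

Because the rest of the application depends only on ModelProvider, supporting a new vendor means writing one adapter and registering it.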

What makes Conar.app different from existing database clients like DBeaver or pgAdmin?

While tools like DBeaver and pgAdmin excel at database administration and complex query execution, Conar.app focuses specifically on making database interactions more accessible through AI assistance. Traditional database clients require deep SQL knowledge and database expertise to use effectively. Conar.app lowers this barrier by providing intelligent assistance that can generate, explain, and optimize queries based on natural language descriptions.

The security model also differs. Many existing tools keep connection information in local configuration files, with a level of protection that varies by tool and configuration. Conar.app instead stores connections encrypted in the cloud behind password protection, aiming to keep credentials protected while enabling access across multiple devices.

The open-source nature of Conar.app combined with its modern architecture represents a fundamentally different approach to database tooling – one that prioritizes developer experience and security while leveraging AI capabilities to enhance productivity rather than replace expertise.

How can I contribute to the Conar.app project as a non-developer?

Contributions to open-source projects extend far beyond writing code. The Conar.app project welcomes various forms of non-development contributions:

Documentation improvements: Identifying gaps in documentation, creating tutorials for specific use cases, or improving the clarity of existing guides significantly enhances the user experience. Documentation contributions often have the highest impact per hour invested.

User experience feedback: Reporting confusing interface elements, suggesting workflow improvements, or identifying accessibility barriers helps shape a more intuitive application. Detailed feedback with specific reproduction steps is particularly valuable.

Community support: Answering questions from new users in discussion forums, creating learning resources, or organizing user groups builds community knowledge and reduces the support burden on core developers.

Translation assistance: Making the application accessible to non-English speakers by contributing translations expands the potential user base and creates opportunities for global collaboration.

Security vulnerability reporting: Responsible disclosure of security issues through proper channels helps protect all users. Security researchers play a crucial role in maintaining trust in open-source projects.

To get started with any of these contribution types, the best approach is to join the project’s community channels (likely available through links in the GitHub repository) and express your interest. Most open-source communities enthusiastically welcome non-coding contributions that strengthen the project ecosystem.

What are the system requirements for running Conar.app locally during development?

The development setup for Conar.app has specific requirements to ensure a smooth experience:

Hardware requirements:

- Processor: Modern multi-core CPU (Intel i5/Ryzen 5 or better recommended)
- Memory: Minimum 8GB RAM (16GB recommended for comfortable development)
- Storage: 10GB free disk space for dependencies, Docker images, and build artifacts
- Display: 1920×1080 resolution or higher recommended for development interface

Software dependencies:

- Operating System: macOS 12+, Windows 10/11 (with WSL2 recommended), or Linux (Ubuntu 20.04+)
- Node.js: Version 18.x or higher
- Package Manager: pnpm version 7.x or higher
- Docker: Version 20.10+ with Docker Compose plugin
- Git: Version 2.30+ for version control operations
- IDE/Editor: Visual Studio Code with recommended extensions (TypeScript, ESLint, etc.)
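A small preflight script can confirm the version requirements listed above before dependencies are installed. The sketch below is hypothetical (the repository may not ship such a script). It compares dotted version strings numerically, because plain string comparison would rank "9.0.0" above "18.0.0":

```typescript
// Hypothetical preflight helper; minimums mirror the requirements list.
function parseVersion(version: string): number[] {
  return version
    .trim()
    .replace(/^v/, '')
    .split('.')
    .map((part) => Number.parseInt(part, 10) || 0); // "0-rc1" -> 0
}

// True when `actual` is greater than or equal to `minimum`, compared part by part.
function meetsMinimum(actual: string, minimum: string): boolean {
  const a = parseVersion(actual);
  const m = parseVersion(minimum);
  for (let i = 0; i < Math.max(a.length, m.length); i++) {
    const x = a[i] ?? 0;
    const y = m[i] ?? 0;
    if (x !== y) return x > y;
  }
  return true;
}

const nodeVersion = process.versions.node;
if (!meetsMinimum(nodeVersion, '18.0.0')) {
  console.warn(`Node.js ${nodeVersion} found; version 18 or higher is required.`);
}
```

The same comparison works for the numbers reported by pnpm --version, git --version, or docker --version once the version number has been extracted from each tool's output.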

Network requirements:

- Stable internet connection for initial dependency installation
- Ability to pull Docker images from public registries
- Access to npm registry for JavaScript packages
- Firewall permissions for development servers (ports 3000-3001 by default)

These requirements reflect the reality of modern full-stack JavaScript development. The Docker dependency specifically ensures consistent database environments across development machines, while the memory requirements account for running multiple development servers simultaneously alongside database containers.

For production usage (not development), the requirements would be significantly lower since users would install the compiled application rather than the full development environment.

Looking Forward: The Evolution of Conar.app

The development roadmap for Conar.app reveals a thoughtful progression from its PostgreSQL-focused beginnings toward a comprehensive database interaction platform. This evolution isn’t driven by feature chasing but by addressing genuine developer pain points in database interactions.

The current focus on PostgreSQL makes strategic sense – it’s widely adopted, feature-rich, and represents a significant portion of database workloads. By perfecting the experience for PostgreSQL users first, the Conar.app team builds a solid foundation of patterns and architecture that can be extended to other database systems.

The planned MySQL support addresses one of the most common database technologies in web applications, particularly in LAMP stack environments and enterprise settings. MongoDB support will extend Conar.app’s reach to document database users, acknowledging the growing trend toward polyglot persistence where applications use multiple database types for different purposes.

Beyond database support expansion, the AI capabilities represent the most significant area for future development. Current AI assistance focuses primarily on query generation, but the roadmap hints at more sophisticated capabilities including:

- Query optimization suggestions based on execution plans
- Schema design recommendations
- Anomaly detection in query patterns
- Performance bottleneck identification
- Natural language data exploration without writing SQL at all
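Suggestions of the first kind could build on PostgreSQL's EXPLAIN (FORMAT JSON) output, which describes a plan as a tree of nodes with fields such as "Node Type", "Relation Name", and "Plan Rows". As a hedged illustration of the idea only (the roadmap does not specify an implementation, and findLargeSeqScans and its threshold are hypothetical), a client could walk that tree and flag sequential scans over many estimated rows:

```typescript
// Subset of the fields PostgreSQL emits for each node in EXPLAIN (FORMAT JSON).
interface PlanNode {
  'Node Type': string;
  'Relation Name'?: string;
  'Plan Rows': number; // planner's row estimate for this node
  Plans?: PlanNode[]; // child nodes
}

interface ExplainEntry {
  Plan: PlanNode;
}

// Hypothetical heuristic: flag sequential scans the planner expects to return many rows.
function findLargeSeqScans(explain: ExplainEntry[], rowThreshold = 10_000): string[] {
  const hints: string[] = [];
  const visit = (node: PlanNode): void => {
    if (node['Node Type'] === 'Seq Scan' && node['Plan Rows'] >= rowThreshold) {
      const relation = node['Relation Name'] ?? 'unknown relation';
      hints.push(`Seq Scan on ${relation} (~${node['Plan Rows']} rows): check for a missing index`);
    }
    node.Plans?.forEach(visit);
  };
  explain.forEach((entry) => visit(entry.Plan));
  return hints;
}

// Simplified plan for illustration; real output carries many more fields.
const plan: ExplainEntry[] = [
  {
    Plan: {
      'Node Type': 'Hash Join',
      'Plan Rows': 500,
      Plans: [
        { 'Node Type': 'Seq Scan', 'Relation Name': 'orders', 'Plan Rows': 250000 },
        { 'Node Type': 'Index Scan', 'Relation Name': 'users', 'Plan Rows': 500 },
      ],
    },
  },
];
console.log(findLargeSeqScans(plan));
```

A sequential scan is not always a problem (it is often the cheapest plan for small tables or queries that read most rows), which is why a tool like this would present a hint for review rather than an automatic fix.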

These advancements will transform Conar.app from a query generation tool into an intelligent database assistant that augments developer expertise rather than replacing it. The emphasis on understanding why certain approaches work better than others creates learning opportunities within the workflow itself.

The open-source nature ensures this evolution remains community-driven rather than dictated solely by commercial interests. Feature priorities can be influenced through GitHub discussions, pull requests, and community feedback. This collaborative development model often produces more practical, developer-focused tools than purely commercial alternatives.

As AI capabilities mature and database technologies continue evolving, Conar.app’s architecture is designed to adapt. The modular structure, comprehensive test coverage, and clean separation of concerns provide the technical foundation needed for sustainable long-term development. The Apache-2.0 license ensures this evolution remains accessible to all users regardless of their organization size or budget constraints.

The ultimate vision appears to be creating a universal interface for database interaction that understands the nuances of different database systems while providing consistent, intelligent assistance. This vision aligns with broader industry trends toward more intuitive, AI-augmented development tools that handle routine complexity while freeing developers to focus on business logic and creative problem-solving.

Conclusion: Redefining the Developer-Database Relationship

Conar.app represents more than just another database client – it embodies a fundamental rethinking of how developers interact with their data stores. By combining rigorous security practices, thoughtful architecture choices, and intelligent AI assistance within an open-source framework, it addresses pain points that have persisted throughout the evolution of database tooling.

The significance of this approach extends beyond convenience. When developers can interact with databases more efficiently and securely, they build better applications faster. When AI assistance explains not just what query to write but why it works well, developers deepen their understanding rather than becoming dependent on tools. When security is built into the architecture rather than bolted on as an afterthought, organizations can adopt modern tools without compromising their data protection requirements.

The comprehensive development setup, thorough testing strategy, and permissive licensing model all contribute to a sustainable ecosystem where the tool evolves alongside its users’ needs. This long-term perspective distinguishes Conar.app from short-lived “overnight sensation” tools that prioritize viral growth over lasting value.

For developers weary of wrestling with database complexities, for teams concerned about connection security, and for organizations seeking to democratize database access without sacrificing expertise, Conar.app offers a compelling alternative. It doesn’t eliminate the need to understand databases – rather, it removes the friction between intention and execution, allowing database knowledge to shine through more clearly.

As the project continues to evolve with MySQL and MongoDB support on the horizon, its core philosophy remains consistent: leverage technology to enhance human capability rather than replace it, prioritize security without sacrificing usability, and build in the open where community wisdom shapes better tools.

The journey of database interaction tools has progressed from command-line utilities to graphical interfaces to AI-augmented assistants. Conar.app stands at this inflection point, offering a glimpse of how AI can enhance rather than replace developer expertise when implemented thoughtfully and transparently.

In an era where data is increasingly central to application functionality, tools like Conar.app don’t just improve developer productivity – they reshape how we think about data access itself. By making database interactions more intuitive, secure, and intelligent, they enable developers to focus on what truly matters: building applications that create value for users and solve real-world problems.

The “Built with ❤️” ethos that concludes the project’s documentation isn’t merely decorative – it reflects the care, attention to detail, and user empathy that distinguishes exceptional developer tools from merely functional ones. As Conar.app continues to evolve, this human-centered approach to technical problems will likely remain its most valuable characteristic.