Chain-of-Agents: How AI Learned to Work Like a Team

The Evolution of AI Problem-Solving

Remember when Siri could only answer simple questions like “What’s the weather?” Today’s AI systems tackle complex tasks such as medical diagnosis, code generation, and strategic planning. But there’s a catch: most AI systems still work like a solo worker rather than a coordinated team. Let’s explore how researchers on the OPPO AI Agent Team are changing this paradigm with Chain-of-Agents (CoA).

Why Traditional AI Systems Struggle

1. The “Lone Wolf” Problem

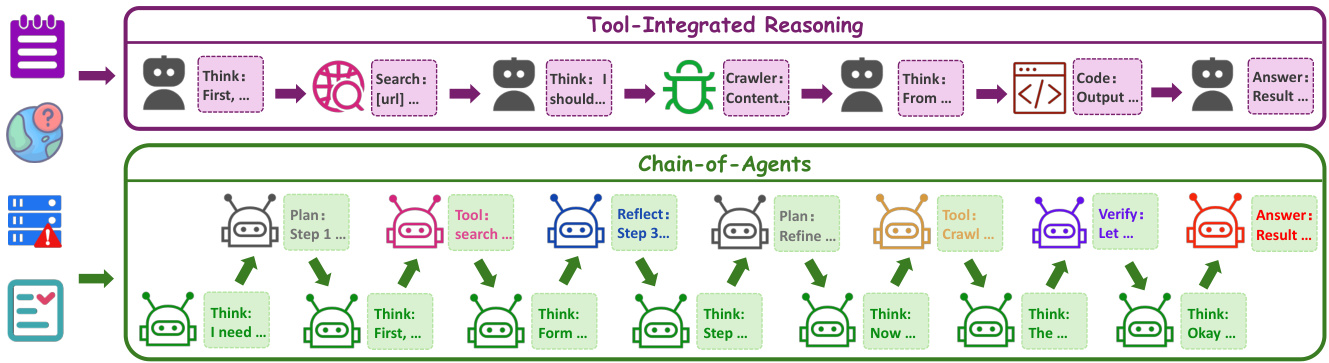

Most AI systems today use one of two approaches:

- ReAct-style systems: Follow rigid “think → act → observe” patterns like an assembly line worker.
- Multi-agent systems: Require complex manual coordination between specialized AI “agents” (like hiring different contractors for a project).
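To make the “think → act → observe” pattern concrete, here is a minimal Python sketch of a ReAct-style loop. It is an illustration under stated assumptions, not code from the paper: the `think` callable stands in for the language model, and the `search` tool and scripted policy below are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable

# A policy maps the history of (thought, action, observation) steps to the next
# (thought, action, argument); in a real system this would be an LLM call.
Policy = Callable[[list], tuple]


@dataclass
class ReActTrace:
    # Each completed step is stored as (thought, action, observation).
    steps: list = field(default_factory=list)


def react_loop(think: Policy, tools: dict, max_steps: int = 5):
    """Run a fixed think -> act -> observe loop until the policy emits 'finish'."""
    trace = ReActTrace()
    for _ in range(max_steps):
        thought, action, arg = think(trace.steps)      # think
        if action == "finish":
            return arg, trace                          # arg holds the final answer
        observation = tools[action](arg)               # act
        trace.steps.append((thought, action, observation))  # observe
    return None, trace  # step budget exhausted without an answer


# Hypothetical scripted policy and tool, used only to exercise the loop.
def scripted_policy(history):
    if not history:
        return ("I need the capital of France.", "search", "capital of France")
    return ("The search result answers the question.", "finish", history[-1][2])


tools = {"search": lambda q: "Paris" if "France" in q else "unknown"}
answer, trace = react_loop(scripted_policy, tools)
print(answer)            # Paris
print(len(trace.steps))  # 1
```

Every step passes through the same fixed cycle regardless of what the task needs, which is the rigidity the article attributes to ReAct-style systems.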

Both approaches face critical limitations:

Think of it as the difference between a single chef cooking an entire meal and a kitchen of specialized chefs who don’t communicate well.

Introducing Chain-of-Agents: AI Teamwork Made Simple

The OPPO team created CoA to let a single AI model dynamically simulate team collaboration without complex setup. It’s like having a project manager who can instantly assemble the right team members for each task.

Key Components of CoA

This architecture allows the AI to:

- Decompose complex problems
- Assign tasks to virtual “team members”
- Adapt workflows in real time
- Learn from experience
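The sketch below shows, in a very simplified form, what it can mean for one model to “play” a whole team: at every turn the same model decides which virtual team member speaks next, and tool-using members are routed to real tools. The role names (`plan`, `reflect`, `verify`, `search`, `code`), the `(role, content)` turn format, and the scripted model are hypothetical stand-ins; the paper’s actual agent roles and prompt format may differ.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical role sets: "role-play" members only reason, "tool" members call a tool.
ROLE_PLAY = {"plan", "reflect", "verify"}
TOOL_ROLES = {"search", "code"}


def chain_of_agents(model: Callable, tools: dict, task: str, max_turns: int = 10):
    """One model decides, turn by turn, which virtual team member acts next."""
    context = [("task", task)]
    for _ in range(max_turns):
        role, content = model(context)  # the same model plays every role
        context.append((role, content))
        if role == "answer":
            return content, context
        if role in TOOL_ROLES:
            # Tool members hand off to a real tool; the result goes back into context.
            context.append((f"{role}_result", tools[role](content)))
        elif role not in ROLE_PLAY:
            raise ValueError(f"unknown role: {role}")
    return None, context


# Hypothetical scripted "model" that plans, searches, verifies, then answers.
def scripted_model(context):
    last_role = context[-1][0]
    if last_role == "task":
        return "plan", "Look the fact up, then verify it."
    if last_role == "plan":
        return "search", "boiling point of water at sea level"
    if last_role == "search_result":
        return "verify", "The result matches the question."
    return "answer", context[-2][1]  # in this script, the search result's content


tools = {"search": lambda q: "100 degrees C", "code": lambda src: ""}
answer, context = chain_of_agents(scripted_model, tools, "At what temperature does water boil?")
print(answer)  # 100 degrees C
print([role for role, _ in context])
```

In this toy the order of roles is scripted; in a trained model it would be chosen at inference time, which is what lets one model adapt its “team” to each task instead of following a fixed loop.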

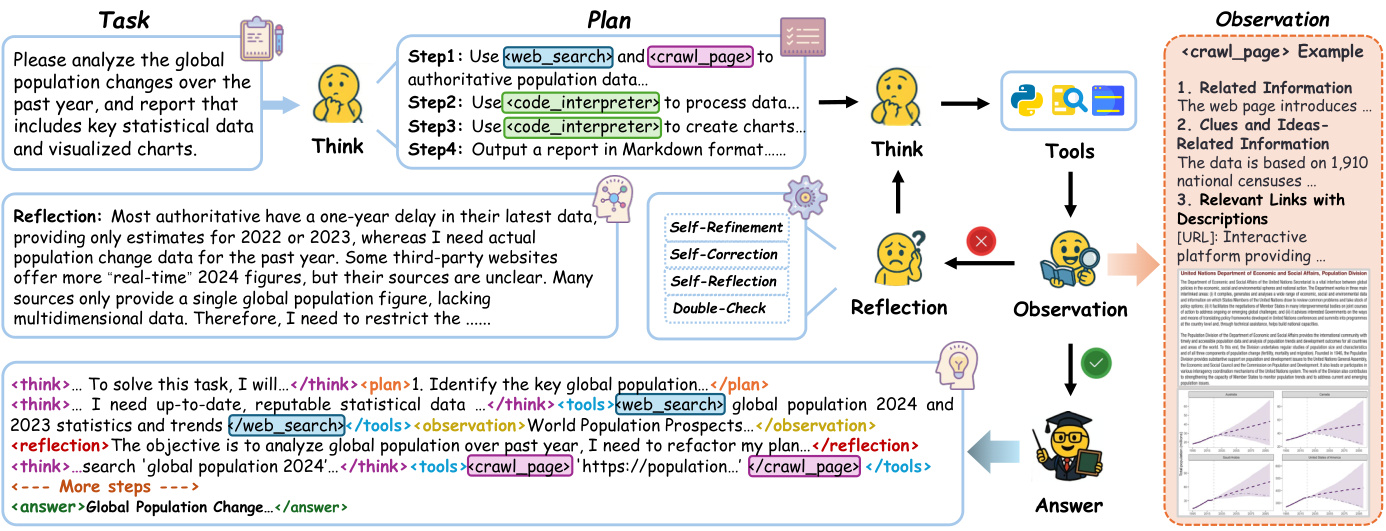

How CoA Works: The Training Process

1. Learning from the Best

The researchers used multi-agent distillation to train a CoA model:

- Record how state-of-the-art multi-agent systems solve problems
- Convert these recordings into training examples
- Fine-tune a base AI model using these examples
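Turning recorded multi-agent runs into training examples amounts to flattening several agents’ turns into one sequence that a single model can learn to produce. A minimal sketch, assuming a hypothetical tag-per-agent text format and a simple prompt/target pair for supervised fine-tuning (the `Event` type and `to_training_example` helper are illustrative, not from the paper):

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Event:
    agent: str    # which agent in the recorded multi-agent run produced this turn
    content: str  # what that agent said, or the tool output it received


def to_training_example(task: str, events: list, final_answer: str) -> dict:
    """Flatten one recorded multi-agent trajectory into a single-model (prompt, target) pair."""
    # Hypothetical format: each agent turn becomes a tagged span the student model learns to emit.
    body = "".join(f"<{e.agent}>{e.content}</{e.agent}>" for e in events)
    return {"prompt": task, "target": body + f"<answer>{final_answer}</answer>"}


events = [
    Event("plan", "Compute the product with code."),
    Event("code", "print(6 * 7)"),
    Event("observation", "42"),
]
example = to_training_example("What is 6 * 7?", events, "42")
print(example["target"])
# <plan>Compute the product with code.</plan><code>print(6 * 7)</code><observation>42</observation><answer>42</answer>
```

Fine-tuning would then train the base model to produce `target` given `prompt`, so one model learns to reproduce the whole team’s trajectory on its own.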

2. Progressive Quality Filtering

The training data went through four layers of refinement:

This created a dataset of 16,433 high-quality training examples with reasoning chains 5-20 steps long.
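The article does not list the four filtering layers, so the sketch below only illustrates the general idea of progressive filtering: candidate trajectories pass through a sequence of checks, and the survivors of each stage feed the next. Only the 5-20 step length window comes from the article; the correctness and repeated-step checks, the example fields, and the toy data are hypothetical.

```python
from __future__ import annotations


def correct_answer(ex: dict) -> bool:
    # Hypothetical check: keep only trajectories that reached the right answer.
    return ex["correct"]


def length_in_range(ex: dict, lo: int = 5, hi: int = 20) -> bool:
    # From the article: reasoning chains of 5-20 steps.
    return lo <= len(ex["steps"]) <= hi


def no_repeated_steps(ex: dict) -> bool:
    # Hypothetical check: drop trajectories that loop on the same step.
    return len(set(ex["steps"])) == len(ex["steps"])


def progressive_filter(examples: list, stages: list):
    """Apply filters in order; report how many examples survive each stage."""
    kept, counts = list(examples), []
    for name, keep in stages:
        kept = [ex for ex in kept if keep(ex)]
        counts.append((name, len(kept)))
    return kept, counts


stages = [
    ("correct answer", correct_answer),
    ("5-20 steps", length_in_range),
    ("no repeated steps", no_repeated_steps),
]
examples = [
    {"id": "a", "steps": [f"s{i}" for i in range(8)], "correct": True},    # passes all
    {"id": "b", "steps": [f"s{i}" for i in range(3)], "correct": True},    # too short
    {"id": "c", "steps": [f"s{i}" for i in range(10)], "correct": False},  # wrong answer
    {"id": "d", "steps": ["s0"] * 6, "correct": True},                     # repeats a step
]
kept, counts = progressive_filter(examples, stages)
print([ex["id"] for ex in kept])  # ['a']
print(counts)  # [('correct answer', 3), ('5-20 steps', 2), ('no repeated steps', 1)]
```

Ordering cheap checks first keeps later, more expensive checks from running on data that would be discarded anyway.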

Experimental Results: CoA in Action

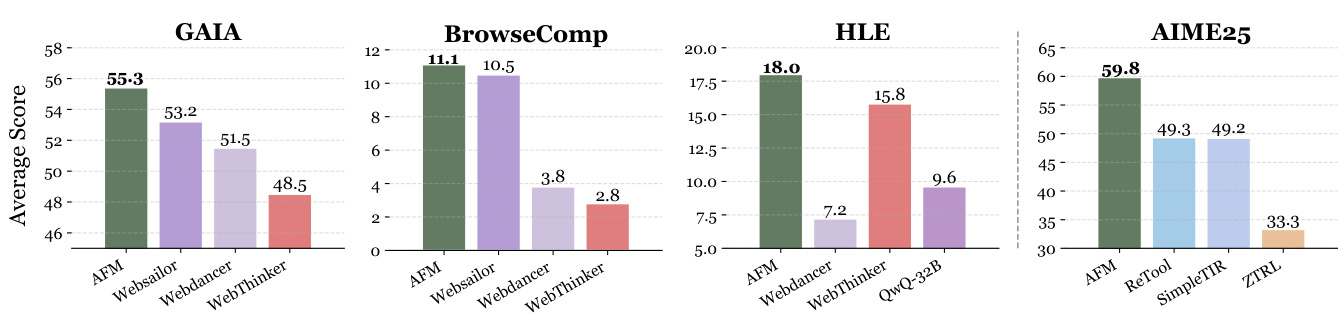

1. Web Agent Performance

On knowledge-intensive tasks like GAIA and BrowseComp:

2. Code Agent Breakthroughs

For programming tasks:

3. Mathematical Reasoning

On the challenging AIME25 benchmark, the CoA-trained Agent Foundation Model (AFM) stands out:


“AFM achieves 59.8% solve rate, a 10.5% improvement over previous best methods” [citation:11]
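The quoted sentence does not say whether “10.5% improvement” means 10.5 percentage points or a 10.5% relative gain, and the two readings imply different previous-best scores. The arithmetic for both readings, using only the numbers in the quote (the `previous_best` helper is illustrative):

```python
def previous_best(new_score: float, improvement: float) -> dict:
    """Back out the implied previous-best score (in %) under two readings of 'improvement'."""
    return {
        # Reading 1: improvement is measured in percentage points.
        "absolute_points": round(new_score - improvement, 1),
        # Reading 2: improvement is relative to the previous score.
        "relative_percent": round(new_score / (1 + improvement / 100), 1),
    }


print(previous_best(59.8, 10.5))  # {'absolute_points': 49.3, 'relative_percent': 54.1}
```

Which reading applies has to be checked against the results table in the paper.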

Why CoA Outperforms Traditional Methods

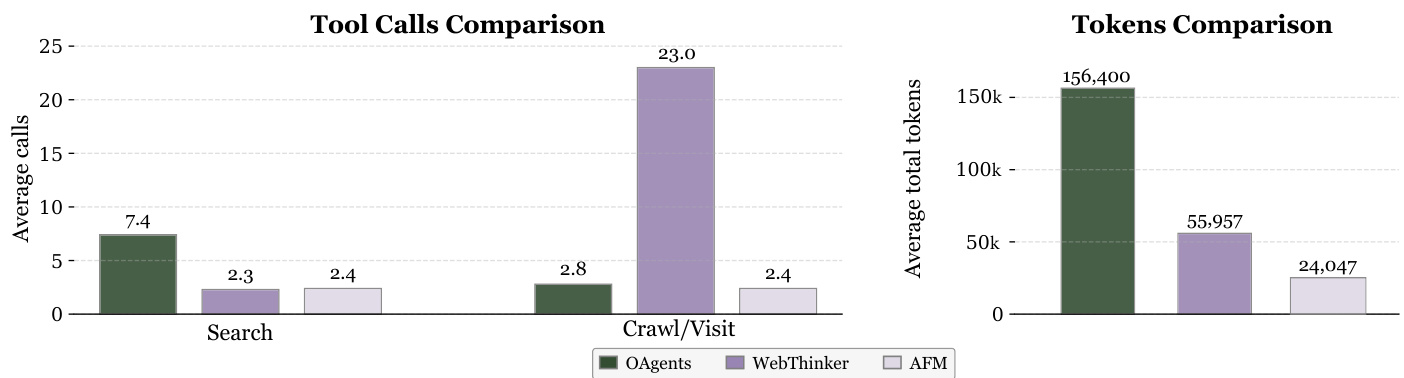

1. Computational Efficiency

Tests on the GAIA benchmark showed:

2. Generalization to New Tools

CoA models showed surprising adaptability:

- Code agents could use web search tools
- Web agents struggled with code formatting

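The code-agent result suggests that CoA captures general collaboration patterns rather than task-specific routines, although the web-agent result shows that this transfer does not work equally well in both directions.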

Test-Time Scaling: The Power of Multiple Attempts

When allowed 3 attempts per question:

These gains exceed those of traditional methods, for which multiple attempts yield smaller improvements.
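The article does not say how the three attempts are scored. A common convention is pass@k: a question counts as solved if at least one of k attempts is correct. The sketch below uses the unbiased pass@k estimator introduced alongside the HumanEval code benchmark, under the assumption that this matches the paper’s setup; the per-question results are made-up toy data, not numbers from the paper.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of P(at least one of k attempts is correct), from n attempts with c correct."""
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct attempt
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: list, k: int) -> float:
    """Average pass@k over questions; each entry lists per-attempt correctness."""
    return sum(pass_at_k(len(r), sum(r), k) for r in results) / len(results)


# Toy data: 4 questions, 3 attempts each (not results from the paper).
results = [
    [False, True, False],
    [False, False, False],
    [True, True, True],
    [False, False, True],
]
print(round(benchmark_pass_at_k(results, 1), 3))  # 0.417
print(benchmark_pass_at_k(results, 3))            # 0.75
```

pass@k never decreases as k grows, so the interesting comparison is how much a method gains between pass@1 and pass@3, which is the kind of gap the article refers to.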

Practical Implications for SEO and Content

While CoA focuses on AI capabilities, its principles align with modern content strategies:

1. Content Quality Matters

Just as CoA prioritizes high-quality training data, search engines reward comprehensive, accurate content [citation:13]. The 16,433 training examples with 5-20 step reasoning chains mirror how detailed content outperforms shallow articles.

2. Structured Problem Solving

CoA’s planning → execution → reflection cycle resembles effective content strategies:

- Research phase (identify user intent)
- Content creation (solve the query)
- Optimization phase (update based on performance)

3. Technical Writing Best Practices

The paper’s structure demonstrates technical writing principles [citation:16]:

- Clear section hierarchy
- Visual aids (figures)
- Performance metrics
- Use cases

Future Directions: What This Means for AI

The open-source release of CoA (model weights, code, and data) creates opportunities for:

- More efficient AI assistants
- Better reasoning in specialized domains
- Foundation for next-generation agent systems

As AI continues to evolve from “lone workers” to “collaborative teams,” frameworks like CoA will likely become standard for complex applications.

Conclusion

Chain-of-Agents represents a fundamental shift in how we build AI systems. By teaching models to work like coordinated teams rather than rigid pipelines, researchers have achieved state-of-the-art results across multiple domains while improving computational efficiency. As these systems mature, we can expect AI to handle increasingly complex real-world problems with the flexibility and adaptability of human teams.

All images sourced from the original research paper. For complete methodology details and benchmark results, refer to the full paper.