Burn: A Friendly Deep-Dive into the Next-Gen Deep Learning Framework for Everyone

A practical walk-through for junior college graduates and working engineers who want to train, tune, and ship models—without juggling three different languages.


Table of Contents

- Why yet another framework?
- What exactly is Burn?
- Performance in plain English
- Hardware support at a glance
- Training & inference (end to end)
- Your first model in five minutes
- Moving models in and out of Burn
- Real examples you can run today
- Common questions & answers
- Where to go next

Why yet another framework?

Every popular framework solves part of the problem, but it often leaves you with:

- Python for research, C++/CUDA for production: two code bases, twice the bugs.
- No painless path from a laptop to a browser or an embedded board.
- Hand-written GPU kernels if you want real speed, which means a steep learning curve.

Burn’s goal is simple: one Rust code base handles research, training, and deployment on anything from a Raspberry Pi to an NVIDIA A100. No rewrites, no drama.

What exactly is Burn?

| Term you might know | Burn’s counterpart | What it means for you |
|---|---|---|
| PyTorch `nn.Module` | `#[derive(Module)]` struct | Same mental model, compile-time safety |
| CUDA kernels | Auto-generated WGSL | You write high-level math, Burn writes the shader |
| `torch.save` | `Record` trait + MPK/ZIP | Readable, version-tolerant snapshots |
| Python GIL headache | Rust ownership | True multi-threading, no locks |
| ONNX export | Built-in ONNX import | Bring models from TF/PyTorch into Burn |

Burn is 100% open source and dual-licensed under MIT and Apache 2.0, so you can embed it in commercial products without opening your own code.

Performance in plain English

Burn’s speed comes from six practical techniques. Here is the short, no-jargon version of each.

1. Automatic kernel fusion – “many ops become one GPU call”

When you chain small operations (add → mul → activation), Burn stitches them into one GPU kernel.

Example: the custom GELU below turns into ~60 lines of WGSL at runtime—hand-written speed without hand-written pain.

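```rust
use burn::tensor::{backend::Backend, Tensor};
use core::f32::consts::SQRT_2;

/// GELU as plain tensor math: x * (erf(x / √2) + 1) / 2.
fn gelu_custom<B: Backend, const D: usize>(x: Tensor<B, D>) -> Tensor<B, D> {
    let x = x.clone() * ((x / SQRT_2).erf() + 1);
    x / 2
}
```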

You keep the readable math; Burn keeps the speed.
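To show what fusion saves, here is a dependency-free sketch in plain Rust, without Burn. The unfused version makes three passes over memory and allocates two temporary vectors. The fused version runs the same add → mul → ReLU chain in a single pass. This only illustrates the idea. Burn fuses GPU kernels at runtime, and none of its internals appear here. The function names are hypothetical.

```rust
/// Unfused: each op is its own pass with its own output buffer,
/// much like launching three separate GPU kernels.
pub fn add_mul_relu_unfused(x: &[f32], b: f32, s: f32) -> Vec<f32> {
    let added: Vec<f32> = x.iter().map(|&v| v + b).collect(); // pass 1
    let scaled: Vec<f32> = added.iter().map(|&v| v * s).collect(); // pass 2
    scaled.iter().map(|&v| v.max(0.0)).collect() // pass 3 (ReLU)
}

/// Fused: one pass, one output buffer, no intermediates.
pub fn add_mul_relu_fused(x: &[f32], b: f32, s: f32) -> Vec<f32> {
    x.iter().map(|&v| ((v + b) * s).max(0.0)).collect()
}
```

Both functions return the same values. The fused one reads the input once and writes the output once.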

2. Asynchronous execution – “CPU and GPU stop waiting for each other”

Burn’s first-party backends queue work in the background.

Your training loop keeps feeding the GPU while the GPU keeps crunching numbers, so neither side sits idle waiting for the other.
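The pattern behind this is a work queue. The caller enqueues operations and continues at once, and it only blocks when it actually needs a result. Below is a minimal std-only sketch of that pattern. `AsyncQueue` is a hypothetical name, and a background thread stands in for the device. Burn’s backends use their own queues, which are not shown here.

```rust
use std::sync::mpsc::{self, Receiver, Sender};
use std::thread::{self, JoinHandle};

/// A queued unit of work the "device" will run later.
type Job = Box<dyn FnOnce() -> f32 + Send>;

/// Toy device queue: a background thread runs jobs in submission order.
pub struct AsyncQueue {
    jobs: Option<Sender<Job>>,
    results: Receiver<f32>,
    worker: Option<JoinHandle<()>>,
}

impl AsyncQueue {
    pub fn new() -> Self {
        let (job_tx, job_rx) = mpsc::channel::<Job>();
        let (res_tx, res_rx) = mpsc::channel::<f32>();
        let worker = thread::spawn(move || {
            // Loops until the job sender is dropped.
            for job in job_rx {
                // Ignore send errors: the caller may have stopped reading.
                let _ = res_tx.send(job());
            }
        });
        AsyncQueue { jobs: Some(job_tx), results: res_rx, worker: Some(worker) }
    }

    /// Enqueue work and return immediately; the caller never waits here.
    pub fn submit(&self, job: impl FnOnce() -> f32 + Send + 'static) {
        self.jobs
            .as_ref()
            .expect("queue is open")
            .send(Box::new(job))
            .expect("worker is alive");
    }

    /// Block only when a result is needed (like reading a tensor back).
    pub fn read(&self) -> f32 {
        self.results.recv().expect("worker is alive")
    }
}

impl Drop for AsyncQueue {
    fn drop(&mut self) {
        // Closing the job channel ends the worker loop; then wait for it.
        drop(self.jobs.take());
        if let Some(worker) = self.worker.take() {
            let _ = worker.join();
        }
    }
}
```

For example, a loop can `submit` several jobs in a row and call `read` later to collect the results in order.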

3. Thread-safe building blocks – “multi-GPU without locks”

Each module owns its weights. Clone the module, send it to another thread, compute gradients, send them back—no mutexes, no race conditions.

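In PyTorch, backpropagation mutates the `.grad` attribute of each parameter. In Burn, gradients come back as a separate value, so there is no shared state to lock.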
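The ownership pattern can be sketched with the standard library alone. Each worker thread receives its own clone of a toy model and a data shard, computes a gradient, and sends it back over a channel. The main thread then averages the results. `Model`, `grad_one`, and `parallel_grads` are hypothetical names, and the squared-error loss is an assumption made for illustration. The point is that ownership moves between threads, so no `Mutex` is needed.

```rust
use std::sync::mpsc;
use std::thread;

/// A toy "module" that owns its weights outright.
#[derive(Clone, Debug)]
pub struct Model {
    pub w: Vec<f32>,
}

/// Gradient of the squared error 0.5 * (w·x - y)^2 with respect to w,
/// which is (w·x - y) * x.
fn grad_one(model: &Model, x: &[f32], y: f32) -> Vec<f32> {
    let pred: f32 = model.w.iter().zip(x).map(|(w, xi)| w * xi).sum();
    let err = pred - y;
    x.iter().map(|xi| err * xi).collect()
}

/// One data-parallel step: clone the model per thread, compute gradients,
/// send them back, and average them on the main thread.
pub fn parallel_grads(model: &Model, shards: Vec<(Vec<f32>, f32)>) -> Vec<f32> {
    let (tx, rx) = mpsc::channel();
    let n = shards.len();
    for (x, y) in shards {
        let local = model.clone(); // this worker owns its copy
        let tx = tx.clone();
        thread::spawn(move || {
            tx.send(grad_one(&local, &x, y)).expect("main thread is alive");
        });
    }
    drop(tx); // the receive loop ends once every worker has finished
    let mut sum = vec![0.0; model.w.len()];
    for g in rx {
        for (s, gi) in sum.iter_mut().zip(g) {
            *s += gi;
        }
    }
    sum.iter().map(|s| s / n as f32).collect()
}
```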

4. Smart memory management – “reuse instead of malloc”

- A memory pool keeps frequently used buffers alive instead of freeing and reallocating them.
- Ownership rules let Burn mutate tensors in place when it is safe to do so; small wins add up to large-model savings.
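Both ideas can be sketched with std types. `BufferPool` keeps released buffers grouped by length and hands them out again instead of allocating. `add_scalar` shows copy-on-write through `Rc::make_mut`: if the handle is the only owner, the data is changed in place, and if it is shared, the data is cloned first. Both names are hypothetical. Burn tracks tensor handles with its own machinery, and this only illustrates the principle.

```rust
use std::collections::HashMap;
use std::rc::Rc;

/// Toy buffer pool: released buffers are kept by length and reused.
#[derive(Default)]
pub struct BufferPool {
    free: HashMap<usize, Vec<Vec<f32>>>,
    pub allocations: usize, // requests that needed a fresh allocation
    pub reuses: usize,      // requests served from the pool
}

impl BufferPool {
    /// Return a zeroed buffer of `len` elements, reusing a released one if possible.
    pub fn acquire(&mut self, len: usize) -> Vec<f32> {
        let recycled = self.free.get_mut(&len).and_then(|list| list.pop());
        match recycled {
            Some(mut buf) => {
                self.reuses += 1;
                buf.fill(0.0); // clear data left by the previous user
                buf
            }
            None => {
                self.allocations += 1;
                vec![0.0; len]
            }
        }
    }

    /// Give a buffer back to the pool instead of freeing it.
    pub fn release(&mut self, buf: Vec<f32>) {
        self.free.entry(buf.len()).or_default().push(buf);
    }
}

/// Copy-on-write update: in place if `t` is the only handle, otherwise
/// `Rc::make_mut` clones the data first so other handles see no change.
pub fn add_scalar(t: &mut Rc<Vec<f32>>, v: f32) {
    for x in Rc::make_mut(t).iter_mut() {
        *x += v;
    }
}
```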

5. Automatic kernel selection – “right kernel, right size, right hardware”

Matrix multiplication has dozens of tile and block configurations. Burn micro-benchmarks the candidates once, caches the fastest, and reuses that decision. The first run is slower, and every later run is faster.
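The "benchmark once, then cache" logic fits in a few lines. The sketch below uses a hypothetical `Tile` enum and `Autotuner` struct. The cost function is passed in, so it could be a real timer built on `std::time::Instant` or a fixed cost model. The cache is keyed only by problem size, which is an assumption. Burn’s real autotuner keys on more properties and benchmarks actual kernels.

```rust
use std::collections::HashMap;

/// Hypothetical tile configurations for a matmul kernel.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum Tile {
    T16,
    T32,
    T64,
}

/// Remembers the fastest candidate per problem size.
#[derive(Default)]
pub struct Autotuner {
    cache: HashMap<usize, Tile>,
    pub benchmark_runs: usize,
}

impl Autotuner {
    /// `measure` returns a cost (e.g. elapsed nanoseconds) for `tile` at `size`.
    pub fn select(
        &mut self,
        size: usize,
        candidates: &[Tile],
        measure: impl Fn(Tile, usize) -> u128,
    ) -> Tile {
        if let Some(&best) = self.cache.get(&size) {
            return best; // cache hit: no benchmarking
        }
        self.benchmark_runs += 1;
        let best = *candidates
            .iter()
            .min_by_key(|&&t| measure(t, size))
            .expect("at least one candidate");
        self.cache.insert(size, best);
        best
    }
}
```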

6. Hardware-specific features – “when your chip has a secret weapon, Burn uses it”

- NVIDIA Tensor Cores: used in the CUDA, LibTorch, Candle, and WGPU back-ends.
- Apple Metal Performance Shaders: used in the Metal back-end.

Future chips will come online as soon as WGSL extensions land.

Hardware support at a glance

| Back-end | Devices | Maintainer | Special notes |
|---|---|---|---|
| CUDA | NVIDIA GPUs (Linux, Windows) | Burn team | Tensor Cores active |
| ROCm | AMD GPUs (Linux) | Burn team | |
| Metal | Apple GPUs (macOS, iOS) | Burn team | Apple Silicon supported |
| Vulkan | Most desktop GPUs | Burn team | Linux & Windows |
| Wgpu | Any Vulkan/Metal/DX12 target | Burn team | Also compiles to WebAssembly |
| NdArray | Any CPU | Community | Works in no_std |
| LibTorch | CPU & GPU (via PyTorch C++) | Community | Reuses PyTorch kernels |
| Candle | NVIDIA, Apple, CPU | Community | Hugging Face back-end |

Combining back-ends (optional decorators)

- Autodiff – adds automatic differentiation to any back-end.
- Fusion – adds kernel fusion to supported back-ends (WGPU and CUDA today).
- Router (Beta) – routes parts of the graph to the GPU and others to the CPU.
- Remote (Beta) – sends operations over the network to a GPU machine.
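In Burn, these decorators are generic wrappers around a base backend, for example `Autodiff<Wgpu>`. The std-only toy below shows the same decorator pattern and is not Burn’s API. `ToyBackend`, `Cpu`, and `Traced` are hypothetical names. `Traced<B>` wraps any backend, forwards every op to it, and adds one capability (counting ops). Model code written against the trait runs unchanged on either type.

```rust
use std::cell::Cell;

/// Minimal stand-in for a backend: a set of ops (scalars here).
pub trait ToyBackend {
    fn add(&self, a: f32, b: f32) -> f32;
    fn mul(&self, a: f32, b: f32) -> f32;
}

/// A base backend that just does the math.
pub struct Cpu;

impl ToyBackend for Cpu {
    fn add(&self, a: f32, b: f32) -> f32 {
        a + b
    }
    fn mul(&self, a: f32, b: f32) -> f32 {
        a * b
    }
}

/// Decorator: wraps any backend `B`, forwards each op, and counts it.
pub struct Traced<B: ToyBackend> {
    inner: B,
    pub ops: Cell<usize>,
}

impl<B: ToyBackend> Traced<B> {
    pub fn new(inner: B) -> Self {
        Traced { inner, ops: Cell::new(0) }
    }
}

impl<B: ToyBackend> ToyBackend for Traced<B> {
    fn add(&self, a: f32, b: f32) -> f32 {
        self.ops.set(self.ops.get() + 1);
        self.inner.add(a, b)
    }
    fn mul(&self, a: f32, b: f32) -> f32 {
        self.ops.set(self.ops.get() + 1);
        self.inner.mul(a, b)
    }
}

/// Model code is generic over the backend and works with both `Cpu` and `Traced<Cpu>`.
pub fn affine<B: ToyBackend>(backend: &B, x: f32) -> f32 {
    backend.add(backend.mul(x, 2.0), 1.0)
}
```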

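Example: declaring a mixed CPU+GPU setup in three lines.

```rust
// One backend type that can dispatch to either WGPU or NdArray.
type Backend = Router<(Wgpu, NdArray)>;
// B1 selects the first backend in the tuple (WGPU), B2 the second (NdArray).
let gpu = MultiDevice::B1(WgpuDevice::DiscreteGpu(0));
let cpu = MultiDevice::B2(NdArrayDevice::Cpu);
```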

Training & inference

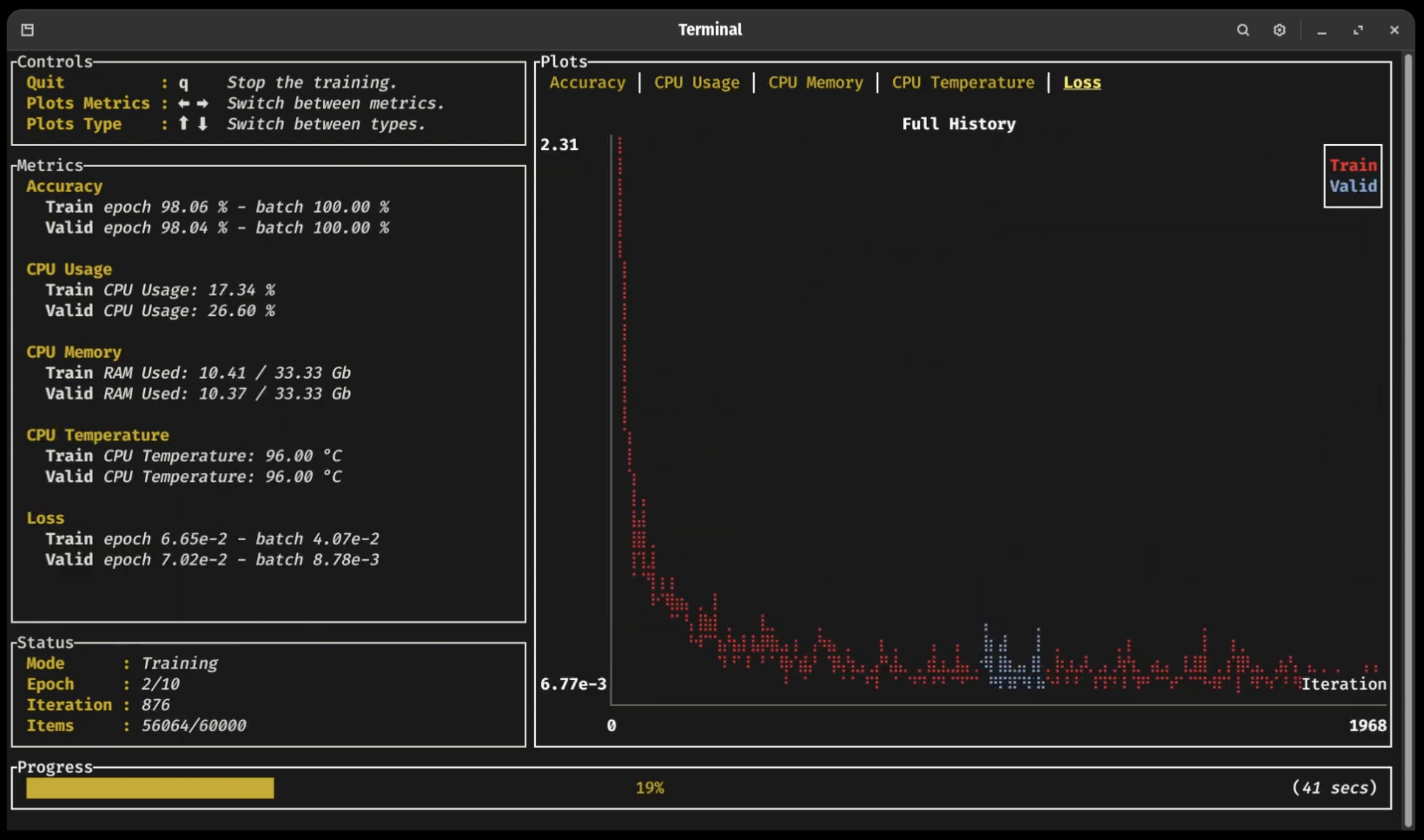

1. Terminal dashboard

A Ratatui-based terminal UI shows live metrics, checkpoints, and early stopping, so no TensorBoard is required.

2. ONNX import

Bring any ONNX model (exported from TensorFlow, PyTorch, etc.) into Burn:

```shell
cargo add burn-import --features onnx
```

See the ONNX import book section for supported ops.

3. PyTorch & Safetensors weights

Load pre-trained weights directly:

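```rust
use burn::record::{FullPrecisionSettings, Recorder};
use burn_import::pytorch::PyTorchFileRecorder;

// `MyModel`/`MyModelConfig` are your own model and config; `MyModelRecord`
// is the record type that #[derive(Module)] generates for `MyModel`.
let record: MyModelRecord<B> = PyTorchFileRecorder::<FullPrecisionSettings>::default()
    .load("model.pt".into(), &device)?;
// `load_record` returns the model itself, not a Result.
let model = MyModelConfig::new().init(&device).load_record(record);
```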

4. Browser inference

Compile Burn + WGPU → WebAssembly and ship a 2 MB .wasm file.

Try the live MNIST demo—no server round-trip.

5. Embedded (no_std)

NdArray back-end runs on bare-metal ARM Cortex-M.

Limitation: only CPU inference for now.

Your first model in five minutes

Step 1 — install Rust (once per machine)

```shell
curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh
```

Step 2 — create a project

```shell
cargo new hello_burn
cd hello_burn
cargo add burn --features wgpu
```

Step 3 — define a tiny network

```rust
use burn::module::Module;
use burn::nn;
use burn::tensor::{backend::Backend, Tensor};

/// Two-layer perceptron: Linear → ReLU → Linear.
#[derive(Module, Debug)]
pub struct Mlp<B: Backend> {
    linear1: nn::Linear<B>,
    linear2: nn::Linear<B>,
    relu: nn::Relu,
}

impl<B: Backend> Mlp<B> {
    /// Works for any rank `D`; the last dimension must match linear1's input size.
    pub fn forward<const D: usize>(&self, x: Tensor<B, D>) -> Tensor<B, D> {
        let x = self.linear1.forward(x);
        let x = self.relu.forward(x);
        self.linear2.forward(x)
    }
}
```
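To see what `forward` computes numerically, here is a dependency-free sketch of the same linear → ReLU → linear data flow on plain vectors. The weight layout (row-major, `[out][in]`) and the helper names `linear`, `relu`, and `mlp_forward` are assumptions for illustration. Burn’s `nn::Linear` manages its own weights and layout.

```rust
/// Dense layer y = W·x + b, with W stored row-major as [out][in].
fn linear(w: &[Vec<f32>], b: &[f32], x: &[f32]) -> Vec<f32> {
    w.iter()
        .zip(b)
        .map(|(row, bi)| row.iter().zip(x).map(|(wij, xj)| wij * xj).sum::<f32>() + bi)
        .collect()
}

/// Element-wise ReLU: max(v, 0).
fn relu(x: Vec<f32>) -> Vec<f32> {
    x.into_iter().map(|v| v.max(0.0)).collect()
}

/// Same data flow as `Mlp::forward`: linear1 → ReLU → linear2.
pub fn mlp_forward(
    w1: &[Vec<f32>],
    b1: &[f32],
    w2: &[Vec<f32>],
    b2: &[f32],
    x: &[f32],
) -> Vec<f32> {
    let h = relu(linear(w1, b1, x));
    linear(w2, b2, &h)
}
```

With `w1 = [[1, -1], [0.5, 0.5]]`, `b1 = [0, 0]`, `w2 = [[1, 2]]`, `b2 = [1]`, and input `[2, 4]`, the hidden layer is `[-2, 3]`. ReLU turns it into `[0, 3]`, and the output is `0·1 + 3·2 + 1 = 7`.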

Step 4 — train on MNIST

```shell
git clone https://github.com/tracel-ai/burn
cd burn/examples/mnist
cargo run --release
```

The first run compiles shaders and benchmarks kernels, so it takes longer; subsequent runs reuse that work and start much faster.

Moving models in and out of Burn

| From | To | Steps |
|---|---|---|
| ONNX | Burn | `burn-import` crate, one function call |
| PyTorch `.pt` | Burn | Load the weights directly with `PyTorchFileRecorder` |
| Burn | ONNX | Not yet supported (planned); for now, run the model with Burn’s own forward pass |
| Burn | WebAssembly | `cargo build --target wasm32-unknown-unknown` |

Real examples you can run today

| Example | What you learn | Command |
|---|---|---|
| MNIST training | End-to-end CNN training | `cargo run --release` |
| Custom CSV dataset | Read any tabular file | `cargo run --release` |
| Image classification web demo | Browser inference | `trunk serve` |
| Text classification | Transformer fine-tuning | `cargo run --release -- --dataset ag_news` |
| Custom WGPU kernel | Write your own GPU op | `cargo run --release` |

All examples are self-contained: clone the repository, change into the example’s directory, and run the command above.

Common questions & answers

Q1: Is Rust hard to learn?

Most engineers pick up the syntax in a weekend. Burn hides most of the hard parts, and the Rust compiler reports ownership errors at compile time, not at runtime.

Q2: Does Burn work on Windows?

Yes. Vulkan, WGPU, CUDA, and LibTorch back-ends are tested on Windows CI every day.

Q3: Do I need bleeding-edge drivers?

- CUDA: driver ≥ 515
- Vulkan: any driver that supports Vulkan 1.2
- Apple: macOS 12+

Q4: I saved a model last month—will it still load?

Burn 0.14–0.16 can read older snapshots if you enable the `record-backward-compat` feature. Load and re-save once to migrate to the new format.

Q5: How do I write a custom GPU kernel?

Write the kernel in WGSL in a `.wgsl` file and register it with the WGPU back-end. The [custom-wgpu-kernel](./examples/custom-wgpu-kernel) example has 60 lines of copy-pasteable code.

Where to go next

- Read the Burn Book: https://burn.dev/books/burn/
  Short chapters: Tensors → Modules → Training → Advanced.
- Chat with the community on Discord: https://discord.gg/uPEBbYYDB6
  Ask anything; the #beginners channel is active.
- Contribute
  - Architecture overview: contributor-book
  - Good first issues: GitHub

License

Burn is dual-licensed under MIT and Apache 2.0. Use it in open-source or commercial projects with no strings attached.

Ready to ship your next model in pure Rust?

Clone the repo, run an example, and see the difference.