Build a Real-Time AI Voice Assistant in 15 Minutes


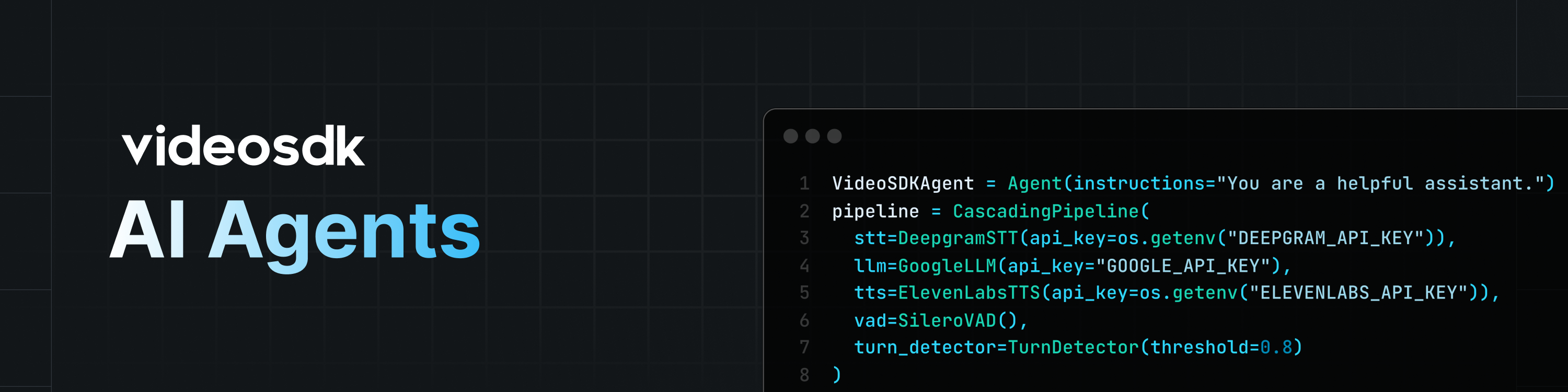

A beginner-friendly, open-source walkthrough based on VideoSDK AI Agents

For junior-college graduates and curious makers worldwide

1. Why You Can Build a Voice Agent Today

Until recently, creating an AI that listens, thinks, and speaks in real time required three separate teams:

- Speech specialists (speech-to-text, text-to-speech)
- AI researchers (large language models)
- Real-time engineers (WebRTC, SIP telephony)

VideoSDK wraps all three layers into a single Python package called videosdk-agents.

In under 100 lines of code, your agent can join a live meeting, phone call, or mobile app session as an AI participant.

2. What the Framework Gives You

| Capability | Human description | Everyday example |
|---|---|---|
| Real-time voice & video | The AI hears and speaks instantly | Joins a Zoom-style call to answer questions |
| SIP & telephone access | Connects to landlines and mobiles | Customers dial a 1-800 number and talk to the AI |
| Virtual avatars | Adds a talking face to the voice | A digital host presents your product on a live stream |
| Multi-model support | Swap between GPT, Gemini, Claude, etc. | Test which model gives the best customer experience |
| Cascading pipeline | Mix-and-match STT, LLM, TTS providers | Use Google STT + OpenAI LLM + ElevenLabs TTS |
| Turn detection | Knows when a human stops talking | Prevents awkward interruptions |
| Function tools | The AI can call external APIs | “Book the 3 p.m. flight to Tokyo” |
| MCP & A2A protocols | Connect to external data or other agents | One agent books flights, another sends calendar invites |

3. Architecture at a Glance


Image: High-level diagram showing your Python agent, VideoSDK cloud, and end-users in browsers, phones, or mobile apps.

Your code → VideoSDK cloud → Users anywhere

The cloud handles audio routing, NAT traversal, and telephone gateways so you can focus on business logic.

4. Prerequisites Checklist

| Item | How to get it | Note |
|---|---|---|
| Python 3.12+ | python.org or Anaconda | Works on Windows, macOS, Linux |
| VideoSDK account | app.videosdk.live | Free tier available |
| Auth token | Dashboard → API Keys | Keep it private |
| Meeting ID | Dashboard → “Create Room” or REST call | Re-usable link |
| Third-party keys | Whichever AI provider you choose | Examples below |
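If you prefer the REST route for the Meeting ID, the request can be sketched with nothing but the standard library. This is a hedged example, not official client code: the endpoint `https://api.videosdk.live/v2/rooms`, the bare-token `Authorization` header, and the `roomId` response field are assumptions taken from VideoSDK's public REST documentation, so check the current API reference before relying on them. The helper names are made up for this sketch.

```python
import json
import urllib.request

# Assumed endpoint; verify against the current VideoSDK API reference.
ROOMS_URL = "https://api.videosdk.live/v2/rooms"


def build_create_room_request(token: str) -> urllib.request.Request:
    """Build (but do not send) the POST request that creates a room."""
    return urllib.request.Request(
        ROOMS_URL,
        data=b"{}",  # empty JSON body
        method="POST",
        headers={"Authorization": token, "Content-Type": "application/json"},
    )


def create_room(token: str) -> str:
    """Send the request and return the new Meeting ID."""
    request = build_create_room_request(token)
    with urllib.request.urlopen(request, timeout=10) as response:
        payload = json.load(response)
    return payload["roomId"]  # assumed response field
```

Call `create_room(your_token)` once, then paste the returned ID wherever the tutorial asks for a Meeting ID.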

5. Installation Step by Step

5.1 Create a virtual environment

macOS / Linux

    python3 -m venv venv
    source venv/bin/activate

Windows

    python -m venv venv
    venv\Scripts\activate

5.2 Install the core package

    pip install videosdk-agents
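Before moving on, a quick sanity check can save some head-scratching. The sketch below uses only the standard library and assumes two things from this guide: the Python 3.12+ requirement in the checklist and `videosdk` as the top-level import name. The helper names are invented for illustration.

```python
import importlib.util
import sys

MIN_PYTHON = (3, 12)  # minimum version from the prerequisites checklist


def meets_minimum(version=sys.version_info, minimum=MIN_PYTHON) -> bool:
    """Return True if the (major, minor) version is at least the minimum."""
    return tuple(version[:2]) >= minimum


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be found without importing it."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except (ImportError, ValueError):
        return False


if __name__ == "__main__":
    print("Python version OK:", meets_minimum())
    print("videosdk importable:", is_installed("videosdk"))
```

If the second line prints `False`, the package probably went into a different environment; activate the virtual environment and install again.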

5.3 Optional plugins

    # Example: install turn-detection to avoid cutting users off
    pip install videosdk-plugins-turn-detector

6. Your First Agent—Greets and Good-byes

Save as main.py:

    from videosdk.agents import Agent


    class VoiceAgent(Agent):
        def __init__(self):
            super().__init__(
                instructions="You are a friendly assistant. Keep answers short."
            )

        async def on_enter(self):
            # Greeting spoken when the agent enters the session.
            await self.session.say("Hello! How can I help you today?")

        async def on_exit(self):
            # Farewell spoken when the agent exits the session.
            await self.session.say("Goodbye and take care!")

7. Teaching the AI to Fetch the Weather

7.1 External tool (re-usable in any agent)

    import aiohttp

    from videosdk.agents import function_tool


    @function_tool
    async def get_weather(latitude: str, longitude: str):
        """Return the current temperature for the given coordinates."""
        url = (
            "https://api.open-meteo.com/v1/forecast"
            f"?latitude={latitude}&longitude={longitude}&current=temperature_2m"
        )
        async with aiohttp.ClientSession() as session:
            async with session.get(url) as resp:
                data = await resp.json()
                return {"temperature": data["current"]["temperature_2m"], "unit": "°C"}

Register it inside the agent:

    super().__init__(
        instructions="You are a helpful assistant that can check the weather.",
        tools=[get_weather]
    )
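The weather tool does two jobs: it builds a query URL and it picks one number out of the JSON reply. Splitting those into plain functions makes them easy to test without a network call. The sketch below is one possible way to do that, not part of VideoSDK; the function names are made up, and the sample payload is hand-written to mirror the `current.temperature_2m` field the tool reads (the `current_units` field is an assumption based on Open-Meteo's documented response shape).

```python
from urllib.parse import urlencode

BASE_URL = "https://api.open-meteo.com/v1/forecast"


def build_weather_url(latitude: float, longitude: float) -> str:
    """Build the forecast URL, letting urlencode handle escaping."""
    query = urlencode(
        {"latitude": latitude, "longitude": longitude, "current": "temperature_2m"}
    )
    return f"{BASE_URL}?{query}"


def extract_temperature(payload: dict) -> dict:
    """Pull the current temperature out of a decoded JSON response."""
    current = payload.get("current") or {}
    if "temperature_2m" not in current:
        raise ValueError("response has no current.temperature_2m field")
    units = payload.get("current_units") or {}
    return {
        "temperature": current["temperature_2m"],
        "unit": units.get("temperature_2m", "°C"),  # fall back to the tool's default
    }


# Hand-written sample payload for illustration only, not real weather data.
sample = {"current_units": {"temperature_2m": "°C"}, "current": {"temperature_2m": 21.4}}
result = extract_temperature(sample)
```

Raising `ValueError` on a malformed reply gives the agent a clear failure to report instead of a `KeyError` deep inside the tool.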

7.2 Internal tool (only this agent uses it)

    class VoiceAgent(Agent):
        @function_tool
        async def get_horoscope(self, sign: str):
            return {"sign": sign, "text": f"{sign} stars say: code more, worry less."}

8. Connecting Ears, Brain, and Mouth—The Pipeline

Below we use Google Gemini’s real-time model. To use OpenAI, AWS, or any other supported provider, replace the model object passed to the pipeline.

    from videosdk.plugins.google import GeminiRealtime, GeminiLiveConfig
    from videosdk.agents import RealTimePipeline

    model = GeminiRealtime(
        model="gemini-2.0-flash-live-001",
        api_key="YOUR_GOOGLE_API_KEY",  # or set GOOGLE_API_KEY in .env
        config=GeminiLiveConfig(
            voice="Leda",  # 8 voices: Puck, Charon, Kore, Fenrir, Aoede, Leda, Orus, Zephyr
            response_modalities=["AUDIO"]
        )
    )

    pipeline = RealTimePipeline(model=model)
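The comment about `.env` deserves a small illustration. Most projects use the third-party `python-dotenv` package for this; the sketch below is a dependency-free stand-in that shows the idea, assuming a simple `KEY=value` file with optional quotes and `#` comments. The function name `load_env_file` is invented here and is not part of VideoSDK.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env") -> dict:
    """Copy KEY=value pairs from a file into os.environ without overwriting."""
    loaded = {}
    env_file = Path(path)
    if not env_file.exists():
        return loaded
    for raw_line in env_file.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blanks, comments, and lines that are not assignments.
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip().strip('"').strip("'")
        # Variables already set in the shell take precedence.
        if key and key not in os.environ:
            os.environ[key] = value
            loaded[key] = value
    return loaded
```

Call `load_env_file()` at the top of `main.py`, then read the key with `os.environ["GOOGLE_API_KEY"]` instead of pasting it into the source. Remember to keep `.env` out of version control.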

9. Starting the Session and Keeping It Alive

    import asyncio

    from videosdk.agents import AgentSession, WorkerJob, RoomOptions, JobContext


    async def start_session(ctx: JobContext):
        session = AgentSession(agent=VoiceAgent(), pipeline=pipeline)
        try:
            await ctx.connect()
            await session.start()
            await asyncio.Event().wait()  # run until Ctrl+C
        finally:
            # Always release the session and the room connection.
            await session.close()
            await ctx.shutdown()


    def make_context():
        return JobContext(
            room_options=RoomOptions(
                room_id="YOUR_MEETING_ID",
                auth_token="YOUR_VIDEOSDK_TOKEN",
                name="Weather Bot",
                playground=True  # instant web UI for testing
            )
        )


    if __name__ == "__main__":
        WorkerJob(entrypoint=start_session, jobctx=make_context).start()

Run:

python main.py

The logs should show "Connected to room".
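The `asyncio.Event().wait()` line in `start_session` is what keeps the bot alive: the event is never set, so the coroutine blocks until it is cancelled, and the `finally` block then runs the cleanup. Here is a self-contained sketch of that pattern. `FakeSession` is a hypothetical stand-in that only records calls; it is not a VideoSDK class.

```python
import asyncio


class FakeSession:
    """Hypothetical stand-in for a session; records what was called."""

    def __init__(self):
        self.events = []

    async def start(self):
        self.events.append("started")

    async def close(self):
        self.events.append("closed")


async def run_until_cancelled(session: FakeSession) -> None:
    try:
        await session.start()
        await asyncio.Event().wait()  # never set, so this blocks until cancelled
    finally:
        await session.close()  # runs on Ctrl+C or task cancellation


async def demo() -> list:
    session = FakeSession()
    task = asyncio.create_task(run_until_cancelled(session))
    await asyncio.sleep(0.01)  # let the task reach the wait
    task.cancel()  # simulates the shutdown signal
    try:
        await task
    except asyncio.CancelledError:
        pass
    return session.events


events = asyncio.run(demo())
```

After the run, `events` holds the start call followed by the close call, which shows that cleanup happens even though the wait itself never finishes normally.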

10. Client Apps—Talk to Your AI from Any Device

Pick any official quick-start repo:

- Web (JavaScript)
- React
- React Native
- Android
- Flutter
- iOS

Each client asks for the same Meeting ID your bot uses.

11. Supported Providers & Plug-ins

| Category | Providers |
|---|---|
| Real-time models | OpenAI, Google Gemini, AWS NovaSonic |
| Speech-to-Text | OpenAI, Google, Sarvam AI, Deepgram, Cartesia |
| Large Language Models | OpenAI, Google, Sarvam AI, Anthropic, Cerebras |
| Text-to-Speech | 20+ providers including ElevenLabs, AWS Polly, Google, Resemble, Speechify, Hume, Groq, LMNT |
| Voice Activity Detection | Silero VAD |
| Turn Detection | Turn Detector |
| Avatars | Simli |
| SIP Trunking | Twilio |

12. Frequently Asked Questions

Q1: Do I need a public IP or server?

No. All traffic is relayed through VideoSDK; localhost is fine.

Q2: How much free credit do I get?

$10 upon sign-up—enough for roughly 3–4 hours of voice conversation.

Q3: Can multiple bots join the same meeting?

Yes. Start each bot with its own WorkerJob and the same room ID.

Q4: How do I switch to Chinese voices?

Choose a TTS engine that supports zh-CN or set Gemini’s voice parameter to a Chinese-capable voice.

Q5: I see ModuleNotFoundError.

Install the missing plugin, e.g. pip install videosdk-plugins-xxx.

13. Going Further—Plug Your Bot into a 1-800 Number

- Buy a number on Twilio and enable SIP Trunking.
- Point the SIP domain to VideoSDK’s provided address.
- Set sip=True in RoomOptions.

Your bot now answers real-world phone calls.

Official demo: AI Telephony Demo

14. Writing Custom Plug-ins

Need an unsupported model?

Follow the BUILD_YOUR_OWN_PLUGIN.md guide:

- Inherit base STT/LLM/TTS classes.
- Implement required methods (transcribe, generate, synthesize).
- Submit a PR so everyone can pip install your work.
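To get a feel for the "inherit and implement" step, here is a rough sketch of the pattern in plain Python. Everything in it is hypothetical: `SketchTTS` stands in for a base class and is not the VideoSDK one, and the real method signatures are defined in BUILD_YOUR_OWN_PLUGIN.md and may differ. The toy engine just returns silence so the example runs anywhere.

```python
import asyncio
from abc import ABC, abstractmethod


class SketchTTS(ABC):
    """Hypothetical base class; the real one lives in the VideoSDK repo."""

    @abstractmethod
    async def synthesize(self, text: str) -> bytes:
        """Turn text into raw audio bytes."""


class SilenceTTS(SketchTTS):
    """Toy engine: returns 16-bit mono PCM silence sized to the text length."""

    def __init__(self, samples_per_char: int = 800):
        self.samples_per_char = samples_per_char

    async def synthesize(self, text: str) -> bytes:
        n_samples = len(text) * self.samples_per_char
        return b"\x00\x00" * n_samples  # two zero bytes per 16-bit sample


audio = asyncio.run(SilenceTTS().synthesize("hi"))
```

The abstract base class is the useful part of the design: Python refuses to instantiate a subclass that forgets `synthesize`, so a missing method shows up at startup rather than in the middle of a call.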

15. Next Steps & Real-Life Ideas

| Week 1 | Week 2 | Week 3 |
|---|---|---|
| Replace weather tool with your CRM lookup | Add a Simli avatar for product demos | Spin up three phone lines for sales, support, and billing |

The code is open-source and the Discord community is active—ask questions, share plug-ins, or just show off your talking fridge.

Happy building!