Baidu ERNIE 4.5: A New Era in Multimodal AI with 10 Open-Source Models

The Landmark Release: 424B Parameters Redefining Scale

Baidu has released the ERNIE 4.5 model family: a suite of 10 openly accessible AI models ranging from 0.3B to 424B total parameters. Baidu reports strong results for the family on multimodal understanding and generation benchmarks (see Benchmark Performance). The collection comprises three categories:

1. Large Language Models (LLMs)

- ERNIE-4.5-300B-A47B-Base (300 billion total parameters, 47 billion activated per token)
- ERNIE-4.5-21B-A3B-Base (21 billion total parameters, 3 billion activated per token)

2. Vision-Language Models (VLMs)

- ERNIE-4.5-VL-424B-A47B-Base (424 billion total parameters, 47 billion activated; the largest in the family)
- ERNIE-4.5-VL-28B-A3B-Base (28 billion total parameters, 3 billion activated)

3. Compact Models

- ERNIE-4.5-0.3B-Base (300 million parameters)

All models support a 128K-token context window and are available on Hugging Face and AI Studio under the Apache 2.0 license.
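The model names encode their size. As a small sketch, assuming the convention that the first `NB` is the total parameter count and an `-ANB` suffix is the number of parameters activated per token, the names listed above can be parsed like this. The helper `parse_model_name` and its output fields are illustrative, not part of any Baidu tooling.

```python
import re

# Model names copied from the list above.
MODEL_NAMES = [
    "ERNIE-4.5-300B-A47B-Base",
    "ERNIE-4.5-21B-A3B-Base",
    "ERNIE-4.5-VL-424B-A47B-Base",
    "ERNIE-4.5-VL-28B-A3B-Base",
    "ERNIE-4.5-0.3B-Base",
]

# Assumed convention: <total>B is the total size, -A<active>B the activated size.
NAME_PATTERN = re.compile(
    r"ERNIE-4\.5-(?:VL-)?(?P<total>[\d.]+)B(?:-A(?P<active>[\d.]+)B)?"
)


def parse_model_name(name):
    """Return total/activated parameter counts (in billions) read from a name."""
    match = NAME_PATTERN.match(name)
    if match is None:
        raise ValueError(f"unrecognized model name: {name}")
    total = float(match.group("total"))
    active_text = match.group("active")
    # No -A suffix: treat every parameter as active for each token.
    active = float(active_text) if active_text else total
    return {
        "name": name,
        "multimodal": "-VL-" in name,
        "total_b": total,
        "active_b": active,
        "active_fraction": active / total,
    }


for model_name in MODEL_NAMES:
    info = parse_model_name(model_name)
    print(f"{info['name']}: {info['active_b']}B of {info['total_b']}B active "
          f"({info['active_fraction']:.1%})")
```

Read this way, the largest language model runs only about a sixth of its weights for any given token, which is what makes a 300B-parameter MoE practical to serve.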

Three Architectural Breakthroughs

1. Heterogeneous Multimodal MoE Architecture

Heterogeneous MoE architecture enabling cross-modal learning

The core innovation is a Mixture-of-Experts (MoE) framework built from four components:

- Modality-isolated routing: Directs text, image, and video data to specialized processing pathways
- Cross-modal parameter sharing: Foundational layers process features common to all modalities
- Modality-specific experts: Dedicated parameters preserve the unique characteristics of each data type
- Balanced training: A router orthogonal loss prevents any single modality from dominating during learning

According to Baidu, this design lets the modalities reinforce one another instead of competing: visual understanding improves while text comprehension improves as well.
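The routing idea can be sketched in a few lines of plain Python. This is a toy illustration under stated assumptions, not Baidu's implementation: the expert pools, the top-k selection, and the exact form of the orthogonality penalty (squared Frobenius norm of the row-normalized router Gram matrix minus the identity) are all assumptions made for the example.

```python
import math
import random


def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def make_router(num_experts, dim, rng):
    """Random router weights: one row of length `dim` per expert."""
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_experts)]


def route_token(token, modality, routers, top_k=2):
    """Pick top_k experts, but only from the pool owned by the token's modality."""
    rows = routers[modality]
    logits = [sum(w * x for w, x in zip(row, token)) for row in rows]
    probs = softmax(logits)
    chosen = sorted(range(len(rows)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)
    # Expert ids carry the modality, so a text token can never hit a vision expert.
    return [((modality, i), probs[i] / norm) for i in chosen]


def orthogonality_penalty(rows):
    """Assumed penalty: ||R R^T - I||_F^2 with each router row scaled to unit length."""
    unit = []
    for row in rows:
        length = math.sqrt(sum(w * w for w in row))
        unit.append([w / length for w in row])
    penalty = 0.0
    for i, a in enumerate(unit):
        for j, b in enumerate(unit):
            dot = sum(x * y for x, y in zip(a, b))
            target = 1.0 if i == j else 0.0
            penalty += (dot - target) ** 2
    return penalty


rng = random.Random(0)
routers = {"text": make_router(4, 8, rng), "vision": make_router(4, 8, rng)}
token = [rng.gauss(0.0, 1.0) for _ in range(8)]
assignment = route_token(token, "vision", routers)
print(assignment)
print(orthogonality_penalty(routers["vision"]))
```

The penalty is zero when the router rows point in mutually orthogonal directions and grows as experts' routing vectors align, which is one way to discourage experts from collapsing onto the same inputs.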

2. Computational Efficiency Innovations

ERNIE 4.5 improves training and inference efficiency through four techniques:

- Intra-node expert parallelism: Optimizes resource allocation during distributed training
- FP8 mixed-precision training: Accelerates computation while maintaining accuracy
- Convolutional code quantization: Enables 4-bit/2-bit quantization that Baidu describes as lossless
- Dynamic role-switching: Allows real-time resource reallocation during inference

Together, these techniques reach 47% Model FLOPs Utilization (MFU) during pre-training. MFU is the share of the hardware's theoretical peak FLOPs that is actually spent on model computation.
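To make the MFU figure concrete, here is a minimal sketch of how the ratio is usually computed. It assumes the common rule of thumb of roughly 6 FLOPs per active parameter per training token (forward plus backward, ignoring attention). Every number in the example is hypothetical and was chosen only so the arithmetic lands on 47%; none of them comes from Baidu's training setup.

```python
def model_flops_utilization(active_params, tokens_per_second,
                            num_devices, peak_flops_per_device):
    """Achieved model FLOPs per second divided by the cluster's peak FLOPs per second."""
    # Rule of thumb: ~6 FLOPs per active parameter per token for training.
    achieved = 6.0 * active_params * tokens_per_second
    available = num_devices * peak_flops_per_device
    return achieved / available


# Hypothetical cluster and throughput, for illustration only.
mfu = model_flops_utilization(
    active_params=47e9,           # activated parameters per token
    tokens_per_second=1.0e6,      # assumed cluster-wide training throughput
    num_devices=1000,             # assumed accelerator count
    peak_flops_per_device=6.0e14  # assumed peak FLOPs per second per device
)
print(f"MFU = {mfu:.0%}")
```

For an MoE model the activated parameter count, not the total, belongs in the numerator, since only the routed experts do work for each token.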

3. Modality-Specialized Optimization

Each model variant undergoes tailored post-training:

- Language models: Supervised Fine-Tuning (SFT) and Unified Preference Optimization (UPO)
- Vision-language models: Dual-mode capability:
  - Thinking mode: Enhanced reasoning for complex problems
  - Non-thinking mode: High-speed perception tasks

Benchmark Performance: Setting New Standards

Language Model Superiority

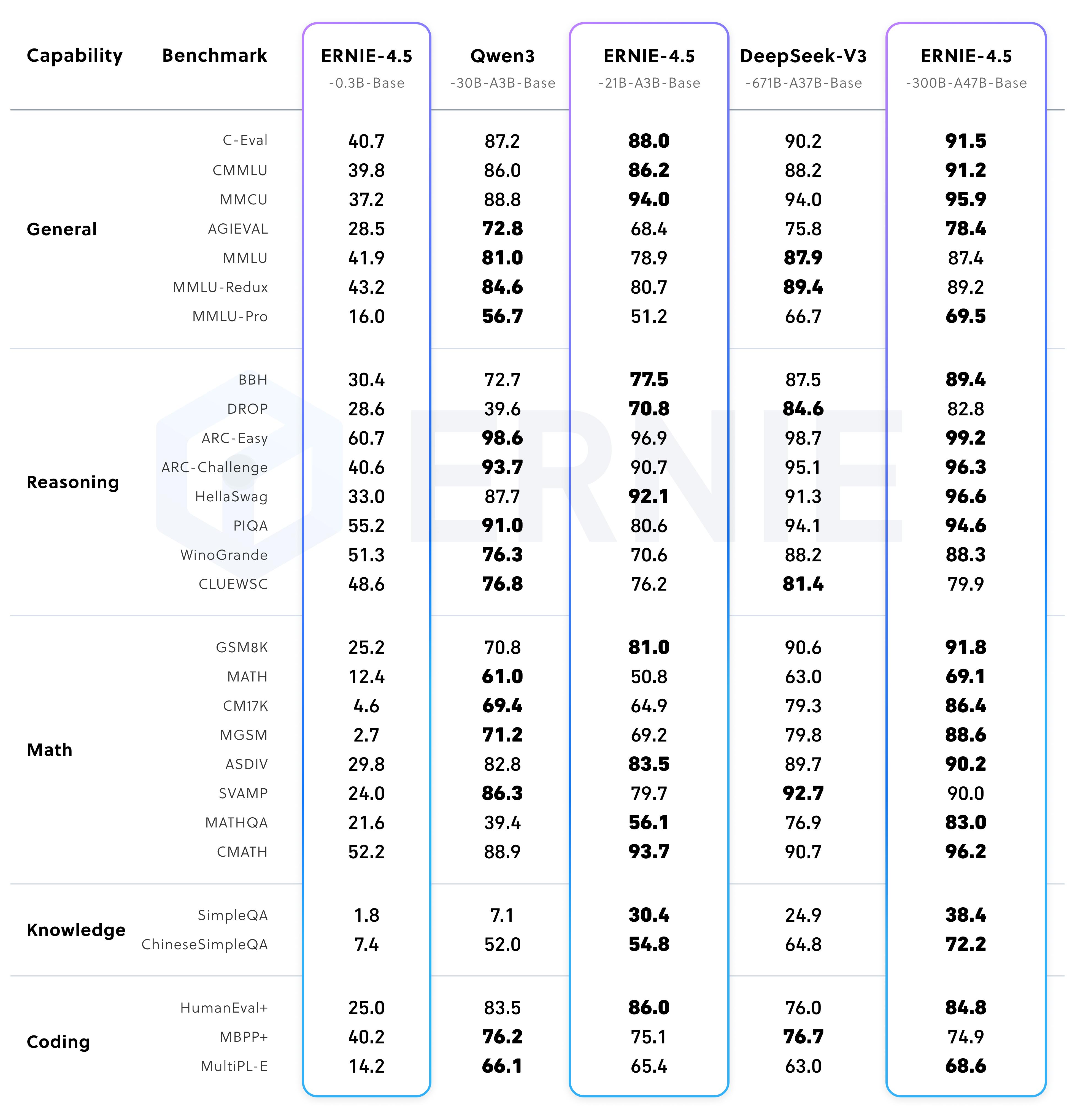

ERNIE 4.5 benchmark comparisons against leading models

- ERNIE-4.5-300B-A47B-Base outperformed DeepSeek-V3-671B on 22 out of 28 benchmarks
- ERNIE-4.5-21B-A3B-Base exceeded Qwen3-30B on math and reasoning benchmarks (BBH, CMATH) with about 30% fewer total parameters
- Instruction following and knowledge: State-of-the-art scores on the IFEval, Multi-IF, and SimpleQA benchmarks

Vision-Language Model Capabilities

Vision-language model performance across modalities

The compact ERNIE-4.5-VL-28B-A3B matched or exceeded Qwen2.5-VL-32B while activating significantly fewer parameters per token.

Developer Toolkit: Industrial-Grade Implementation

ERNIEKit: Training & Optimization Suite

    graph LR
        A[ERNIEKit] --> B(Pre-training)
        A --> C(SFT/DPO Fine-tuning)
        A --> D(LoRA Adaptation)
        A --> E(Quantization)

Key capabilities:

- Full-parameter SFT and Direct Preference Optimization (DPO)
- Parameter-efficient LoRA fine-tuning
- Quantization-Aware Training (QAT)
- Multi-node distributed training support
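To show why LoRA counts as parameter-efficient, the sketch below implements the standard LoRA update, W + (alpha / r) · B · A, in plain Python and counts the trainable parameters. It illustrates the general technique only; it does not show how ERNIEKit implements it, and the function names are made up for this example.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_effective_weight(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, where B is d_out x r and A is r x d_in."""
    rank = len(a)
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def lora_trainable_params(d_out, d_in, rank):
    """Only A (rank x d_in) and B (d_out x rank) are trained; W stays frozen."""
    return rank * (d_out + d_in)


# Tiny rank-1 example on a 2 x 2 frozen weight.
frozen_w = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]      # d_out x r
lora_a = [[3.0, 4.0]]        # r x d_in
merged = lora_effective_weight(frozen_w, lora_a, lora_b, alpha=2.0)
print(merged)

full = 1024 * 1024
adapter = lora_trainable_params(1024, 1024, rank=8)
print(f"{adapter} trainable vs {full} full ({adapter / full:.2%})")
```

For a 1024 x 1024 layer at rank 8, the adapter trains 16,384 values in place of about a million, which is why LoRA fits on much smaller hardware than full-parameter SFT.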

Basic implementation workflow:

    # Download base model
    huggingface-cli download baidu/ERNIE-4.5-0.3B-Paddle

    # Launch supervised fine-tuning
    erniekit train examples/configs/ERNIE-4.5-0.3B/sft/run_sft_8k.yaml

FastDeploy: Production Inference System

Offline inference example:

    from fastdeploy import LLM, SamplingParams

    llm = LLM(model="baidu/ERNIE-4.5-0.3B-Paddle", max_model_len=32768)
    outputs = llm.generate(
        "Explain quantum computing basics",
        SamplingParams(temperature=0.7),
    )

API server deployment:

    python -m fastdeploy.entrypoints.openai.api_server \
        --model "baidu/ERNIE-4.5-VL-424B-A47B" \
        --max-model-len 131072 \
        --port 9904
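Once the server is running, a client can talk to it over HTTP. The sketch below uses only the Python standard library and assumes the server exposes the usual OpenAI-style `/v1/chat/completions` route on the port shown above, as the `openai.api_server` module name suggests. The route, the `"default"` model value, and the response shape are assumptions; check the FastDeploy documentation before relying on them.

```python
import json
import urllib.request


def build_chat_request(prompt, base_url="http://localhost:9904",
                       model="default", max_tokens=256):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,  # assumed placeholder; the server may ignore or require a name
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",  # assumed OpenAI-compatible route
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60):
    """Send the request and decode the JSON body; needs a running server."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


request = build_chat_request("Explain quantum computing basics")
print(request.full_url)
# reply = send(request)  # uncomment once the API server is up
```

Keeping request construction separate from sending makes the payload easy to inspect or log before any traffic reaches the model.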

Quantization & Hardware Support
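As a rough illustration of what low-bit weight quantization involves, the sketch below applies plain symmetric round-to-nearest 4-bit quantization to a handful of weights. This is a generic textbook scheme used here as a stand-in. It is not the convolutional code quantization described earlier and says nothing about which hardware or bit widths FastDeploy supports.

```python
def quantize_int4(weights):
    """Map floats to signed 4-bit codes in [-8, 7] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 7.0 if max_abs > 0 else 1.0
    codes = [max(-8, min(7, round(w / scale))) for w in weights]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate floats from the integer codes."""
    return [c * scale for c in codes]


weights = [0.70, -0.35, 0.10, 0.0, -0.70]
codes, scale = quantize_int4(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(codes, scale, max_error)
```

Each weight now needs 4 bits plus a share of the scale, and the reconstruction error is bounded by half a quantization step. Schemes that aim to be near-lossless at 4 or 2 bits have to do considerably more than this.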

Practical Applications: Real-World Implementation

Enterprise Solution Cookbook

    graph TD
        A[Conversational AI] --> B(Customer Service Bots)
        C[Knowledge Retrieval] --> D(Enterprise Search)
        E[Visual Understanding] --> F(Quality Control)
        G[Document Processing] --> H(Contract Analysis)

Implementation Guides:

- Conversational Systems
  - Web-integrated dialogue implementation
  - Context-aware response generation techniques
- Enterprise Knowledge Engines
  - Private knowledge base integration
  - Domain-specific fine-tuning methodologies
- Industrial Vision Systems

Multimodal implementation example:

    # Industrial defect detection sketch.
    # load_model is a placeholder for whichever loader your serving stack
    # provides; it is not defined in this snippet.
    def analyze_production_line(image_path):
        vlm = load_model("ERNIE-4.5-VL-28B-A3B")
        return vlm.generate(
            image=image_path,
            text="Identify manufacturing defects in this image",
            mode="non-thinking",  # Prioritize speed for real-time processing
        )

Open Ecosystem & Licensing

All models are released under the Apache License 2.0, which permits commercial use and modification. The technical report details the architectural innovations and validation methodology:

    @misc{ernie2025technicalreport,
        title={ERNIE 4.5 Technical Report},
        author={Baidu ERNIE Team},
        year={2025},
        url={https://yiyan.baidu.com/blog/publication/}
    }

“The heterogeneous MoE architecture represents a fundamental advance in multimodal learning. By enabling specialized processing pathways while maintaining shared representations, ERNIE 4.5 achieves complementary improvements across text and visual domains rarely seen in prior systems.” – Technical Report Excerpt

Developers can access the complete model repository through: