30 AI Core Concepts Explained: A Founder’s Guide to Cutting Through the Hype

Photo by Nahrizul Kadri on Unsplash

This definitive guide decodes 30 essential AI terms through real-world analogies and visual explanations. Designed for non-technical decision-makers, it serves as both an educational resource and strategic reference for AI implementation planning.

I. Foundational Architecture

1. Large Language Models (LLMs)

Digital Reasoning Engines

-

Power ChatGPT, Claude, and Gemini applications -

Process 100k+ word contexts (equivalent to a novel) -

Example: Summarizing research papers vs. generating marketing copy

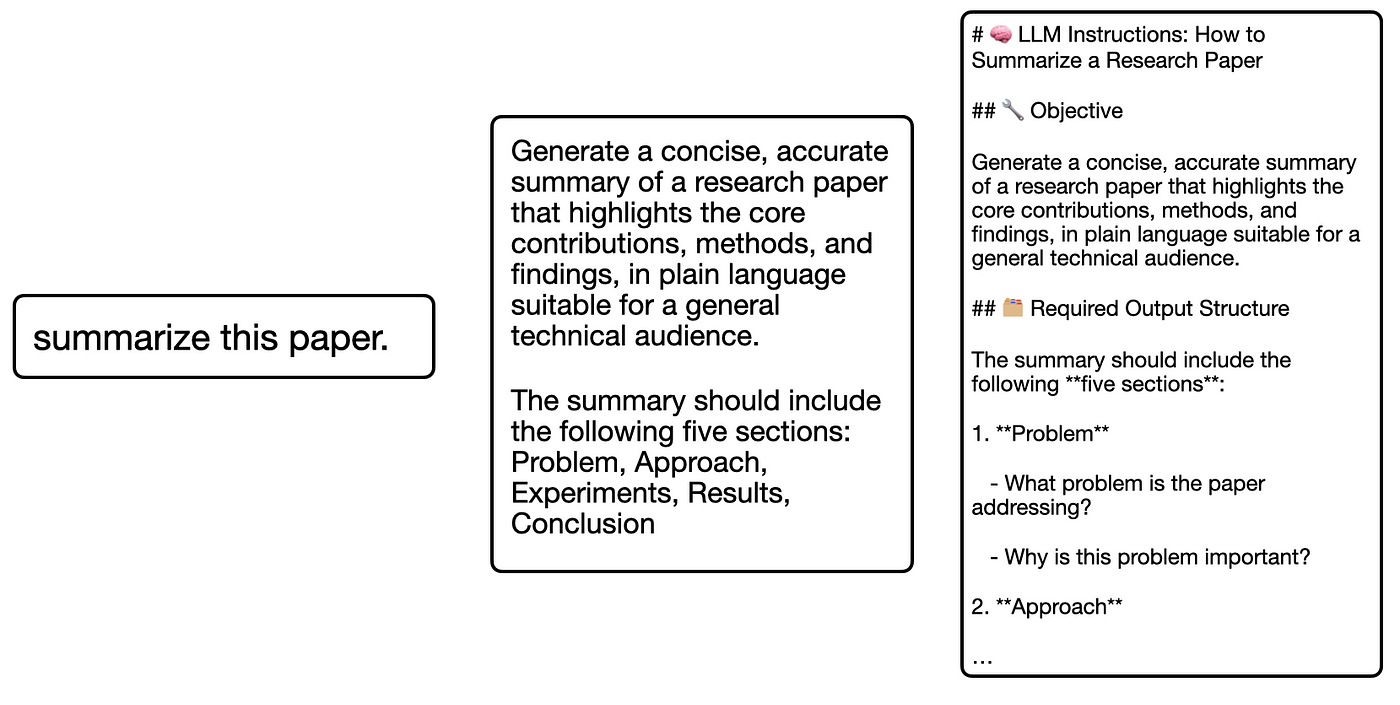

Three approaches to document summarization (Author’s original graphic)

2. Context Window Capacity

The Memory Constraint

-

Standard models: ~100k tokens (1 token ≈ ¾ word) -

Cutting-edge: Gemini 1.5 Pro handles 1M+ tokens -

Business impact: Determines document analysis depth

3. Inference Mechanics

Token-by-Token Generation

-

Works like predictive text on steroids -

Cost factor: Each output word requires separate computation -

Speed vs. quality tradeoffs in enterprise deployments

Visualizing LLM text generation (Author’s original animation)

II. Interaction Optimization

4. Prompt Engineering

The Art of AI Communication

5 proven techniques:

-

Specificity: “Summarize this clinical trial report in 3 bullet points for FDA reviewers” -

Contextualization: “The user is a first-year biology student” -

Structuring: Using XML-like tags for clarity -

AI-Assisted Refinement: “Help me improve this prompt” -

Demonstration: Showing ideal response formats

5. Few-Shot Prompting

Learning by Example

-

Crucial for complex formatting needs -

Case study: Generating legal contracts with predefined clauses -

Reduces misinterpretation by 63% (Industry benchmarks)

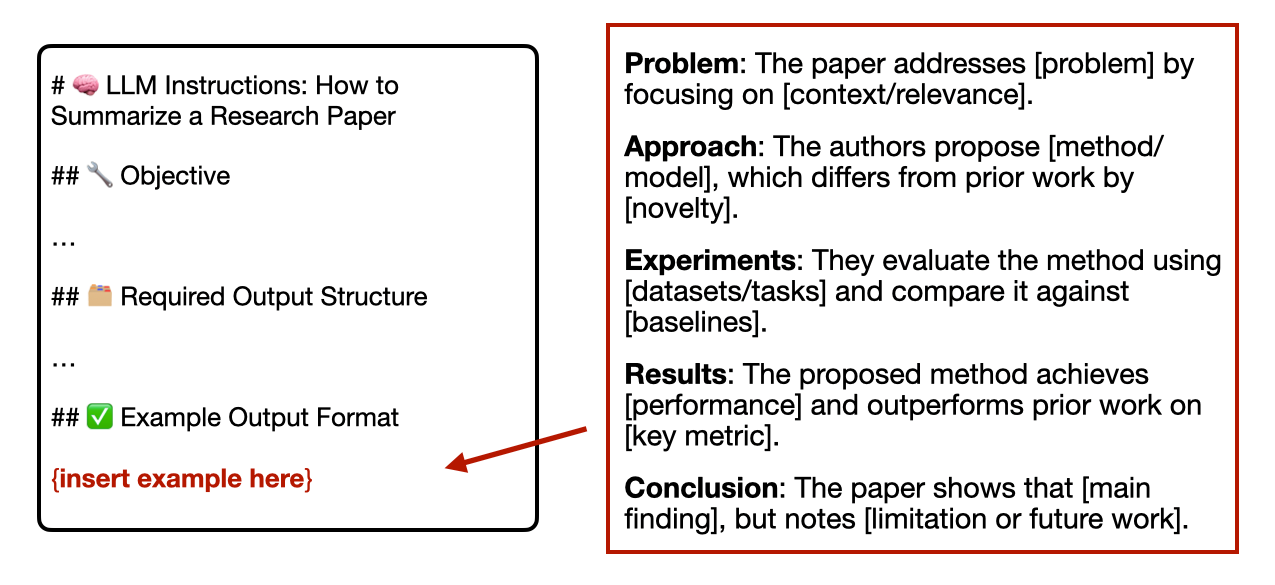

Template-based prompt enhancement (Author’s original graphic)

III. Security Frameworks

6. Prompt Injection Attacks

Emerging Threat Vectors

-

Data exfiltration risks -

Inappropriate content generation -

Unauthorized API access -

Real-world example: Chatbot manipulated to reveal API keys

7. AI Guardrails

Three-Layer Defense

-

Input Sanitization: Regex filters and sentiment analysis -

Output Validation: Secondary LLM content screening -

API Governance: Strict permission tiers

Enterprise-grade protection workflow (Author’s original diagram)

IV. Knowledge Enhancement Systems

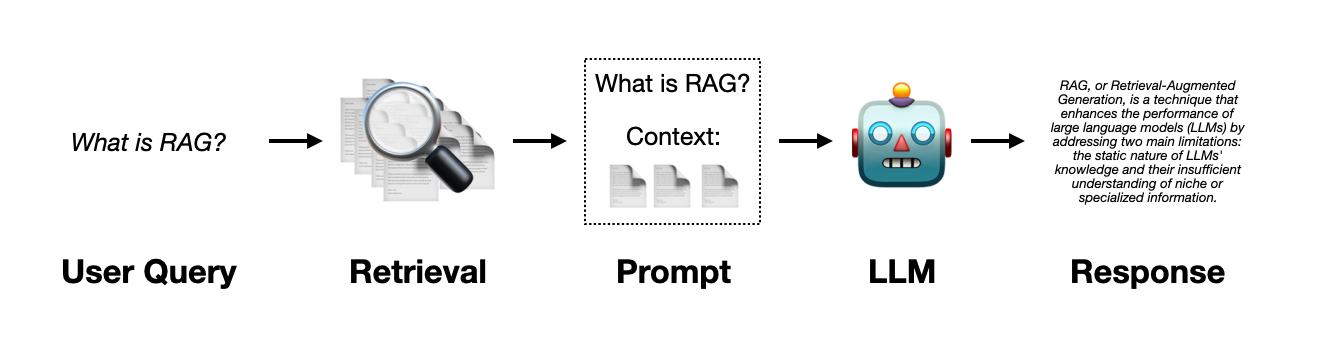

8. Retrieval-Augmented Generation (RAG)

Dynamic Knowledge Integration

-

Combines LLMs with updatable databases -

Eliminates retraining needs for new information -

Implementation cost: 40% lower than custom models

Enterprise RAG architecture (Author’s original schematic)

9. Semantic Search

Meaning-Based Retrieval

-

Surpasses keyword matching limitations -

Technical process: -

Convert text to vectors -

Calculate cosine similarity -

Return most relevant chunks

-

-

Accuracy improvement: 72% over traditional search

V. Advanced Implementations

10. AI Agents

Autonomous Workflow Systems

-

Tier 1: Basic task automation -

Tier 2: API-integrated operations -

Tier 3: Multi-agent collaboration networks -

Market projection: $450B industry by 2030

11. Function Calling

Bridging Language and Action

-

Natural language → API execution -

Common integrations: -

CRM systems -

Payment gateways -

IoT device controls

-

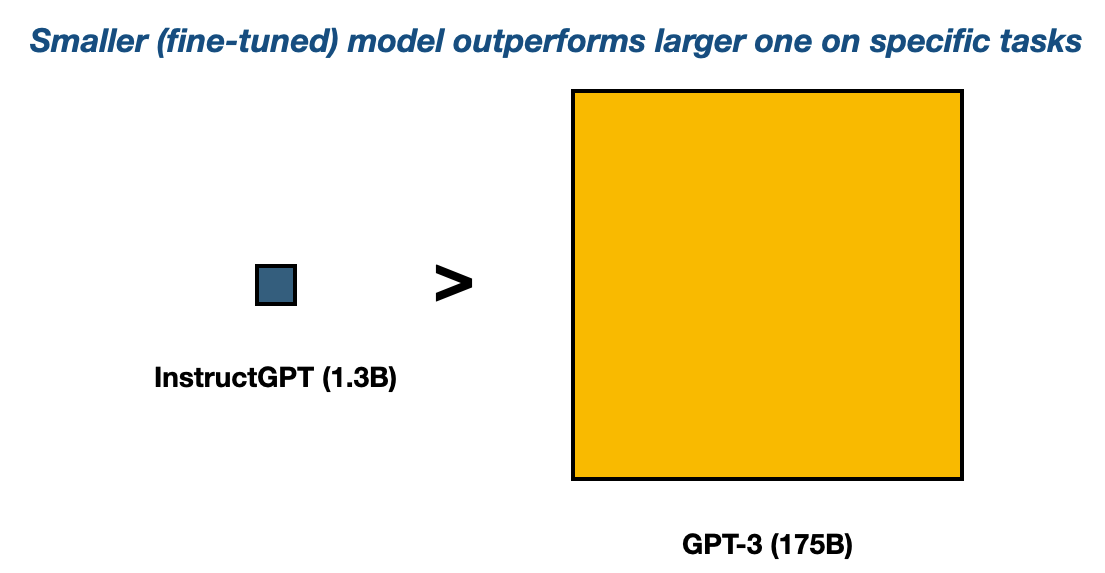

12. Model Fine-Tuning

Specialization Strategies

| Model Type | Parameters | Use Case |

|---|---|---|

| Foundation | 100B+ | General-purpose |

| Fine-Tuned | 1-10B | Industry-specific (e.g., pharma compliance) |

Performance comparison from OpenAI research

VI. Cost Analysis

13. Training Economics

The Scaling Challenge

-

-

Model size (parameters)

-

-

-

Data volume (10T+ tokens)

-

-

-

Compute resources ($100M+ for top models)

-

14. Inference Costs

Operational Considerations

-

Per-token pricing models -

Hidden expenses: -

Latency penalties -

Error correction cycles -

Infrastructure maintenance

-

Training vs. inference cost curves (Industry research data)

VII. Emerging Frontiers

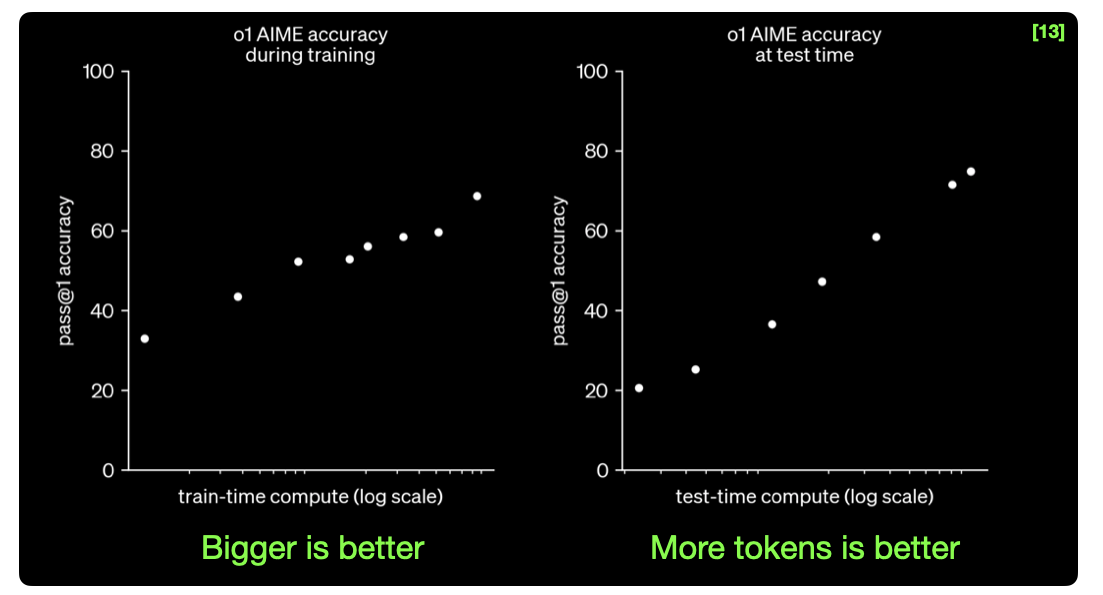

15. Reasoning Models

Step-by-Step Cognition

-

deliberation tags -

Audit trails for regulatory compliance -

Accuracy boost: 89% on STEM problems

16. Model Distillation

Efficiency Breakthroughs

-

GPT-4o-mini: 70% cost reduction -

Knowledge transfer techniques -

Edge device deployment potential

Strategic Takeaways

-

Implementation Roadmap

-

Start with RAG + prompt engineering -

Progress to fine-tuning for specialization -

Deploy agents for workflow automation

-

-

Cost Optimization

-

Match model size to task complexity -

Monitor token usage patterns -

Consider distilled models for high-volume tasks

-

-

Risk Management

-

Implement input/output validation layers -

Conduct regular security audits -

Maintain human oversight protocols

-

-

Future-Proofing

-

Allocate R&D budget for agent systems -

Monitor reasoning model advancements -

Build adaptable API architectures

-

Bookmark this guide as your AI decision-making compass. For implementation case studies or technical deep dives, engage with our expert community in the comments section.