I Built a Polymarket Trading Bot: A Complete Record of Strategy, Parameter Optimization, and Real Backtesting

A few weeks ago, I had an idea: build my own automated trading bot for Polymarket. What drove me to spend several weeks on full-time development was a simple observation: this platform has numerous market inefficiencies waiting to be captured. Some bots are already exploiting these opportunities, but they are far from covering them all. The untapped profit opportunities still vastly outnumber the active bots.

Today, my bot is complete and operational. It’s fully automated; I simply start it and let it run. At the beginning of each “Bitcoin Up or Down” round, the bot monitors the market only during the first 2 minutes (this is configurable). If, during this window, the price of either “Yes” or “No” drops rapidly enough, it triggers a sophisticated automated strategy.

Core Bot Logic: Capturing Instant Volatility for Hedged Profits

The bot’s logic stems from a strategy I used to execute manually, which I automated for efficiency and speed. It operates specifically on the “BTC 15-minute UP/DOWN” market.

How does the bot work?

It runs a live watcher that automatically switches to the current BTC 15-minute round, streams the best bid/ask prices via WebSocket, displays a fixed terminal UI, and allows full control via text commands.

Manual Mode: You can place trades directly.

- buy up <amount> / buy down <amount>: Spends a specified USD amount to buy.
- buyshares up <shares> / buyshares down <shares>: Places a limit (GTC) order at the current best ask to buy an exact number of shares.
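
The two manual commands can be sketched as a small parser that turns a text command into an order intent. This is a hypothetical sketch, not the bot's code: the function name `parseManualCommand`, the `bestAsk` object, and the rule that `buy` converts USD into whole shares at the best ask are all assumptions.

```javascript
// Hypothetical sketch: parse a manual-mode command into an order intent.
// Assumptions (not taken from the bot's source): best asks are numbers in
// (0, 1], and "buy" converts the USD amount to whole shares at the best ask.
function parseManualCommand(line, bestAsk) {
  const [verb, side, rawQty, ...rest] = line.trim().split(/\s+/);
  if (rest.length > 0) throw new Error(`unexpected arguments: ${rest.join(" ")}`);
  if (side !== "up" && side !== "down") throw new Error(`unknown side: ${side}`);

  const qty = Number(rawQty);
  if (!Number.isFinite(qty) || qty <= 0) throw new Error(`invalid quantity: ${rawQty}`);

  const price = bestAsk[side];
  if (verb === "buy") {
    // Spend a USD amount: size in whole shares at the current best ask.
    // The small epsilon guards against float error (0.7 / 0.1 is 6.999...).
    return { kind: "spendUsd", side, price, shares: Math.floor(qty / price + 1e-9) };
  }
  if (verb === "buyshares") {
    // Exact share count, as a GTC limit order at the current best ask.
    return { kind: "limitGTC", side, price, shares: qty };
  }
  throw new Error(`unknown command: ${verb}`);
}

console.log(parseManualCommand("buy up 10", { up: 0.5, down: 0.52 }));
```

With a best ask of 0.50, `buy up 10` sizes to 20 shares. Returning an intent instead of sending an order keeps the parsing testable on its own.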

Auto Mode: This is the core. It runs a repeating two-leg cycle.

Leg 1 (Catching the Dump): The bot watches for price movement only during the first windowMin minutes of the round. If either side’s price drops by at least movePct (e.g., 15%) over roughly 3 seconds, it triggers Leg 1 and buys the side that just dumped.
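
A minimal sketch of the Leg 1 trigger follows. The article states only the rule, so several details here are assumptions: ticks arrive as `{ t, up, down }` with `t` in milliseconds, the "roughly 3 seconds" becomes a `lookbackMs` of 3000, and the reference price is the highest ask seen within that lookback. `DumpDetector` and `onTick` are hypothetical names.

```javascript
// Sketch of the Leg 1 dump trigger (hypothetical names and tick format).
// The reference price is the highest ask inside the lookback window; the
// article does not say exactly which reference the real bot uses.
class DumpDetector {
  constructor({ movePct = 0.15, windowMin = 2, lookbackMs = 3000 } = {}) {
    this.movePct = movePct;
    this.windowMs = windowMin * 60 * 1000;
    this.lookbackMs = lookbackMs;
    this.recent = []; // ticks inside the lookback window
  }

  // Returns "up" or "down" for the side that just dumped, otherwise null.
  onTick(tick, roundStartMs) {
    // Leg 1 may only trigger during the first windowMin minutes of the round.
    if (tick.t - roundStartMs > this.windowMs) return null;

    this.recent.push(tick);
    // Drop ticks older than the lookback window (the current tick always stays).
    while (tick.t - this.recent[0].t > this.lookbackMs) this.recent.shift();

    for (const side of ["up", "down"]) {
      const peak = Math.max(...this.recent.map((r) => r[side]));
      if (peak > 0 && (peak - tick[side]) / peak >= this.movePct) return side;
    }
    return null;
  }
}

const detector = new DumpDetector({ movePct: 0.15 });
detector.onTick({ t: 0, up: 0.5, down: 0.5 }, 0);
console.log(detector.onTick({ t: 2000, up: 0.42, down: 0.57 }, 0));
```

Here UP falls from 0.50 to 0.42 (a 16% drop) within 2 seconds, so the second tick reports `"up"`. Using the window peak rather than the single price from exactly 3 seconds earlier is a design choice: it also catches a dump that briefly bounced.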

Leg 2 (The Hedge): After Leg 1 triggers, the bot will never buy the same side again. It waits for Leg 2 (the hedge) and only triggers it when the following condition is met: leg1EntryPrice + oppositeAsk <= sumTarget.

When this condition is satisfied, it buys the opposite side. After Leg 2 completes, the cycle finishes, and the bot resets to watch for the next dump opportunity using the same parameters.

If a new round begins before an active cycle is complete, the bot abandons the open cycle and restarts fresh on the next round with the same settings.
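
The Leg 2 rule, the reset after a completed cycle, and the round rollover fit in a small state machine. The sketch below is hypothetical: `TwoLegCycle`, its tick shape `{ slug, up, down }`, and the small floating-point tolerance are assumptions, and instead of placing orders it returns the order it would send. `dumpedSide` stands for whatever the Leg 1 trigger reports on that tick.

```javascript
// Sketch of one auto-mode cycle as a state machine (hypothetical names).
// It returns order intents instead of sending them, so the same logic can be
// replayed against recorded data.
const EPS = 1e-9; // float tolerance: 0.40 + 0.55 lands just above 0.95

class TwoLegCycle {
  constructor({ shares, sumTarget = 0.95 }) {
    this.shares = shares;
    this.sumTarget = sumTarget;
    this.reset(null);
  }

  reset(slug) {
    this.slug = slug;
    this.leg1 = null; // { side, price } once Leg 1 has filled
  }

  onTick({ slug, up, down }, dumpedSide) {
    // A new round started before the cycle finished: abandon it, start fresh.
    if (slug !== this.slug) this.reset(slug);
    const ask = { up, down };

    if (this.leg1 === null) {
      if (!dumpedSide) return null;
      this.leg1 = { side: dumpedSide, price: ask[dumpedSide] };
      return { leg: 1, side: dumpedSide, price: this.leg1.price, shares: this.shares };
    }

    // Leg 1 is open: never buy the same side again, only consider the hedge.
    const other = this.leg1.side === "up" ? "down" : "up";
    if (this.leg1.price + ask[other] <= this.sumTarget + EPS) {
      this.reset(this.slug); // cycle complete; watch for the next dump
      return { leg: 2, side: other, price: ask[other], shares: this.shares };
    }
    return null;
  }
}
```

Because order placement is separated from the decision logic, the same class can drive both the live bot and a replay of recorded snapshots.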

Key Parameter Configuration

The auto mode is started with the command: auto on <shares> [sum=0.95] [move=0.15] [windowMin=2]

- shares: Position size used for each leg.
- sum: The hedge threshold (sumTarget). Leg 2 triggers only when Leg 1’s entry price plus the opposite side’s current ask is at or below this value. For example, with sum=0.95 and a Leg 1 entry at 0.40, the hedge fires once the opposite ask is 0.55 or lower.
- move: The dump threshold required to trigger Leg 1 (e.g., 0.15 for 15%).
- windowMin: How many minutes from the round start Leg 1 is allowed to trigger.
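
The `auto on` syntax can be parsed with defaults filled in for omitted options. This is a hypothetical sketch: `parseAutoOn` and `AUTO_DEFAULTS` are illustrative names, and the `key=value` handling is an assumption about how the bot reads its optional arguments.

```javascript
// Hypothetical parser for: auto on <shares> [sum=0.95] [move=0.15] [windowMin=2]
// Defaults mirror the command syntax shown above.
const AUTO_DEFAULTS = { sum: 0.95, move: 0.15, windowMin: 2 };

function parseAutoOn(line) {
  const tokens = line.trim().split(/\s+/);
  if (tokens[0] !== "auto" || tokens[1] !== "on") {
    throw new Error("usage: auto on <shares> [sum=0.95] [move=0.15] [windowMin=2]");
  }
  const shares = Number(tokens[2]);
  if (!Number.isFinite(shares) || shares <= 0) {
    throw new Error(`invalid shares: ${tokens[2]}`);
  }

  const config = { shares, ...AUTO_DEFAULTS };
  for (const token of tokens.slice(3)) {
    const [key, raw, ...extra] = token.split("=");
    // hasOwnProperty avoids accepting inherited names such as "toString".
    if (!Object.prototype.hasOwnProperty.call(AUTO_DEFAULTS, key)) {
      throw new Error(`unknown option: ${key}`);
    }
    const value = Number(raw);
    if (raw === undefined || raw === "" || extra.length > 0 || !Number.isFinite(value)) {
      throw new Error(`invalid value in "${token}"`);
    }
    config[key] = value;
  }
  return config;
}

console.log(parseAutoOn("auto on 20 sum=0.9"));
```

Options not given on the command line keep their defaults, so `auto on 20` alone yields sum 0.95, move 0.15, and windowMin 2.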

In simple terms, the bot’s strategic idea is: wait for a violent price dump, buy the side that just dumped, then wait for the market to stabilize and hedge by buying the opposite side once the price is right, ensuring the combined cost of the two legs stays below $1 (at or below sum). Because exactly one side resolves to $1, a matched pair of shares bought for less than $1 locks in a profit before fees, which is why this approaches an arbitrage-like, very low-risk position.
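
A worked example makes the hedge arithmetic concrete. The sketch ignores fees and slippage; `lockedProfit` is an illustrative name.

```javascript
// Worked example of why the combined price must stay below $1.
// Assumption: fees and slippage are ignored. Exactly one side resolves to $1,
// so a matched pair of shares always pays $1 in total.
function lockedProfit(shares, leg1Price, leg2Price) {
  const cost = shares * (leg1Price + leg2Price);
  const payout = shares * 1;
  return payout - cost;
}

// 20 shares: Leg 1 at 0.40, hedge at 0.55 (sum 0.95): pay $19, receive $20.
console.log(lockedProfit(20, 0.4, 0.55).toFixed(2)); // 1.00
```

The margin per pair is 1 minus the combined price, so sum=0.95 leaves at most 5 cents per share pair before costs.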

From Theory to Validation: Building My Own Backtesting Framework

However, this logic needed validation. Does it work long-term? More importantly, the bot has many tunable parameters (shares, sum, movePct, windowMin, etc.). Which parameter combinations are optimal and maximize profit?

My first thought was to run the bot live for a week and observe. The problem was time: a week of live trading tests only one parameter set, and I needed to test many.

My second idea was to backtest using historical data from Polymarket’s CLOB API. Unfortunately, for the BTC 15-minute UP/DOWN market, the historical endpoints kept returning empty datasets. Without historical price ticks, the backtest cannot detect a “dump over ~3 seconds” and therefore never triggers Leg 1, producing 0 cycles and 0% ROI regardless of parameters.

Upon further investigation, I realized other users faced the same issue retrieving historical data for certain markets. Testing other markets that did return data led me to conclude: for this specific market, historical data is simply not retained.

Due to this limitation, the only reliable way to backtest this strategy was to create my own historical dataset by recording live best-ask prices while the bot was running.

How did I record the data?

The recorder writes snapshots to disk containing:

- Timestamp
- Round identifier (slug)
- Seconds remaining
- UP/DOWN token IDs
- UP/DOWN best ask prices
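
One simple way to persist these snapshots is JSON Lines, one object per line. This is a sketch under assumptions: the file format, field names, and the helpers `makeSnapshot` and `appendSnapshot` are hypothetical; the article only lists which values each snapshot contains.

```javascript
// Sketch of a snapshot recorder writing JSON Lines (hypothetical format).
const fs = require("fs");

function makeSnapshot(market, nowMs = Date.now()) {
  const { slug, roundEndMs, upTokenId, downTokenId, upAsk, downAsk } = market;
  return {
    ts: nowMs, // timestamp in milliseconds
    slug, // round identifier
    secondsRemaining: Math.max(0, Math.round((roundEndMs - nowMs) / 1000)),
    upTokenId,
    downTokenId,
    upAsk, // best ask for UP
    downAsk, // best ask for DOWN
  };
}

function appendSnapshot(path, snapshot) {
  // Appending one line at a time means a crash loses at most the last line.
  fs.appendFileSync(path, JSON.stringify(snapshot) + "\n");
}
```

In a live process this would be called on a timer, for example once per second with `setInterval`, using the latest best asks from the WebSocket feed. At that rate, several days of recording adds up to a sizeable file, so a line-oriented format that can be streamed back is convenient.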

The “recorded backtest” then replays these snapshots and deterministically applies the same auto logic. This guarantees access to the high-frequency data required to detect dumps and hedge conditions.
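
The replay step can be sketched as loading the recorded lines, ordering them by timestamp, and feeding each snapshot to a strategy function. Assumptions: snapshots are stored as JSON Lines with a numeric `ts` field (a hypothetical format), and `loadSnapshots`, `replay`, and the `strategy` callback are illustrative names.

```javascript
// Sketch of a deterministic replay over recorded snapshots.
const fs = require("fs");

function loadSnapshots(path) {
  return fs
    .readFileSync(path, "utf8")
    .split("\n")
    .filter((line) => line.trim() !== "")
    .map((line) => JSON.parse(line))
    .sort((a, b) => a.ts - b.ts); // sort is stable, so ties keep file order
}

// The same snapshots in the same order always yield the same orders, as long
// as the strategy has no hidden inputs such as the wall clock or randomness.
function replay(snapshots, strategy) {
  const orders = [];
  for (const snap of snapshots) {
    const order = strategy(snap);
    if (order) orders.push({ ts: snap.ts, ...order });
  }
  return orders;
}
```

Keeping the strategy a pure function of each snapshot (plus its own internal state) is what lets many parameter sets be compared on exactly the same data.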

In total, I collected 6 GB of data over 4 days. I could have recorded more, but deemed this sufficient for testing different parameter sets.

Parameter Testing: The Gap Between Heaven and Hell

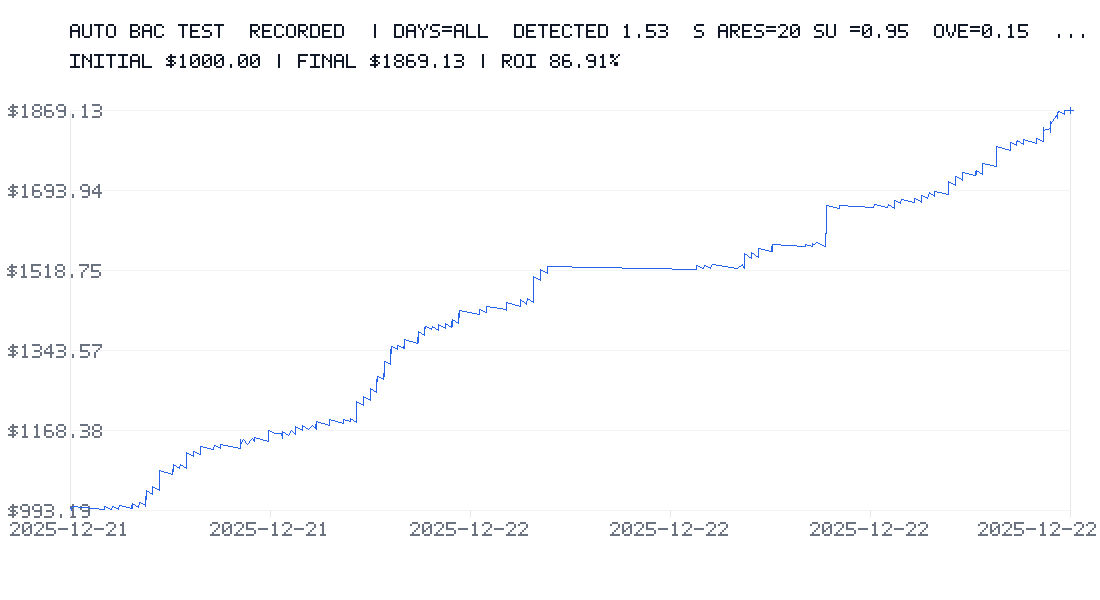

I first tested the following parameter set:

- Initial Balance: $1,000
- 20 shares per trade
- sumTarget = 0.95
- Dump Threshold = 15%
- Monitoring Window = 2 minutes

To stay conservative, I also applied a constant 0.5% fee rate and a 2% spread. The backtest showed an 86% Return on Investment over just a few days, turning $1,000 into $1,869.
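
The effect of these costs on a single hedged cycle can be estimated as follows. The article does not say exactly how the fee and spread were applied, so this sketch assumes the 2% spread inflates each fill price multiplicatively (capped at $1) and the 0.5% fee is charged on each leg's USD notional. `legCost` and `hedgedCyclePnl` are hypothetical names.

```javascript
// Sketch of conservative cost modelling for one hedged cycle.
// Assumptions: spread multiplies the ask, fee applies to each leg's notional.
const FEE_RATE = 0.005;
const SPREAD = 0.02;

function legCost(shares, ask, { feeRate = FEE_RATE, spread = SPREAD } = {}) {
  const fillPrice = Math.min(1, ask * (1 + spread)); // never pay more than $1
  const notional = shares * fillPrice;
  return notional * (1 + feeRate);
}

function hedgedCyclePnl(shares, leg1Ask, leg2Ask, opts) {
  const cost = legCost(shares, leg1Ask, opts) + legCost(shares, leg2Ask, opts);
  return shares * 1 - cost; // exactly one side pays $1 per share at resolution
}

console.log(hedgedCyclePnl(20, 0.4, 0.55).toFixed(4)); // 0.5231
```

Under these assumptions, a 20-share cycle hedged at a combined ask of 0.95 keeps about $0.52 of its $1.00 gross margin, which shows why a tighter sum target leaves more room for costs.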

Next, I tested a much riskier parameter set:

- Initial Balance: $1,000
- 20 shares per trade
- sumTarget = 0.6
- Dump Threshold = 1%
- Monitoring Window = 15 minutes

Result: A 50% loss after 2 days.

This clearly demonstrates that parameter selection is the most critical factor determining profit or loss. The right settings can yield substantial gains, while wrong ones can lead to significant losses.
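
Because results swing this much, it pays to test many combinations on the same recorded data. A minimal grid-sweep sketch follows; `runBacktest(params, initialBalance)` is a hypothetical stand-in for the replay-based backtest that returns a final balance, and `grid` and `sweep` are illustrative names.

```javascript
// Sketch of a parameter sweep over a grid of settings, ranked by ROI.
function* grid(space) {
  const keys = Object.keys(space);
  function* walk(i, current) {
    if (i === keys.length) {
      yield { ...current }; // copy, since `current` is mutated while walking
      return;
    }
    for (const value of space[keys[i]]) {
      current[keys[i]] = value;
      yield* walk(i + 1, current);
    }
  }
  yield* walk(0, {});
}

function sweep(space, runBacktest, initialBalance = 1000) {
  const results = [];
  for (const params of grid(space)) {
    const finalBalance = runBacktest(params, initialBalance);
    results.push({ params, roi: (finalBalance - initialBalance) / initialBalance });
  }
  return results.sort((a, b) => b.roi - a.roi); // best ROI first
}
```

With only a few days of recorded data, a setting that wins by a narrow margin may simply fit noise; preferring regions of the grid where neighbouring settings also perform well is a common safeguard.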

Acknowledging Backtest Limitations: The Gap Between Model and Reality

Despite including fees and spread, my backtest has its limitations. Understanding these is crucial for assessing the strategy’s real-world risks.

- Limited Data Sample: Using only a few days of data may not represent full market cycles.
- Overly Idealized Price Simulation: The backtest relies on recorded best-ask snapshots. In reality, orders can be partially filled or filled at different prices. Order book depth and available liquidity are not modeled.
- Insufficient Time Granularity: Data is sampled once per second. Micro-movements within a second are not captured, yet much can happen in that interval.
- Fixed Slippage Assumption: Slippage is constant in the backtest; it does not simulate variable network latency (e.g., 200–1500 ms) or network spikes.
- Instant Execution Assumption: Each leg is treated as an “instant” execution, with no order queue or resting orders.
- Simplified Fee Model: Fees are applied uniformly, while in reality they may depend on market/token, maker vs. taker status, fee tiers, etc.
- Conservative Loss Handling: To keep the estimate pessimistic, I applied a rule: if Leg 2 does not execute before the market resolves, Leg 1 is treated as a total loss. This is intentionally conservative but not always realistic:
  - Sometimes Leg 1 can be closed early.
  - Sometimes Leg 1 expires in-the-money and wins.
  - Sometimes losses are partial, not total.

  Therefore, the backtest may overestimate losses, but it provides a useful “worst-case” reference.
- No Self-Market Impact: The backtest assumes you are a pure price taker with no market impact. In reality, your orders can:
  - Move the order book.
  - Attract or repel other traders.
  - Cause non-linear slippage.
- No Operational Issue Simulation: The backtest does not simulate API rate limits, errors, order rejections, process pauses, timeouts, reconnections, or instances where the bot is busy and misses signals.
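
The Conservative Loss Handling rule can be sketched next to a settlement that uses the actual outcome, which shows how much the pessimistic rule can understate results. `settlePessimistic`, `settleWithOutcome`, and the cycle fields are hypothetical names; costs are the USD amounts already paid for each leg.

```javascript
// Pessimistic rule: an unhedged Leg 1 at resolution is always a total loss.
function settlePessimistic({ shares, leg1Cost, leg2Cost = null }) {
  if (leg2Cost === null) return -leg1Cost;
  return shares * 1 - (leg1Cost + leg2Cost); // hedged pair pays $1 per share
}

// Outcome-aware settlement: an unhedged Leg 1 pays $1 per share if its side won.
function settleWithOutcome({ shares, leg1Side, leg1Cost, leg2Cost = null }, winner) {
  if (leg2Cost === null) {
    return (leg1Side === winner ? shares : 0) - leg1Cost;
  }
  return shares * 1 - (leg1Cost + leg2Cost);
}
```

For example, an unhedged 20-share Leg 1 bought for $8 is booked at -$8 by the pessimistic rule even when that side wins, where it would actually return +$12. Hedged cycles settle identically under both rules.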

The conclusion is: Backtesting is extremely valuable for identifying promising parameter ranges. However, because some real-world effects cannot be modeled, it is not a 100% guarantee of future profits.

Infrastructure and Future Optimization Directions

Currently, I plan to run this bot on a Raspberry Pi to avoid consuming my main machine’s resources and to enable 24/7 operation.

But there is significant room for optimization:

- Performance: Rewriting in Rust instead of JavaScript would provide far better performance and faster processing speeds.
- Networking: Running a dedicated Polygon RPC node could further reduce latency.
- Deployment: Hosting the bot on a VPS close to Polymarket’s servers would also significantly cut down delay.

Undoubtedly, there are other optimizations I haven’t yet discovered. For now, I’m learning Rust, as it’s becoming an essential language in Web3 development.

Frequently Asked Questions

Q: What is the core strategy of this bot?

A: The core is a “two-leg hedging cycle.” First, it monitors for a violent price dump on either side shortly after a round starts and immediately buys the side that dumped (Leg 1). Then it waits for the market to stabilize and buys the opposite side to hedge (Leg 2) once Leg 1’s entry price plus the opposite side’s ask is at or below the hedge threshold (sum), thereby locking in profits or minimizing risk.

Q: Which parameter is the most important?

A: Based on my tests, the Dump Threshold (move) and the Hedge Threshold (sum) are the two most critical. The Dump Threshold determines when to enter: too sensitive and it fires on false triggers, too sluggish and it misses opportunities. The Hedge Threshold determines when to hedge and lock in profit: an improper setting can prevent the hedge from completing or leave only a minimal profit. The windowMin (monitoring window) length also directly affects how many opportunities can be captured.

Q: If the backtest shows high returns, does that guarantee live trading profits?

A: No, it does not. Backtesting is a simulation based on historical data (even if self-recorded). It cannot fully replicate all complexities of live trading, such as partial order fills, market depth impact, the market impact of your own large orders, network latency, API stability, etc. The primary purpose of backtesting is to validate strategy logic and optimize parameter ranges, not to guarantee future profits.

Q: Why choose the BTC 15-minute market? Is this strategy applicable to other markets?

A: This market was chosen for its relatively good liquidity and its fixed-timeframe nature, which facilitates automation. The strategy’s core is capturing mean reversion and spread convergence after short-term emotional volatility. Theoretically, it could be adapted to other binary prediction markets with similar characteristics. However, it would require re-testing and parameter optimization for each market’s specific liquidity and volatility profile.

Q: How much capital is needed to run such a bot?

A: The starting capital depends on your “shares” parameter per trade and your risk tolerance. In my tests, I started with $1,000. Importantly, the capital used should be money you can afford to lose entirely, and any single trade’s position size should only constitute a small fraction of your total capital for proper risk management.
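
A quick way to check that a position size is a small fraction of capital is to bound the worst case per cycle. Assumptions in this sketch: a hedged cycle costs at most shares × sumTarget (the hedge rule caps the combined price), an unhedged Leg 1 loses at most its cost, which is below shares × $1, and fees and slippage are ignored. `cycleExposure` is a hypothetical name.

```javascript
// Rough per-cycle exposure check under the assumptions above.
function cycleExposure({ shares, sumTarget, balance }) {
  const maxHedgedCost = shares * sumTarget;
  const maxUnhedgedLoss = shares * 1; // upper bound: Leg 1 price is below $1
  return {
    maxHedgedCost,
    maxUnhedgedLoss,
    worstCaseFraction: maxUnhedgedLoss / balance,
  };
}

console.log(cycleExposure({ shares: 20, sumTarget: 0.95, balance: 1000 }));
```

With the settings from my first test (20 shares, sumTarget 0.95, $1,000), a single cycle risks at most about 2% of the balance.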

Q: You mentioned rewriting in Rust. What’s wrong with JavaScript?

A: JavaScript is excellent for development speed and prototyping. However, for high-frequency, low-latency trading applications, Rust offers near-zero-overhead runtime performance, finer memory control, and stronger concurrency handling. These help reduce processing overhead when handling large volumes of real-time data, which can provide a timing edge in competitive quantitative trading.

Summary and Outlook

Building this Polymarket bot was a complete, hands-on journey: from strategy conception and automation to rigorous backtesting. It showed me that even in seemingly noisy markets, it is possible to systematically capture some inefficiencies through clear logic and strict parameter discipline. At the same time, the gap between backtest and live trading constantly reminds me of the paramount importance of risk management.

The greatest takeaway wasn’t a “holy grail” guaranteed-profit strategy, but a methodology: Translate an intuitive strategy into quantifiable rules -> Build tools for automation -> Overcome data obstacles for backtesting -> Find robust parameter ranges through extensive testing -> Deeply understand the strategy’s limitations.

Looking ahead, beyond continuous optimization of code performance and infrastructure, the more important task is observing the strategy’s lifecycle over longer live trading periods and iterating based on changing market conditions. In the world of quantitative trading, there is no permanent solution, only continuous learning and adaptation.

This article documents in detail the technical practice and reasoning behind the personal development of a Polymarket trading bot. All content is based on actual development and testing processes. Trading involves risk, and automated trading can amplify risks. Please evaluate carefully and take full responsibility for your own decisions.