Picture this: You’re a harried AI developer with a beast of a task on your plate—research the latest breakthroughs in quantum computing and whip up a structured report for your team. You fire up a basic AI agent, the kind built on a trusty while loop, and it dives in. It smartly calls a search tool, snags a bunch of paper abstracts, and starts piecing together insights. But before long, chaos ensues: The context window overflows with raw web scraps, the agent starts hallucinating wild tangents, loses sight of the report’s core goal, and spirals into an endless loop of irrelevant “recommendations.” An hour in, you’re staring at your screen, muttering, “This thing’s too shallow.” It’s perfect for quick hits like “What to wear in Tokyo today?” but folds under anything needing days of deep dives.

If that hits a little too close to home, you’re not alone. Over the past year, most AI agents have hovered in this “shallow” realm: simple, elegant, but brittle when complexity kicks in. The good news? A quiet revolution is underway: Agents 2.0, or “deep agents.” These aren’t just reactive loop machines; they’re like seasoned project managers, planning ahead, breaking down tasks, holding onto persistent memories, and even delegating to specialized “sub-teams.” They tackle multi-step marathons spanning hours or days, from zero-to-report market analyses to iterative code builds, without losing the thread.

In this post, we’ll unpack the shift: Why do shallow agents leave us frustrated? How do deep agents rewrite the AI playbook? And crucially, how can you build one today? Stick around—you’ll see this isn’t just a tech tweak; it’s the leap that turns AI from a gadget into a genuine collaborator.

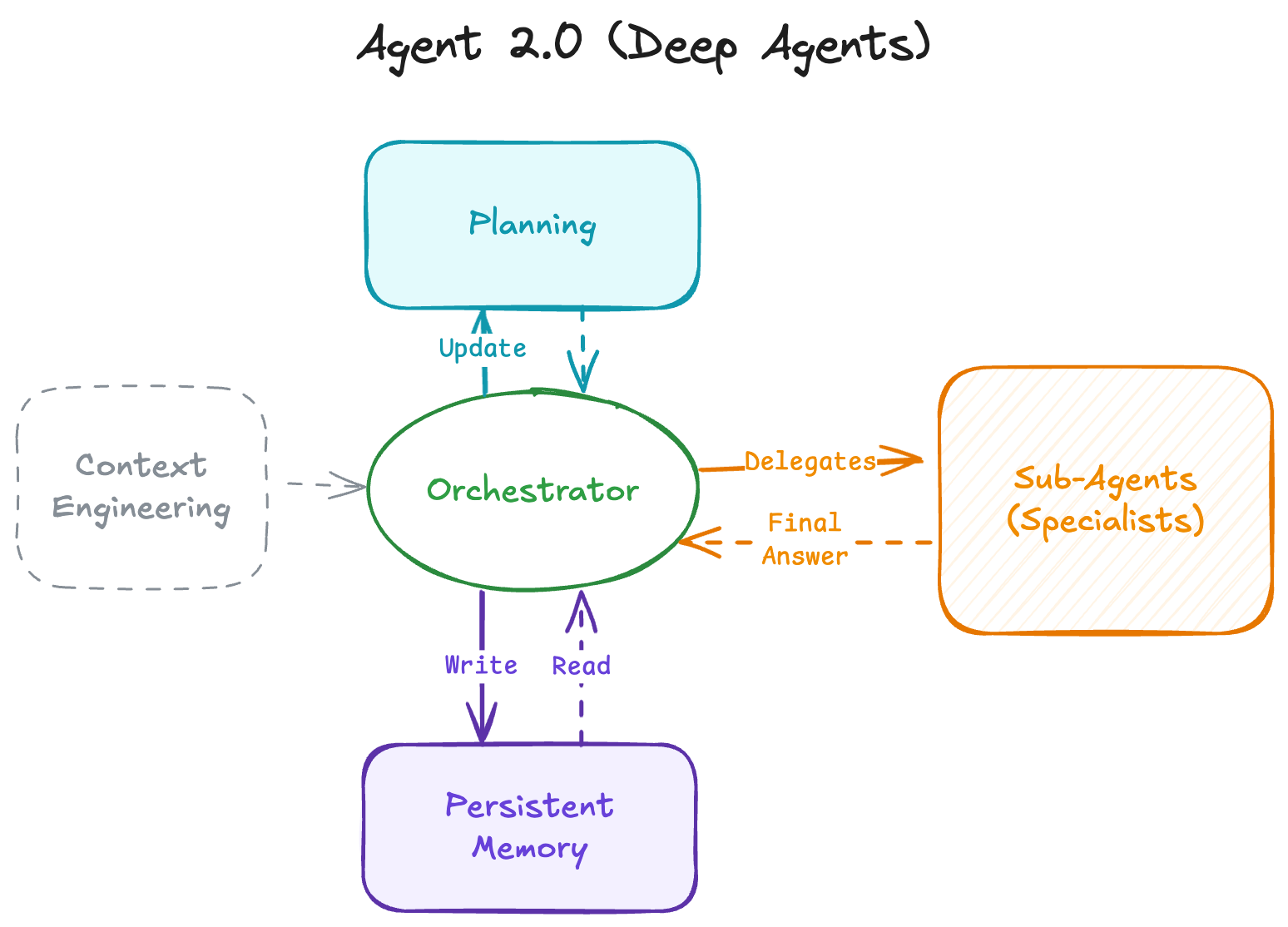

Above: A side-by-side of shallow vs. deep agent architectures—from single-threaded loops to multi-layered planning and collaboration.

The Sweet Trap of Shallow Agents: When Simple Becomes Too Simple

Remember the thrill of your first AI agent build? Toss in a user prompt, let the LLM (large language model) reason “Hey, I need a search tool for this,” invoke the API, observe the output, and loop back. It’s a stroke of genius. This “shallow” setup (Agent 1.0, as we’ll call it) leans entirely on the LLM’s context window for state, like an improv session. Ask “What’s Apple’s stock price, and is it a buy?” and it delivers search hits plus a dash of analysis in seconds. No external state, lightweight, ideal for those 5-to-15-step errands.
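To make the loop concrete, here is a minimal sketch of the reason, act, observe cycle. The `mock_llm` function and the `TOOLS` table are hypothetical stand-ins invented for illustration (no real model or search API is called), so treat this as the shape of the pattern rather than a working agent.

```python
from typing import Callable

def mock_llm(messages: list[dict]) -> dict:
    """Hypothetical stand-in for an LLM call: request one search, then answer."""
    already_searched = any(m["role"] == "tool" for m in messages)
    if not already_searched:
        return {"type": "tool_call", "tool": "search", "input": "Apple stock price"}
    return {"type": "final", "content": "Answer based on: " + messages[-1]["content"]}

# Hypothetical tool registry; a real agent would call a search API here.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"mock result for '{query}'",
}

def run_shallow_agent(prompt: str, max_steps: int = 15) -> str:
    # The message list is the agent's only state: everything lives in the context.
    messages = [{"role": "user", "content": prompt}]
    steps = 0
    while steps < max_steps:
        action = mock_llm(messages)                       # reason
        if action["type"] == "final":
            return action["content"]
        observation = TOOLS[action["tool"]](action["input"])   # act
        messages.append({"role": "tool", "content": observation})  # observe
        steps += 1
    return "Stopped: step limit reached."

answer = run_shallow_agent("What's Apple's stock price, and is it a buy?")
print(answer)
```

Notice that every observation is appended to `messages` and nothing is ever moved out of it, which is exactly what stops scaling on longer tasks.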

But scale it up: “Research 10 competitors, dissect their pricing models, build a comparison spreadsheet, and draft a strategic summary.” It starts strong, but tool outputs—think messy HTML or JSON blobs—quickly flood the window. Instructions get shoved out, the agent drifts from the “strategic summary” North Star, and with no backtracking baked in, it just retries blindly until you intervene.
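A toy illustration of how instructions get shoved out: the sketch below keeps only the most recent messages that fit a fixed budget, which is one naive truncation policy among many. The word-count "tokenizer", the budget of 100, and the message contents are all assumptions chosen to make the effect visible.

```python
def count_tokens(text: str) -> int:
    """Crude estimate: whitespace-separated words (an assumption, not a real tokenizer)."""
    return len(text.split())

def fit_to_window(messages: list[str], budget: int) -> list[str]:
    """Keep the newest messages that fit in the budget, dropping the oldest first."""
    kept: list[str] = []
    used = 0
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > budget:
            break
        kept.append(message)
        used += cost
    return list(reversed(kept))

# The original instructions come first, followed by bulky tool outputs.
history = ["INSTRUCTIONS: draft a strategic summary of 10 competitors"]
for i in range(1, 6):
    history.append(f"TOOL OUTPUT {i}: " + "blob " * 40)

window = fit_to_window(history, budget=100)
instructions_survived = any(m.startswith("INSTRUCTIONS") for m in window)
print(len(window), instructions_survived)
```

With these numbers, only the last two tool outputs fit and the instructions are gone, so the model no longer sees the goal it was given.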

I’ve been there: when I simulated a market survey with a shallow agent, it “forgot” everything by step 20 and pivoted to weather tips. The core flaws? No depth in planning, zero recovery smarts, and a myopic view. Great for sprint tasks, disastrous for 500-step epics. That’s why devs are flocking to deep agents, an architecture built to “go deep” on the tough stuff.

The Four Pillars of Deep Agents: From Chaos to Command

Deep agents shine by decoupling planning from execution and offloading memory externally, letting AI mimic human teams in sync. At the heart: four interlocking pillars, each laser-focused on shallow pitfalls. Let’s break them down like we’re brainstorming over coffee—practical, no fluff.

Pillar 1: Explicit Planning—No More Winging It

Shallow agents “plan” implicitly through chain-of-thought: “I’ll do X, then Y.” Deep agents flip that with dedicated tools for explicit blueprints, like a Markdown to-do list. After every step, the agent reviews the list, tags each item as “pending,” “in progress,” or “done,” and adds notes. Hit a snag? No blind retries: it tweaks the plan and pivots, eyes locked on the big picture.

Think project management 101: You don’t dive in sans roadmap. The payoff? Laser focus amid the noise.
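One way to picture the explicit plan is as a small data structure the agent rewrites after each step. The sketch below is an illustration under assumptions: the `Plan` and `PlanItem` classes, the checkbox markers, and the example tasks are all made up here and are not taken from any particular framework.

```python
from dataclasses import dataclass, field
from typing import Optional

STATUSES = ("pending", "in progress", "done")

@dataclass
class PlanItem:
    title: str
    status: str = "pending"
    notes: list[str] = field(default_factory=list)

@dataclass
class Plan:
    goal: str
    items: list[PlanItem] = field(default_factory=list)

    def update(self, index: int, status: str, note: Optional[str] = None) -> None:
        """Tag an item after a step and optionally record a note."""
        if status not in STATUSES:
            raise ValueError(f"unknown status: {status}")
        item = self.items[index]
        item.status = status
        if note:
            item.notes.append(note)

    def revise(self, index: int, new_title: str, note: str) -> None:
        """Replace a failing step instead of retrying it blindly."""
        self.items[index] = PlanItem(new_title, notes=[note])

    def to_markdown(self) -> str:
        marks = {"pending": "[ ]", "in progress": "[~]", "done": "[x]"}
        lines = [f"# Goal: {self.goal}"]
        for item in self.items:
            lines.append(f"- {marks[item.status]} {item.title}")
            lines.extend(f"  - note: {n}" for n in item.notes)
        return "\n".join(lines)

plan = Plan("Quantum computing report", [
    PlanItem("Search recent papers"),
    PlanItem("Scrape vendor blog"),
    PlanItem("Write summary"),
])
plan.update(0, "done", "found 12 abstracts")
plan.revise(1, "Use vendor press releases instead", "blog returned HTTP 403")
plan.update(1, "in progress")
print(plan.to_markdown())
```

The goal line stays at the top of the rendered list no matter how many steps have run, which is what keeps the big picture in view.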

Pillar 2: Hierarchical Delegation—Let Specialists Shine

No one’s a jack-of-all-trades, so why force one agent to be? Deep agents deploy an “orchestrator-sub-agent” pattern: The lead agent parcels out chunks to tailored subs, like a “researcher” for digging through the literature, a “coder” for data crunching, a “writer” for polishing prose. Each sub runs its own tool loop in a clean context, then hands back only the distilled goods.

It’s your dream team: You orchestrate, experts execute. Subs scale complexity without the “do-everything-in-one-prompt” headache.
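The key property of this pattern is context isolation: raw material stays with the sub-agent, and only a short summary reaches the orchestrator. Here is a rough sketch with no LLM involved; the `SubAgent` and `Orchestrator` classes and the lambda "workers" are hypothetical placeholders for real tool loops.

```python
from dataclasses import dataclass, field
from typing import Callable

@dataclass
class SubAgent:
    name: str
    work: Callable[[str], list[str]]   # hypothetical worker returning raw outputs
    context: list[str] = field(default_factory=list)

    def run(self, task: str) -> str:
        self.context = [f"task: {task}"]       # fresh context for every task
        self.context.extend(self.work(task))   # raw outputs never leave this list
        raw_count = len(self.context) - 1
        return f"{self.name}: {raw_count} items collected for '{task}'"  # distilled result

@dataclass
class Orchestrator:
    subs: dict[str, SubAgent]
    context: list[str] = field(default_factory=list)

    def delegate(self, role: str, task: str) -> str:
        summary = self.subs[role].run(task)
        self.context.append(summary)           # only the summary is kept
        return summary

researcher = SubAgent("researcher", lambda t: [f"raw page {i} about {t}" for i in range(50)])
writer = SubAgent("writer", lambda t: [f"draft paragraph for {t}"])
lead = Orchestrator({"researcher": researcher, "writer": writer})

lead.delegate("researcher", "quantum error correction")
lead.delegate("writer", "summary")
print(lead.context)
print(len(researcher.context))
```

The researcher handled 50 raw pages, yet the orchestrator's context grew by a single line per delegation.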

Pillar 3: Persistent Memory—Remember Smart, Not Everything

Context overflow kills shallow runs. Deep agents sidestep it with external “vaults” (filesystems or vector DBs) as the single source of truth. Tools like Claude Code or Manus give the agent read/write access to such a store: agents stash intermediates (code drafts, raw data), and later steps look up paths or keys to retrieve just what’s needed.

The magic shift: From cramming “everything” in-head to “knowing where to look.” No more history bloat—pure efficiency.
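As a sketch of “knowing where to look,” the snippet below writes a bulky intermediate result to disk and keeps only its path in the agent's context. The `FileMemory` class, the temp-directory workspace, and the file names are assumptions for illustration; real frameworks expose their own file tools.

```python
import json
import tempfile
from pathlib import Path

class FileMemory:
    """Tiny file-backed store: write intermediates once, read back only what is needed."""

    def __init__(self, root: Path) -> None:
        self.root = root
        self.root.mkdir(parents=True, exist_ok=True)

    def write(self, relative_path: str, data: object) -> str:
        path = self.root / relative_path
        path.parent.mkdir(parents=True, exist_ok=True)
        path.write_text(json.dumps(data), encoding="utf-8")
        return relative_path   # only this short reference goes into the context

    def read(self, relative_path: str) -> object:
        return json.loads((self.root / relative_path).read_text(encoding="utf-8"))

    def list_paths(self) -> list[str]:
        files = (p for p in self.root.rglob("*") if p.is_file())
        return sorted(p.relative_to(self.root).as_posix() for p in files)

workspace = Path(tempfile.mkdtemp())   # throwaway directory for the demo
memory = FileMemory(workspace)

# A large raw result is stored on disk instead of in the prompt history.
ref = memory.write("research/abstracts.json", [{"title": "Paper A", "text": "x" * 5000}])
context = [f"Saved raw abstracts to {ref}"]

# A later step pulls out just the field it needs.
titles = [entry["title"] for entry in memory.read(ref)]
print(context, memory.list_paths(), titles)
```

The context entry is a few dozen characters long, while the 5,000-character payload stays on disk until something actually asks for it.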

Pillar 4: Extreme Context Engineering—Prompts as Precision Art

Smarter models crave better prompts, not fewer. Deep agents wield thousand-token system instructions: rules for when to pause and plan, when to spawn subs versus work solo, tool usage examples, file naming norms, even human-in-the-loop protocols. Ditch “You’re a helpful AI” and opt for “On failure, backtrack to plan step 3 and log to /logs/error.md.”

It’s the AI ops manual: Detailed, prescriptive, behavior-shaping.
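One lightweight way to keep such an ops manual maintainable is to assemble it from named sections rather than editing one giant string. The section names and most of the rules below are invented examples (only the failure rule is quoted from this post), so adapt them to your own agent.

```python
def build_system_prompt(sections: dict[str, list[str]]) -> str:
    """Render named rule sections into one system prompt, in insertion order."""
    parts: list[str] = []
    for heading, rules in sections.items():
        parts.append(f"## {heading}")
        parts.extend(f"- {rule}" for rule in rules)
        parts.append("")   # blank line between sections
    return "\n".join(parts).strip()

# Hypothetical rule set for a research agent.
SECTIONS = {
    "Planning": [
        "Before any tool call, write or update the plan.",
        "On failure, backtrack to plan step 3 and log to /logs/error.md.",
    ],
    "Delegation": [
        "Spawn a sub-agent for any task that needs more than three tool calls.",
    ],
    "Files": [
        "Write final outputs to /output/summary.md.",
    ],
}

prompt = build_system_prompt(SECTIONS)
print(prompt)
```

Keeping rules in a structure like this also makes it easy to diff prompt versions or switch sections on and off per task.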

Together, these pillars transform reactive loops into proactive powerhouses—master context, master complexity.

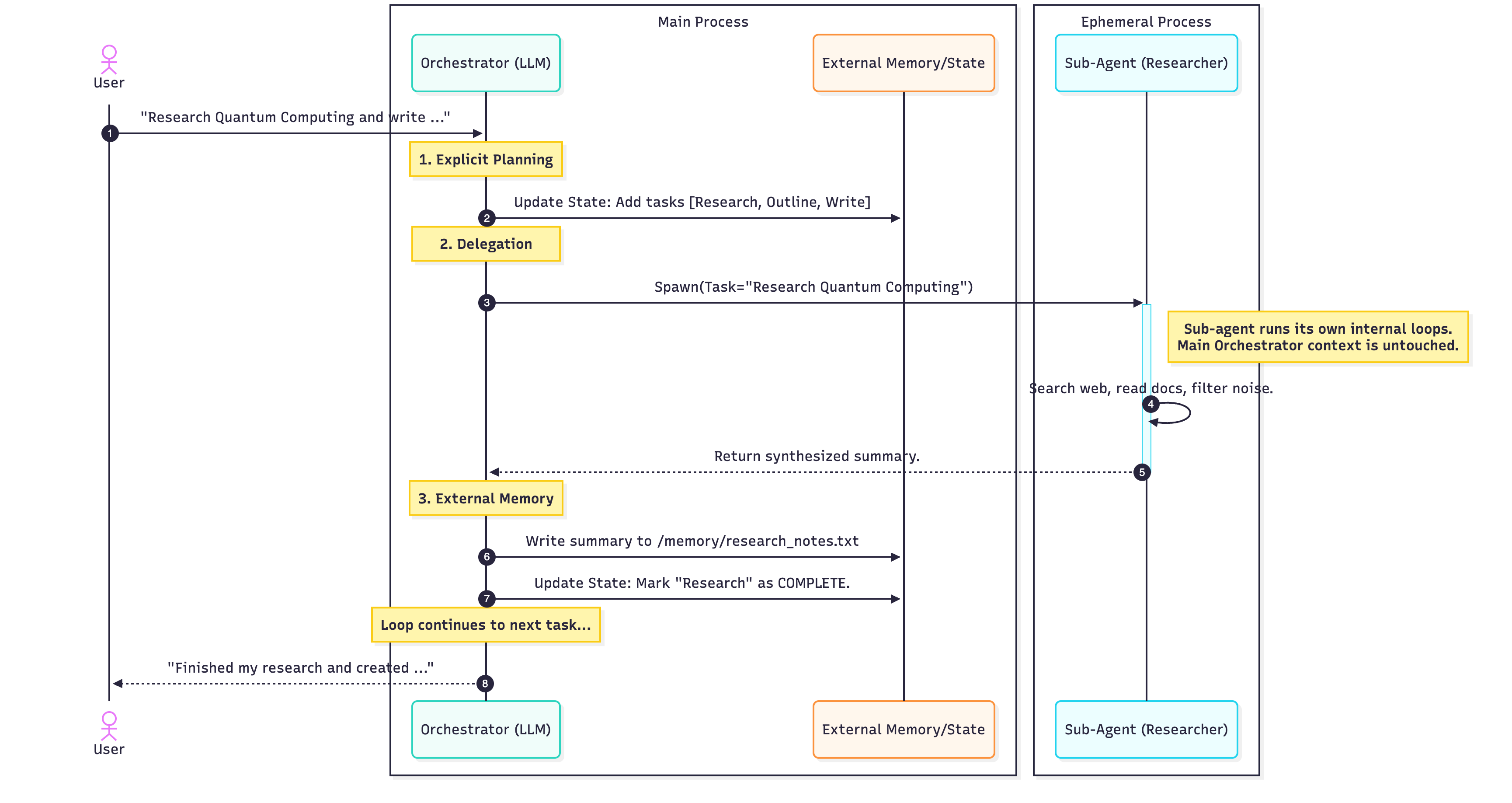

Above: Sequence diagram for a deep agent tackling “Research quantum computing and write a summary”—planning, delegation, memory handoffs, seamless flow.

Hands-On Guide: Build Your First Deep Agent with LangChain

Theory’s cool, but let’s get building. LangChain’s deepagents package makes it a breeze, baking in the pillars: Rich prompts, planning tools, sub-agent hooks, virtual filesystems. Drawing from official docs, we’ll craft a “deep researcher” for quantum trends—end-to-end in minutes.

Step 1: Set Up Your Environment

Python 3.11+ ready? Install with one command:

pip install deepagents langchain langgraph

Boom—core deps locked in, no tweaks needed.

Step 2: Craft Custom Tools and Sub-Agents

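Import the basics. Note that the `DeepAgent` class and its keyword arguments in this walkthrough are a simplified illustration; the published deepagents package is built around a `create_deep_agent` factory function, so check the current docs for the exact names before running this: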

    from deepagents import DeepAgent
    from langchain.tools import Tool
    import os

Whip up a search tool (a mock here; swap in a real search API and your key for actual use):

    search_tool = Tool(
        name="search",
        description="Search the web for information.",
        # Mock implementation: returns a canned string instead of calling a search API.
        func=lambda q: f"Mock search result for {q}: Quantum computing advances in 2025 include error-corrected qubits.",
    )

Sub-agent example: A “researcher” tuned for fact-gathering:

    researcher = DeepAgent(
        tools=[search_tool],
        system_prompt="You are a researcher. Focus on gathering facts, summarize key points.",
    )

Step 3: Assemble the Main Agent

Enable planning and files:

    main_agent = DeepAgent(
        tools=[researcher.as_tool()],  # Subs as tools
        planning_tool=True,            # Explicit plans on
        file_system=True,              # Persistent memory
        system_prompt="""You are a deep researcher. Plan tasks explicitly, delegate to sub-agents, write outputs to /output/summary.md.
    Steps: 1. Plan in Markdown. 2. Delegate research. 3. Synthesize and save.""",
    )

Run it:

    result = main_agent.run("Research quantum computing trends and write a summary.")
    print(result)  # Path or final content

It spins a plan (e.g., “1. Search trends. 2. Delegate. 3. Compile.”), delegates, archives. Full code on LangChain’s GitHub.

Step 4: Test and Iterate

Feed it a gnarly prompt—watch it dodge shallow snags. Level up with LangGraph for flow viz. Under 30 minutes to a cross-day task handler.

FAQ: Answering Your Deep Agent Questions

Q: How much more does a deep agent cost compared to shallow?

A: Shallow agents carry near-zero overhead. Deep agents burn more tokens on sub-agent and memory calls, roughly 20-50% more by this post’s estimate; for complex jobs, that’s a bargain given the efficiency gains. Benchmark on small tasks first.

Q: How do I customize sub-agents? Any LangChain alternatives?

A: deepagents is plug-and-play—tweak prompts or tools freely. Alternatives like Manus (code-focused) or Claude Code (file collab) shine in niches; docs cover migrations.

Q: Can deep agents still loop infinitely?

A: They can, but it’s far less likely. Planning tools support backtracking, and prompt-level “stop rules” cap runaway behavior, so they’re way safer. Tail the logs so you can spot trouble and tweak early.

Q: Beginner-friendly?

A: Absolutely—install, run the demo, you’re off. Sub interactions need some LLM debugging chops, though.
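On the infinite-loop question above: prompt-level stop rules can be backed by a hard guard in code. The sketch below is one possible approach, assuming a step budget and a cap on identical tool calls; the `LoopGuard` class and its thresholds are hypothetical and not part of any library mentioned here.

```python
from collections import Counter
from typing import Optional

class LoopGuard:
    """Stops a run when it exceeds a step budget or repeats the same call too often."""

    def __init__(self, max_steps: int = 50, max_repeats: int = 3) -> None:
        self.max_steps = max_steps
        self.max_repeats = max_repeats
        self.steps = 0
        self.seen = Counter()   # counts (tool, input) pairs

    def check(self, tool: str, tool_input: str) -> Optional[str]:
        """Return a stop reason, or None if the agent may continue."""
        self.steps += 1
        self.seen[(tool, tool_input)] += 1
        if self.steps > self.max_steps:
            return "step budget exhausted"
        if self.seen[(tool, tool_input)] > self.max_repeats:
            return f"repeated call: {tool}({tool_input!r})"
        return None

guard = LoopGuard(max_steps=10, max_repeats=2)
reasons = [guard.check("search", "quantum trends") for _ in range(3)]
print(reasons)
```

Calling `check` before each tool invocation gives the agent loop a place to bail out, or to trigger a plan revision, when the third identical search comes through.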

Wrapping Up: The Dawn of Deeper AI Agents

From the instant gratification of shallow loops to the strategic heft of deep agents, this evolution underscores a truth: AI’s no sorcery; it’s engineering. Explicit plans, sub-agent delegation, persistent memory, and honed prompts don’t just enable hour-long tasks; they pave the way for day-spanning automations. Envision tomorrow: agents iterating on codebases, running market simulations, and working in human-AI hybrid teams.

Your move? Grab a nagging task, fork a LangChain repo, prototype away. Or ponder: With AI this “deep,” where does human ingenuity draw the line? Drop your agent tales in the comments—next up, maybe sub-agent best practices.